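The article explores non-developers generating code with AI (vibe coding) and what that means for security governance. The trend lowers the technical barrier and lets more people build solutions quickly, but it also brings security risks and governance challenges. The article argues for shifting from passive blocking to proactive enablement, embedding security guidance into the development process, and stresses the importance of culture and governance models. 2026-1-20 17:26:52 Author: www.guidepointsecurity.com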

Vibe code is what results when someone who isn’t a developer needs to build something that doesn’t exist yet, and uses AI to make it happen. Software development used to be gatekept by the skills required to do it. Now, by describing a problem and what they want, anyone can guide AI tools to generate, refine, and debug code. It shifts the focus from writing code to ideas and experimentation, making building faster and more accessible. It also creates new problems for security teams.

This two-part series explores vibe coding and the chance security teams have, right now, to shape its future in a way that lets people build without creating security nightmares. In part one, we’ll look at where vibe coding fits in relation to governance, and at a cultural model for keeping things secure even when there’s no developer at the helm.

Upfront Acknowledgment

Let me be clear about what I’m not talking about.

If you’re running a proper Application Security (AppSec) program (assessments in your pipeline, code review gates, DevSecOps maturity model, the whole thing), that’s not this conversation. You’re the inner ring. You have governance, or the start of it. Keep doing what you’re doing.

I’m also not talking about your citizen developer program. That’s the middle ring. You’ve got platform constraints, approved connectors, and some governance, risk management, and compliance in there, too.

I’m talking about the long tail that’s starting to emerge. The outer ring. The part that’s coming, whether we’re ready or not.

Where Vibe Code Sits in the Three Rings of Governance

When looking at this “three-ring” model, the inner ring has the governance machinery. The middle ring has platform constraints.

The outer ring has nothing. And that’s where the growth is happening.

And honestly? I find this exciting.

For the first time, people who aren’t developers can build things that solve real problems. And for the first time, security has a chance to be part of that process from the very beginning. Not as a blocker, but as a partner.

That’s new. That’s worth paying attention to.

What Is a Vibe Coder?

The term comes from Andrej Karpathy (February 2025):

“There’s a new kind of coding I call ‘vibe coding’, where you fully give in to the vibes, embrace exponentials and forget that the code even exists… It’s not really coding — I just see stuff, say stuff, run stuff, and copy-paste stuff, and it mostly works.”

In an enterprise context, this isn’t a developer who’s gotten lazy. It’s someone who was never a developer, who is now building things because AI made it possible.

Who is Writing Vibe Code?

They’re in your organization right now:

- The security analyst who built a quick script to check AWS IAM roles

- The business analyst who automated a reporting workflow

- The HR person who created a tool to parse resumes

- The finance team member who built a reconciliation checker

- The marketing analyst who created a customer data aggregator

They’re not trying to become developers. They have a problem, they have access to AI, and they’re solving it.

The “Accidental Developer” Reality

Another way to put it: accidental developers.

People who find themselves building software without ever intending to enter that world. They didn’t learn to code. They learned to describe what they wanted, and an AI built it.

Vibe Code Introduces a Governance Gap

From a security perspective, here’s what the pipelines look like:

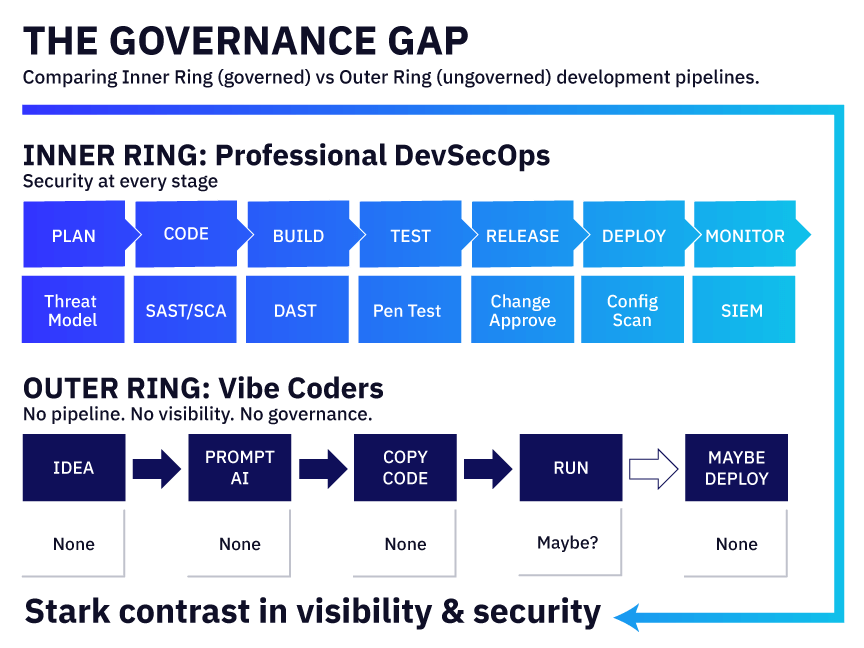

Inner Ring (Professional DevSecOps):

- Plan → Threat model

- Code → Static analysis, dependency scanning

- Build → Security testing

- Test → Penetration testing

- Release → Change approval

- Deploy → Configuration scanning

- Monitor → Security logging

Outer Ring (Vibe Coders):

- Idea → Nothing

- Prompt AI → Maybe some security

- Copy code → Nothing

- Run → Maybe some testing

- Deploy → Nothing

Security researchers call this “dark development”: unversioned, untested, invisible to IT until something breaks.

Why Traditional Governance Doesn’t Reach Vibe Coders

| Inner Ring Has… | Outer Ring Has… |
| --- | --- |
| CI/CD pipeline to insert controls | No pipeline |
| Code repository with visibility | Local files, maybe a random folder |
| Security tooling integration | No tooling |
| Review gates before deployment | Self-service deployment |
| Training requirements | The internet and vibes |

You can’t shift left when there’s no lane (or road) to shift in.

The Scale of What’s Coming

The numbers suggest vibe code isn’t a fringe phenomenon:

| Segment | Estimated Population |
| --- | --- |
| Professional developers using AI assistance | 20-25 million |
| Citizen developers on formal low-code platforms | 15-20 million |
| Non-developers regularly building with AI | 5-15 million (and growing fast) |
| Non-developers experimenting with AI coding | 50-100+ million |

Gartner predicts that by 2026, non-professionals will build 80% of technology products.

Whether that number is exactly right doesn’t matter. The trend is obvious.

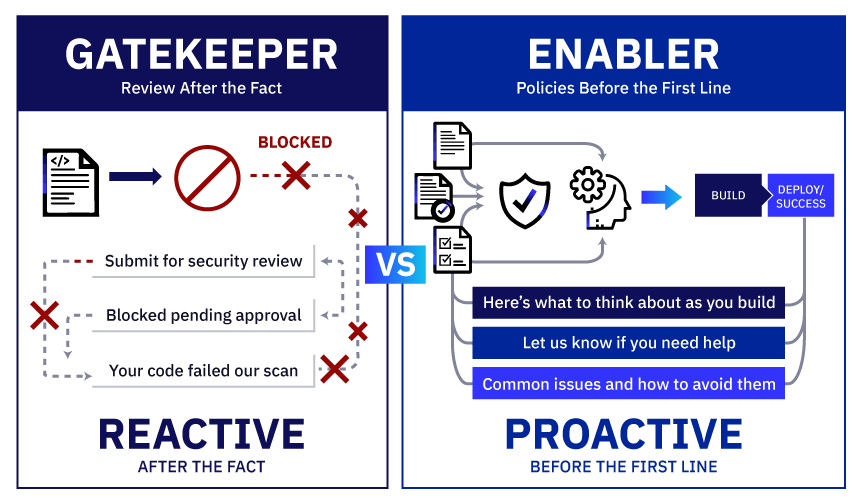

The Gatekeeper Problem

If you’re a security organization operating as a gatekeeper in this context, you have a problem:

There’s no gate.

No PR (pull request) to review, no deployment to approve. No pipeline to insert your scanner into, and no visibility into what’s being built.

By the time you find out about it, the code is already running, possibly for months. It could be processing customer data, but you have no way of knowing. Maybe it has hardcoded credentials. Or it might be calling APIs you didn’t know about.

If this sounds familiar, it should. Security teams have been fighting shadow IT for decades. Shadow AI is the same battle, but it’s faster to deploy, harder to find, and potentially capable of processing sensitive data from day one.

The gatekeeper model assumes a gate exists. For the outer ring, it doesn’t.

AI Lockdown Is Hard

Before we talk about enabling vibe code, we need to acknowledge something uncomfortable: most organizations that claim to control AI usage simply aren’t.

Many security leaders believe they have this handled. They’ve blocked ChatGPT, written policies, and sent the memo.

The data tells a different story:

- IT can’t see 89% of enterprise AI usage (LayerX, 2025)

- Only 17% of organizations have technical controls that actually enforce their AI policies. The rest rely on written policies alone. (Kiteworks, 2025)

- 41% of employees find workarounds when AI tools are blocked (UpGuard, 2025)

- 46% say they won’t stop using AI even if banned (Software AG, 2025)

- 97% of AI-related breaches occur in organizations lacking proper access controls (IBM, 2025)

These statistics show that many organizational AI “blocks” are failing. For those organizations, the policies are theater. The activity is happening anyway.

This isn’t to say blocking is wrong. Technical controls matter. But they’re not sufficient on their own, and, for many organizations, the budget, tooling, or political will to implement comprehensive controls simply isn’t there yet.

So what do you do in the meantime?

If You Can’t Block It All, Enable Instead

Let’s say you’ve done what you can. You’ve blocked the obvious tools, written the policy, and trained the staff. But you know it’s not airtight. You don’t have the budget for enterprise-wide data loss prevention (DLP). You don’t have executive buy-in for a full AI governance program. You’re doing your best with what you have.

The question becomes: what else can you do?

Some security organizations are adding a second layer to their approach. Not replacing technical controls, but supplementing them. If vibe coders are going to build things with AI assistants anyway, make sure your security guidance is part of the conversation from the start. Not buried in a SharePoint site no one visits, but in places the AI can actually find and reference.

In addition to: Blocking and reviewing after the fact (gatekeeper)

They’re also: Publishing policies the AI can read before the first line of code (enabler)

Two Cultures

The way your organization responds to vibe code will shape the culture that emerges. Think of it as a spectrum, with these two cultures as reference points:

Culture A: Compliance is invisible until you hit a wall.

In this culture, security requirements aren’t findable. Policies exist, but they’re buried in SharePoint. Someone wrote the guidance for auditors, not builders. People follow the path of least resistance because no one showed them a better path. They’d happily comply if they knew what compliance meant and where to find it.

When these folks eventually surface their work (because they need budget, or want to scale, or something breaks), they hit friction. They didn’t know there was a review process. They didn’t know about the requirements. Now they’re frustrated, security is frustrated, and the relationship starts off adversarial.

Culture B: Compliance is baked into the building process.

In this culture, security guidance is clear, accessible, and findable. You write it in plain language. It lives where the AI assistants can see it. People who want to do the right thing can find the right thing without hunting for it.

When these folks surface their work, they’re already partway there. They thought ahead. They pointed their AI at the policies (or better yet, the AI comes pre-trained on those policies). Maybe they even documented what they built (or the AI did it for them). The conversation starts collaboratively, with at least some shared understanding of the importance of security.

The difference isn’t the people. It’s the environment that security creates.

Passive enablement means putting your policies where they can be found. Being present in the context where building happens. Making it easy to do the right thing.

This doesn’t replace blocking. It complements it. And for the activity that gets past your controls, it’s the difference between cleanup and collaboration.

Successfully Enable Your Teams to Vibe Code – Securely

1. Publish policies in plain text, where AI assistants can find them

- In the wiki

- In repo README files

- In .coderules or similar AI context files (for example, `.cursorrules` for Cursor or `.github/copilot-instructions.md` for GitHub Copilot)

- Anywhere the vibe coder’s AI might look (bonus points if they’re using an AI that’s pre-trained to find your policies)
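To make “findable by the AI” concrete, here is a minimal Python sketch of what it can mean mechanically: a helper that looks for plain-text policy files in a project folder and places them ahead of the user’s request in the model’s context. It is an illustration under stated assumptions, not how any particular assistant works. The file names (`.coderules`, `README.md`) come from the bullets above, while `load_policies`, `build_prompt`, and the prompt layout are hypothetical.

```python
import tempfile
from pathlib import Path

# Files this hypothetical helper checks, in priority order. The names come from
# the list above; real assistants each use their own context-file conventions.
POLICY_FILES = (".coderules", "README.md")


def load_policies(project_dir: Path) -> str:
    """Concatenate any policy files found in project_dir, in POLICY_FILES order."""
    sections = []
    for name in POLICY_FILES:
        path = project_dir / name
        if path.is_file():
            text = path.read_text(encoding="utf-8").strip()
            sections.append(f"## {name}\n{text}")
    return "\n\n".join(sections)


def build_prompt(project_dir: Path, request: str) -> str:
    """Put published policies in front of the user's request (hypothetical layout)."""
    policies = load_policies(project_dir)
    if not policies:
        # Nothing published: the model sees only the request, with no guidance.
        return request
    return f"Follow these organizational policies:\n\n{policies}\n\nRequest:\n{request}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp)
        (project / ".coderules").write_text(
            "Don't hardcode API keys. Use environment variables.\n", encoding="utf-8"
        )
        print(build_prompt(project, "Write a script that pulls our weekly sales report."))
```

The design point worth noticing is the fallback branch: when no policy file exists, the assistant works from the request alone, which is exactly the outer-ring situation this article describes.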

2. Write for consumption, not compliance

Rather than a vague platitude such as “Store credentials in accordance with the organization’s secrets management policy,” be prescriptive:

“Don’t hardcode API keys. Use environment variables.” (The AI will know what to do, even if the vibe coder does not.)
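For readers who want to see what that instruction produces, here is a minimal Python sketch of the pattern an assistant would typically generate from it. The variable name `REPORTING_API_KEY` and the `get_api_key` helper are hypothetical; the point is only that the secret lives in the environment rather than in the file.

```python
import os

# Avoid: a hardcoded key travels with every copy of the script.
# API_KEY = "paste-real-key-here"


def get_api_key(var_name: str = "REPORTING_API_KEY") -> str:
    """Read an API key from the environment instead of the source file.

    REPORTING_API_KEY is a hypothetical name chosen for this example.
    """
    key = os.environ.get(var_name)
    if not key:
        # Fail loudly rather than falling back to a baked-in default.
        raise RuntimeError(f"Set the {var_name} environment variable before running.")
    return key


if __name__ == "__main__":
    try:
        get_api_key()
        print("API key found in the environment.")
    except RuntimeError as err:
        print(err)
```

On Linux or macOS the key can be supplied by the shell at run time, for example `REPORTING_API_KEY=your-key python report.py` (where `report.py` is a placeholder name), so it never has to appear in the code.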

3. Think of yourself as the invisible co-pilot

- You’re enabling their deployment, not blocking it

- You’re in the proverbial room when they first describe the problem to their AI assistant

- Your policies are part of the AI’s context from the start

4. Be findable, enable them

- Go to them rather than waiting for them to come to you (they won’t)

- Repeat and remind. Don’t assume they’ll look for or read your Confluence page (they won’t)

- Make your guidance findable by the tools they’re actually using

What’s Next?

In Part 2 of this series, “A Competitive Dynamic,” we’ll explore the benefits of enabling (rather than restricting) security-powered vibe coding, along with a detailed analysis of where and how security feeds into the process.

In the meantime, check out our whitepaper, “Secure the Future: A Framework for Resilient AI Adoption at Scale.” It delivers a security-first approach to help your organization adopt AI responsibly and at scale. In this paper, you will learn:

- The AI “unknown unknowns” and how they feed the expanding risk surface

- Why traditional defenses aren’t built for what’s coming

- What in AI security is hype vs. reality and how to know the risks

- A framework for AI at scale, including managing the knowns and unknowns

- The importance of leveraging AI security experts as part of your AI strategy

- Key indicators of AI security success

Victor Wieczorek

VP, AppSec and Threat & Attack Simulation,

GuidePoint Security

Victor Wieczorek drives offensive security innovation at GuidePoint Security, leading three professional services practices alongside the operational teams behind that work. This creates a feedback loop that makes delivery better for everyone. His practices (Application Security, Threat & Attack Simulation, and Operational Technology) cover the full offensive spectrum: secure code review, threat modeling, and DevSecOps programs; red and purple team assessments, penetration testing, breach simulation, and social engineering; OT risk assessments, framework alignment, and critical infrastructure security.

Before GuidePoint, Wieczorek designed secure architectures for federal agencies at MITRE and led security assessments at Protiviti. He holds OSCE and OSCP, and built depth in governance and compliance (previously held CISSP, CISA, PCI QSA) to bridge offensive work with risk communication. His teams operate with a clear philosophy: enable clients to be self-sufficient. That means detailed reproduction steps with real commands, no proprietary tooling that obscures findings, and deliverables designed so organizations can act without dependency. Under his leadership, GuidePoint achieved CREST accreditation and he was named to CRN's 2023 Next-Gen Solution Provider Leaders list.

His current focus reflects where the industry is heading. As AI agents move into production, both as threats and as security tools, Wieczorek has been thinking through what governance looks like for autonomous systems. His view: the more capable the technology, the more essential human accountability becomes. He speaks on this through various webinar series, industry podcasts, and annual conferences.
