Active Directory remains the core system most organizations use to manage user identities, but generative AI is making password attacks more efficient. Traditional password policies are easily defeated by AI-assisted guessing, so organizations need long, random passwords and detection of passwords that have already been breached. Specops products can help organizations improve their security.

2026-1-6 15:0:44 Author: www.bleepingcomputer.com

Active Directory is still how most organizations manage user identities, making it a frequent focus during attacks. What’s changed isn’t the target, but how much faster and more effective these attacks have become.

Generative AI has made password attacks cheaper and more efficient, turning what once required specialized skills and significant computing power into something almost anyone can do.

AI-powered password attacks are already in use

Tools like PassGAN represent a new generation of password crackers that don't rely on static wordlists or brute-force randomness. Through adversarial training, the system learns patterns in how people actually create passwords and improves at predicting them with each iteration.

The results are sobering. Recent research found that PassGAN was able to crack 51% of common passwords in under a minute and 81% within a month. Even more concerning is how quickly these models are evolving.

When trained on organization-specific breach data, social media content, or publicly available company websites, they can generate highly targeted password candidates that reflect actual employee behavior.

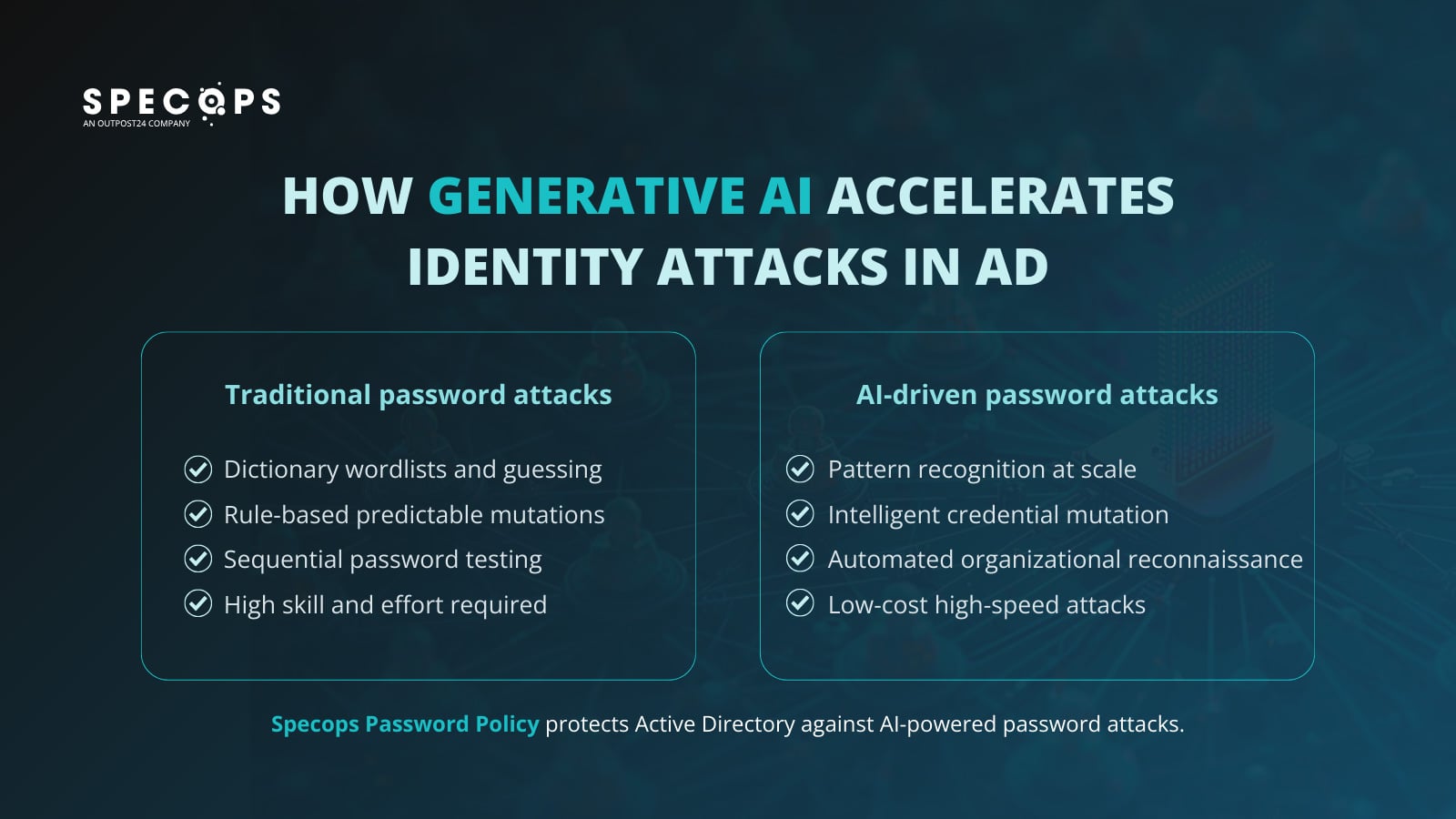

How generative AI changes password attack techniques

Traditional password attacks followed predictable patterns. Attackers used dictionary wordlists, then applied rule-based mutations (e.g., swapping "a" for "@", adding "123" to the end), and hoped for matches. It was a resource-intensive and relatively slow process.
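
To make the rule-based approach concrete, the following minimal sketch expands one dictionary word with the kinds of mutations named above. The substitution table, the suffix list, and the `mutate` helper are illustrative assumptions, not the rule set of any particular tool.

```python
from itertools import product

# Hypothetical rule set mirroring the examples in the text.
SUBSTITUTIONS = {"a": "@", "o": "0", "e": "3"}
SUFFIXES = ["", "123", "!", "2024"]


def mutate(word: str) -> set[str]:
    """Return rule-based variants of a single dictionary word."""
    # Each character may stay as-is or be replaced by its substitution.
    options = [
        (ch, SUBSTITUTIONS[ch]) if ch in SUBSTITUTIONS else (ch,)
        for ch in word
    ]
    bases = {"".join(combo) for combo in product(*options)}
    # Add a capitalized first letter, a common user habit.
    bases |= {base[:1].upper() + base[1:] for base in bases}
    # Append each suffix to every base form.
    return {base + suffix for base in bases for suffix in SUFFIXES}


variants = mutate("password")
print(len(variants), "candidates from one word")
```

The candidate set stays small and fully predictable, which is why passwords built this way offer little protection once the base word is on a list.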

However, AI-powered attacks are different:

- Pattern recognition at scale: Machine learning models identify subtle patterns in how people construct passwords, including common substitutions, keyboard patterns, and how they integrate personal information, and generate guesses that mirror these behaviors. Instead of testing millions of random combinations, AI focuses an attacker's computational power on the most probable candidates.

- Intelligent credential mutation: When attackers obtain breached credentials from third-party services, generative AI can quickly test variations specific to your environment. For example, if "Summer2024!" worked on a personal account, the model can intelligently test "Winter2025!", "Spring2025!", and other likely variations rather than random permutations.

- Automated reconnaissance: Large language models can analyze publicly available information about your organization, such as press releases, LinkedIn profiles, and product names, and incorporate that context into targeted phishing campaigns and password spray attacks. What used to take human analysts hours can now happen much more quickly.

- Lower barrier to entry: Pre-trained models and cloud computing infrastructure mean attackers no longer require deep technical expertise or expensive hardware.
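
From the defender's side, the "Summer2024!" style of password described in the list above can be flagged before it is ever set. The sketch below is a minimal example that assumes English season and month names followed by a two- or four-digit year; the `SEASONAL` pattern and the `is_seasonal_pattern` helper are hypothetical names introduced for illustration.

```python
import re

# Assumption: English season and month names; extend for other languages.
_NAMES = (
    "spring|summer|autumn|fall|winter|"
    "january|february|march|april|may|june|july|august|"
    "september|october|november|december"
)

# Name, then a 4- or 2-digit year, then optional trailing symbols.
SEASONAL = re.compile(
    rf"(?:{_NAMES})(?:\d{{4}}|\d{{2}})[^a-z0-9]*",
    re.IGNORECASE,
)


def is_seasonal_pattern(password: str) -> bool:
    """Return True if the whole password is a season or month plus a year."""
    return SEASONAL.fullmatch(password) is not None


for candidate in ["Summer2024!", "Winter25", "river-lantern-quartz"]:
    print(candidate, is_seasonal_pattern(candidate))
```

A check like this only covers one family of patterns, so it would complement, not replace, a breached-password lookup.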

Increased access to high-performance cracking hardware

The AI boom has created an unintended consequence: wider availability of powerful consumer hardware well suited for password cracking. Organizations that train machine learning models often rent out their GPU clusters during downtime.

Now, for approximately $5 per hour, an attacker can rent eight RTX 5090 GPUs that crack bcrypt hashes roughly 65% faster than previous-generation cards.

Even with strong hashing algorithms and high cost factors, the available computational power allows attackers to test far more password candidates than was feasible just two years ago.

When combined with AI models that generate more effective guesses, the time required to crack weak-to-moderate passwords has decreased.
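
The relationship between guessing speed and cracking time is simple arithmetic, sketched below. The guess rate passed in is a placeholder the reader must supply, since the article gives no absolute hash rates; only the 1.65 factor comes from the "roughly 65% faster" figure above. The function name `average_crack_seconds` is an assumption for illustration.

```python
def average_crack_seconds(
    alphabet_size: int, length: int, guesses_per_second: float
) -> float:
    """Expected time to find one password by exhaustive search.

    On average the target is found after half the keyspace is tried.
    """
    keyspace = alphabet_size ** length
    return keyspace / 2 / guesses_per_second


# Placeholder rate: NOT a measured figure for any real GPU or hash.
baseline_rate = 1_000.0
faster_rate = baseline_rate * 1.65  # "roughly 65% faster" from the text

old = average_crack_seconds(26, 8, baseline_rate)
new = average_crack_seconds(26, 8, faster_rate)
print(f"time shrinks by a factor of {old / new:.2f}")
```

Exhaustive search is the attacker's worst case. Guess ordering driven by learned patterns reduces the number of attempts needed, so the hardware gain and the modeling gain multiply.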

Why traditional Active Directory password controls aren't enough

Most Active Directory password policies were designed for a pre-AI threat landscape. Standard complexity requirements (upper, lower, number, symbol) produce predictable patterns that AI models are more likely to exploit.
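
A minimal sketch of the four-class rule described above shows why it is a weak filter. This is a simplification for illustration, not the exact rule Active Directory applies, and `meets_complexity` is a hypothetical helper.

```python
import string


def meets_complexity(password: str, min_length: int = 8) -> bool:
    """Simplified check: upper, lower, digit, and symbol all present."""
    return (
        len(password) >= min_length
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)
    )


# A highly guessable password passes; a long random passphrase
# without digits or uppercase letters fails.
print(meets_complexity("Password123!"))
print(meets_complexity("river lantern quartz meadow"))
```

The rule measures character classes, not predictability, so it accepts exactly the patterns that guessing models learn first.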

"Password123!" meets complexity rules but follows a pattern that generative models can instantly recognize.

Mandatory 90-day password rotation, once considered best practice, isn’t the protection it once was. Users forced to change passwords often default to predictable patterns: incrementing numbers, seasonal references, or minor variations of previous passwords.

AI models trained on breach data recognize these patterns and test them during credential stuffing and password spraying attacks.
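
One way to catch minor variations at change time is a similarity comparison between the old and new password, sketched below with Python's standard `difflib`. This assumes both plaintext values are available at the moment of the change (as they are in a typical change form); the `is_minor_variation` helper and the 0.8 threshold are illustrative assumptions.

```python
from difflib import SequenceMatcher


def is_minor_variation(old: str, new: str, threshold: float = 0.8) -> bool:
    """Return True if the new password is too similar to the old one."""
    # ratio() is 2 * matches / total length, in the range 0.0 to 1.0.
    ratio = SequenceMatcher(None, old.lower(), new.lower()).ratio()
    return ratio >= threshold


print(is_minor_variation("Summer2024!", "Summer2025!"))
print(is_minor_variation("Summer2024!", "correct-horse-battery"))
```

The threshold is a tuning choice: set too low, it rejects unrelated passwords that happen to share characters; set too high, it misses increments such as a changed year.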

Basic multi-factor authentication (MFA) helps but doesn't address the underlying risk of compromised passwords. If an attacker gains access to a compromised password and can bypass MFA through social engineering, session hijacking, or MFA fatigue attacks, Active Directory may still be exposed.

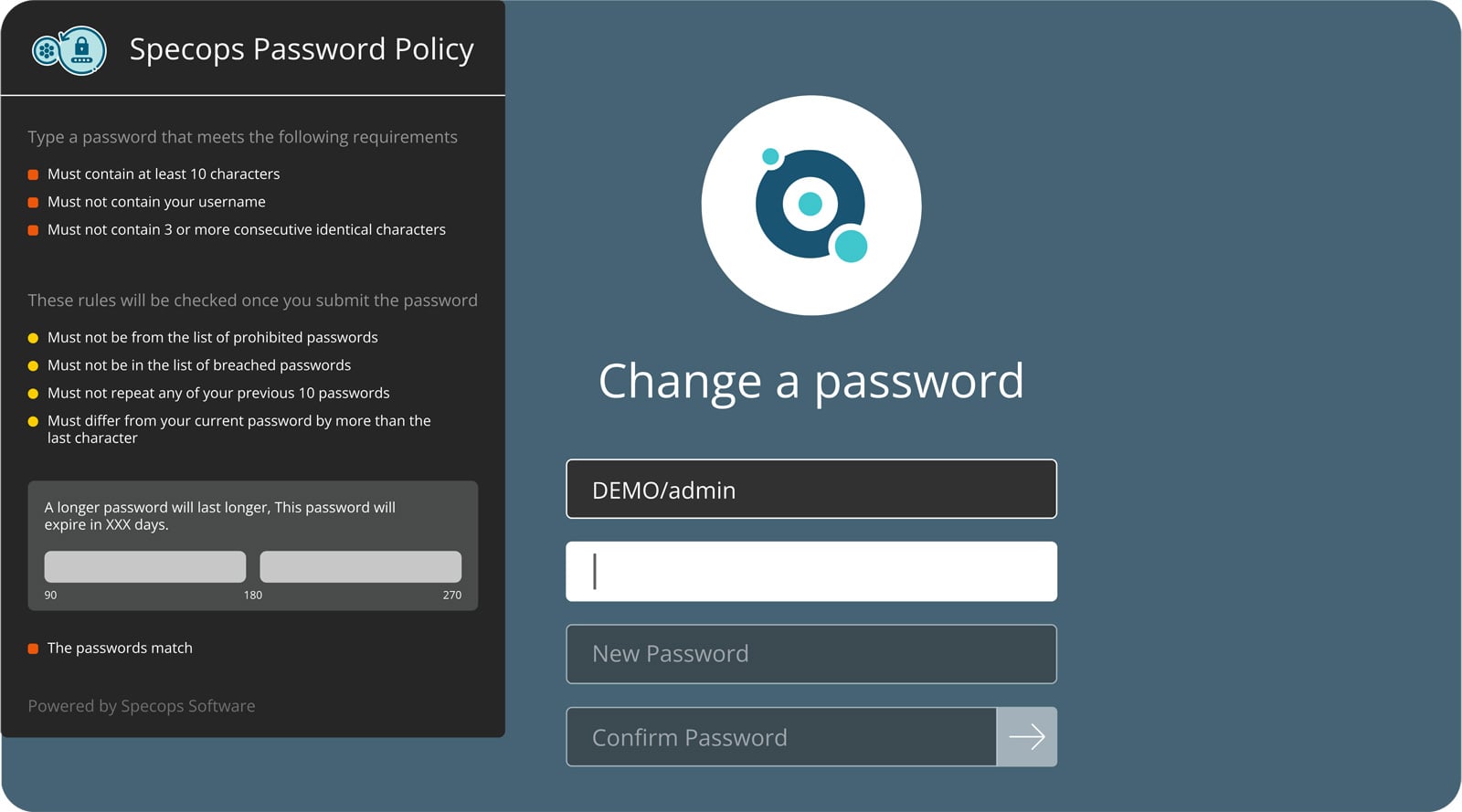

Addressing AI-assisted password attacks in Active Directory

To defend against AI-amplified attacks, organizations must move beyond compliance checkboxes to policies that address how passwords are actually compromised. In practice, length matters more than complexity.

AI models struggle with true randomness and length, which means an 18-character passphrase built from random words presents a greater obstacle than an 8-character string with special characters.
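
The sketch below generates a passphrase with Python's `secrets` module and estimates its entropy. The word list is supplied by the caller; the 7,776-word size used in the example is an assumption (it matches common dice-based lists), and both helper names are hypothetical.

```python
import math
import secrets


def passphrase(wordlist: list[str], words: int = 4, sep: str = "-") -> str:
    """Join words drawn uniformly at random with a CSPRNG."""
    return sep.join(secrets.choice(wordlist) for _ in range(words))


def entropy_bits(pool_size: int, picks: int) -> float:
    """Entropy of `picks` independent uniform draws from `pool_size` items."""
    return picks * math.log2(pool_size)


# Tiny demo list; a real deployment would use thousands of words.
demo_words = ["river", "lantern", "quartz", "meadow", "copper", "signal"]
print(passphrase(demo_words))
print(f"{entropy_bits(7776, 5):.1f} bits for 5 words from a 7,776-word list")
print(f"{entropy_bits(95, 8):.1f} bits for 8 random printable characters")
```

The estimate only holds when selection is truly random. A human-chosen 8-character string carries far less entropy than the theoretical figure printed above, which is the gap that pattern-learning models exploit.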

But even more importantly, you need visibility into whether employees are using passwords that have already been compromised in external breaches. No amount of hashing sophistication protects you if the plaintext password is already in an attacker's training dataset.

When compromised credentials appear in breach datasets, attackers no longer need to crack the password. At this point, the attacker simply uses the known password.
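
A breached-password check can be as simple as a hash lookup, as in the minimal sketch below. It assumes the breach corpus is available locally as a set of uppercase SHA-1 hex digests, a format some public breach corpora use. It is not a description of how any specific product performs the check, and `sha1_hex` and `is_breached` are hypothetical helpers.

```python
import hashlib


def sha1_hex(password: str) -> str:
    """Uppercase SHA-1 hex digest of the UTF-8 encoded password."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def is_breached(password: str, breached_hashes: set[str]) -> bool:
    """Return True if the password's digest appears in the corpus."""
    return sha1_hex(password) in breached_hashes


# Assumption: a tiny in-memory corpus standing in for a real dataset.
corpus = {sha1_hex("Password123!"), sha1_hex("Summer2024!")}
print(is_breached("Password123!", corpus))
print(is_breached("river-lantern-quartz-meadow", corpus))
```

Comparing digests rather than plaintext means the checker never needs to store the breached passwords themselves.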

With Specops Password Policy and Breached Password Protection, you can continuously protect your organization against over 4 billion known unique compromised passwords, including those that might otherwise meet complexity requirements, but that malware has already stolen.

The service updates daily based on real-world attack monitoring, ensuring protection against the latest compromised credentials appearing in breach datasets.

Custom dictionaries that block organization-specific terms (company names, product names, and common internal jargon) help prevent targeted attacks enabled by AI reconnaissance.
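
A custom dictionary check works best when it normalizes common character substitutions first. The sketch below is one possible approach under stated assumptions: the substitution table is illustrative, "contoso" is a placeholder company name, and `contains_blocked_term` is a hypothetical helper.

```python
# Assumption: a small table of common look-alike substitutions.
LEET = str.maketrans(
    {"@": "a", "4": "a", "0": "o", "3": "e", "1": "i", "!": "i", "$": "s", "5": "s"}
)


def normalize(text: str) -> str:
    """Lowercase the text and undo common character substitutions."""
    return text.lower().translate(LEET)


def contains_blocked_term(password: str, blocked_terms: set[str]) -> bool:
    """Return True if any blocked term appears in the normalized password."""
    candidate = normalize(password)
    return any(normalize(term) in candidate for term in blocked_terms)


blocked = {"contoso"}  # placeholder organization name
print(contains_blocked_term("C0nt0s0Rocks2025", blocked))
print(contains_blocked_term("river-lantern-quartz", blocked))
```

Normalizing both the password and the blocked terms keeps the comparison consistent, at the cost of occasional false positives when digits or symbols are translated into letters.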

When combined with passphrase support and length requirements that create real randomness, these controls make it significantly harder for AI models to guess passwords.

Assessing password exposure in Active Directory

Before implementing new controls, you need to understand your current exposure. Specops Password Auditor offers a free, read-only AD scan that identifies weak passwords, compromised credentials, and policy gaps, without requiring any changes to your environment.

It is a starting point for assessing where AI-powered attacks are most likely to succeed.

Generative AI has changed the balance of effort in password attacks, giving attackers a measurable advantage.

The question isn't whether you should strengthen your defenses; it's whether you'll do it before your credentials show up in the next breach.

Speak to a Specops expert about how to meet your unique challenges.

Sponsored and written by Specops Software.
