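This article discusses why high reputation redirectors are used in Command and Control (C2) systems and how they are designed, and describes how cloud services from AWS, Azure and GCP can be used to create these redirectors to hide C2 communications. It also covers domain fronting and its limitations, and categorises a range of cloud services and their configuration for use as high reputation redirectors. 2025-10-2 03:4:0 Author: thegreycorner.com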

- Why do we use high reputation redirectors in Command and Control designs?

- What about domain fronting? An aside….

- Protection/filtering for the C2 server’s HTTP endpoint

- What characteristics make a service suitable to be used as a high reputation redirector?

- Previous work on using cloud services as high reputation redirectors

- Abusable cloud services by category

- Abusable cloud services by provider

Last weekend, I presented at BSides Canberra on abusing native cloud services for high reputation redirectors for Command and Control (C2) systems. The talk discussed the high reputation redirector concept and why it is used in Command and Control designs, and covered a number of different native cloud services from AWS, Azure and GCP that could be used to create these redirectors. The slides from the presentation are shared here, and at some point in the near future the recording of the talk will be released on the BSides Canberra YouTube channel. I will update the post with a direct link to the talk once it’s available.

Given that I have been working on this subject since late 2023, and have all the relevant information scattered across multiple posts here, I thought I would do a single summary post to organise everything and make specific information easier to find.

This post will explain the cloud fronting concept, list the various applicable cloud services that can be used, and provide a direct link to the post that provides implementation detail for each service. Some of the information below providing high level context or descriptions is reproduced here from the original posts, so be aware you might see some repeated information that you can skip over once you follow through to the individual entries in the series.

To my knowledge, the idea for C2 fronting was first discussed back in 2017 in a post from the Cobalt Strike blog which can be found here. This post talks about two different concepts, domain fronting and high reputation redirectors. As discussed in the following section, domain fronting no longer works well in many modern CDNs, but the high reputation redirector concept still has value.

So, what do we mean by high reputation redirectors and why would we want to use them?

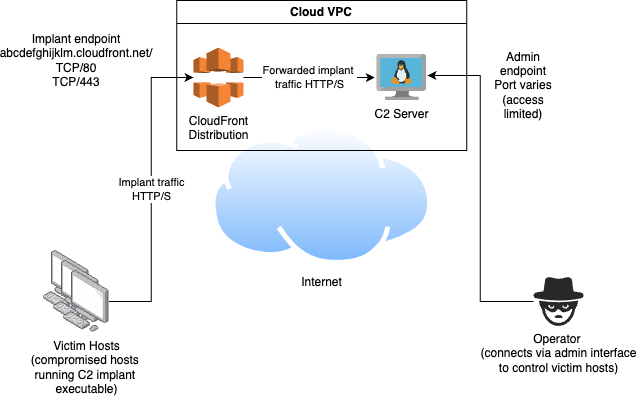

Consider this basic architecture diagram of a C2 system which uses HTTP/S for implant communications.

In this case we are using a single C2 host accessible on the Internet. We as an attacker compromise victim systems (shown on the left) and install our implant software. This software connects back to our C2 server via HTTP/S to request tasking. This tasking could involve running other executables, uploading files or data from the victim system and more. Operators of the C2 system (shown on the right) connect to the C2 via a separate admin communication channel to provide tasking through the C2 for the victim systems.

In this simple type of design the implant endpoint of the C2 is usually given a DNS name that victim hosts will connect to. In this case the DNS name is www.evil.com. Given that implant traffic will need to bypass any network security controls used by the victim system, choosing the right endpoint address can impact the likelihood of getting a successful connection.

In the case of the high reputation redirectors concept, we are trying to take advantage of the good reputation of the redirector service’s domain name to avoid our implant traffic being blocked due to poor domain reputation. Instead of some newly registered dodgy looking domain like www.evil.com, we connect to the domain used by the forwarding service. These forwarding services are widely used for legitimate traffic, and are often trusted by security solutions.

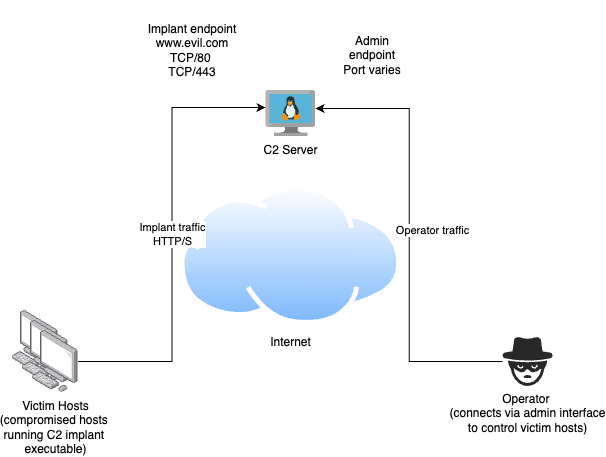

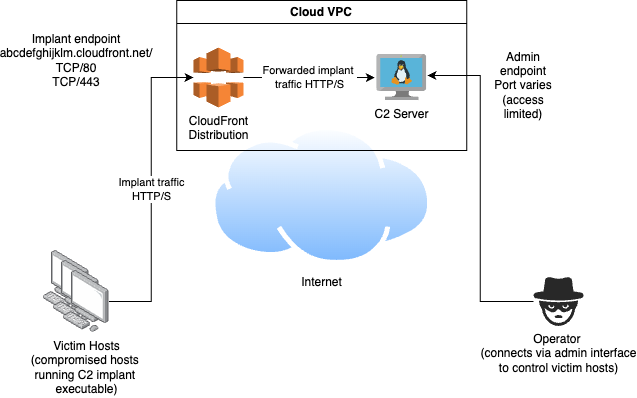

Here’s an example of a redesigned C2 system using a high reputation cloud service for fronting.

Here, the basic design has been modified to host the C2 service in a private VPC. The C2 server is no longer directly exposed to the Internet, and the implant traffic is directed to the C2 service by the use of an AWS CloudFront distribution, which has not had a custom domain associated with it. So, instead of the implants having to connect to the suspicious looking address of www.evil.com, the implants connect to the much more trustworthy address abcdefghijklm.cloudfront.net that the CloudFront service auto generates for us when we create a new distribution. We even get an SSL certificate for HTTPS traffic included!

Something that you will see repeated endlessly if you search on this topic, including in the Cobalt Strike blog post mentioned in the last section, is that you can use the CloudFront Content Delivery Network (CDN) (and sometimes other CDN services) to perform domain fronting for C2 services.

Back in 2023 when I first started looking into the high reputation redirector concept, I also looked into domain fronting given how many people were talking about the ideas simultaneously. What follows in this section is reproduced from what I wrote at the time on the subject, specific to AWS services. Keep in mind I have not done any testing to update this information on domain fronting for GCP or Azure services or to account for changes since 2023, so while the details about how domain fronting works are still accurate, specifics about whether or how it works with particular cloud services may have changed.

Domain fronting is a technique that attempts to hide the true destination of a HTTP request or redirect traffic to possibly restricted locations by abusing the HTTP routing capabilities of CDNs or certain other complex network environments. For version 1.1 of the protocol, HTTP involves a TCP connection being made to a destination server on a given IP address (normally associated with a domain name) and port, with additional TLS/SSL encryption support for the connection in HTTPS. Over this connection a structured plain text message is sent that requests a given resource and references a server in the Host header. Under normal circumstances the domain name associated with the TCP connection and the Host header in the HTTP message match. In domain fronting, the destination for the TCP connection domain name is set to a site that you want to appear to be visiting, and the Host header in the HTTP request is set to the location you actually want to visit. Both locations must be served by the same CDN.

The following curl command demonstrates in the simplest possible way how the approach is performed in suited environments. In the example, http://fakesite.cloudfront.net/ is what you want to appear to be visiting, and http://actualsite.cloudfront.net is where you actually want to go:

curl -H 'Host: actualsite.cloudfront.net' http://fakesite.cloudfront.net/

In this example, any DNS requests resolved on the client side are resolving the “fake” address, and packet captures will show the TCP traffic going to that fake system’s IP address. If HTTPS is supported, and you use a https:// URL, the actual destination you are visiting located in the HTTP Host header will also be hidden in the encrypted tunnel.
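As a rough illustration of the split between the connection target and the Host header, the sketch below builds (but does not send) the same plain HTTP request as the curl command above. The function name `build_fronted_request` is made up for this example, and the two hostnames are the placeholder names already used in this section.

```python
def build_fronted_request(connect_host: str, host_header: str, path: str = "/"):
    """Return the TCP target and raw HTTP/1.1 request for a fronted plain-HTTP GET.

    Hypothetical helper for illustration only; nothing is sent on the network.
    """
    # DNS resolution and the TCP connection use connect_host (the "fake" site).
    tcp_target = (connect_host, 80)
    # The Host header names the site the CDN is asked to route the request to.
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host_header}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )
    return tcp_target, request.encode("ascii")


target, raw = build_fronted_request(
    "fakesite.cloudfront.net", "actualsite.cloudfront.net"
)
print(target)
print(raw.decode("ascii"))
```

A network observer sees only the first value in the DNS lookup and TCP connection, while the CDN routes on the second.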

While this is a great way of hiding C2 traffic, due to a widespread practice of domain fronting being used to evade censorship restrictions, various CDNs cracked down on the approach a few years ago. Some changes were rolled back in some cases, but as of the time of my testing in 2023 this simple approach to domain fronting does not work in CloudFront for HTTPS. If the DNS hostname that you connect to does not match any of the certificates you have associated with your CloudFront distribution, you will get the following error:

The distribution does not match the certificate for which the HTTPS connection was established with.

This applies only to HTTPS - HTTP still works using the approach shown in the example above. However, because HTTP exposes the Host header value in the clear in network traffic, this leaves something to be desired when the purpose is hiding where you’re going. Depending on the capability of inspection devices, however, it might be good enough for certain purposes.

It is possible to make HTTPS domain fronting work on CloudFront via use of Server Name Indication (SNI) to specify a Server Name value during the TLS negotiation that matches a certificate in your CloudFront distribution. In other words, you make the TCP connection for HTTPS to a fake site on the CDN and set both the SNI server name for the TLS negotiation and the HTTP Host header to your actual intended host.

Here’s how this connection looks using openssl.

openssl s_client -quiet -connect fakesite.cloudfront.net:443 -servername actualsite.cloudfront.net < request.txt

depth=2 C = US, O = Amazon, CN = Amazon Root CA 1

verify return:1

depth=1 C = US, O = Amazon, CN = Amazon RSA 2048 M01

verify return:1

depth=0 CN = *.cloudfront.net

verify return:1

Where file request.txt contains something like the following:

GET / HTTP/1.1

Host: actualsite.cloudfront.net
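For readers more comfortable with Python than openssl, the following is a minimal sketch of the same connection using only the standard library `socket` and `ssl` modules. The function names are invented for this example, the hostnames are the placeholders from above, and the network call is defined but deliberately not invoked here.

```python
import socket
import ssl


def build_request(host_header: str, path: str = "/") -> bytes:
    """Build the same minimal request that request.txt contains."""
    return (
        f"GET {path} HTTP/1.1\r\nHost: {host_header}\r\nConnection: close\r\n\r\n"
    ).encode("ascii")


def fronted_https_get(connect_host: str, actual_host: str, timeout: float = 10.0) -> bytes:
    """Connect to connect_host:443 but present actual_host as both SNI and Host.

    Hypothetical helper; requires network access, so it is not called below.
    """
    context = ssl.create_default_context()
    with socket.create_connection((connect_host, 443), timeout=timeout) as sock:
        # server_hostname sets the SNI value and is also the name the
        # presented certificate is validated against.
        with context.wrap_socket(sock, server_hostname=actual_host) as tls:
            tls.sendall(build_request(actual_host))
            chunks = []
            while True:
                data = tls.recv(4096)
                if not data:
                    break
                chunks.append(data)
    return b"".join(chunks)


print(build_request("actualsite.cloudfront.net").decode("ascii"))
```

Calling `fronted_https_get("fakesite.cloudfront.net", "actualsite.cloudfront.net")` would mirror the `-connect` and `-servername` arguments of the openssl command.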

Unfortunately, I’m not aware of any C2 implant that supports specifying the TLS servername in a manner similar to what is shown above, so C2 HTTPS domain fronting using CloudFront is not a viable approach at this time. However, this does not mean that CloudFront is completely unusable for C2. As already mentioned, you can do domain fronting via HTTP. It’s also possible to access the distribution via HTTPS using the <name>.cloudfront.net name that is created randomly for you when you set up your distribution. This domain does have a good trust profile in some URL categorisation databases.

When I set up a C2 using one of these high reputation redirectors as an implant front end, I usually also add in a separate HTTP proxy service between the cloud service redirector and the C2’s HTTP listener. This provides some additional protection to the C2 server from malicious access or attempts to fingerprint the service. The ideal design would have this proxy sitting on its own restricted, dedicated bastion host. However, in the POC designs I have discussed in the majority of the blog posts in this series, I have used a design where I run this proxy on the same host running the C2 server. The proxy is an Apache instance listening on port 80, which will in turn forward certain incoming HTTP traffic on to the C2 HTTP endpoint, which is listening internally on port 8888.

A script for Ubuntu Linux that can be set in a cloud VM instance’s user data, and that will set this up for you using the Sliver C2 server, can be found here. This is the same script that is used in the deployment templates that I created for the Azure entries in this series.

The filtering in this design is pretty simple, configured in /var/www/html/.htaccess and works in the following manner:

- Any requests for any file that exists in the Apache web root (/var/www/html) will be served directly by Apache - there is a default index page, a robots.txt and a randomly named php script that exists for troubleshooting

- Any requests for any URL that does not exist in the Apache web root will be forwarded to the C2 endpoint if it comes from a non-bot/automated/command-line user agent, otherwise the request will get a generic 404 response

This is not perfect, and can be refined to filter more stringently based on the HTTP communication profile of a particular C2, but it does help protect against casual identification of the C2 or certain direct attacks against its implant endpoint, as the robust and tested Apache server receives the web traffic first and does some response header modifications. The use of Apache also gives us an easy and well tested way of setting up a HTTPS listener that forwards traffic as well if we want this.
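The filtering decision described above can be sketched in Python as a single routing function. This is only a model of the logic, not the actual .htaccess rules: the user agent pattern list, the treatment of an empty user agent, and the example file names are all assumptions made for illustration.

```python
import re

# Assumed pattern list: the real rules live in /var/www/html/.htaccess and
# may match a different set of user agents.
AUTOMATED_UA = re.compile(
    r"bot|crawl|spider|curl|wget|python-requests|scanner", re.IGNORECASE
)


def route_request(path: str, user_agent: str, webroot_files: set) -> str:
    """Return 'static', 'forward' or '404' for an incoming request."""
    # Files that exist in the web root are served directly by the web server.
    if path in webroot_files:
        return "static"
    # Assumption: a missing user agent is treated like an automated client.
    if not user_agent or AUTOMATED_UA.search(user_agent):
        return "404"
    # Everything else is passed on to the C2 HTTP endpoint.
    return "forward"


files = {"/index.html", "/robots.txt"}  # example web root contents
print(route_request("/robots.txt", "curl/8.0", files))
print(route_request("/checkin", "curl/8.0", files))
print(route_request("/checkin", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", files))
```

Tightening the filter for a particular C2 would mean replacing the final catch-all with checks against that C2's known paths and headers.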

So what qualities do we look for in a cloud service that suggests it would be a good candidate for use as a high reputation redirector for C2?

Let’s define the goal as achieving functional HTTP/S communication for unmodified C2 software. While there are C2 solutions that support all sorts of different communications methods such as Slack, Discord, GitHub and more, I was interested only in services that would allow support for the greatest breadth of C2 software packages using HTTP/S.

More specifically, the service needs to have the following characteristics to be a good candidate:

- Provides the ability to proxy, redirect or forward HTTP/1.1 traffic to a configurable backend. This backend could be a service in a private VPC hosted in the same cloud provider’s network, or a service accessible more generally over the Internet.

- Provides a trusted DNS name from the cloud provider. We don’t want to have to provide our own custom domain name, so we don’t have to worry about aging and building trust for that domain.

- Ideally, provides a trusted certificate for the cloud provider DNS domain, that will enable HTTPS for you without having to set it up yourself and have the domain appear in certificate transparency logs as a result of creating that certificate yourself using a service such as LetsEncrypt.

- Supports at least the GET and POST HTTP methods.

- Supports binary content in the HTTP message body for requests and responses.

- Supports the ability to send requests and responses uncached.

- Retains any cookies set by the C2 HTTP server (through the Set-Cookie header).

- Maintains the URL path and query parameters for all forwarded requests as-is. Some consistent content included at the start of the URL path is fine (e.g. /api/), as most C2 servers can deal with this, as long as everything after this section of the path is sent with the same value as sent by the client.
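One way to keep track of these criteria when evaluating a candidate service is a simple checklist structure, sketched below. The class and field names are invented for this example. In the sample profile, only the `keeps_set_cookie` value reflects something stated in this post (the Azure Static Web App API proxy strips Set-Cookie); the other values are placeholders, not test results.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RedirectorProfile:
    """Hypothetical checklist mirroring the characteristics listed above."""
    forwards_http_to_backend: bool
    provider_dns_name: bool
    get_and_post: bool
    binary_bodies: bool
    uncached: bool
    keeps_set_cookie: bool
    preserves_path_and_query: bool
    provider_tls_cert: bool = True  # listed as "ideally", so treated as optional


OPTIONAL = {"provider_tls_cert"}


def failed_requirements(profile: RedirectorProfile) -> list:
    """Return the names of the required characteristics the service lacks."""
    return [
        f.name
        for f in fields(profile)
        if f.name not in OPTIONAL and not getattr(profile, f.name)
    ]


# Placeholder values except keeps_set_cookie, which follows the post's text.
static_web_app_proxy = RedirectorProfile(
    forwards_http_to_backend=True,
    provider_dns_name=True,
    get_and_post=True,
    binary_bodies=True,
    uncached=True,
    keeps_set_cookie=False,
    preserves_path_and_query=True,
)
print(failed_requirements(static_web_app_proxy))
```

A service qualifies under this sketch only when the returned list is empty.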

A number of other people have discussed creating high reputation redirectors using cloud services before I started looking at the subject in 2023. This section will list a few of the posts on the subject that I found, categorised by cloud provider.

AWS

I found the following approaches discussed online for AWS services:

- This post by Adam Chester which talks about using the Serverless framework to create an AWS Lambda that will receive traffic from the AWS API Gateway and then forward it to another arbitrary destination. In Adam’s example, he forwards the traffic using ngrok to a local web server, but this approach could be used to point to an EC2 instance in the same AWS account or another server on the Internet.

- This post from Scott Taylor which again uses a Lambda to perform traffic redirection, but this time the entry point is via Lambda function URLs instead of the API Gateway. There is associated code/instructions to deploy this Lambda to AWS as well as to create an EC2 instance to forward the traffic to.

- Many posts on using a CloudFront distribution and domain fronting to receive traffic from CloudFront sites and send them to a C2 server somewhere else. Some examples are this, this, this and this.

Azure

I found the following approaches discussed online for Azure services:

- A number of posts discuss using Azure Function Apps, including this and this

- This previously mentioned post also discusses using the (no longer operational) Azure Edge CDN (replaced by Azure Front Door) and the Azure API Management Service

- Finally we have this post which talks about using an Azure Static Web App. The discussed approach, however, involves a configuration which performs a HTTP 301 client side redirect to the backend server, which allows leakage of the origin C2, something we don’t want. The discussed Static Web App service also allows the ability to proxy directly to a backend API service. This would ordinarily be a good way to provide a high reputation redirector, however this API proxying approach does remove any cookies set using the Set-Cookie header by the backend API service, meaning it will not work with certain C2 servers. This is why Azure Static Web Apps are not in my list of services for creating high reputation redirectors for C2.

GCP

I wasn’t able to find very many examples of other people abusing GCP for fronting C2 in this way. Perhaps it’s down to my poor searching, but the only example I found was this post from 2017 talking about using Google App Engine for C2 fronting. Even this post does not provide an example configuration that works for forwarding to generic C2 servers, and instead uses a custom C2 backend - but the App Engine service itself WILL work.

Now we will start to look at the cloud services (AWS, Azure and GCP) that I’ve personally verified for use as high reputation redirectors for C2 in the last 6 months. This section will group the services by service category, and the following section will group them by provider.

VM

When you create a virtual machine instance in Azure or AWS, and give that instance a public IP address, you can get a cloud provider domain name associated with that public IP. You can run your own proxy service on that instance that forwards incoming traffic reaching the address to a backend of your choice. This requires that you set up the proxying yourself on the instance, using Apache, Nginx or similar. If you want HTTPS, you also need to set this up yourself. You can do this by getting a certificate from LetsEncrypt for the cloud provider domain name that you get assigned and configuring it in your proxying service. This will result in your domain name ending up in the certificate transparency logs and receiving some amount of attention in the form of scanning.

Note: I only have a blog post discussing a POC implementation of the Azure VM approach, but the discussed approach is largely transferable to AWS as well. In addition, the POC (designed to only provide a working demonstration of the concept) involves running the C2 server on the same VM, with an Apache server proxying traffic to the C2 HTTP interface listening on localhost:8888. This configuration is discussed in some more detail in the section above. I don’t recommend running the C2 on the same host that is receiving public traffic for any production use, and would suggest instead running just the proxy on the public VM, and having the C2 elsewhere with the proxy forwarding traffic to it. The Apache config in the design forwards communication to http://backend:8888 (with the address of backend defined in the /etc/hosts file), and this can be modified to a new destination of your choice.

AWS EC2

- Domain name: ec2-<IP-ADDRESS>.<REGION>.compute.amazonaws.com

- HTTPS?: BYO (configure using LetsEncrypt or similar)

Azure VM

- Domain name: <YOUR-LABEL>.<REGION>.cloudapp.azure.com

- HTTPS?: BYO (configure using LetsEncrypt or similar)

Content Delivery Networks (CDN)

Content Delivery Networks make your content more globally available through points of presence around the world. These services are designed to just proxy HTTPS, so configuring these services for C2 redirection just involves setting the backend C2 service in the CDN configuration. These CDN services are also designed to cache, which will cause a problem for C2 HTTP communication, so you need to make sure you disable caching.
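When checking that caching really is disabled, one rough approach is to look at the response headers coming back through the CDN. The sketch below is a heuristic only: it assumes the CDN adds an `Age` header or an `X-Cache` header beginning with "Hit" to responses served from cache, which is behaviour commonly seen from CloudFront but should be verified for whichever service you are testing. The function name is made up for this example.

```python
def looks_cached(headers: dict) -> bool:
    """Heuristically decide whether a response was served from a CDN cache.

    Assumption: cached responses carry an Age header and/or an X-Cache value
    starting with "Hit" or "RefreshHit". Other CDNs may use other headers.
    """
    lowered = {name.lower(): value for name, value in headers.items()}
    # An Age header indicates the response spent time in a cache.
    if "age" in lowered:
        return True
    x_cache = lowered.get("x-cache", "").lower()
    return x_cache.startswith("hit") or x_cache.startswith("refreshhit")


print(looks_cached({"X-Cache": "Miss from cloudfront"}))
print(looks_cached({"X-Cache": "Hit from cloudfront"}))
print(looks_cached({"Content-Type": "text/html", "Age": "12"}))
```

Sending the same request twice and confirming that neither response trips this check is a quick sanity test, though not proof, that the no-caching configuration took effect.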

AWS CloudFront

- Domain name: <RANDOM>.cloudfront.net

- HTTPS?: Yes

Azure Front Door

- Domain name: <YOUR-LABEL>-<RANDOM>.*.azurefd.net

- HTTPS?: Yes

API Services

API Services make it easy to run your HTTP based API and make it available on the Internet. The approach required to configure these services to forward arbitrary HTTP traffic differs depending on the particular service. For AWS, you have options to either send incoming traffic to backend serverless code which can be written to forward the incoming messages, or you can configure direct proxying. For Azure and GCP, you need to provide specifically structured API definitions that will forward traffic from arbitrary routes.

Note: As mentioned, the AWS API Gateway can use two different backends, and both approaches are discussed in the same post linked below. The first approach is forwarding to a Lambda, and the second approach is direct proxying to a backend HTTP service. Lambdas can also have web traffic directed into them using two different approaches, which are discussed below in the Serverless category.

AWS API Gateway forwarding to Lambda

- Domain name: <RANDOM>.execute-api.<REGION>.amazonaws.com

- HTTPS?: Yes

AWS API Gateway direct proxying

- Domain name: <RANDOM>.execute-api.<REGION>.amazonaws.com

- HTTPS?: Yes

Azure API Management Service

- Domain name: <YOUR-LABEL>.azure-api.net

- HTTPS?: Yes

GCP API Gateway

- Domain name: <YOUR-LABEL>-<RANDOM>.<REGIONID>.gateway.dev

- HTTPS?: Yes

Managed Website services

These services make it easier to launch a website on the Internet. In the case of AWS Amplify this website can be a direct backend proxy configuration. For Elastic Beanstalk, you can run a custom code based web application that you write to forward traffic to a chosen backend.

AWS Amplify

- Domain name: <YOUR-LABEL>.<RANDOM>.amplifyapp.com

- HTTPS?: Yes

AWS Elastic Beanstalk

- Domain name: <YOUR-LABEL>.<RANDOM>.<REGION>.elasticbeanstalk.com

- HTTPS?: BYO (configure using LetsEncrypt or similar)

Container execution services

These services make it easier to run a container and make it accessible on the Internet for web traffic. To use this for C2 redirection, we run a custom container similar to this that will forward traffic to a configured backend. The Cloud Run service also operates as a Serverless service - if you provide it a container it runs the container, if you provide it code it will convert that to a container for you and run it.

Note: The GCP Cloud Run post only discusses implementation using the Serverless code approach.

AWS App Runner

- Domain name: <RANDOM>.<REGION>.awsapprunner.com

- HTTPS?: Yes

Azure Container Apps

- Domain name: <YOUR-LABEL>.<ENVIRONMENT-ID>.<REGION>.azurecontainerapps.io

- HTTPS?: Yes

GCP Cloud Run

- Domain name: <YOUR-LABEL>-<PROJECT>.<REGION>.run.app

- HTTPS?: Yes

Serverless code execution services

Serverless code execution services allow you to provide a bit of code that the cloud provider will wrap in a lightweight VM or container that is managed for you. This will then be made available to receive web traffic at a cloud provider domain name. The code used in the POCs I discuss below consists of variants of a Python app that rewrites HTTP requests. Flask is used for receiving web requests where this is not handled by the parent framework, and either requests or the Python standard HTTP and SSL libraries are used to replay the requests to the backend C2 service.

Note: Traffic can be directed into a Lambda using either a function URL or an API Gateway instance.
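The request rewriting step these apps perform can be sketched as a pure function, shown below using only the standard library. This is not the code from the POCs; it is a simplified model in which the function name, the set of dropped headers and the example hostname are assumptions, and the default backend address is the `http://backend:8888` value mentioned earlier in this post. Sending the rewritten request (with requests or the standard HTTP libraries) is left out.

```python
from urllib.parse import urlsplit, urlunsplit

# Headers that apply to a single connection (plus Host, which the outbound
# HTTP client would set itself). This list is an assumption for illustration.
DROPPED_HEADERS = {
    "connection", "keep-alive", "proxy-authenticate", "proxy-authorization",
    "te", "trailer", "transfer-encoding", "upgrade", "host",
}


def rewrite_request(method, url, headers, body, backend="http://backend:8888"):
    """Map an incoming request onto the backend, keeping path, query and body."""
    incoming = urlsplit(url)
    target = urlsplit(backend)
    # Only the scheme and host change; path and query are forwarded as-is.
    new_url = urlunsplit(
        (target.scheme, target.netloc, incoming.path or "/", incoming.query, "")
    )
    new_headers = {
        name: value
        for name, value in headers.items()
        if name.lower() not in DROPPED_HEADERS
    }
    # The body is passed through untouched so binary content survives.
    return {"method": method, "url": new_url, "headers": new_headers, "body": body}


out = rewrite_request(
    "POST",
    "https://example.lambda-url.us-east-1.on.aws/a/b?c=1",  # made-up hostname
    {"Host": "example.lambda-url.us-east-1.on.aws", "Cookie": "s=1"},
    b"\x00\x01",
)
print(out["url"])
print(out["headers"])
```

Keeping the rewrite separate from the framework handler makes it easy to reuse the same logic across Lambda, Function Apps and Cloud Run style entry points.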

AWS API Gateway forwarding to Lambda

- Domain name: <RANDOM>.execute-api.<REGION>.amazonaws.com

- HTTPS?: Yes

AWS Lambda function URL

- Domain name: <RANDOM>.lambda-url.<REGION>.on.aws

- HTTPS?: Yes

Azure Function App

- Domain name: <YOUR-LABEL>.azurewebsites.net

- HTTPS?: Yes

GCP Cloud Run

- Domain name: <YOUR-LABEL>-<PROJECT>.<REGION>.run.app

- HTTPS?: Yes

GCP Cloud Functions

- Domain name: <REGION>-<PROJECT>.cloudfunctions.net/<YOUR-LABEL>/

- HTTPS?: Yes

GCP App Engine

- Domain name: <PROJECT>.<REGION>.*.appspot.com

- HTTPS?: Yes

This section lists the same services from the section above, but categorised by cloud provider.

AWS Services

AWS CloudFront

- Domain name: <RANDOM>.cloudfront.net

- HTTPS?: Yes

AWS API Gateway forwarding to Lambda

- Domain name: <RANDOM>.execute-api.<REGION>.amazonaws.com

- HTTPS?: Yes

AWS API Gateway direct proxying

- Domain name: <RANDOM>.execute-api.<REGION>.amazonaws.com

- HTTPS?: Yes

AWS Lambda function URL

- Domain name: <RANDOM>.lambda-url.<REGION>.on.aws

- HTTPS?: Yes

AWS Amplify

- Domain name: <YOUR-LABEL>.<RANDOM>.amplifyapp.com

- HTTPS?: Yes

AWS Elastic Beanstalk

- Domain name: <YOUR-LABEL>.<RANDOM>.<REGION>.elasticbeanstalk.com

- HTTPS?: BYO

AWS App Runner

- Domain name: <RANDOM>.<REGION>.awsapprunner.com

- HTTPS?: Yes

AWS EC2 (follow the approach discussed in the post on abusing Azure VMs)

- Domain name: ec2-<IP-ADDRESS>.<REGION>.compute.amazonaws.com

- HTTPS?: BYO (configure using LetsEncrypt or similar)

Azure services

All of the following Azure services can be easily deployed in POC form using deployment templates as discussed in the post here. These templates are available on GitHub here.

Azure Function App

- Domain name: <YOUR-LABEL>.azurewebsites.net

- HTTPS?: Yes

Azure Front Door

- Domain name: <YOUR-LABEL>-<RANDOM>.*.azurefd.net

- HTTPS?: Yes

Azure VM

- Domain name: <YOUR-LABEL>.<REGION>.cloudapp.azure.com

- HTTPS?: BYO (configure using LetsEncrypt or similar)

Azure API Management Service

- Domain name: <YOUR-LABEL>.azure-api.net

- HTTPS?: Yes

Azure Container Apps

- Domain name: <YOUR-LABEL>.<ENVIRONMENT-ID>.<REGION>.azurecontainerapps.io

- HTTPS?: Yes

GCP services

GCP Cloud Run

- Domain name: <YOUR-LABEL>-<PROJECT>.<REGION>.run.app

- HTTPS?: Yes

GCP Cloud Functions

- Domain name: <REGION>-<PROJECT>.cloudfunctions.net/<YOUR-LABEL>/

- HTTPS?: Yes

GCP App Engine

- Domain name: <PROJECT>.<REGION>.*.appspot.com

- HTTPS?: Yes

GCP API Gateway

- Domain name: <YOUR-LABEL>-<RANDOM>.<REGIONID>.gateway.dev

- HTTPS?: Yes
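As a quick reference, the domain name formats listed in this post can be collected into a small lookup that maps a hostname to the cloud service that most likely issued it, which may be handy when reviewing proxy or DNS logs. The regular expressions below are my own loose translation of the formats above and the service labels are inferred from the domains, so treat both as assumptions rather than authoritative patterns.

```python
import re

# (pattern, service) pairs loosely derived from the domain formats in this post.
SERVICE_PATTERNS = [
    (r"^ec2-[^.]+\.[^.]+\.compute\.amazonaws\.com$", "AWS EC2"),
    (r"\.execute-api\.[^.]+\.amazonaws\.com$", "AWS API Gateway"),
    (r"\.lambda-url\.[^.]+\.on\.aws$", "AWS Lambda function URL"),
    (r"\.cloudfront\.net$", "AWS CloudFront"),
    (r"\.amplifyapp\.com$", "AWS Amplify"),
    (r"\.elasticbeanstalk\.com$", "AWS Elastic Beanstalk"),
    (r"\.awsapprunner\.com$", "AWS App Runner"),
    (r"\.azurewebsites\.net$", "Azure Function App"),
    (r"\.azurefd\.net$", "Azure Front Door"),
    (r"\.cloudapp\.azure\.com$", "Azure VM"),
    (r"\.azure-api\.net$", "Azure API Management Service"),
    (r"\.azurecontainerapps\.io$", "Azure Container Apps"),
    (r"\.run\.app$", "GCP Cloud Run"),
    (r"\.cloudfunctions\.net$", "GCP Cloud Functions"),
    (r"\.appspot\.com$", "GCP App Engine"),
    (r"\.gateway\.dev$", "GCP API Gateway"),
]
COMPILED = [(re.compile(pattern), name) for pattern, name in SERVICE_PATTERNS]


def classify(hostname: str):
    """Return the matching service label for a hostname, or None."""
    host = hostname.lower().rstrip(".")
    for pattern, name in COMPILED:
        if pattern.search(host):
            return name
    return None


print(classify("abcdefghijklm.cloudfront.net"))
print(classify("www.evil.com"))
```

Note that several of these suffixes are shared with other products from the same provider, so a match only narrows down the candidates.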
