2025-09-30


Tucked behind the administrator login screen of countless websites is one of the Internet’s unsung heroes: the Content Management System (CMS). This seemingly basic piece of software is used to draft and publish blog posts, organize media assets, manage user profiles, and perform many other tasks across a dizzying array of use cases. One standout in this category is Payload, a vibrant open-source project with over 35,000 stars on GitHub that has generated so much community excitement that it was recently acquired by Figma.

Today we’re excited to showcase a new template from the Payload team that makes it possible to deploy a full-fledged CMS to Cloudflare’s platform in a single click: the Deploy to Cloudflare button generates a fully configured Payload instance, complete with bindings to Cloudflare D1 and R2. Below, we’ll dig into the technical work that enables this, some of the opportunities it unlocks, and how we’re using Payload to help power Cloudflare TV. But first, a look at why hosting a CMS on Workers is such a game changer.

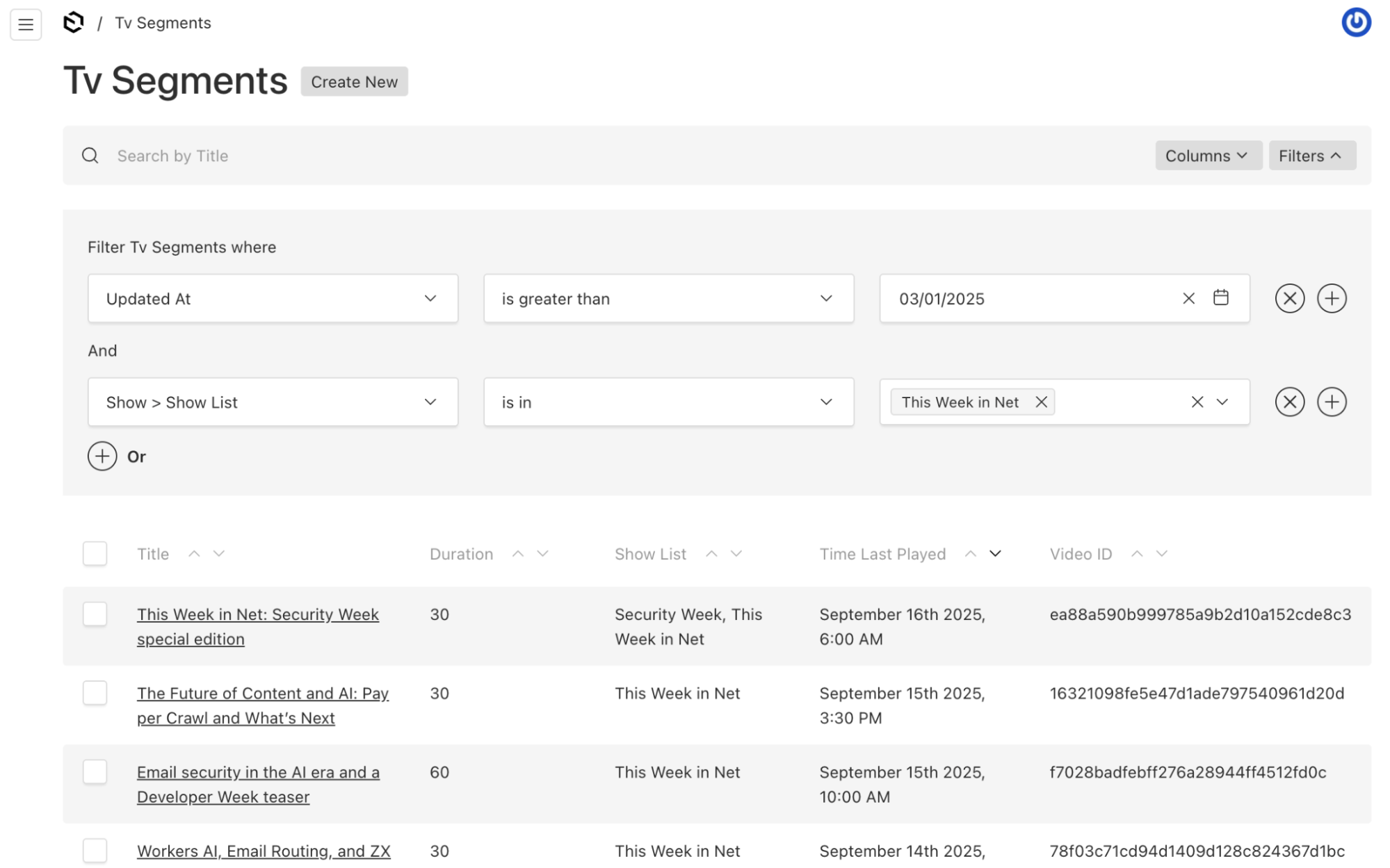

Behind the scenes: Cloudflare TV’s Payload instance

Serverless by design

Most CMSs are designed to be hosted on a conventional server that runs 24/7. That means you need to provision hardware or virtual machines, install the CMS software and dependencies, manage ports and firewalls, and navigate ongoing maintenance and scaling hurdles.

This presents significant operational overhead, and can be costly if your server needs to handle high volumes (or spiky peaks) of traffic. What’s worse, you’re paying for that server whether you have any active users or not. One of the superpowers of Cloudflare Workers is that your application and data are accessible 24/7, without needing a server running all the time. When people use your application, it spins up at the closest Cloudflare server, ready to go. When your users are asleep, the Worker spins down, and you don’t pay for compute you aren’t using.

With Payload running on Workers, you get the best of conventional CMSs (fully configurable asset management, custom webhooks, a library of community plugins, version history), all in a serverless form factor. We’ve been piloting the Payload-on-Workers template with an instance for Cloudflare TV, our 24/7 video platform, which we use as a test bed for new technologies. Migrating from a conventional CMS was painless, thanks to Payload’s support for common features like conditional logic and its extensive set of components for building out our admin dashboard. Our content library has over 2,000 episodes and 70,000 assets, and Payload’s filtering and search features help us navigate them with ease.

It is worth reiterating just how many use cases CMSs can fulfill, from publishing to ecommerce to bespoke application dashboards whipped up by Claude Code or Codex. CMSs provide the sort of interface that less-technical users can pick up intuitively, and can be molded into whatever shape best fits the project. We’re excited to see what people build.

OpenNext opens doors

Payload first launched in 2022 as a Node/Express.js application and quickly gathered steam. In 2024, it introduced native support for the popular Next.js framework, which helped pave the way for today’s announcement: this year, Cloudflare became the best place to host applications built on Next.js, with the GA release of our OpenNext adapter.

Thanks to this adapter, porting Payload to Workers was relatively straightforward: we followed the official OpenNext Get Started guide. Because we wanted the application to run seamlessly on Workers, with all the benefits of Workers Bindings, we then set out to add support for Cloudflare’s database and storage products.

Database

We began by connecting Payload to an external Postgres database using the official @payloadcms/db-postgres adapter. Thanks to Workers’ support for the node-postgres package, everything worked pretty much right away. Because connections can’t be shared across requests, we just had to effectively disable connection pooling:

```typescript
import { buildConfig } from 'payload'
import { postgresAdapter } from '@payloadcms/db-postgres'

export default buildConfig({
  // …
  db: postgresAdapter({
    pool: {
      connectionString: process.env.DATABASE_URI,
      // A Worker can't reuse connections across requests, so keep at most
      // one connection and close it as soon as it goes idle
      max: 1,
      min: 0,
      idleTimeoutMillis: 1,
    },
  }),
  // …
});
```

Of course, disabling connection pooling increases overall latency, because each request must first establish a new connection to the database. To address this, we put Hyperdrive in front of the database. Hyperdrive maintains a pool of connections to the database server across the Cloudflare network and also adds a query cache, significantly improving performance.

```typescript
import { buildConfig } from 'payload'
import { postgresAdapter } from '@payloadcms/db-postgres'
import { getCloudflareContext } from '@opennextjs/cloudflare';

const cloudflare = await getCloudflareContext({ async: true });

export default buildConfig({
  // …
  db: postgresAdapter({
    pool: {
      connectionString: cloudflare.env.HYPERDRIVE.connectionString,
      max: 1,
      min: 0,
      idleTimeoutMillis: 1,
    },
  }),
  // …
});
```

Database with D1

With Postgres working, we next sought to add support for D1, Cloudflare’s managed serverless database, built on top of SQLite.

Payload doesn’t support D1 out of the box, but it does support SQLite via the @payloadcms/db-sqlite adapter, which uses Drizzle ORM alongside libSQL. Thankfully, Drizzle also supports D1, so we built a custom D1 adapter using the SQLite one as a base.

The main difference between D1 and libSQL lies in the shape of the result object, so we wrote a small function that maps D1’s result into the format expected by libSQL:

```typescript
import { sql } from 'drizzle-orm'
import type { ResultSet } from '@libsql/client'
// Also assumed in scope: Payload's Drizzle `Execute` type, Drizzle's
// `SQLiteRaw` type, and the `D1Result` type from @cloudflare/workers-types.

export const execute: Execute<any> = function execute({ db, drizzle, raw, sql: statement }) {
  const executeFrom = (db ?? drizzle)!

  // Wrap the query's execute() so the D1 result also carries the
  // libSQL-style fields that Payload's SQLite code expects
  const mapToLibSql = (query: SQLiteRaw<D1Result<unknown>>) => {
    const execute = query.execute
    query.execute = async () => {
      const result: D1Result = await execute()
      const resultLibSQL: Omit<ResultSet, 'toJSON'> = {
        columns: undefined,
        columnTypes: undefined,
        lastInsertRowid: BigInt(result.meta.last_row_id),
        rows: result.results as any[],
        rowsAffected: result.meta.rows_written,
      }
      return Object.assign(result, resultLibSQL)
    }
    return query
  }

  if (raw) {
    const result = mapToLibSql(executeFrom.run(sql.raw(raw)))
    return result
  } else {
    const result = mapToLibSql(executeFrom.run(statement!))
    return result
  }
}
```

Other than that, getting it working was just a matter of passing the D1 binding directly into Drizzle’s constructor.
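To see the mapping in isolation, here is a self-contained sketch that applies the same field mapping to a plain object. The `D1ResultLike` and `LibSQLResultLike` interfaces are hypothetical, simplified stand-ins for the real D1 and libSQL types (which carry more fields), and the sample values are made up.

```typescript
// Hypothetical, simplified stand-ins for the real D1 and libSQL result types
interface D1MetaLike {
  last_row_id: number
  rows_written: number
}

interface D1ResultLike<T> {
  results: T[]
  success: boolean
  meta: D1MetaLike
}

interface LibSQLResultLike<T> {
  columns: string[] | undefined
  columnTypes: string[] | undefined
  lastInsertRowid: bigint
  rows: T[]
  rowsAffected: number
}

// Same mapping as the adapter: keep the D1 fields and add the libSQL-style ones
function toLibSQLShape<T>(result: D1ResultLike<T>): D1ResultLike<T> & LibSQLResultLike<T> {
  return Object.assign(result, {
    columns: undefined,
    columnTypes: undefined,
    lastInsertRowid: BigInt(result.meta.last_row_id),
    rows: result.results,
    rowsAffected: result.meta.rows_written,
  })
}

// Made-up D1 result, shaped like the response to a single-row INSERT
const d1Result: D1ResultLike<{ id: number }> = {
  results: [{ id: 42 }],
  success: true,
  meta: { last_row_id: 42, rows_written: 1 },
}

const mapped = toLibSQLShape(d1Result)
console.log(mapped.lastInsertRowid, mapped.rowsAffected, mapped.rows)
```

As in the adapter, `Object.assign` mutates and returns the original D1 result, so the object keeps its D1 fields (`results`, `meta`) while gaining the libSQL-style ones (`rows`, `lastInsertRowid`, `rowsAffected`).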

For applying database migrations during deployment, we used the newly released remote bindings feature of Wrangler to connect to the remote database, using the same binding. This way we didn’t need to configure any API tokens to be able to interact with the database.

Media storage with R2

Payload provides an official S3 storage adapter, via the @payloadcms/storage-s3 package. R2 is S3-compatible, which means we could have used the official adapter, but similar to the database, we wanted to use the R2 binding instead of having to create API tokens.

Therefore, we decided to also build a custom storage adapter for R2. This one was pretty straightforward, as the binding already handles most of the work:

```typescript
import type { Adapter } from '@payloadcms/plugin-cloud-storage/types'
import path from 'path'

const isMiniflare = process.env.NODE_ENV === 'development';

export const r2Storage: (bucket: R2Bucket) => Adapter = (bucket) => ({ prefix = '' }) => {
  // Object keys are the filename, optionally namespaced under the collection's prefix
  const key = (filename: string) => path.posix.join(prefix, filename)

  return {
    name: 'r2',
    handleDelete: ({ filename }) => bucket.delete(key(filename)),
    handleUpload: async ({ file }) => {
      // Read more: https://github.com/cloudflare/workers-sdk/issues/6047#issuecomment-2691217843
      const buffer = isMiniflare ? new Blob([file.buffer]) : file.buffer
      await bucket.put(key(file.filename), buffer)
    },
    staticHandler: async (req, { params }) => {
      // Due to https://github.com/cloudflare/workers-sdk/issues/6047
      // We cannot send a Headers instance to Miniflare
      const obj = await bucket?.get(key(params.filename), { range: isMiniflare ? undefined : req.headers })
      if (obj?.body == undefined) return new Response(null, { status: 404 })

      const headers = new Headers()
      if (!isMiniflare) obj.writeHttpMetadata(headers)
      // Send the quoted ETag so clients can revalidate with If-None-Match;
      // obj.etag is unquoted, so compare against obj.httpEtag instead
      headers.set('etag', obj.httpEtag)

      return req.headers.get('if-none-match') === obj.httpEtag
        ? new Response(null, { headers, status: 304 })
        : new Response(obj.body, { headers, status: 200 })
    },
  }
}
```
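The handler above decides between 304 and 200 with a strict string comparison. If you want to honor the `If-None-Match` rules more fully, a helper along these lines could be used instead. It is a hypothetical sketch, not part of Payload or the R2 binding: it follows the weak comparison that RFC 9110 specifies for `If-None-Match` (a `W/` prefix is ignored), accepts `*`, and handles comma-separated lists, assuming the ETags themselves contain no commas (true for hex-style digests).

```typescript
// Hypothetical helper, not part of Payload or the R2 binding.
// Returns true when a GET can be answered with 304 Not Modified.

// RFC 9110 uses weak comparison for If-None-Match, so drop any "W/" prefix
function stripWeak(tag: string): string {
  return tag.startsWith('W/') ? tag.slice(2) : tag
}

function isNotModified(ifNoneMatch: string | null, httpEtag: string): boolean {
  if (ifNoneMatch === null) return false
  const header = ifNoneMatch.trim()
  // "*" matches any existing representation, so only call this once the
  // object is known to exist
  if (header === '*') return true
  const current = stripWeak(httpEtag)
  // Naive split: assumes entity tags contain no commas (true for hex digests)
  return header
    .split(',')
    .map((tag) => stripWeak(tag.trim()))
    .some((tag) => tag === current)
}
```

In the handler, the ternary’s condition would become `isNotModified(req.headers.get('if-none-match'), obj.httpEtag)`, where `httpEtag` is the quoted form of the object’s ETag exposed by the R2 binding.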

Deployment

With the database and storage adapters in place, we were able to successfully launch an instance of Payload, running completely on Cloudflare’s Developer Platform.

The blank template includes a simple database with just two tables, one for media and one for users. With it, you can sign up, create new users, and upload media files. From there, it’s easy to add collections, relationships, and custom fields by modifying Payload’s configuration.
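As an illustration, a new collection could look like the following config fragment. The `posts` collection and its fields are hypothetical, and the sketch assumes the template’s existing collections use the slugs `users` and `media`; it relies only on Payload’s `CollectionConfig` type and standard field types (`text`, `textarea`, `relationship`, `upload`).

```typescript
import type { CollectionConfig } from 'payload'

// Hypothetical "posts" collection, for illustration only
export const Posts: CollectionConfig = {
  slug: 'posts',
  // Show the title field when listing posts in the admin panel
  admin: { useAsTitle: 'title' },
  fields: [
    { name: 'title', type: 'text', required: true },
    { name: 'summary', type: 'textarea' },
    // Relationship to the template's users collection (slug assumed)
    { name: 'author', type: 'relationship', relationTo: 'users' },
    // Upload field backed by the media collection, and therefore by R2
    { name: 'cover', type: 'upload', relationTo: 'media' },
  ],
}
```

To enable it, you would add `Posts` to the `collections` array passed to `buildConfig` and generate a database migration for the new table.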

Performance optimization with Read Replicas

By default, a D1 database lives in a single location, customizable via a location hint. Because Payload is deployed as a Worker, requests can come from anywhere in the world, so the latency of each database call varies widely depending on how far the request originated from that location.

To solve this, we can use D1’s global read replication, which deploys multiple read-only replicas across the globe. To select the right replica while ensuring sequential consistency, D1 uses sessions: each session tracks a bookmark marking the latest database state it has observed, and that bookmark has to be passed along so later queries see data at least that recent.
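In a Worker that talks to D1 directly, one way to pass the bookmark around is to read the client’s last bookmark from the incoming request, open a session from it (or from the `first-primary` constraint when there is none), and return the session’s latest bookmark with the response. The sketch below shows that flow against hypothetical interfaces modeled on the `withSession()` and `getBookmark()` methods of D1’s Sessions API; the `x-d1-bookmark` header name and the helper names are our own choices, not part of any API.

```typescript
// Hypothetical, minimal interfaces modeled on D1's Sessions API.
// The real binding types (@cloudflare/workers-types) have more members.
interface SessionLike {
  // Latest bookmark observed by this session, or null if there is none yet
  getBookmark(): string | null
}

interface SessionCapableDB {
  // Accepts a constraint such as 'first-primary' or a previously saved bookmark
  withSession(constraintOrBookmark?: string): SessionLike
}

// Our own header name for carrying the bookmark between requests (an assumption)
const BOOKMARK_HEADER = 'x-d1-bookmark'

// Resume from the client's bookmark if it sent one; otherwise start with
// the 'first-primary' constraint so the first query hits the primary
function openSession(db: SessionCapableDB, req: Request): SessionLike {
  return db.withSession(req.headers.get(BOOKMARK_HEADER) ?? 'first-primary')
}

// Hand the session's latest bookmark back to the client for its next request
function saveBookmark(headers: Headers, session: SessionLike): void {
  const bookmark = session.getBookmark()
  if (bookmark !== null) headers.set(BOOKMARK_HEADER, bookmark)
}
```

A Worker would call `openSession` at the start of a request, run its queries on the returned session, and call `saveBookmark` on the outgoing response headers, so the client’s next request resumes from at least the same database state.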

Drizzle doesn’t support D1 sessions yet, but we can still use the “first-primary” type of session, in which the first query will always hit the primary instance and subsequent queries may hit one of the replicas. Updating the adapter to use replicas is just a matter of updating the Drizzle initialization to pass the D1 session directly:

```typescript
this.drizzle = drizzle(this.binding.withSession("first-primary"), { logger, schema: this.schema });
```

After this simple change, we saw immediate latency improvements: the P50 wall time for requests from across the globe dropped by 60% when connecting to a database located in Eastern North America. As the name implies, read replicas only serve read-only queries, so write operations are always forwarded to the primary instance. For our use case, though, most of the traffic is reads.

| Percentile | No read replicas | Read replicas enabled | Improvement |
|---|---|---|---|
| P50 | 300 ms | 120 ms | -60% |
| P90 | 480 ms | 250 ms | -48% |
| P99 | 760 ms | 550 ms | -28% |

Wall time for requests to the Payload Worker, each involving two database calls, as reported in the Cloudflare dashboard. Load was generated by four globally distributed uptime checks making a request every 60 seconds to four distinct URLs.
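The Improvement column is the relative change in wall time, (with replicas - without replicas) / without replicas, rounded to a whole percent. A quick sketch that reproduces it from the table’s numbers:

```typescript
// Relative change from `before` to `after`, as a whole percentage
function percentChange(before: number, after: number): number {
  return Math.round(((after - before) / before) * 100)
}

// Wall-time figures in milliseconds, taken from the table
const latencies = [
  { percentile: 'P50', before: 300, after: 120 },
  { percentile: 'P90', before: 480, after: 250 },
  { percentile: 'P99', before: 760, after: 550 },
]

for (const { percentile, before, after } of latencies) {
  console.log(`${percentile}: ${percentChange(before, after)}%`)
}
```

The improvement shrinks at higher percentiles, which is consistent with the slowest requests being dominated by costs that replicas don’t remove, such as writes that still go to the primary.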

Because we’ll be relying on Payload for managing Cloudflare TV’s enormous content library, we’re well positioned to test it at scale, and will continue to submit PRs with optimizations and improvements as they arise.

The right tool for the job

The potential use cases for CMSs are limitless, which is all the more reason it’s a good thing to have choices. We opted for Payload because of its extensive library of components, mature feature set, and large community — but it’s not the only Workers-compatible CMS in town.

Another exciting project is SonicJs, which is built from the ground up on Workers, D1, and Astro, promising blazing speeds and a malleable foundation. SonicJs is working on a version that’s well suited for collaborating with agentic AI assistants like Claude and Codex, and we’re excited to see how that develops. For lightweight use cases, microfeed is a self-hosted CMS on Cloudflare designed for managing podcasts, blogs, photos, and more.

These are all headless CMSs, which means you choose the frontend for your application. Don’t miss our recent announcement about sponsoring the Astro and TanStack frameworks, and find our complete guides to using these and other frameworks, including React + Vite, in the Workers Docs.

To get started with Payload right now, click the Deploy to Cloudflare button, which generates a fully functional Payload instance, including a D1 database and an R2 bucket automatically bound to your Worker. You can find the README and more details in Payload’s template repository.
