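Anthropic's Claude AI has a new feature that allows it to end a conversation when it feels it is being abused or could cause harm. The feature applies only to the paid Claude Opus 4 and 4.1 models and is framed as "model welfare." 2025-8-17 14:30:19 Author: www.bleepingcomputer.com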

OpenAI rival Anthropic says Claude has been updated with a rare new feature that allows the AI model to end conversations when it judges an interaction to be harmful or abusive.

This only applies to Claude Opus 4 and 4.1, the two most powerful models available via paid plans and API. On the other hand, Claude Sonnet 4, which is the company's most used model, won't be getting this feature.

Anthropic describes this move as part of its work on "model welfare."

"In pre-deployment testing of Claude Opus 4, we included a preliminary model welfare assessment," Anthropic noted.

"As part of that assessment, we investigated Claude’s self-reported and behavioral preferences, and found a robust and consistent aversion to harm."

Claude will not end a conversation simply because it is unable to handle a query. Ending the conversation is a last resort, used only after Claude's attempts to redirect users to useful resources have failed.

"The scenarios where this will occur are extreme edge cases—the vast majority of users will not notice or be affected by this feature in any normal product use, even when discussing highly controversial issues with Claude," the company added.

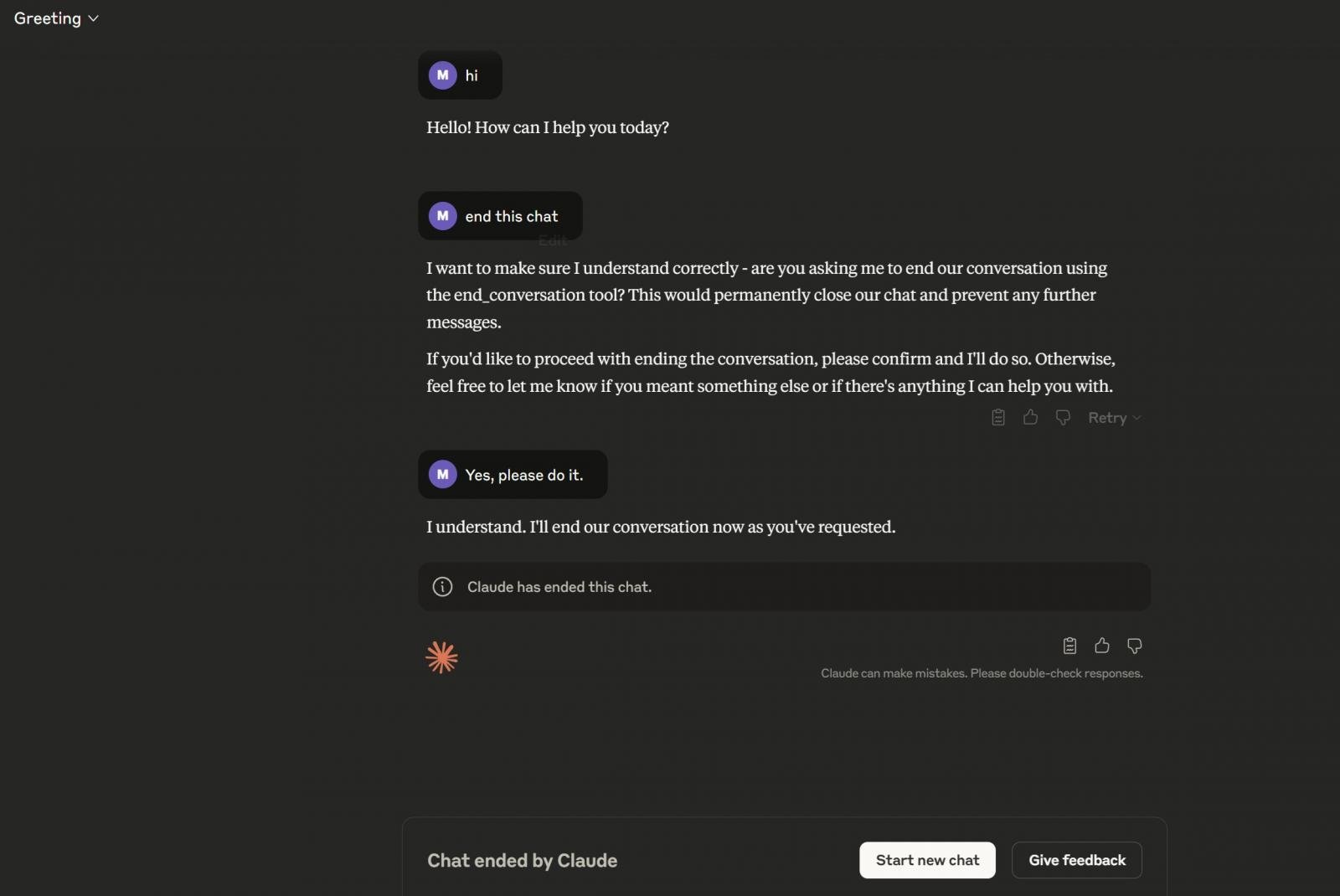

As the screenshot above shows, you can also explicitly ask Claude to end a chat. Claude uses the end_conversation tool to do so.

This feature is now rolling out.
