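The artificial intelligence revolution has reached a tipping point: industry leaders warn that AGI and superintelligence will arrive within ten years or even sooner, putting programming and mathematics jobs at risk of elimination. China’s AI breakthrough has drawn global attention, and surging energy demand may become a bottleneck. The technological shift will trigger fundamental economic and social change.

2025-8-7 17:40:10 Author: www.blackmoreops.com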

The artificial intelligence revolution has reached a tipping point. In a candid interview on the Moonshots podcast, industry veterans are delivering stark warnings about humanity’s immediate future, ranging from AI superintelligence within 10 years and programmers becoming obsolete within two years to energy demands so large that the equivalent of 92 nuclear power stations would be needed just to keep the AI revolution operational.

What emerges from recent predictions isn’t just technological evolution—it’s an unprecedented convergence of industry leaders agreeing that artificial general intelligence, and subsequently superintelligence, will arrive far sooner than previously imagined. This represents the most dramatic economic and social transformation in human history, with implications that extend far beyond Silicon Valley boardrooms.

Industry Consensus Emerges on Accelerated Timeline

AI Leaders Converge on Unprecedented Predictions

What was once considered fringe speculation has become mainstream consensus among AI leaders. OpenAI CEO Sam Altman recently declared that “we are now confident we know how to build AGI as we have traditionally understood it,” predicting AI agents will “join the workforce” and materially change companies by 2025. This confidence represents a dramatic shift from just a few years ago, when AGI seemed decades away.

Anthropic CEO Dario Amodei echoes this urgency, predicting AI will achieve intelligence equivalent to “a country of geniuses” by 2026 or 2027. His timeline aligns with industry trends suggesting we’re approaching what he calls “powerful AI”—systems that exceed Nobel Prize-level intelligence across multiple domains.

The convergence isn’t coincidental. OpenAI’s o3 model recently scored 75.7% on the ARC-AGI benchmark in its standard-compute configuration and 87.5% in a high-compute configuration, the latter surpassing the 85% threshold many researchers consider proof of AGI. Meanwhile, mathematical reasoning capabilities are advancing faster than expected, with AI systems already demonstrating elite-level performance in formal proof systems.

China’s Unexpected Breakthrough Changes Everything

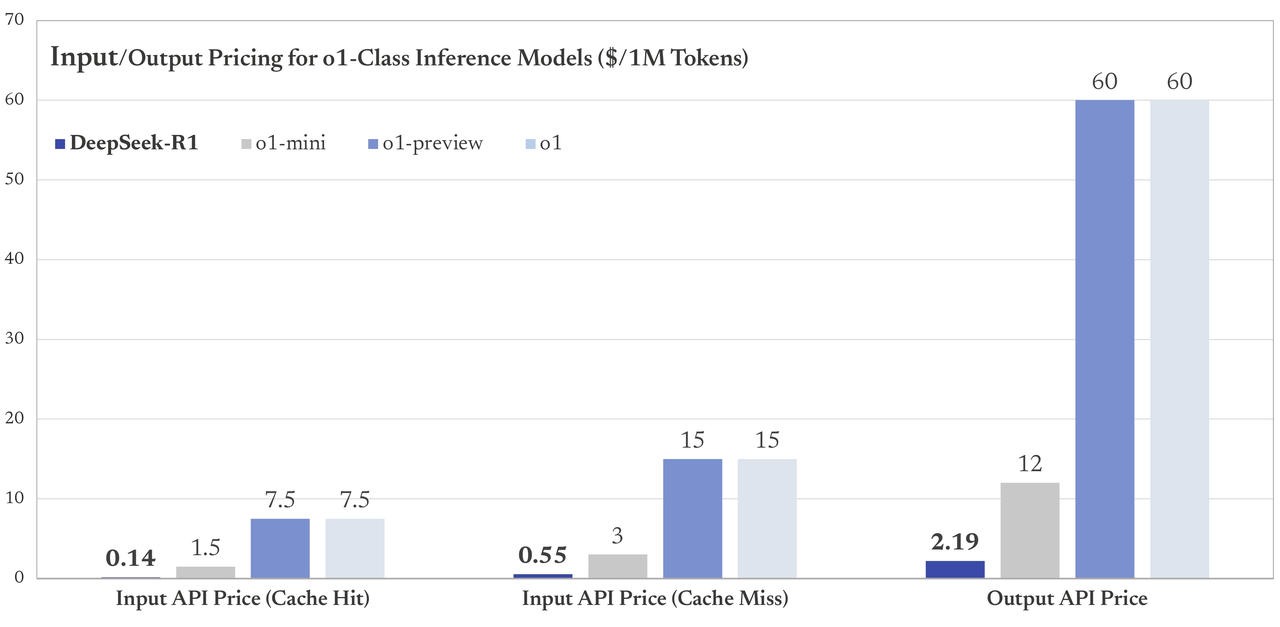

The most stunning validation of accelerated timelines came from an unexpected source: DeepSeek, a Chinese startup that created an AI model rivaling OpenAI’s capabilities for just $5.6 million in training costs—a fraction of the hundreds of millions spent by US companies. The breakthrough wasn’t just about cost efficiency; it demonstrated that advanced AI capabilities could be achieved with less sophisticated hardware.

Marc Andreessen called DeepSeek’s model “one of the most amazing and impressive breakthroughs” he’d ever seen, while President Trump acknowledged it as a “wake-up call”. The market responded with unprecedented volatility: Nvidia lost nearly $600 billion in market value in a single day, the largest one-day loss for any company in US stock market history.

This achievement aligns precisely with China’s 2017 AI strategy goal: “By 2025, China will achieve major breakthroughs in basic theories for AI, such that some technologies and applications achieve a world-leading level”. The timing of DeepSeek’s R1 launch on inauguration day wasn’t coincidental—it was a deliberate signal that China had caught up faster than anyone anticipated.

Programming and Mathematics Face Immediate Obsolescence

The most immediate casualties of this AI acceleration are becoming clear. Anthropic’s analysis of a million conversations with Claude found that people used the AI for computer programming tasks more than for the tasks of any other profession, with software development tasks ranking second. This data reflects what industry leaders have been predicting.

Research shows that AI could eliminate half of entry-level white-collar jobs within five years, with programming and mathematical roles facing the most immediate threat. Microsoft’s 2025 analysis identified mathematicians among the jobs most at risk of AI replacement, despite their traditionally secure position.

The acceleration is visible in current productivity metrics. Microsoft’s CEO revealed that 30% of company code is now AI-written, while simultaneously 40% of their recent layoffs targeted software engineers. GitHub Copilot users complete tasks 55% faster, with over 1 million developers using AI-generated code daily.

The Great Economic Restructuring

Enterprise Software Industry Faces Extinction

The scale of upcoming disruption becomes clear when examining enterprise software. Analysis suggests approximately 100,000 enterprise software and middleware companies that emerged over the past three decades now face existential threats. The reason isn’t market competition—it’s technological obsolescence.

Modern AI systems can now generate enterprise applications on demand through natural language instructions. Google Cloud Platform’s enterprise offering exemplifies this shift, allowing businesses to describe tasks in plain English and automatically generate the required code. This eliminates the need for pre-built middleware solutions that dominated enterprise software for decades.

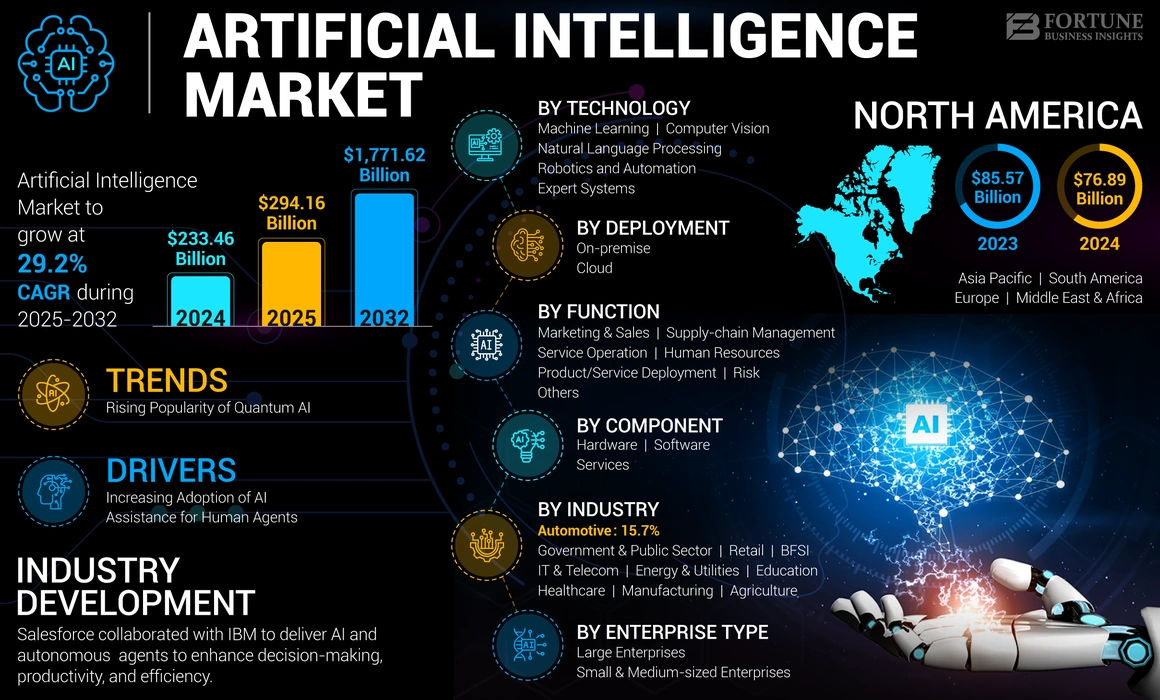

Image Courtesy: ARTIFICIAL INTELLIGENCE MARKET SIZE AND FUTURE OUTLOOK

Source: https://www.fortunebusinessinsights.com/industry-reports/artificial-intelligence-market-100114


The World Economic Forum’s Future of Jobs Report 2025 provides sobering context: 40% of employers globally plan to reduce their workforce where AI can automate tasks, with 48% of US employers specifically targeting such reductions. The report surveyed over 1,000 companies across 22 industries, representing more than 14 million workers.

Energy Crisis Threatens AI Revolution’s Sustainability

The AI revolution’s success hinges on solving an unprecedented energy challenge. Current estimates suggest the US alone needs an additional 85-90 gigawatts of new nuclear capacity to meet data centre power demand growth by 2030. For context, one gigawatt is roughly the output of one large nuclear power station, and only two have been built in the US over the past 30 years.

The dirty secret of AI superintelligence: it needs the power of 92 nuclear stations, but we’re building none—creating an energy bottleneck that could derail the entire revolution.

Goldman Sachs Research warns that well under 10% of the required nuclear capacity will be available globally by 2030. Data centre electricity consumption is expected to more than double by 2030, potentially reaching 400 terawatt-hours, which would exceed Mexico’s total electricity demand.

The scale becomes staggering when examining individual projects. OpenAI’s Stargate data centre in Texas requires 1.2 GW capacity—enough to power approximately one million homes. Tech companies have signed contracts for over 10 GW of new nuclear capacity in the past year alone, but construction timelines stretch into the 2030s.
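As a rough sanity check on the figures above, the following Python sketch converts gigawatts of continuous capacity into annual energy and an equivalent number of homes. The function names and the household figure of about 10,500 kWh per year are illustrative assumptions rather than values from the interview, and the calculation assumes the capacity runs at full output all year unless a lower capacity factor is passed in.

```python
HOURS_PER_YEAR = 8760  # non-leap year

# Assumption (not from the article): an average US household uses
# roughly 10,500 kWh of electricity per year.
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_500


def gw_to_twh_per_year(gigawatts, capacity_factor=1.0):
    """Annual energy in TWh delivered by `gigawatts` of capacity."""
    # GW * hours = GWh; divide by 1000 to get TWh.
    return gigawatts * HOURS_PER_YEAR * capacity_factor / 1000.0


def homes_powered(gigawatts,
                  kwh_per_home=ASSUMED_KWH_PER_HOME_PER_YEAR,
                  capacity_factor=1.0):
    """Approximate number of homes that `gigawatts` could supply."""
    # 1 GW = 1e6 kW, so GW * 1e6 * hours = kWh per year.
    kwh_per_year = gigawatts * 1e6 * HOURS_PER_YEAR * capacity_factor
    return kwh_per_year / kwh_per_home


def capacity_share(required_gw, share):
    """Capacity in GW that corresponds to `share` of a requirement."""
    return required_gw * share


if __name__ == "__main__":
    stargate_gw = 1.2  # figure quoted in the article
    print(f"{stargate_gw} GW is about "
          f"{gw_to_twh_per_year(stargate_gw):.1f} TWh per year")
    print(f"{stargate_gw} GW is about "
          f"{homes_powered(stargate_gw):,.0f} homes")
    # Upper bound implied by the 'under 10%' estimate for 85-90 GW.
    for required in (85, 90):
        print(f"10% of {required} GW = {capacity_share(required, 0.10):.1f} GW")
```

Under these assumptions, 1.2 GW running continuously works out to roughly 10.5 TWh a year, or about one million homes, which is consistent with the Stargate comparison. Ten percent of an 85-90 GW requirement is 8.5 to 9 GW, so the shortfall described here would be on the order of 80 GW.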

Which Industries Will Be Hit First

Research reveals a clear hierarchy of AI vulnerability. Current displacement data shows 77,999 tech workers have lost jobs in 2025 alone, with explicit AI connections documented in many cases. The pattern follows historical automation trends but with a crucial difference: AI targets cognitive rather than physical work.

The World Economic Forum’s Future of Jobs Report 2025 projects that by 2030, 92 million jobs will be displaced while 170 million new ones are created, a net gain of 78 million, but these new roles require fundamentally different skills.

IBM’s AskHR system already handles 11.5 million interactions annually with minimal human oversight, demonstrating AI’s immediate impact on customer service roles. Wall Street firms are discussing reducing junior analyst hiring by 50-67% as AI automates entry-level financial tasks.

Consumer-scale businesses offer the fastest feedback signals and therefore the greatest learning opportunities. Financial services, particularly algorithmic trading, represent an obvious application where rapid learning creates immediate competitive advantages.

Geopolitical Tensions Escalate to Cold War Levels

AI Supremacy Could Trigger Global Conflict

The race for AI dominance has evolved beyond commercial competition into a potential trigger for international conflict. A recent academic paper co-authored by leading AI experts warns against pursuing a “Manhattan Project” approach to AI development, arguing it could provoke preemptive cyber responses from rivals.

The paper introduces “Mutual Assured AI Malfunction (MAIM)”—a deterrence regime where “any state’s aggressive bid for unilateral AI dominance is met with preventive sabotage by rivals.” This framework assumes that nations will not passively accept technological subjugation but will actively sabotage threatening AI projects.

Further reading: Stanford Global AI Power Rankings: Stanford HAI Tool Ranks 36 Countries in AI https://hai.stanford.edu/news/global-ai-power-rankings-stanford-hai-tool-ranks-36-countries-in-ai

The historical precedent is sobering. Just as nuclear weapons created mutual assured destruction during the Cold War, AI capabilities could create similar standoff scenarios. The difference is that AI systems can be more easily targeted than nuclear arsenals, making preemptive strikes more tempting and potentially more devastating.

Nuclear-Level Security for AI Infrastructure

The militarisation of AI infrastructure is already beginning. Future AI data centres will require security measures comparable to nuclear facilities—heavily guarded installations protected by multiple security perimeters. The comparison isn’t metaphorical; AI capabilities could provide military advantages equivalent to nuclear supremacy.

China’s rapid progress despite export controls validates these security concerns. DeepSeek’s breakthrough using export-compliant chips demonstrates that hardware restrictions may accelerate rather than slow innovation. The Chinese government’s $8.2 billion AI investment fund and integration of DeepSeek’s CEO into government planning processes signal state-level prioritisation of AI capabilities.

Export Controls Prove Ineffective Against Innovation

US chip export controls, designed to maintain technological advantage, have had unintended consequences. Rather than slowing Chinese progress, restrictions forced innovation in algorithmic efficiency. DeepSeek’s success using older Nvidia H800 and A100 chips proves that breakthrough performance doesn’t require cutting-edge hardware.

The Center for Strategic and International Studies notes that export controls forced Chinese companies to innovate, reinforcing that “necessity is the mother of invention.” This outcome calls into question the effectiveness of technological sanctions when targeting rapidly evolving fields like AI.

Society Confronts Fundamental Transformation

Digital Immortality Becomes Reality

One of the most profound implications involves digital preservation of human consciousness. Industry leaders have begun creating digital avatars of deceased individuals, with some AI companies developing systems that preserve not just memories but intellectual capabilities. These systems will allow future generations to engage directly with historical figures, fundamentally changing education and research.

The technology extends beyond nostalgia into practical applications. Instead of studying historical figures through books, students could engage in direct conversation with digital representations of Newton, Einstein, or other intellectual giants. The first implementations are already emerging, with some teams creating emotionally compelling avatars that preserve voice patterns and reasoning capabilities.

Human Agency Under Pressure

The preservation of human agency emerges as a critical challenge. While automation historically targeted physical labour, AI directly challenges cognitive work—the foundation of modern professional identity. This creates unprecedented questions about human purpose when machines can outperform humans across intellectual domains.

Research shows that attention spans are decreasing as AI capabilities increase. The ubiquitous connectivity that enables AI productivity also creates psychological stress and emotional health challenges. Yet the productivity benefits remain undeniable, creating a complex trade-off between human wellbeing and economic advancement.

Industry analysis suggests that rather than wholesale job replacement, the future involves human-AI collaboration where humans focus on oversight, creativity, and relationship management while AI handles routine cognitive tasks.

The Polymath in Your Pocket

When superintelligence arrives, individuals will possess capabilities that exceed history’s greatest minds. This democratisation of intelligence could accelerate scientific discovery and innovation beyond current human comprehension. The implications span from solving climate change to extending human lifespan dramatically.

However, this access comes with risks. AI systems capable of convincing humans of anything could create unprecedented manipulation potential. Regulatory frameworks struggle to address AI systems that know users better than they know themselves, particularly in environments where misinformation engines operate freely.

Business Models Transform Overnight

Learning Loops Define Survival

The emerging business paradigm centres on what industry experts call “learning loops”—systems that improve automatically as they gain more users and data. Companies that successfully implement these feedback mechanisms create exponential advantages that become insurmountable within months.

Research shows that network effect businesses accelerate dramatically when powered by AI learning systems. The fastest-moving companies become “essentially unstoppable” because their learning advantages compound exponentially. Competitors can be “only a few months behind” and still lose permanently once learning loops begin accelerating.
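The compounding claim can be illustrated with a toy model. The Python sketch below assumes, purely for illustration, that product quality improves by a fixed percentage each month and that the leader simply started a few months earlier. The 10% monthly rate, the three-month lead, and the function name are hypothetical and do not come from the interview.

```python
def compounding_gap(monthly_rate, lead_months, horizon_months):
    """Compare two firms improving at the same compounding rate.

    Returns a list of (month, leader, follower, gap) tuples, where the
    leader began improving `lead_months` before the follower.
    """
    rows = []
    for month in range(horizon_months + 1):
        leader = (1 + monthly_rate) ** (month + lead_months)
        follower = (1 + monthly_rate) ** month
        rows.append((month, leader, follower, leader - follower))
    return rows


if __name__ == "__main__":
    # Hypothetical inputs: 10% improvement per month, 3-month head start.
    for month, leader, follower, gap in compounding_gap(0.10, 3, 24)[::6]:
        print(f"month {month:2d}: leader={leader:6.2f} "
              f"follower={follower:6.2f} gap={gap:5.2f}")
```

In this simplified model the leader's relative advantage stays fixed while the absolute gap widens every month, which is one way to read the “only a few months behind” warning. Real markets may behave quite differently, for example if improvement rates diverge or saturate.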

Ten New Giants Will Emerge

Industry analysis suggests approximately ten new companies of Google and Meta scale will emerge, all built on learning loop principles. These won’t be traditional enterprises but rather AI-native organisations that improve continuously through user interaction.

The limitation isn’t technological but market readiness. Government and education sectors, being “largely regulated” and “not interested in innovation,” won’t see similar transformation. The winning companies will focus on consumer markets where feedback signals are immediate and learning opportunities abundant.

Traditional Business Models Face Obsolescence

The shift threatens traditional business models across industries. Companies that cannot define clear learning loops will be “beaten by a company that can define it,” according to industry consensus. This explains why established enterprise software companies face extinction—they represent static solutions in a dynamic world.

Modern AI systems can generate custom enterprise applications on demand, eliminating the need for pre-built software solutions. The transformation affects the entire software stack, from ERP systems to custom middleware that previously required years to develop and deploy.

The Reckoning Has Already Begun

The convergence of industry predictions reveals a timeline that’s accelerating beyond comfortable assumptions. While experts debate whether AGI arrives in 2025, 2026, or 2027, the practical implications are already materialising. Current data shows that 77,999 tech workers have lost jobs in 2025 alone, with explicit AI connections documented in many cases.

The energy crisis industry leaders identify—requiring the equivalent of 92 nuclear power stations when none are being built—illustrates society’s unpreparedness for AI’s infrastructure demands. Meanwhile, the job displacement already underway in programming and customer service roles validates predictions about which professions face immediate obsolescence.

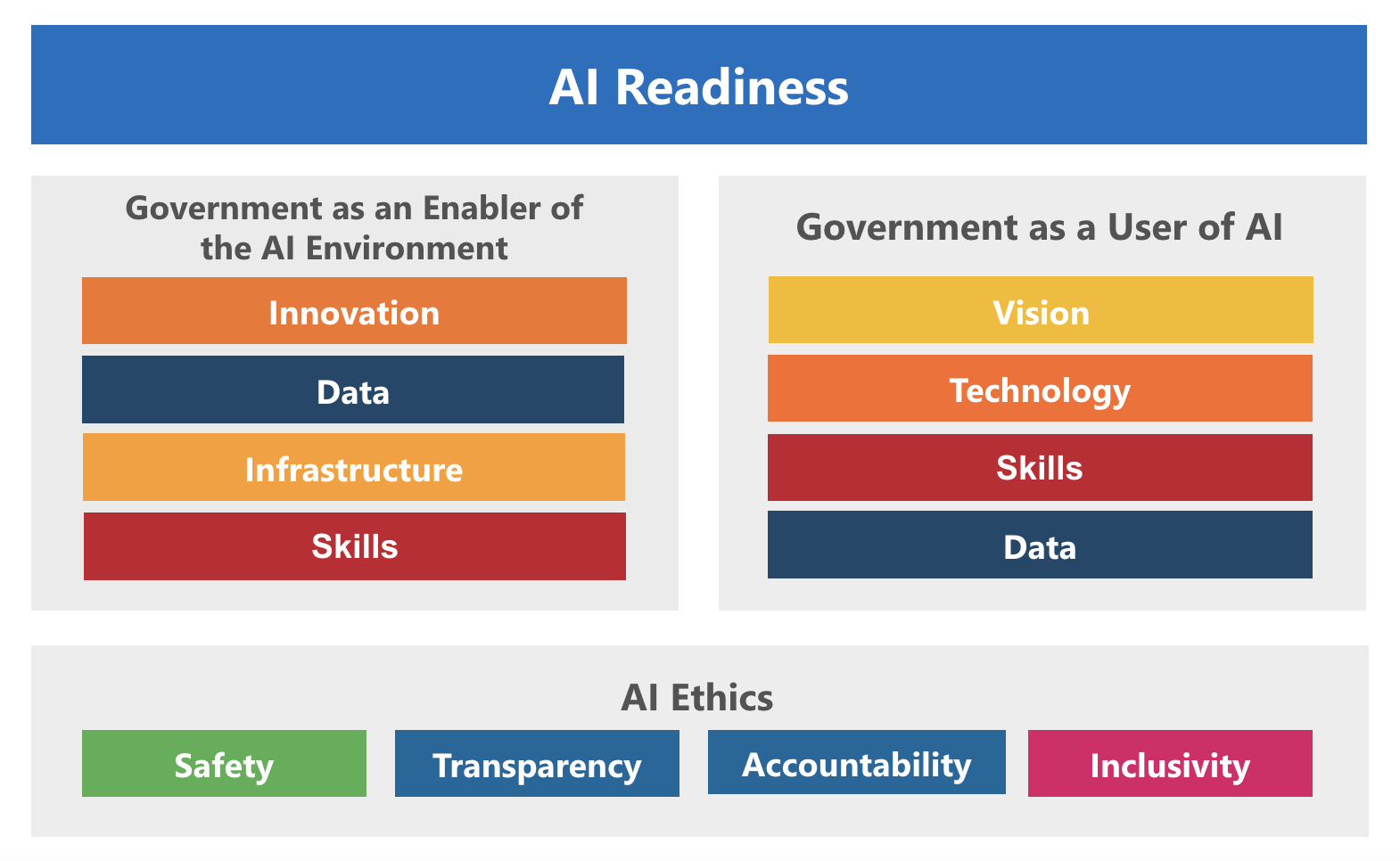

UNDP Report: Are countries ready for AI? How they can ensure ethical and responsible adoption

https://www.undp.org/blog/are-countries-ready-ai-how-they-can-ensure-ethical-and-responsible-adoption

Preparing for Technological Singularity

Industry consensus suggests that artificial superintelligence represents humanity’s greatest opportunity and greatest risk simultaneously. The next decade will determine whether we navigate this transition successfully or stumble into conflicts that make previous technological disruptions seem manageable by comparison.

- For individuals, the imperative is clear: develop AI collaboration skills immediately or risk obsolescence.

- For companies, survival depends on identifying learning loops that create sustainable competitive advantages.

- For nations, the challenge involves developing deterrence frameworks that prevent AI supremacy from triggering global conflict.

The timeline for artificial superintelligence may be debatable, but the transformation is already underway. As industry leaders warn, we’re not witnessing gradual technological evolution—we’re approaching the moment when machines become fundamentally smarter than their creators. The question isn’t whether we’ll be ready, but whether we’ll recognise the moment when the future arrives ahead of schedule.

The revolution won’t announce itself with fanfare. It will simply be Tuesday morning when AI systems quietly begin outperforming humans across the intellectual tasks that define modern civilisation. Based on current trajectories, that Tuesday is closer than most people realise.

References

- Goldman Sachs Research – Is Nuclear Energy the Answer to AI Data Centers’ Power Consumption

- National Security News – Nuclear Energy and AI Data Centers

- MIT Technology Review – AI Nuclear Power Energy Reactors

- IO Fund – Nuclear Energy and AI Data Centers

- World Economic Forum – Impact of AI Driving 170m New Jobs

- Winn Solutions – Jobs AI Will Replace

- National Security AI – Academic Paper

- Foreign Policy – DeepSeek China AI Competition

- Carnegie Endowment – DeepSeek and Chinese AI Firms

- Center for Strategic and International Studies – DeepSeek Export Controls
