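The article argues that social engineering will become one of the most reliable, scalable and far-reaching intrusion methods in 2025. Its success stems from the rise of high-touch attacks, the use of non-phishing techniques, low detection coverage, growing business disruption and assistance from artificial intelligence. Defense requires stronger identity verification, Zero Trust principles and behavioral analytics capabilities.

2025-07-30 10:00:33 Author: unit42.paloaltonetworks.com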

Executive Summary

We see social engineering evolving into one of the most reliable, scalable and impactful intrusion methods in 2025 for five key reasons:

First, social engineering remained the top initial access vector in Unit 42 incident response cases between May 2024 and May 2025. These attacks consistently bypassed technical controls by targeting human workflows, exploiting trust and manipulating identity systems. More than one-third of social engineering incidents involved non-phishing techniques, including search engine optimization (SEO) poisoning, fake system prompts and help desk manipulation.

Second, high-touch attacks are on the rise. Threat actors such as Muddled Libra bypass multi-factor authentication (MFA) and exploit IT support processes to escalate privileges in minutes, often without malware. In one case, a threat actor moved from initial access to domain administrator in under 40 minutes using only built-in tools and social pretexts.

Third, low detection coverage and alert fatigue remain key enablers. In many cases, social engineering attacks succeeded not through advanced tradecraft but because organizations missed or misclassified critical signals, particularly in identity recovery workflows and along lateral movement paths.

Fourth, business disruption resulting from these attacks continues to grow. Over half of social engineering incidents led to sensitive data exposure, while other incidents interrupted critical services or affected overall organizational performance. These high-speed attacks are designed to deliver high financial returns while requiring minimal infrastructure or risk.

Fifth, artificial intelligence (AI) is accelerating both the scale and realism of social engineering campaigns. Threat actors are now using generative AI to craft personalized lures, clone executive voices in callback scams and maintain live engagement during impersonation campaigns.

Beneath these trends lie three systemic enablers: over-permissioned access, gaps in behavioral visibility and unverified user trust in human processes. Identity systems, help desk protocols and fast-track approvals are routinely exploited by threat actors mimicking routine activity.

To counter this, security leaders must move beyond traditional user awareness and recognize social engineering as a systemic, identity-centric threat. This transition requires:

- Implementing behavioral analytics and identity threat detection and response (ITDR) to proactively detect credential misuse.

- Securing identity recovery processes and enforcing conditional access.

- Expanding Zero Trust principles to encompass users, not just network perimeters.

Social engineering works not because attackers are sophisticated, but because people still trust too easily, compromising organizational security.

Introduction

Social engineering continues to dominate the threat landscape. Over the past year, more than a third of the incident response cases my team handled began with a social engineering tactic. These intrusions didn’t rely on zero-days or sophisticated malware. They exploited trust. Attackers bypassed controls by impersonating employees, manipulating workflows and taking advantage of gaps in how organizations manage identity and human interaction.

What stands out this year is how sharply these attacks are evolving. Unit 42 is tracking two distinct models, both designed to bypass controls by mimicking trusted activity:

- High-touch compromise that targets specific individuals in real time. Threat actors impersonate staff, exploit help desks and escalate access without deploying malware, often using voice lures, live pretexts, and stolen identity data.

- At-scale deception, including ClickFix-style campaigns, SEO poisoning, fake browser prompts and blended lures that trigger user-initiated compromise across multiple devices and platforms.

Our team has seen attackers move from gaining initial access to becoming a domain administrator in under an hour, using only built-in tools and a convincing story.

These aren’t edge cases. They’re repeatable, reliable techniques that adversaries are refining week after week. One of the most revealing trends we’ve observed is the rise of non-phishing vectors. This includes voice-based lures, spoofed browser alerts and direct manipulation of support teams. In many environments, these tactics enable attackers to slip through undetected, exfiltrate data and cause significant operational harm.

This report brings together intelligence from Unit 42 threat researchers, telemetry from real-world intrusions, and insight from the front lines of our global incident response (IR) practice. The goal of this report is to help you understand how social engineering actually works in 2025 and what it takes to stop it.

One thing is clear: adversaries aren’t just hacking systems; they’re hacking people.

High-Touch, High-Impact Compromise

How Attackers Breach Trust

A growing class of attacks targets individuals using tailored, real-time manipulation, often within large enterprises where identity systems and human workflows are more complex. These organizations present a richer set of access points, from federated identity platforms to distributed support operations, which attackers exploit to escalate access discreetly. Enterprises’ size and complexity make it easier for malicious activity to blend in with routine requests, increasing the time to detection.

High-touch operations are often financially motivated, driven by threat actors who invest time and research to breach identity defenses without triggering alerts. They impersonate employees, exploit trust and escalate quickly from user-level access to privileged control. We have investigated multiple high-impact cases where attackers bypassed MFA and convinced help desk staff to reset credentials. In one recent case, the attacker progressed from gaining initial access to obtaining domain administrator rights in minutes, without deploying malware at all.

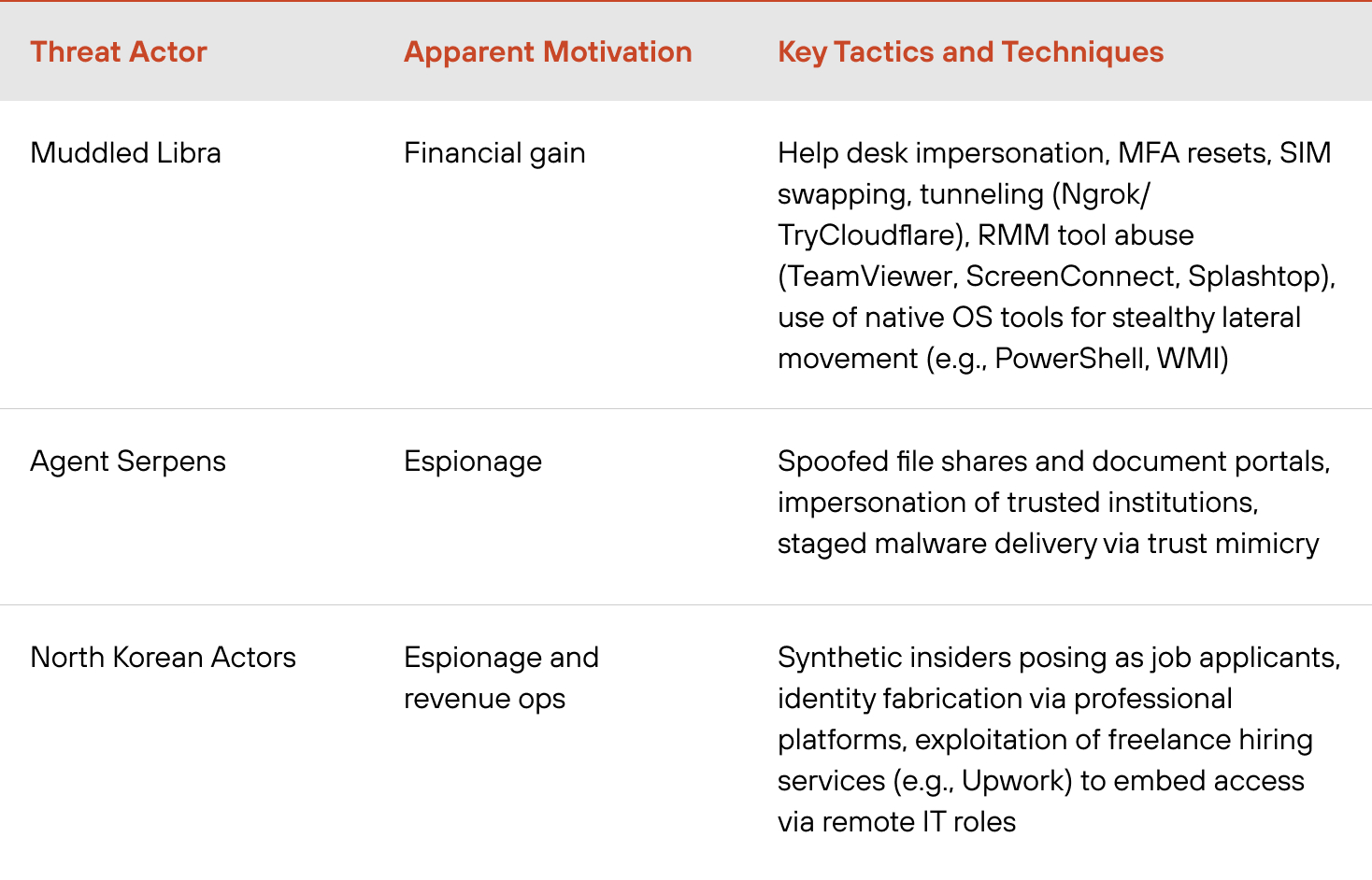

Groups such as Muddled Libra, a global, financially motivated cybercrime operation, exemplify this model. Instead of phishing broadly, these attackers identify key personnel, build a profile using public data and impersonate them convincingly. As a result, these groups gain deep access, broad system control and the ability to monetize attacks quickly.

Not all high-touch operations are profit-driven. We have also tracked state-aligned actors using similar tactics for espionage and strategic infiltration. Campaigns attributed to state-aligned threat actors such as Iran-affiliated Agent Serpens and threat groups from North Korea have relied on spoofed institutional identities, custom-crafted lures and counterfeit documentation to compromise diplomatic and public sector targets.

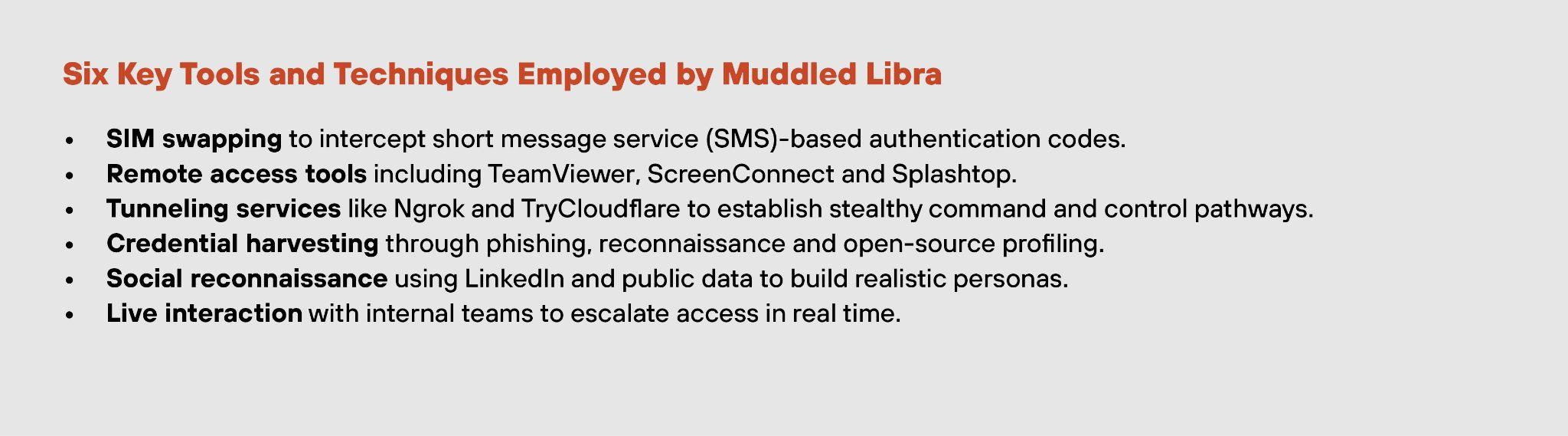

Profile #1: Muddled Libra

Among financially motivated actors, few have demonstrated the persistence, adaptability and technical fluency of Muddled Libra. Tracked by Unit 42 across multiple IR cases and threat research streams, this group is known for its ability to move from initial access to domain-level control in under 40 minutes, without relying on malware or exploits.

Muddled Libra operates within a broader cluster of financially motivated actors that bypass technical controls by exploiting identity systems directly. Muddled Libra stands out, however, for its persistence, speed and human-led tradecraft.

The group doesn’t just phish for credentials. It impersonates employees in real time, often targeting help desk staff to reset MFA, take over identities and gain access to internal systems. Once inside, Muddled Libra leverages remote monitoring and management (RMM) tools to maintain persistent access and establish control.

Its infrastructure is lightweight and evasive. For command and control (C2), we have observed repeated misuse of tunneling services, allowing the group to operate from outside the network perimeter without raising immediate red flags.

MFA resets, subscriber identity module (SIM) swapping and the misuse of public-facing trust signals are recurring patterns. In several cases, attackers gathered detailed personal data from sources like LinkedIn to build convincing personas, increasing their chances of bypassing identity verification steps.

Profile #2: Nation-State Actors

High-touch social engineering is not exclusive to cybercriminal groups. Nation-state actors have long relied on human-first tradecraft to achieve strategic access, whether for espionage, surveillance or geopolitical disruption. Recent campaigns show that state-aligned intrusions increasingly mirror the same identity-centric methods seen in financially motivated operations.

Iran-affiliated actors are one such group. In the Agent Serpens campaigns we tracked, the group impersonated trusted institutions, often delivering malware via spoofed emails that mimicked legitimate document-sharing workflows.

North Korean actors have developed a distinct variation of high-touch compromise known as synthetic insiders. These campaigns involve attackers posing as job applicants, using fabricated curriculum vitae (CVs) and professional personas to secure remote employment in target organizations.

From Muddled Libra’s financially driven intrusions to the strategic targeting seen in Agent Serpens and North Korean campaigns, these attackers’ strategies remain consistent: manipulate people, mimic trust and escalate access without triggering detection.

Threat Actor Tactics, Techniques and Procedures (TTPs) and Tradecraft

High-Touch Intrusions in the Field

The following case studies, drawn from our IR caseload, illustrate how attackers bypass controls not through exploits but by navigating human systems with precision and intent.

Help Desk Deception Unlocks 350 GB Breach

Target: Customer records, proprietary documentation and internal files hosted in cloud storage. The attacker staged and exfiltrated over 350 GB of data without triggering endpoint detection and response (EDR) alerts. The attacker used no malware, only legitimate credentials and living-off-the-land binaries.

Technique: The attacker contacted the organization's help desk, impersonating a locked-out employee. Using a mix of leaked and publicly available details, the adversary passed identity checks and gained the agent’s trust, prompting a reset of MFA credentials. With access secured, the attacker logged in and moved laterally using legitimate administrative tools. Every action mimicked legitimate user behavior, deliberately avoiding triggers that might raise alerts.

Rapport-Building To Bypass the Help Desk

Target: Internal corporate systems. The attacker sought employee-level access to escalate privileges and stage a broader compromise. The intrusion was contained shortly after login.

Technique: Over several days, the attacker made repeated low-pressure calls to the help desk, each time impersonating a locked-out employee. They gathered process details and refined their pretext with each attempt. Once the story aligned with internal escalation protocols, they issued a time-sensitive request that prompted an MFA reset to grant access. The attacker logged in successfully but was flagged minutes later due to geographic anomalies.
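The geographic anomaly that exposed this attacker is commonly detected with an "impossible travel" check: compare consecutive logins for the same account and flag them when the implied travel speed is implausible. The sketch below is illustrative only and is not the detection logic used in this case. It assumes a hypothetical `Login` record carrying coordinates geolocated from the source IP, an assumed 900 km/h speed ceiling and an assumed 50 km jitter allowance.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # illustrative: roughly commercial airliner cruise speed
MIN_DISTANCE_KM = 50.0     # illustrative: ignore IP-geolocation jitter between nearby points

@dataclass
class Login:
    user: str
    when: datetime
    lat: float  # latitude geolocated from the source IP (assumed available)
    lon: float  # longitude geolocated from the source IP

def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations, in kilometers."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def impossible_travel(prev: Login, curr: Login) -> bool:
    """Flag consecutive logins for one account whose implied travel speed is implausible."""
    if prev.user != curr.user:
        raise ValueError("compare logins for the same account only")
    distance = haversine_km(prev, curr)
    if distance < MIN_DISTANCE_KM:
        return False  # effectively the same place
    hours = (curr.when - prev.when).total_seconds() / 3600
    if hours <= 0:
        return True  # simultaneous logins from distant places
    return distance / hours > MAX_PLAUSIBLE_KMH
```

For example, a London login followed 30 minutes later by a New York login for the same account implies a speed far above the ceiling and is flagged. The same pair spaced ten hours apart is not flagged.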

Executive MFA Reset Blocked by Conditional Access

Target: Mid-level executive credentials with broad system permissions. The attacker aimed to use these credentials to access sensitive business data and perform reconnaissance via cloud APIs. The attempt was contained before data exfiltration, thanks to conditional access controls.

Technique: After several failed phishing attempts, the attacker called IT support, impersonating the executive and citing travel-related access issues. The pretext was convincing enough to prompt an MFA reset. With fresh credentials, the attacker initiated Graph API queries to enumerate permissions, group memberships and file paths. However, the conditional access policy flagged the session due to an unusual login from an unrecognized device and location, blocking further escalation.

These case studies show that high-touch attackers succeed not by breaking systems, but by understanding them. They manipulate processes, personnel and platforms across industries in ways that appear routine until it is too late.

How AI Is Shaping the Social Engineering Threat Landscape

Recent months have seen a shift in how AI and automation are being used in social engineering campaigns. While conventional techniques remain dominant, some attackers are now experimenting with tools that offer greater speed, realism and scalability.

Unit 42’s incident response cases point to three distinct layers of AI-enabled tooling:

- Automation: Tools in this category follow predefined rules to accelerate common intrusion steps, such as phishing distribution, spoofed SMS delivery and basic credential testing. These capabilities are not new, but they are increasingly configured to mimic enterprise workflows and bypass common detection measures.

- Generative AI (GenAI): Used to produce credible, human-like content across channels including email, voice and live chat. In multiple investigations, threat actors employed GenAI to craft highly personalized lures using public information. Some campaigns went further, using cloned executive voices in callback scams to increase the plausibility of urgent phone requests. In more sustained intrusions, AI was used to refine attacker personas, generate tailored phishing follow-ups and draft real-time responses. These adaptive techniques allowed threat actors to maintain engagement across multiple stages of the intrusion, with a tone and timing that previously required a live operator.

- Agentic AI: Refers to role-based, context-aware systems capable of autonomously executing multi-step tasks with minimal human input and learning from feedback. While adoption remains limited, we observed agentic AI being used to chain activities such as cross-platform reconnaissance and message distribution. In one case, attackers built multi-layered synthetic identities (including fake CVs and social media profiles) to support fraudulent job applications in a targeted insider campaign.

This spectrum of use reflects a hybridization of tactics, where conventional social engineering methods are increasingly supported by AI-enabled components. Adversary adoption of AI remains uneven, but the underlying shift is clear: automation and AI are beginning to reshape the scale, pacing and adaptability of social engineering attacks.

At-Scale Attacks

At-Scale Deception: The Rise of ClickFix Campaigns

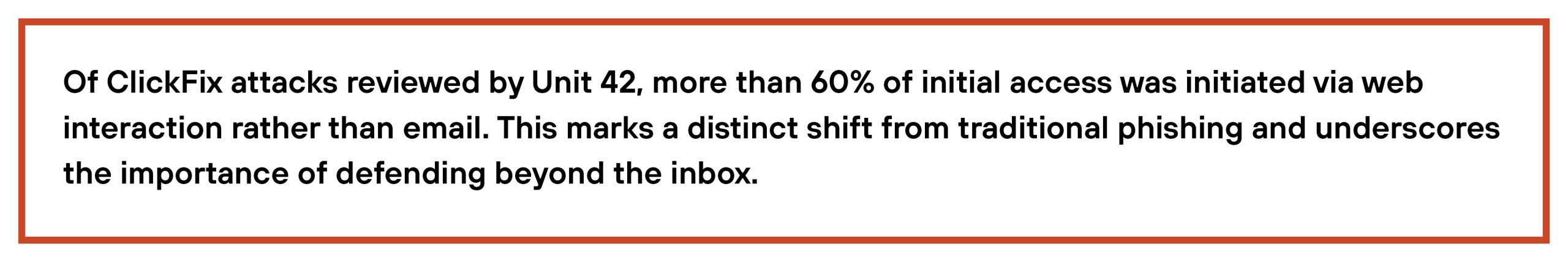

Social engineering has evolved into a scalable, automated ecosystem that mimics trusted signals and exploits familiar workflows. One example is ClickFix, a technique using fake browser alerts, fraudulent update prompts and drive-by downloads to initiate compromise. Between May 2024 and May 2025, ClickFix was the initial access vector in at least eight confirmed IR cases.

Delivery Mechanisms: SEO Poisoning, Malvertising and Fraudulent Prompts

ClickFix campaigns don’t rely on a single delivery method. Instead, they exploit multiple entry points. We have observed these campaigns using SEO poisoning, malvertising and fraudulent browser alerts to lure users into initiating the attack chain themselves.

In one confirmed IR case, the threat actor leveraged SEO poisoning to plant a malicious link high in search engine results. When an employee searched for a software installer, they were redirected to a spoofed landing page that triggered a payload download. Malvertising plays a similar role, delivering fake “click to fix” banners via ad networks or pop-ups that mimic trusted software brands.

Another growing vector is fraudulent system alerts, crafted to mimic legitimate browser or operating system warnings. In one healthcare example, an employee encountered what appeared to be an authentic Microsoft update notification while accessing an internal system from home. The link led to the download of a loader, which executed an infostealer and enabled credential harvesting.

These delivery mechanisms share three core attributes:

- Trust mimicry

- User initiation

- Platform agnosticism

Because the user initiates the action (by clicking a link, downloading a file or responding to a prompt), the attack often bypasses traditional perimeter defenses and evades early detection by endpoint tools.

Common ClickFix Payloads and Behavioral Patterns

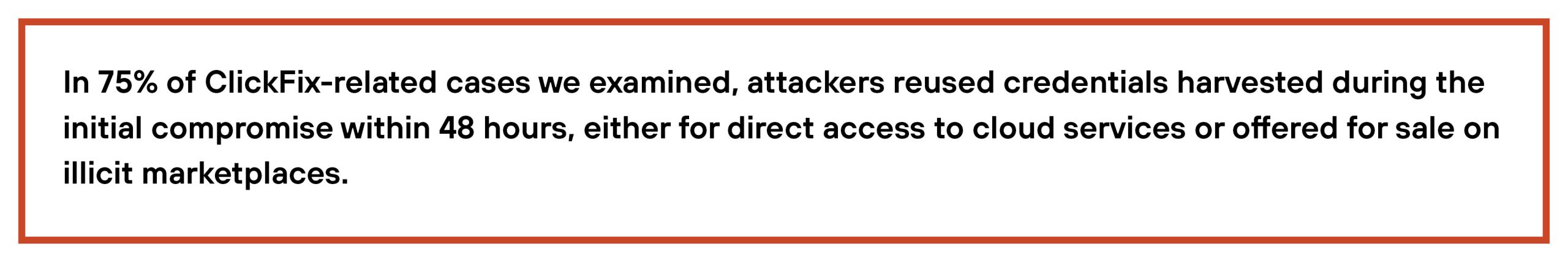

ClickFix campaigns follow a consistent behavioral pattern that prioritizes speed, credential theft and staging for monetization. The payloads vary, but their objectives are tightly aligned: establish a foothold, extract value and avoid detection.

Credential-harvesting malware is the most common first-stage payload. In many cases, we identified the use of off-the-shelf stealers such as RedLine or Lumma, deployed immediately after a user downloaded a malicious installer or responded to a spoofed update prompt. These tools are configured to extract browser-stored credentials, saved tokens and session cookies.
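One way to hunt for the stealer behavior described above is to flag non-browser processes that open browser credential and cookie stores. The sketch below assumes a hypothetical file-access event (accessing process path plus file path). It uses well-known Chromium store names (`Login Data`, `Cookies`, `Web Data`) and Firefox store names (`logins.json`, `key4.db`, `cookies.sqlite`) as examples. Real EDR telemetry schemas differ, and browser file layouts change between versions.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

# Browser executables expected to read their own stores (illustrative list).
BROWSER_IMAGES = {"chrome.exe", "msedge.exe", "brave.exe", "firefox.exe"}

# Credential and cookie store file names (Chromium and Firefox examples).
SENSITIVE_STORE_NAMES = {
    "login data", "cookies", "web data",        # Chromium
    "logins.json", "key4.db", "cookies.sqlite",  # Firefox
}

@dataclass
class FileAccessEvent:
    process_image: str  # full path of the process that opened the file
    file_path: str      # full path of the file that was opened

def is_suspicious_store_access(event: FileAccessEvent) -> bool:
    """True when a non-browser process opens a browser credential or cookie store.

    Matching on image name alone can be evaded by malware named chrome.exe;
    a production rule would also check the image path and code signer.
    """
    image = PureWindowsPath(event.process_image).name.lower()
    target = PureWindowsPath(event.file_path).name.lower()
    return target in SENSITIVE_STORE_NAMES and image not in BROWSER_IMAGES
```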

Lampion, a banking Trojan analyzed by Unit 42 researchers, exemplifies the evolution of these payloads. Earlier Lampion campaigns relied on email lures, while newer variants use SEO poisoning and compromised websites for distribution, blurring the lines between phishing and ClickFix-style delivery. The retail sector is a common target for these kinds of attacks. In one retail case, a fake update prompt led an employee to unknowingly install a remote administration Trojan that allowed the attacker to observe and eventually control the victim’s device. Within 90 minutes, the attacker had captured credentials for the organization’s order management system and staged outbound data transfers.

Modular, Escalating Toolchains

ClickFix attacks often use payloads that escalate in stages, depending on the value of the environment:

- Begin with lightweight credential harvesters to gather intelligence.

- Deploy silent loaders to deliver additional tools only if access to sensitive systems is confirmed.

- Follow up with further payloads, which can include:

  - Remote access Trojans (RATs)

  - Infostealers

  - Encryption modules or wipers

Behavioral consistency is what makes these campaigns effective and repeatable. The payloads are rarely novel, but their delivery and timing are calibrated to minimize risk and maximize utility. In many cases, the attackers relied on well-worn techniques (not zero-days or custom exploits) because the system’s users, not its software, were the point of entry.

ClickFix Isn’t Sophisticated: It's Systematic

Most ClickFix campaigns used publicly available tools repurposed for credential theft or remote access. What set them apart was not malware sophistication, but precision delivery, legitimate-looking prompts, high-trust delivery paths and low-friction execution.

Strategic Takeaways

ClickFix is a blueprint for scalable deception. To defend against it, we advise security leaders to:

- Monitor for early-stage access patterns, especially credential harvesters and fileless loaders.

- Harden endpoints against commonly misused malware families, even those considered commodity-grade.

- Track and control software installation privileges. Many incidents began with user-approved downloads.

- Expand detection beyond email to include web-based delivery, SEO manipulation and spoofed system prompts.

- Establish credential hygiene practices and limit reuse across systems to prevent chained exposure.
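To make the first takeaway concrete: because ClickFix chains start with a user action in the browser, a common hunting heuristic is to flag a browser process that directly spawns a script host or living-off-the-land binary. The sketch below assumes a hypothetical process-creation event with parent path, child path and command line. The binary lists are illustrative, not exhaustive, and some legitimate software will occasionally match.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

# Browsers from which a user-initiated chain typically starts (illustrative).
BROWSERS = {"chrome.exe", "msedge.exe", "firefox.exe", "brave.exe"}

# Script hosts and living-off-the-land binaries often abused by loaders (not exhaustive).
SCRIPT_HOSTS = {
    "powershell.exe", "pwsh.exe", "cmd.exe", "mshta.exe",
    "wscript.exe", "cscript.exe", "rundll32.exe", "regsvr32.exe",
}

@dataclass
class ProcessStart:
    parent_image: str  # full path of the parent process
    child_image: str   # full path of the newly started process
    command_line: str  # command line of the child process

def _name(image: str) -> str:
    """Lower-cased executable name from a Windows path."""
    return PureWindowsPath(image).name.lower()

def triage(event: ProcessStart) -> str:
    """Return 'high', 'medium' or 'none' for one process-creation event."""
    if _name(event.parent_image) not in BROWSERS or _name(event.child_image) not in SCRIPT_HOSTS:
        return "none"
    cmd = event.command_line.lower()
    # Encoded commands or inline URLs suggest a loader hiding or fetching its next stage.
    if "-enc" in cmd or "http://" in cmd or "https://" in cmd:
        return "high"
    return "medium"
```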

Social Engineering by the Numbers

In this section, we turn from tactics to telemetry to examine the technical and organizational patterns behind social engineering’s success.

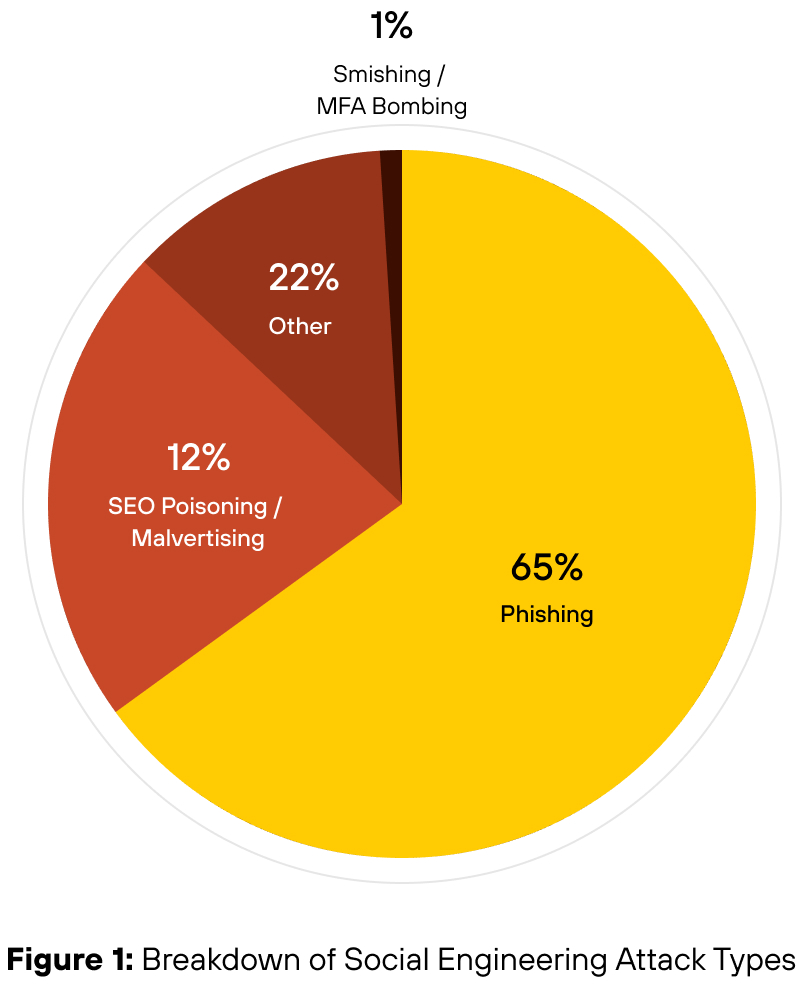

As reported in January, phishing accounted for 23% of all intrusions, and that share holds for the data set used in this report. When only social engineering-driven intrusions are considered, phishing rises to 65% of those cases (see Figure 1).

Of those phishing-driven social engineering cases:

- 66% targeted privileged accounts.

- 23% involved callback or voice-based techniques.

- 45% used impersonation of internal personnel to build trust.
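The two phishing shares can be cross-checked against the report's other figures. This worked arithmetic rests on one assumption that the data does not state: every phishing case is also a social engineering case. Under that assumption, the overall social engineering share follows from P(phishing) = P(phishing | SE) × P(SE). Because the inputs are rounded percentages, the result is approximate.

```python
def implied_overall_share(phishing_share_of_all: float, phishing_share_of_se: float) -> float:
    """Estimate the share of all intrusions that began with social engineering.

    Assumes every phishing case is also a social engineering case, so
    P(phishing) = P(phishing | SE) * P(SE), hence P(SE) = P(phishing) / P(phishing | SE).
    """
    return phishing_share_of_all / phishing_share_of_se

se_share = implied_overall_share(0.23, 0.65)
non_phishing_share_of_se = 1 - 0.65

print(f"Social engineering share of all intrusions: {se_share:.1%}")             # 35.4%
print(f"Non-phishing share of social engineering: {non_phishing_share_of_se:.0%}")  # 35%
```

The implied 35.4% agrees with the introduction's "more than a third" of cases, and the 35% non-phishing remainder matches the share of less conventional vectors reported below.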

Our data reveals six key patterns behind the continued success of social engineering attacks — and how they’re bypassing defenses.

Initial Access: Social Engineering Remains the Top Tactic

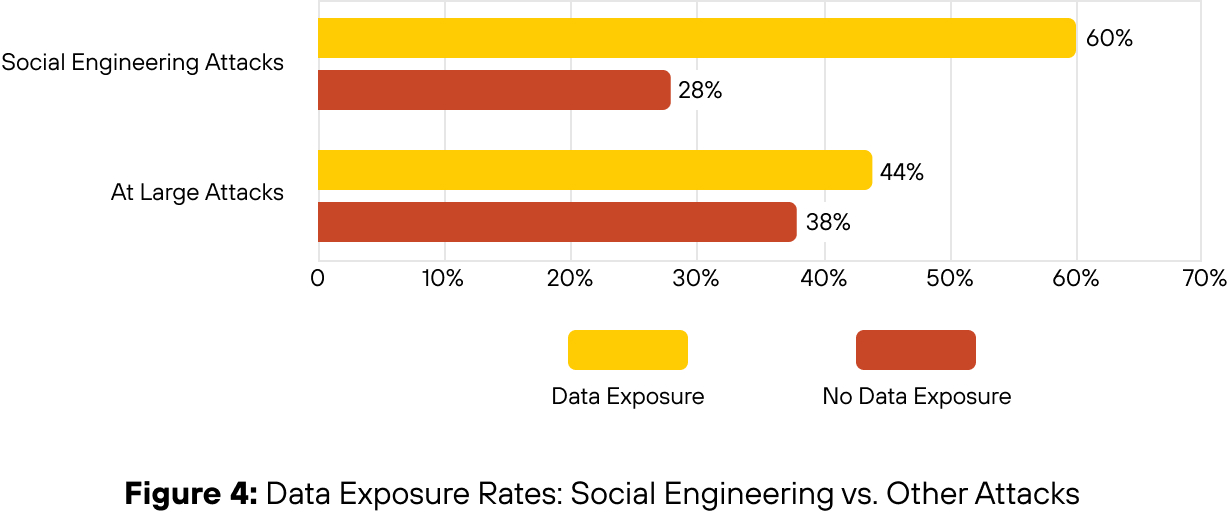

Social engineering dominated initial access methods in our IR caseload between May 2024 and May 2025 (see Figure 4).

Key Insight

Unlike technical exploits that rely on unpatched systems or zero-days, social engineering succeeds due to weak access controls, a sense of urgency, over-permissioned accounts and misplaced trust. These conditions persist in enterprise environments, even where modern detection tools are deployed.

Strategic Takeaways

Social engineering remains the most effective attack vector because it exploits human behavior, not technical flaws. It consistently bypasses controls, regardless of an organization’s maturity.

It’s time to move beyond user education as the primary defense. Treat social engineering as a systemic vulnerability that demands layered technical controls and strict Zero Trust verification.

Novel Vectors Are Gaining Ground

A combined 35% of social engineering cases involved less conventional methods, including SEO poisoning and malvertising, smishing and MFA bombing, and a growing set of other techniques (see Figure 1).

While phishing remains the primary delivery mechanism, these emerging methods show that attackers are adapting social engineering to reach users across platforms, devices and workflows where traditional email security is no longer a barrier.

Key Insight

The dominance of phishing masks a deeper shift: threat actors are broadening their playbook.

Strategic Takeaways

Social engineering defense must cover more than the inbox. Security leaders should ensure that detection extends to mobile messaging, collaboration tools, browser-based vectors and quick response (QR) codes.

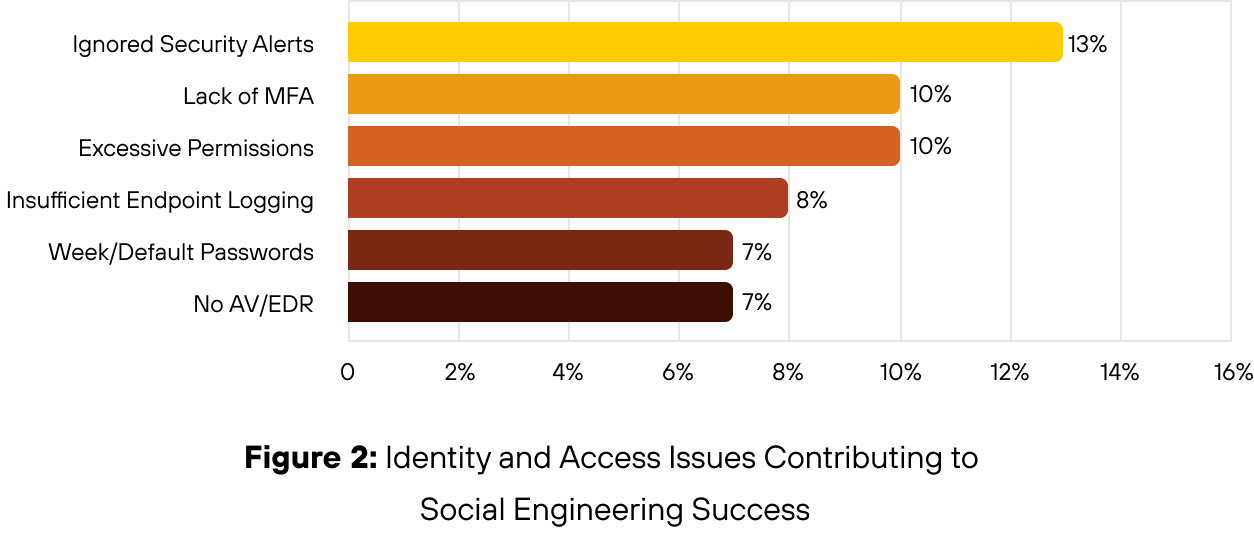

Missed Alerts, Weak Controls and Permission Overreach Fuel Social Engineering Intrusions

These weaknesses span industries, from manufacturing and healthcare to finance, professional services, retail and state and federal government. They also affect organizations of every size, from small businesses to large enterprises, underscoring just how widespread and systemic these issues are.

- In many cases, security alerts went unaddressed. Overburdened security operations teams missed, deprioritized or dismissed malicious logins, privilege escalations or alerts triggered by unusual device access until after compromise was confirmed.

- Excessive permissions widened the blast radius. In many cases, compromised accounts had access well beyond their operational role.

- Missing or incomplete MFA deployment featured in a large share of credential-based intrusions. Attackers were often able to authenticate successfully using harvested credentials without encountering any secondary verification.

Key Insight

Threat actors exploited control gaps that could have been closed.

Strategic Takeaways

Social engineering defense depends on detecting early-stage indicators and limiting access after compromise. Many early indicators are missed, not because they’re ignored, but because they’re misclassified.

- Prioritize alert logic to flag abnormal login patterns, MFA abuse and unusual SaaS access as high priority.

- Ensure that critical alerts are reviewed and escalated.

- Enforce MFA on all privileged and external-facing accounts.

- Tightly scope access entitlements and review them regularly.
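As an illustration of treating MFA abuse as high priority, the sketch below counts MFA push requests per user in a sliding window and raises an alert when a burst exceeds a threshold, which is the pattern behind MFA bombing. The event shape, the threshold of five pushes and the ten-minute window are illustrative assumptions and should be tuned against your own identity provider logs.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=10)  # illustrative look-back window
MAX_PUSHES = 5                  # illustrative: more than 5 pushes per window is a burst

class MfaBurstDetector:
    """Counts MFA push requests per user and flags bursts inside a sliding window."""

    def __init__(self, window: timedelta = WINDOW, max_pushes: int = MAX_PUSHES) -> None:
        self.window = window
        self.max_pushes = max_pushes
        self._pushes = defaultdict(deque)  # user -> timestamps of recent pushes

    def record_push(self, user: str, when: datetime) -> bool:
        """Record one push request and return True if the user is now over the threshold.

        Assumes events for a given user arrive in timestamp order.
        """
        pushes = self._pushes[user]
        pushes.append(when)
        # Evict requests older than the window, measured from the newest event.
        while when - pushes[0] > self.window:
            pushes.popleft()
        return len(pushes) > self.max_pushes
```

Six pushes to one user within six minutes trip the detector on the sixth request. Ten pushes spaced 15 minutes apart never do.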

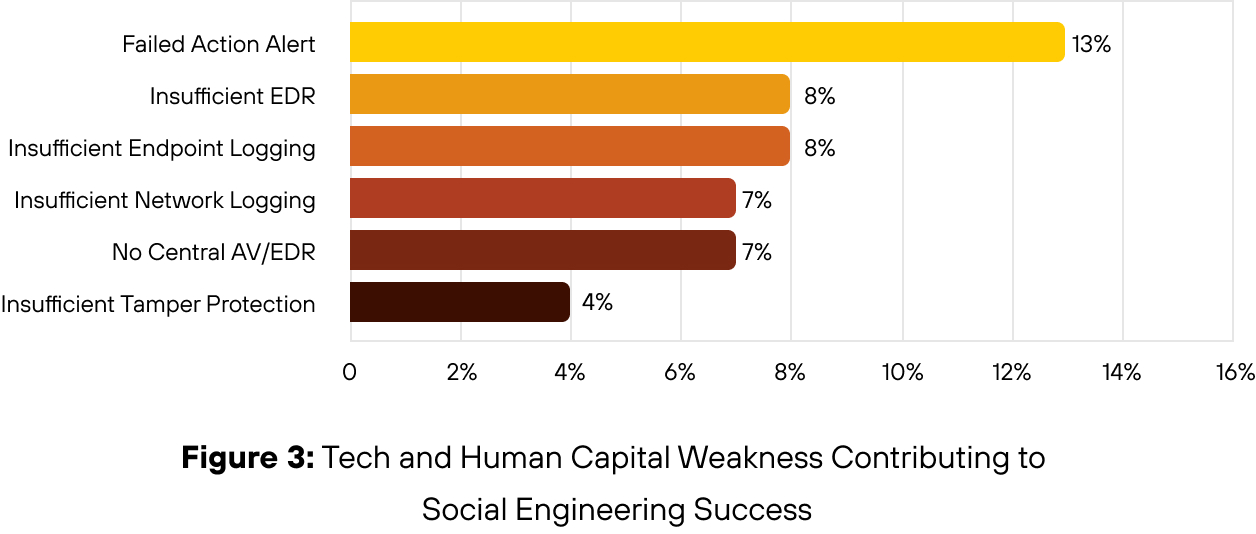

Weak Detection Technology and Under-Resourced Teams Amplify Social Engineering Risks

Social engineering intrusions rarely hinge on attacker sophistication alone. More often, they succeed when limited detection capabilities intersect with overburdened or under-trained teams. Our IR data highlights how technical weak points and staffing constraints create fertile ground for compromise.

The six most cited contributing factors reflect more than tooling gaps; they reveal systemic strain. Crucially, few organizations had implemented ITDR or user and entity behavior analytics (UEBA), two capabilities that are increasingly vital for detecting social engineering attacks and preventing account takeover. Enterprises relying solely on conventional logging and endpoint telemetry are likely to miss early indicators.

These weaknesses surfaced most often in four key areas:

- Failure to escalate alerts: In many cases, anomalous behavior was flagged but not escalated due to alert fatigue, unclear ownership or skill gaps within security teams.

- Failure to action alerts: In several cases, early warning signs were logged but not acted on, allowing attackers to progress unchecked.

- Insufficient EDR and endpoint logging: Without clear indicators or behavioral baselines, analysts struggled to distinguish between routine activity and signs of compromise.

- Lack of centralized antivirus/EDR and tamper protection: These gaps exposed unmanaged assets, which threat actors exploited to move laterally or disable controls undetected.

Key Insight

Security breakdowns stemmed from weak telemetry, unclear alert ownership, and strained frontline capacity, not attacker sophistication.

Strategic Takeaways

Strengthening the human layer requires strengthening the systems that support it:

- Ensure alerts are triaged with clear ownership and escalation paths.

- Prioritize expanding EDR to extended detection and response (XDR) for centralized detection with rich context.

- Address tooling gaps that leave unmanaged assets or disablement vectors exposed.
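Clear ownership and escalation paths can be made explicit in tooling rather than left to convention. The sketch below uses a hypothetical ownership map and illustrative acknowledgement deadlines per severity. It reassigns any unacknowledged alert to an escalation contact once its deadline passes, so the alert cannot age silently in a queue. The team names and deadlines are placeholders, not recommendations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical ownership map: alert category -> (primary owner, escalation contact).
OWNERS = {
    "identity": ("iam-oncall", "soc-lead"),
    "endpoint": ("edr-oncall", "soc-lead"),
}
DEFAULT_OWNER = ("soc-triage", "soc-lead")

# Illustrative acknowledgement deadlines by severity.
ACK_SLA = {"high": timedelta(minutes=15), "medium": timedelta(hours=4), "low": timedelta(hours=24)}

@dataclass
class Alert:
    category: str
    severity: str  # "high", "medium" or "low"
    raised_at: datetime
    acknowledged_at: Optional[datetime] = None

def current_owner(alert: Alert, now: datetime) -> str:
    """Return who is accountable for the alert at time `now`.

    Unacknowledged alerts past their deadline move to the escalation contact.
    """
    primary, escalation = OWNERS.get(alert.category, DEFAULT_OWNER)
    overdue = alert.acknowledged_at is None and now - alert.raised_at > ACK_SLA[alert.severity]
    return escalation if overdue else primary
```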

Initial access is only the beginning. In many cases, what starts as a single deceptive interaction results in wide-ranging data exposure and attackers are optimizing for that exact outcome.

Social Engineering Leads to Higher Rates of Data Exposure

Social engineering attacks led to data exposure in 60% of cases, according to our IR data. That is 16 percentage points higher than attacks involving other initial access vectors. This includes direct exfiltration as well as indirect exposure through credential theft, unauthorized access to internal systems or deployment of infostealers and remote access Trojans.

Key Insight

Roughly half of social engineering cases were business email compromises (BEC), and almost 60% of all BEC cases saw data exposure, showing that threat actors moved quickly from gaining access to exfiltrating data or harvesting credentials during these incidents. General network intrusions and ransomware were the other two top incident types in which data was exposed, and for both, social engineering ranked among the top two initial access vectors. This shows how widely the technique is used across different types of intrusions and actors.

Credential exposure was also a common precursor to broader data loss. In several cases, attackers reused compromised credentials to access file shares, customer systems or cloud environments. This chained exposure effect amplifies the impact of a single successful lure, turning one compromised identity into broader organizational risk.

Strategic Takeaways

Social engineering isn’t just an access problem; it’s a significant data-loss risk. To reduce exposure, security teams must improve visibility into user behavior after login, not just focus on preventing initial compromise. Key measures include:

- Restricting access to sensitive assets by default.

- Monitoring lateral movement and file access.

- Implementing just-in-time provisioning for privileged operations.

- Configuring data loss prevention (DLP) policies and data tagging to account for the likelihood of social engineering-driven access, especially via compromised accounts.
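Monitoring file access after login often starts with a per-user baseline. The sketch below flags a user whose daily file-read volume sits far above their own history, using a simple z-score. The input format (daily megabytes read), the 14-day minimum history and the three-standard-deviation threshold are illustrative assumptions. Production UEBA tools use considerably richer models.

```python
from statistics import mean, stdev

MIN_HISTORY_DAYS = 14  # illustrative: require some history before scoring
Z_THRESHOLD = 3.0      # illustrative alert threshold in standard deviations

def volume_zscore(history_mb: list[float], today_mb: float) -> float:
    """Standard score of today's file-read volume against the user's own history.

    Requires at least two history points (statistics.stdev needs two).
    """
    mu = mean(history_mb)
    sigma = stdev(history_mb)
    if sigma == 0:
        # Perfectly flat history: any increase is maximally unusual.
        return float("inf") if today_mb > mu else 0.0
    return (today_mb - mu) / sigma

def is_possible_staging(history_mb: list[float], today_mb: float) -> bool:
    """Flag a day whose read volume is far above the user's baseline."""
    if len(history_mb) < MIN_HISTORY_DAYS:
        return False  # not enough baseline to judge
    return volume_zscore(history_mb, today_mb) > Z_THRESHOLD
```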

Profit Remains the Primary Driver

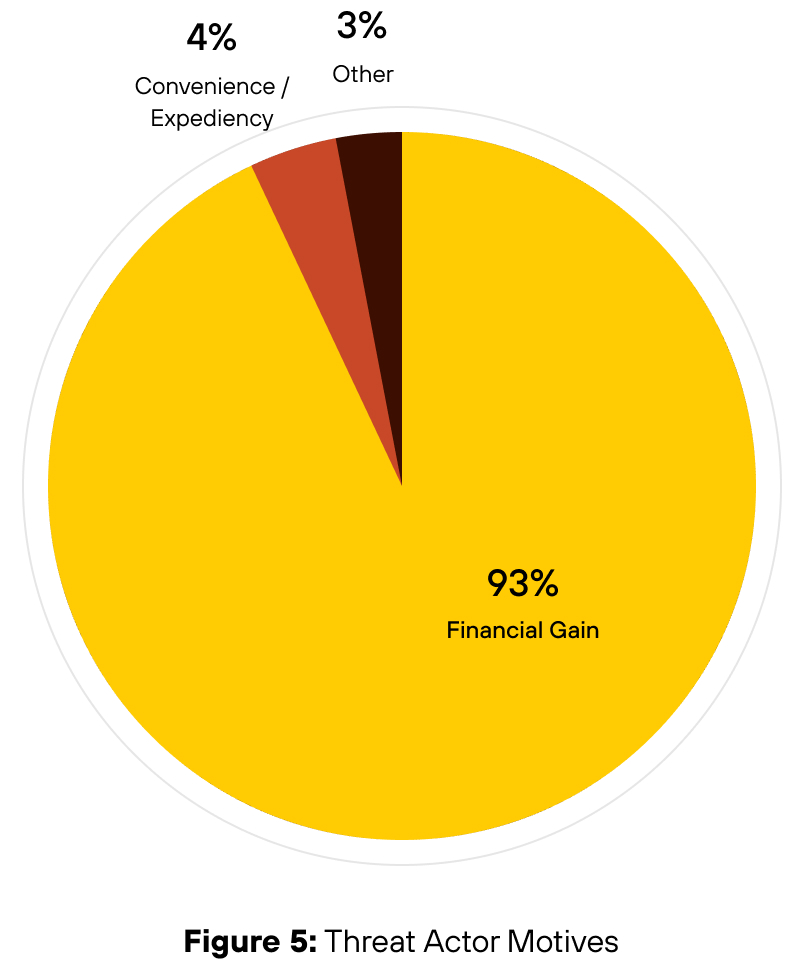

Financial gain is the dominant motive across Unit 42 incident response cases, and social engineering attacks are no exception. Nearly all social engineering intrusions between May 2024 and May 2025 were financially motivated (see Figure 5), with threat actors seeking to monetize access through data theft, extortion, ransomware or resale of credentials.

Key Insight

Social engineering remains a preferred access method because it is easy to execute, infrastructure-light and often avoids detection. Attackers reuse proven tools such as phishing kits and impersonation scripts to move from compromise to monetization in under 48 hours. By leveraging trust-based access to bypass hardened controls, social engineering offers attackers a better cost-benefit ratio than technical exploits.

Strategic Takeaways

Attackers continue to choose social engineering not for its sophistication, but for its ease, speed and reliability. Detection and response must be tuned to spot monetization signals (including data staging, file movement and abnormal system access) even when the intrusion begins with a single deceptive message.

Recommendations for Defenders

Social engineering continues to outperform other access vectors, not through technical sophistication, but by exploiting gaps between people, process and platform. To counter this, defenders must focus on identity resilience, visibility across workflows, and intelligence-led operations. Capabilities such as UEBA and ITDR play an increasingly critical role in identifying abnormal activity and blocking account takeover attempts.

The following eight recommendations draw directly from Unit 42’s incident response caseload and threat research:

Correlate Identity Signals To Detect Abuse Earlier

Attackers often appear legitimate, until anomalous behaviors emerge. Correlating signals across identity, device and session behavior helps security operations teams detect social engineering attacks before they escalate. Platforms such as Cortex XSIAM accelerate this process, enabling faster threat detection and containment without relying on traditional indicators of compromise (IOCs).

Enforce Zero Trust Across Access Pathways

A strong Zero Trust posture limits attacker movement after initial access. Apply conditional access policies that assess device trust, location and login behavior before granting access. Combine least-privilege principles, just-in-time access and network segmentation to restrict lateral movement and reduce blast radius.
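The policy described above can be sketched as a function that evaluates device trust, location and recent account changes before granting access, stepping up or blocking as risk accumulates. The signal names, weights and thresholds are hypothetical. Real conditional access engines in identity providers express such rules as configuration rather than application code.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require fresh, stronger re-authentication
    BLOCK = "block"

@dataclass
class SignIn:
    device_managed: bool        # device enrolled and compliant
    country: str                # country geolocated from the source IP
    usual_countries: set[str]   # countries seen for this user historically
    recent_mfa_reset: bool      # hypothetical signal: MFA method changed recently
    privileged: bool            # account holds elevated permissions

def evaluate(sign_in: SignIn) -> Decision:
    """Score a sign-in with illustrative weights and map the score to a decision."""
    risk = 0
    if not sign_in.device_managed:
        risk += 2
    if sign_in.country not in sign_in.usual_countries:
        risk += 2
    if sign_in.recent_mfa_reset:
        risk += 2
    if sign_in.privileged:
        risk += 1  # privileged accounts get less tolerance
    if risk >= 5:
        return Decision.BLOCK
    if risk >= 2:
        return Decision.STEP_UP
    return Decision.ALLOW
```

With these assumed weights, a sign-in resembling the executive case study earlier in this report (unrecognized device, unusual location and a freshly reset MFA method on a privileged account) scores 7 and is blocked.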

Strengthen the Human Layer With Detection and Training

Employees are part of the detection surface. Harden common abuse points such as email, browsers, messaging apps and the domain name system (DNS) with intelligence-led controls. Train frontline teams such as HR and IT support to recognize and report impersonation, voice lures and pretexting. Simulate current attack techniques, including help desk spoofing and MFA manipulation.

Strengthen Detection With Identity and Behavioral Analytics

Detecting social engineering requires visibility beyond traditional indicators. Correlate signals across identity, endpoint, network and SaaS activity to expose escalation attempts early. Capabilities like UEBA and ITDR help surface anomalies such as impersonation, session abuse and credential misuse.

Control and Monitor Illegitimate Use of Native Tools and Business Process Workflows

Attackers can use built-in utilities such as PowerShell or Windows Management Instrumentation (WMI) to move undetected. Establish behavioral baselines and alert on anomalies. Map business workflows to uncover where process trust can be exploited, particularly escalation points that rely on fast-track approvals or assumed identity, such as help desk credential resets, finance system overrides or privileged access granted through informal Slack or Teams messages.
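A baseline-and-alert approach for native tools can be sketched as a first-seen check. During a learning period, the check records which accounts normally run which administration utilities. Afterwards, it alerts when an account runs a watched tool it has never used before. The watched-tool list and the account-level granularity are illustrative assumptions. Real deployments would also baseline hosts, parent processes and command-line patterns.

```python
from collections import defaultdict

# Native utilities worth baselining (illustrative, not exhaustive).
WATCHED_TOOLS = {"powershell.exe", "wmic.exe", "psexec.exe", "nltest.exe", "net.exe"}

class NativeToolBaseline:
    """Learns which accounts normally run which native tools, then flags new pairs."""

    def __init__(self) -> None:
        self._seen = defaultdict(set)  # account -> watched tools seen while learning
        self.learning = True

    def observe(self, account: str, tool: str) -> bool:
        """Record one execution and return True if it should raise an anomaly alert.

        After learning ends, unseen (account, tool) pairs keep alerting until an
        analyst reviews them; this sketch has no approval step.
        """
        tool = tool.lower()
        if tool not in WATCHED_TOOLS:
            return False
        if self.learning:
            self._seen[account].add(tool)
            return False
        return tool not in self._seen[account]
```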

Build Resilience Through Simulation and Playbook Readiness

Preparation narrows response gaps. Run live drills based on current social engineering tactics, such as impersonation or chained credential use. Validate playbooks, involve cross-functional teams and integrate Unit 42 threat intelligence into both blue team exercises and user awareness programs.

Enforce Network-Layer Controls

Deploy Advanced DNS Security and Advanced URL Filtering to block access to malicious infrastructure. These controls help detect and prevent social engineering attacks that rely on spoofed domains, typosquatting, SEO poisoning and link-based credential theft. Visibility at the network layer adds a critical line of defense when endpoint or identity-based detection fails.
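As a rough illustration of how lookalike domains can be caught, the sketch below compares an observed domain against a list of protected brand domains using Levenshtein edit distance. This is one simple heuristic, not how Advanced DNS Security or Advanced URL Filtering work. The `PROTECTED` list and the distance threshold of 2 are hypothetical, and the example does not handle homoglyphs or lookalikes registered under different top-level domains.

```python
def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (0 if characters match)
            ))
        prev = curr
    return prev[-1]


PROTECTED = ["example.com", "example-corp.com"]  # hypothetical brand domains


def looks_like_typosquat(domain: str, protected=PROTECTED, max_distance: int = 2) -> bool:
    """True if `domain` is close to, but not exactly, a protected domain."""
    domain = domain.lower().rstrip(".")
    if domain in protected:
        return False  # the legitimate domain itself
    return any(levenshtein(domain, legit) <= max_distance for legit in protected)
```

For example, `examp1e.com` (one substitution) and `exampel.com` (two substitutions) would be flagged, while `example.com` itself would not.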

Lock Down Identity Recovery Paths

Threat actors increasingly target IT help desks to reset credentials and bypass MFA. Strengthen controls around account recovery by enforcing strict identity verification protocols, limiting who can initiate resets and monitoring for unusual request patterns. Help desk staff should receive regular training grounded in real-world threat activity.
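Two of these controls lend themselves to simple audit-log checks: flagging a password reset that is quickly followed by a new MFA enrollment on the same account, and flagging resets performed by anyone outside an authorized group. The sketch below is hypothetical. The `AuditEvent` fields, the action labels `password_reset` and `mfa_enroll`, the 30-minute window and the allowlist are assumptions, since real identity providers log these events under their own names and schemas.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AuditEvent:
    account: str
    action: str        # hypothetical labels: "password_reset", "mfa_enroll"
    actor: str         # who performed the action (help desk agent or the user)
    timestamp: datetime


def suspicious_recoveries(events, window=timedelta(minutes=30)):
    """Flag (account, resetting actor) pairs where a password reset is followed
    by a new MFA enrollment on the same account within `window`."""
    last_reset = {}
    flagged = []
    for e in sorted(events, key=lambda e: e.timestamp):
        if e.action == "password_reset":
            last_reset[e.account] = e
        elif e.action == "mfa_enroll" and e.account in last_reset:
            reset = last_reset[e.account]
            if e.timestamp - reset.timestamp <= window:
                flagged.append((e.account, reset.actor))
    return flagged


def unauthorized_resets(events, allowed):
    """Flag password resets performed by actors outside the `allowed` set."""
    return [(e.account, e.actor) for e in events
            if e.action == "password_reset" and e.actor not in allowed]
```

A reset-then-enroll sequence is not malicious by itself, since legitimate users also re-enroll after a reset, so these flags are best routed to a secondary verification step rather than treated as confirmed compromise.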

Final Thoughts

Social engineering continues to evolve, but its success still depends on trust. Defenders must think beyond malware and infrastructure controls. The new perimeter is shaped by people, processes and the decisions they make in real time. The recommendations in this section are designed to strengthen those decisions and create an environment where trust cannot be easily exploited.

How Palo Alto Networks Can Help

Palo Alto Networks provides unified security platforms that empower organizations to defend against both highly targeted and broad social engineering threats.

Cortex XSIAM and Cortex XDR transform the security operations center (SOC) with unified visibility and protection — blocking endpoint threats, adding email security features and enabling AI-powered detection, investigation and response across any data source.

Advanced WildFire, Advanced URL Filtering and Advanced DNS Security further strengthen defenses by using AI-driven analysis to block phishing, malware, and malicious web content before they reach users.

Prisma Access and Prisma AIRS extend protection to remote workers, enabling consistent policy enforcement and threat prevention everywhere. Prisma Access Browser adds secure web browsing and isolation from web-based threats on any device.

To help organizations stay resilient and proactive, the Palo Alto Networks Unit 42 Retainer and Proactive Services offer 24/7 incident response and access to the latest threat intelligence. By integrating Unit 42 intelligence into employee training programs, organizations can help keep their workforce alert to emerging social engineering tactics, closing the gap between attacker innovation and defender awareness. This holistic approach helps operationalize Zero Trust, secure the human attack surface, and enable rapid response to evolving threats.

Data and Methodology

The mission of this report is to provide readers with a strategic understanding of existing and anticipated threat scenarios, enabling them to implement more effective protection strategies.

We sourced data for this report from more than 700 cases Unit 42 responded to between May 2024 and May 2025. Our clients range from small organizations with fewer than 50 personnel to Fortune 500 and Global 2000 companies and government organizations with more than 100,000 employees.

The affected organizations were headquartered in 49 countries. About 73% of the targeted organizations in these cases were located in North America; organizations based in Europe, the Middle East and Asia-Pacific account for the remaining 27% or so. Attacks frequently have impact beyond the locations where organizations are headquartered.

Findings may differ from those published in the January 2025 Global Incident Response Report, which analyzed IR cases from October 2023 to October 2024. Differences in percentages reflect both the different timeframes and the focused nature of this report, which emphasizes social engineering-specific intrusions across verticals.

We excluded some data that might compromise the integrity of our analysis. For example, we supported customers investigating the possible effects of CVE-2024-3400, which caused this vulnerability to appear with unusual frequency in our data set. Where necessary, we recalibrated the data to address any statistical imbalances.

Appendix

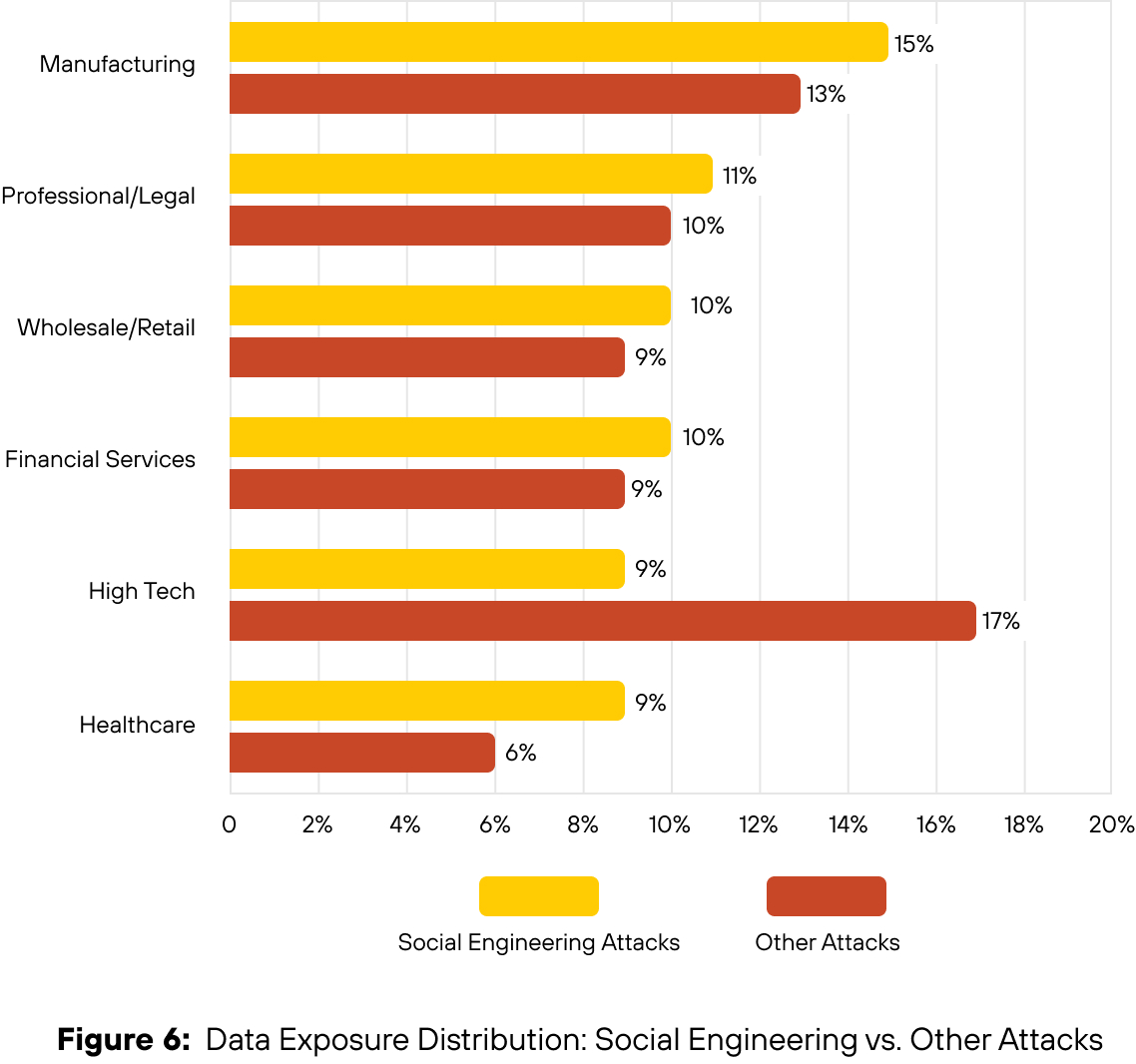

Industries Most Impacted by Social Engineering Attacks

According to Unit 42 IR data from May 2024 to May 2025, high tech was the most targeted sector across all confirmed intrusions. When isolating social engineering cases, however, manufacturing emerged as the most impacted sector. This shift highlights how attacker tactics vary by industry profile. See Figure 6 for the full sector-by-sector breakdown.
