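Fake account creation is a form of online abuse in which attackers register accounts at scale using automation tools and disposable identities. Attackers use disposable emails, temporary phone numbers, and anti-detect browsers to imitate real user signups, then use the accounts to abuse free services, manipulate engagement metrics, or commit fraud. Detecting and blocking these attacks is challenging, but behavioral analysis, input normalization, and real-time monitoring can reduce their impact. 2025-6-27 12:52:48 Author: securityboulevard.com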

Fake account creation is one of the most persistent forms of online abuse. What used to be a fringe tactic (bots signing up to post spam) has become a scaled, repeatable attack. Today’s fake account farms operate with disposable identities, rotating infrastructure, and automation frameworks built to evade detection.

These attacks aren’t opportunistic. They’re industrialized. Cheap compute, disposable emails, temporary phone numbers, and anti-detect browsers make it easy to simulate real users at scale. Once accounts are live, attackers use them to exploit free tiers, skew engagement metrics, or run follow-on fraud.

This post breaks down how fake account creation works, why it’s hard to stop, and what actually helps detect and block it in production.

What is fake account creation?

Fake account creation is a form of automated abuse where attackers register large numbers of new accounts using bots, disposable emails, and fake or synthetic identities. These accounts aren’t tied to real users. A few duplicate accounts per person may be benign, but problems arise when one actor creates hundreds or thousands to exploit your platform.

The core issue isn’t just that fake accounts exist. It’s how easily they can be produced at scale using infrastructure that mimics legitimate users. Many of these accounts look normal: they use Gmail addresses, pass CAPTCHA, and complete verification. But they’re part of coordinated, scripted campaigns.

Fake accounts are a means to an end. Attackers use them to abuse incentives (like free trials or referral rewards), manipulate reputation systems, or set the stage for fraud and spam.

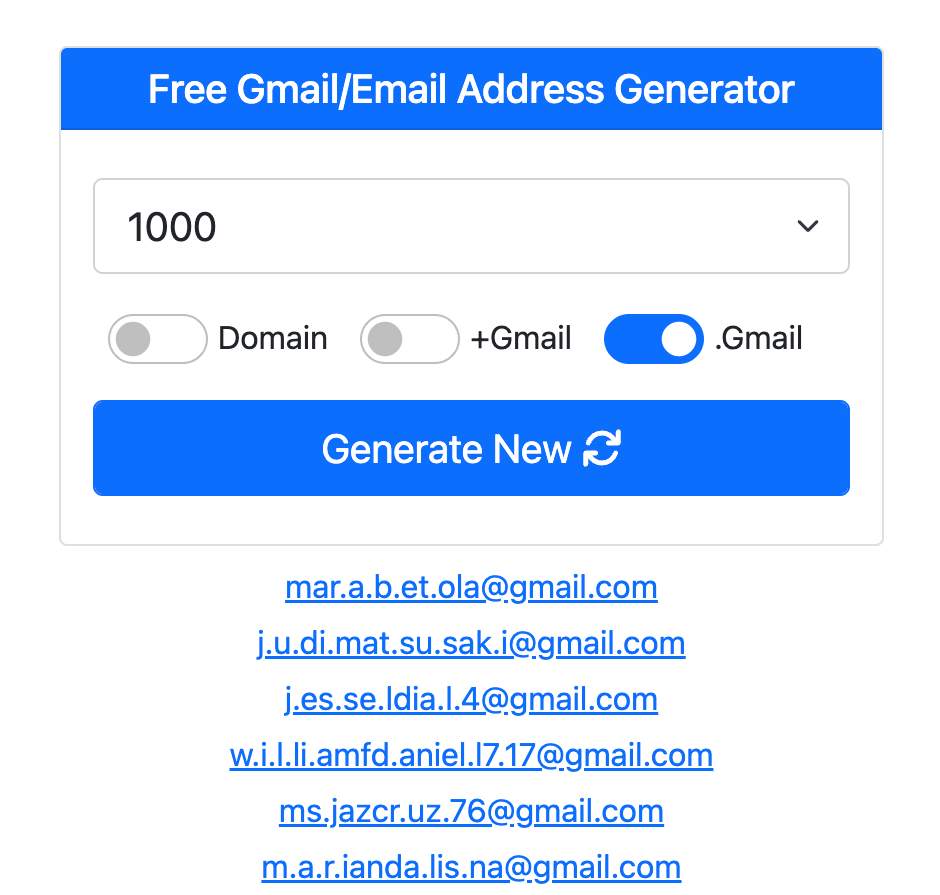

To see how this scales, start with the manual version. A person creates a second account to claim a signup bonus, using a new email or a VPN. Now automate it. Use disposable inboxes or Gmail alias tools to generate addresses. Script the signup flow, solve CAPTCHA, click the verification link, and repeat. What began as a manual trick becomes a high-volume pipeline.

This is what fake account creation looks like in practice: signups turned into infrastructure, and accounts turned into tools for abuse.

How fake account creation works

Fake account creation is not a one-off trick. It’s a repeatable pipeline attackers use to create accounts at scale while avoiding detection. Manual signups still happen, but bots make the attack scalable. With automation, a single actor can create thousands of accounts in minutes.

Scaling a fake signup campaign typically involves the following steps:

Sourcing emails: Most platforms require email validation. Attackers meet this requirement in two ways:

- Classic disposable inboxes: Services like 10minutemail, Maildrop, or tempmailer.net provide public inboxes that bots can poll for verification emails. These are fast, cheap, and easy to automate.

- Gmail alias abuse: Tools like Emailnator generate many Gmail variants using dot normalization and + aliasing. These addresses pass filters but can be normalized to reveal duplication.

The screenshot below shows how a service like Emailnator can be used to access thousands of Gmail addresses for free.

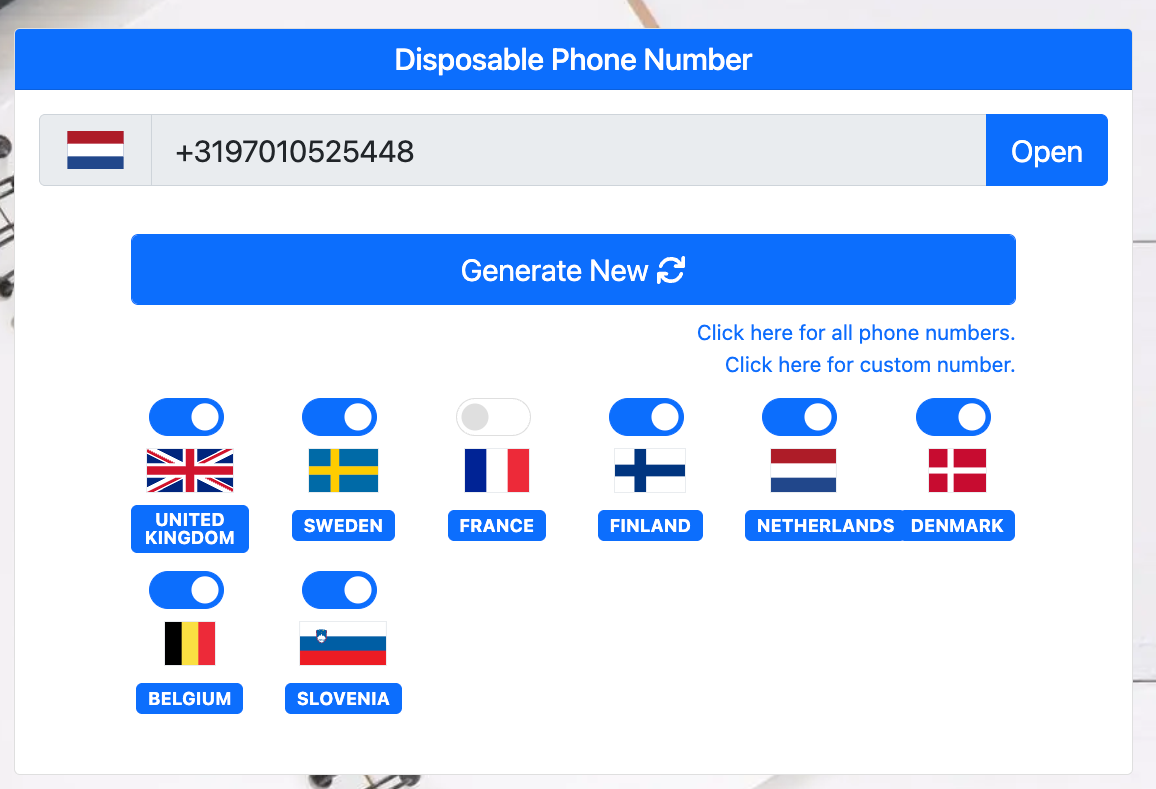

Using temporary phone numbers: When SMS verification is required, attackers rely on services that offer shared virtual numbers. These behave like disposable emails and are often accessible through APIs. Attackers scale across providers or use paid tiers to improve availability and avoid reuse limits.

Automating the signup flow: With emails and phone numbers in place, bots are configured to simulate real users and complete registrations. They mimic request structure, HTTP headers, and payloads to pass validation.

To reduce detection risk, bots may:

- Rotate proxies to distribute traffic

- Throttle requests to resemble human behavior

- Randomize headers, user agents, and device traits

After registration: Once fake accounts are created, attackers use them to extract value or support downstream abuse. Common goals include:

- Abusing free-tier services: AI tools, cloud products, and APIs are accessed repeatedly using new accounts.

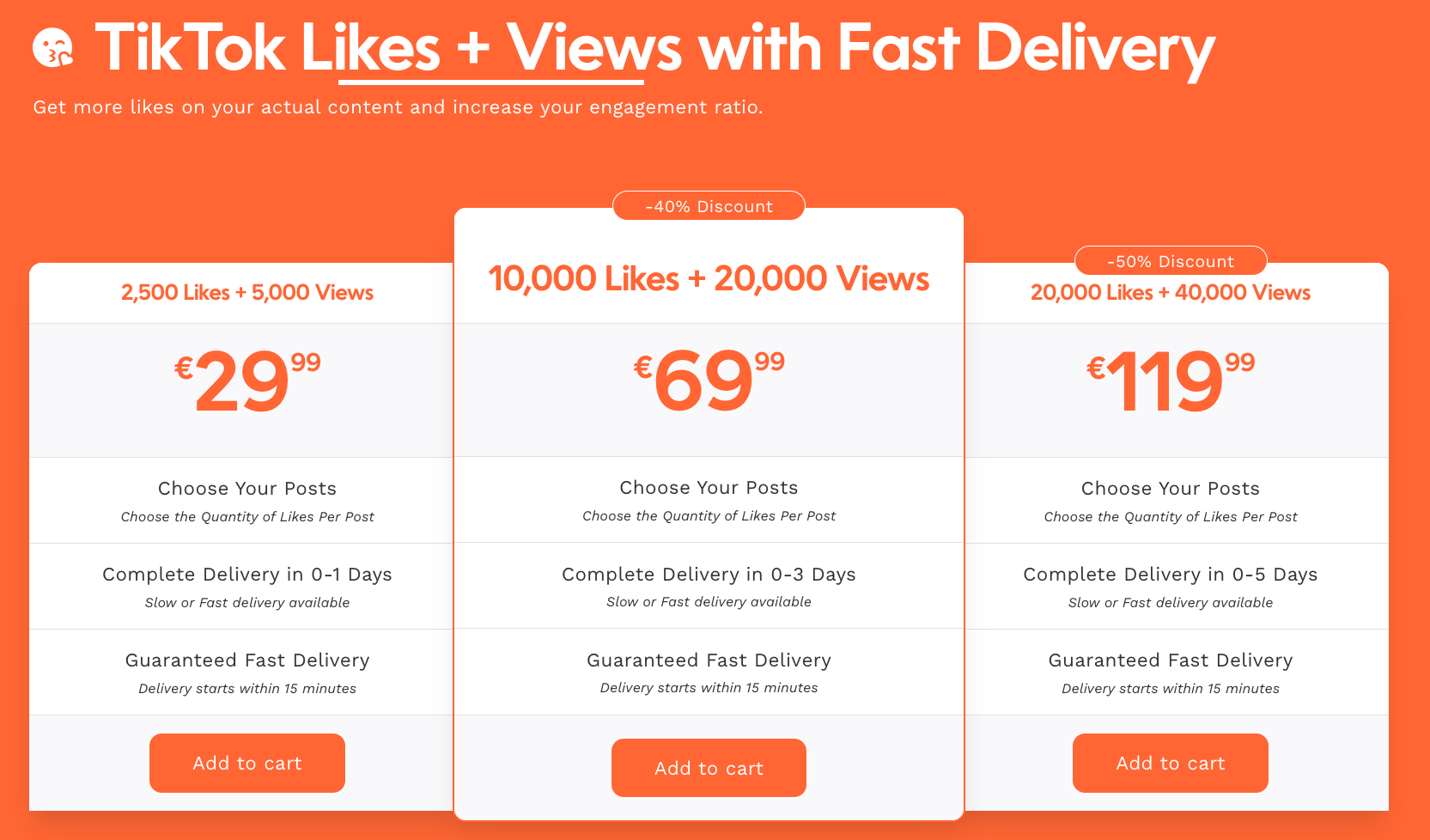

- Manipulating engagement: Bots simulate activity like votes, reviews, or views to influence metrics.

Why attackers do it

Fake account creation is appealing because it’s cheap, scalable, and hard to trace. Attackers don’t need advanced skills. With basic scripts or off-the-shelf tools, they can register users in bulk using disposable emails, spoofed devices, and rotating IPs. Public guides and services like Emailnator or SMS APIs make setup even easier.

These accounts are difficult to attribute. Since they rely on fake infrastructure, it’s hard to link them to a real person. That makes them useful for many forms of abuse, from promo abuse to manipulation of public metrics.

The economics work in the attacker’s favor. A fake account might cost pennies to generate but can unlock much greater value: free-tier usage, referral bonuses, or inflated reputation. Some online services sell fake engagement, such as views or likes for TikTok and other platforms (see the screenshot below), and deliver it using automated bots and large volumes of fake accounts. That model is just one example. The same infrastructure is used to exploit signup incentives, distort user metrics, or support fraud.

How fake account creation impacts businesses

Fake account creation hurts businesses even when no breach occurs. Any service with a signup form is a potential target, especially if it offers free trials, referral rewards, or new user promotions. The consequences extend beyond security, affecting product, support, marketing, and finance teams.

Fake accounts degrade the user experience. When bots flood the platform to manipulate reviews or rankings, real users lose trust. This leads to lower engagement, more complaints, and erosion of community quality.

Fraud losses add up fast. Attackers use fake accounts to:

- Bypass free-tier usage limits (e.g., API access, AI inference)

- Redeem referral bonuses and signup credits at scale

- Trigger promo codes repeatedly

- Abuse one-time offers or introductory pricing

These patterns are often invisible at first but become costly as they scale — draining compute budgets or distorting business metrics.

Customer support teams absorb the fallout. Spam complaints, signup errors, and moderation issues all increase. In some cases, bots flood messaging features or create fake profiles that disrupt the platform.

Product and analytics teams suffer from polluted data. Fake signups inflate user growth, skew A/B tests, and distort conversion or retention metrics — making it harder to make product decisions based on real user behavior.

Types of bots used in fake account creation attacks

Fake account creation doesn’t depend on a single tool. Attackers use a range of technologies to simulate legitimate signups across web, mobile, and API surfaces. Their choices depend on the platform they’re targeting, the scale of the campaign, and the strength of bot defenses.

Attackers commonly rely on the following technologies:

- HTTP clients: Libraries like axios, requests, or fetch let bots send raw HTTP requests to signup endpoints. These bots skip the UI and work directly with API calls. They’re fast and easy to scale but break when defenses rely on JavaScript execution, timing checks, or hidden fields.

- Browser automation frameworks: Tools like Puppeteer and Playwright simulate the full signup flow. They can fill forms, solve CAPTCHAs, and verify emails. With stealth plugins like Puppeteer Stealth, they closely mimic real browsers and evade basic detection.

- Anti-detect browsers: Browsers such as Undetectable, Hidemium, or Kameleo are built to bypass fingerprinting. They spoof traits like screen size, timezone, device memory, and canvas fingerprinting, making automation traffic appear more diverse.

- Mobile emulators and spoofed devices: For mobile-focused services, attackers use tools like Genymotion or rooted Android devices to imitate mobile traffic. These setups help them bypass web-based detection by mimicking real app usage.

Most large-scale campaigns combine multiple tools. Some attackers script their own flows, while others use paid frameworks or open-source kits that handle the entire process — from email generation to verification and account creation.

Industries most targeted by fake account creation

Fake account creation affects any platform with a signup flow, but attackers focus on services where accounts unlock economic value, skew metrics, or support further abuse. The details vary by industry, but the goal is the same: turn synthetic users into leverage.

AI SaaS platforms are high-value targets. Attackers register fake accounts to exploit free-tier compute — generating AI images, running LLM prompts, or accessing proprietary APIs. Because usage translates directly into infrastructure costs, the impact adds up quickly. We broke this down in this blog post.

Gaming platforms are flooded with fake accounts used for abuse and promotion. Bots spread phishing links via in-game chat or friend requests, or advertise illicit services like currency top-ups and boosting. Others exploit reward mechanics—redeeming login bonuses or referral perks—to transfer items or currency to mule accounts.

E-commerce services see abuse tied to coupons, referrals, and reviews. Fake accounts claim new user discounts or inflate product ratings. Some campaigns focus on manipulating seller reputation or flooding listings with fake sentiment.

Social platforms and forums are frequent targets for fake engagement. Bots mass-like posts, boost visibility, or amplify spam. While many fake accounts appear real, with bios and profile photos, they’re often controlled at scale through scripts or click farms.

Financial services and fintech tools are exploited using synthetic identities. Attackers fabricate plausible personas to bypass KYC checks, trigger promos, or test fraud strategies. These accounts often rely on disposable emails, virtual phones, and recycled PII.

Streaming platforms face inflated metrics. Attackers use bots to boost views, streams, or rankings for content. The goal is often to manipulate discovery algorithms or increase monetization payouts.

The table below summarizes how attackers monetize fake accounts across key industries:

| Industry | Monetization / Abuse Potential |

|---|---|

| AI SaaS | Exploit free-tier compute (LLMs, image/video generation, speech), automate API usage |

| Gaming | Redeem bonuses, farm rewards, resell “smurf” or starter accounts |

| E-commerce | Abuse coupons/referrals, manipulate reviews, inflate seller/buyer activity |

| Social platforms | Fake engagement (likes, followers, upvotes), spam amplification, reputation gaming |

| Finance | Synthetic identity fraud, promo abuse, KYC testing, laundering through P2P flows |

| Streaming | Inflate views/streams, manipulate ranking algorithms or monetization metrics |

How to detect fake account creation attacks

Fake signups often pass basic validation. They may use real emails, solve CAPTCHAs, and mimic normal browser behavior. But when executed at scale, they leave behind patterns that don’t match real user behavior. Effective detection depends on identifying these patterns across volume, environment, and behavior.

Volume anomalies: Fake account creation is optimized for scale. Large shifts in signup traffic, especially during off-hours or promotion launches, are often a giveaway.

- Spikes in new registrations — especially tied to incentive programs or sudden bursts of traffic — often point to automated signups.

- Drop in engagement after signup. Legitimate users typically complete onboarding or start using the product. Fake accounts often stall after registration.

- Clustering around specific referral codes or promo campaigns can indicate abuse through mass registration funnels.
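As a rough illustration of the spike signal above, the sketch below compares the current hour's signup count with a trailing baseline using a z-score. The function name, the hourly bucketing, and the threshold of 3 are assumptions chosen for the example, not values from a real deployment.

```python
from statistics import mean, stdev

def is_signup_spike(hourly_counts, current_count, z_threshold=3.0):
    """Return True when current_count sits far above the trailing baseline.

    hourly_counts: recent signup counts per hour (hypothetical input shape).
    z_threshold: illustrative cutoff; tune it against your own traffic.
    """
    if len(hourly_counts) < 2:
        return False  # not enough history to estimate a baseline
    baseline = mean(hourly_counts)
    spread = stdev(hourly_counts)
    if spread == 0:
        # Perfectly flat history: treat any increase as unusual.
        return current_count > baseline
    return (current_count - baseline) / spread > z_threshold

history = [40, 38, 45, 42, 39, 41, 44, 40]
print(is_signup_spike(history, 43))   # within normal variation
print(is_signup_spike(history, 400))  # far above the baseline
```

A plain z-score ignores daily and weekly seasonality, so in practice the baseline would likely be computed per hour-of-week or against the same window during earlier promotions.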

Environmental inconsistencies: Automation stacks leave fingerprints. Even when attackers randomize inputs, the underlying infrastructure reveals shared traits.

- Signups using known disposable email domains such as 10minutemail.com or maildrop.cc should be scrutinized. See our disposable email breakdown.

- Gmail alias abuse (e.g. [email protected]) can create the illusion of uniqueness. Normalizing these addresses exposes duplication.

- Virtual phone numbers are often reused across fake accounts. Watch for phone verification flows using shared or recycled numbers.

- Repeated device traits like the same WebGL fingerprint, timezone, or header order suggest bot traffic using anti-detect browsers or emulators.
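The disposable-domain check from the list above can be sketched in a few lines. The two domains are the ones named in this post; the set is a deliberately tiny stand-in for a maintained blocklist, and the function names are hypothetical.

```python
# Illustrative subset only; a real list is much larger and changes often.
DISPOSABLE_DOMAINS = {"10minutemail.com", "maildrop.cc"}

def email_domain(email):
    """Return the lowercased domain part of an email address."""
    return email.rsplit("@", 1)[-1].strip().lower()

def uses_disposable_domain(email, blocklist=DISPOSABLE_DOMAINS):
    """True if the address is on a listed domain or one of its subdomains."""
    domain = email_domain(email)
    return any(domain == d or domain.endswith("." + d) for d in blocklist)

print(uses_disposable_domain("bot123@Maildrop.cc"))
print(uses_disposable_domain("alice@example.com"))
```

A match is better treated as a risk signal than as an automatic verdict, since blocklists go stale and some real users rely on these services.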

Behavioral irregularities: Bots often struggle to replicate human behavior across the full onboarding flow.

- Identical or overly random field values (e.g., bios, usernames) often result from input generators. These can be spotted with clustering or anomaly scoring.

- Unusual onboarding flow. Fake accounts may skip profile setup, abandon flows, or follow rigid patterns inconsistent with normal users.

- Reuse of the same device fingerprint across many accounts is a strong indicator of automation. Even with proxy rotation, infrastructure reuse often leaks at the device level.
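Fingerprint reuse is one of the easier signals to check once signups are logged with a device identifier. The sketch below groups accounts by fingerprint and returns the fingerprints shared by several accounts. The record shape (`account_id`, `fingerprint`) and the threshold of five accounts are assumptions for illustration.

```python
from collections import defaultdict

def fingerprints_shared_by_many(signups, min_accounts=5):
    """Map each heavily reused fingerprint to the set of accounts using it.

    signups: iterable of dicts with hypothetical keys
             "account_id" and "fingerprint".
    """
    accounts_by_fp = defaultdict(set)
    for signup in signups:
        accounts_by_fp[signup["fingerprint"]].add(signup["account_id"])
    return {
        fp: accounts
        for fp, accounts in accounts_by_fp.items()
        if len(accounts) >= min_accounts
    }

signups = [{"account_id": f"acct-{i}", "fingerprint": "fp-rig"} for i in range(6)]
signups.append({"account_id": "acct-real", "fingerprint": "fp-laptop"})
print(fingerprints_shared_by_many(signups))
```

Shared devices exist (families, internet cafes, device labs), so the threshold and the time window matter as much as the count itself.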

How to block fake account creation

Detecting fake signups is one thing. Blocking them without disrupting legitimate users is harder. If defenses are too lenient, bots slip through. If they’re too strict, real users abandon onboarding.

The goal isn’t to eliminate every fake account. It’s to increase the attacker’s cost. Most fake signup operations rely on cheap infrastructure and automation. Adding friction, unpredictability, and monitoring makes scale harder to sustain.

Apply progressive friction: Don’t apply the same defenses to every user. Introduce friction based on real-time risk signals.

- Use CAPTCHA selectively when traits like IP reputation, disposable emails, or device anomalies indicate automation. Vary the challenge to avoid becoming predictable.

- Block known high-risk signals such as throwaway domains or mismatched user agents. But pair with normalization and behavioral checks to avoid false positives.

This approach protects real users from unnecessary hurdles while making automation more expensive.
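One way to picture progressive friction is a small decision function that maps risk signals to an action. Everything here is an assumption made for the example: the three boolean signals, the equal weighting, and the allow/captcha/block tiers. A production system would likely use weighted scores and more outcomes (for example, step-up verification).

```python
from dataclasses import dataclass

@dataclass
class SignupSignals:
    # Hypothetical signals, computed elsewhere in the signup pipeline.
    bad_ip_reputation: bool
    disposable_email: bool
    device_anomaly: bool

def choose_friction(signals):
    """Pick an action from the number of risk signals present."""
    score = sum([
        signals.bad_ip_reputation,
        signals.disposable_email,
        signals.device_anomaly,
    ])
    if score == 0:
        return "allow"    # no friction for clean signups
    if score == 1:
        return "captcha"  # one weak signal: challenge, don't block
    return "block"        # several signals agree: stop the signup

print(choose_friction(SignupSignals(False, False, False)))
print(choose_friction(SignupSignals(False, True, False)))
print(choose_friction(SignupSignals(True, True, False)))
```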

Normalize inputs to expose duplicates: Input normalization collapses synthetic variants into recognizable duplicates. This is especially effective for email and phone abuse.

- Strip dots and + tags from Gmail addresses. Variants like [email protected] and [email protected] route to the same inbox.

- Standardize phone number formats and remove duplicates across country code variations.

- Fuzz match usernames and bios to catch mass-generated values.

Normalization shrinks the space attackers rely on to appear unique.
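Here is a minimal sketch of email and phone normalization. It assumes syntactically valid input, applies the dot and + rules only to gmail.com, and uses a crude phone cleanup with a default country code of "1". Both function names and the example addresses are made up for illustration, and a production system would more likely rely on a dedicated phone-parsing library.

```python
import re

def normalize_email(email):
    """Collapse Gmail dot and + variants into one canonical address."""
    local, _, domain = email.strip().lower().rpartition("@")
    if domain == "gmail.com":
        # Gmail ignores dots in the local part and anything after "+".
        local = local.split("+", 1)[0].replace(".", "")
    return f"{local}@{domain}"

def normalize_phone(raw, default_country_code="1"):
    """Rough canonical form: "+" followed by digits only."""
    digits = re.sub(r"\D", "", raw)
    if raw.strip().startswith("+"):
        return "+" + digits
    if digits.startswith("00"):           # international dialing prefix
        return "+" + digits[2:]
    # National format: drop a leading trunk "0", prepend the default code.
    return "+" + default_country_code + digits.lstrip("0")

print(normalize_email("J.Doe+promo1@Gmail.com"))
print(normalize_email("jd.oe@gmail.com"))
print(normalize_phone("+1 (415) 555-0100"))
print(normalize_phone("415-555-0100"))
```

Store the normalized value alongside the original rather than replacing it, so uniqueness checks run on the canonical form while users still see the address they typed.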

Implement adaptive rate limits: Rate limiting signups helps contain abuse, but static rules aren’t enough. Bots are built to evade predictable caps.

- Throttle by device, fingerprint, or session — not just IP. Proxies are easy to rotate, but infrastructure traits often repeat.

- Watch for coordination across distributed traffic. Campaigns may spread signups across thousands of IPs but reuse headers or TLS signatures.

- Detect bursts of randomness in field values or identities. Sudden entropy spikes usually indicate script-driven input, not organic growth.

Smart rate limiting buys time, slows attacks, and helps surface orchestrated abuse.
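The sketch below shows a sliding-window limiter keyed by an arbitrary string, so the same class can throttle by device fingerprint or session instead of IP. The class name, the in-memory storage, and the limits are assumptions for the example; a real deployment would typically keep counters in a shared store and lower the cap for keys that already look risky.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_events per key within window_seconds."""

    def __init__(self, max_events, window_seconds):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # key -> timestamps of allowed events

    def allow(self, key, now):
        events = self._events[key]
        # Drop timestamps that have fallen out of the window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.max_events:
            return False
        events.append(now)
        return True

limiter = SlidingWindowLimiter(max_events=2, window_seconds=60)
for t in (0, 10, 20, 61):
    print(t, limiter.allow("fingerprint:abc123", now=t))
```

Passing `now` explicitly keeps the example deterministic; in a service it would come from a monotonic clock.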

Invest in bot detection: Basic bots are noisy. They skip JavaScript, reuse headers, or fail fingerprint checks. But the more advanced ones use headless browsers and spoofing tools designed to evade detection.

Effective systems look for:

- Automation indicators like navigator.webdriver, inconsistent WebGL values, or CDP injection.

- Mismatched traits like a mobile user agent with a desktop viewport or recycled device fingerprints.

- Behavioral clues like zero interaction, identical navigation flows, or high signup speed across sessions.

The strongest defenses link signups across infrastructure, correlating signals over time to uncover campaigns early.
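To make two of these checks concrete, here is a server-side sketch that inspects signals collected in the browser and reported with the signup. The payload keys (`webdriver`, `user_agent`, `viewport_width`) and the 1200-pixel cutoff are hypothetical. Both signals are easy for advanced bots to spoof, so they work as inputs to a broader score rather than as proof.

```python
def automation_flags(signals):
    """Return human-readable reasons a signup looks automated.

    signals: dict of client-reported values (hypothetical shape).
    """
    flags = []
    if signals.get("webdriver") is True:
        flags.append("navigator.webdriver is true")
    user_agent = signals.get("user_agent", "")
    viewport_width = signals.get("viewport_width", 0)
    # Illustrative heuristic: phones rarely report desktop-sized viewports.
    if "Mobile" in user_agent and viewport_width >= 1200:
        flags.append("mobile user agent with desktop-sized viewport")
    return flags

print(automation_flags({
    "webdriver": True,
    "user_agent": "Mozilla/5.0 (Linux; Android 14) Mobile Safari/537.36",
    "viewport_width": 1920,
}))
```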

If your team isn’t set up to track these patterns at scale, Castle can help. Our detection engine flags risky signups in real time, correlates fingerprints across attempts, and gives your team visibility into automation before abuse starts.

Why blocking fake signups at scale is hard — and what actually works

Fake account creation is constantly evolving. Attackers adapt scripts, rotate infrastructure, and test your defenses. Every friction layer becomes a challenge to bypass, not a barrier that holds.

Block disposable emails? They switch to Gmail aliases. Add phone verification? They rotate virtual numbers. Fingerprint devices? They deploy anti-detect browsers with spoofed traits. Rate limit IPs? They distribute traffic across proxy pools and stagger timing.

Even mobile apps are exposed. Many registration APIs lack the behavioral telemetry or instrumentation needed to flag emulators or scripted flows.

The real challenge isn’t spotting automation. It’s separating fake intent from legitimate behavior without damaging your funnel. Overblocking reduces conversion. Underblocking lets bots scale. Static rules fall apart under pressure.

What effective production defense looks like

Behavioral analytics: Focus on flows, not just fields. Bots can solve CAPTCHAs and verify emails, but they struggle to mimic real onboarding behavior. Look for:

- Very short session duration

- Identical or overly random values across accounts

- Drop-off immediately after verification

Real users explore. Bots follow scripts.

Session linking: Attackers spread out infrastructure, but shared traits persist. Link sessions using cookies, TLS fingerprints, canvas hashes, and timezone to surface clusters.

If dozens of signups share the same hardware traits, you’re seeing one rig — not dozens of users.
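Session linking can be sketched as a clustering problem: any two sessions that share a trait value are joined, and the joins are transitive. The example uses union-find over two traits. The field names and the choice of traits are assumptions; timezone is left out here because, on its own, it would likely link far too many unrelated users.

```python
from collections import defaultdict

def link_sessions(sessions, traits=("tls_fingerprint", "canvas_hash")):
    """Group sessions that share any listed trait value (transitively).

    sessions: list of dicts with an "id" plus trait fields (hypothetical).
    Returns only clusters containing more than one session.
    """
    parent = {s["id"]: s["id"] for s in sessions}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    first_seen = {}  # (trait, value) -> first session id with that value
    for s in sessions:
        for trait in traits:
            value = s.get(trait)
            if value is None:
                continue
            key = (trait, value)
            if key in first_seen:
                parent[find(s["id"])] = find(first_seen[key])
            else:
                first_seen[key] = s["id"]

    clusters = defaultdict(set)
    for s in sessions:
        clusters[find(s["id"])].add(s["id"])
    return [c for c in clusters.values() if len(c) > 1]

sessions = [
    {"id": "a", "tls_fingerprint": "T1", "canvas_hash": "C1"},
    {"id": "b", "tls_fingerprint": "T1", "canvas_hash": "C2"},
    {"id": "c", "tls_fingerprint": "T2", "canvas_hash": "C2"},
    {"id": "d", "tls_fingerprint": "T3", "canvas_hash": "C3"},
]
print(link_sessions(sessions))
```

Sessions a and c share nothing directly, but both link to b, which is how a rig that rotates one trait at a time still ends up in a single cluster.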

Input normalization and adaptive signals: Attackers generate variation synthetically. Normalize those inputs to collapse duplication.

- Strip Gmail dots and + tags

- Standardize and deduplicate phone numbers

- Use edit distance to cluster near-identical usernames

Update blocklists and indicators regularly. Static signals get stale fast.
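For the username case, a minimal sketch of edit-distance clustering looks like this. It implements Levenshtein distance directly and assigns each name to the first cluster whose representative is within a small distance. The greedy strategy, the threshold of 2, and the sample usernames are assumptions for illustration; the pairwise comparison is quadratic, so large volumes would need blocking or an index.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (row-by-row DP)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # deletion
                cur[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        prev = cur
    return prev[-1]

def cluster_usernames(usernames, max_distance=2):
    """Greedy clustering: compare each name to each cluster's first member."""
    clusters = []
    for name in usernames:
        for cluster in clusters:
            if edit_distance(name.lower(), cluster[0].lower()) <= max_distance:
                cluster.append(name)
                break
        else:
            clusters.append([name])
    return clusters

print(cluster_usernames(["mike_2041", "mike_2042", "mike_2093", "sarah.lee"]))
```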

Real-time telemetry and campaign visibility: Point-in-time detection misses evolving campaigns. You need live dashboards that surface:

- Signup spikes by fingerprint or device

- Outliers in referral codes or engagement

- Patterns in field entropy or form progression
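Field entropy is one of the less obvious metrics in that list, so here is a small sketch of how it might be computed. It measures Shannon entropy over the characters of each value and averages it across a batch of signups. The function names and sample values are hypothetical, and character entropy grows with string length, so it is most useful when compared against the same field's own historical baseline.

```python
import math
from collections import Counter

def shannon_entropy(text):
    """Shannon entropy of the character distribution, in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def mean_entropy(values):
    """Average per-value entropy for a batch of field values."""
    if not values:
        return 0.0
    return sum(shannon_entropy(v) for v in values) / len(values)

organic = ["anna", "bobby"]
scripted = ["x7Qp2LzK9a", "q8WmZ3rT1v"]
print(mean_entropy(organic), mean_entropy(scripted))
```

Plotted per hour on a dashboard, a sudden jump in this average for usernames or bios is the kind of pattern worth drilling into alongside signup volume.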

Detecting and blocking fake signups isn’t about stopping every single one. It’s about making scale too expensive to maintain — and seeing the abuse before it impacts your users or your metrics.

Final thoughts

Fake account creation isn’t just a nuisance. It’s a scalable, repeatable workflow. Attackers now use mature infrastructure: browser automation frameworks, disposable email APIs, SMS verifiers, and anti-detect browsers that closely mimic real devices.

These are not one-off scripts. They’re modular pipelines. Signup flows are automated, tested, and reused. Friction is measured. Tools like Gmail alias generators and mobile emulators are packaged as services and shared in Telegram groups.

Simple filters and static rules are no match. Blocklists get outdated. Signals get spoofed. Each new defense becomes a test case for the next version of the bot.

What works is adaptability. Effective systems track session-level behavior, link activity across devices and accounts, and evolve with attacker tactics. The goal isn’t to block every fake account — it’s to make large-scale abuse impractical and to expose coordinated activity before it causes damage.

That’s what Castle is built for. If fake signups are skewing your metrics or driving up costs, we can help.

*** This is a Security Bloggers Network syndicated blog from The Castle blog authored by Antoine Vastel. Read the original post at: https://blog.castle.io/fake-account-creation-attacks-anatomy-detection-and-defense/
