2018-08-15 00:57:00 | Author: portswigger.net

The current Spider and Scanner tools are pretty good at letting you do one thing at a time. They let you define your configuration and scope. They each employ a single queue of work. They can be paused and resumed.

But if you want to do multiple scans of different areas, with different configurations, and different priorities, and monitor and control each scan independently, then you're stuck.

All of this will soon change. The Spider and Scanner are going to disappear as top-level singleton tools, with their global configuration and queues of work. In their place, you'll be able to kick off individual scans. Each scan can be assigned to do something different: crawl a particular web site, audit a bunch of selected requests, or perform an end-to-end crawl and audit.

Each scan has its own configuration and scope, manages its own pending work, and can be monitored and controlled independently. You can create as many parallel scans as you like, pause and resume them individually, and set priorities for use of resources. If you like to work by selecting individual items and sending them for scanning, you can choose which applicable task to send each item to.
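To make the per-scan model concrete, here is a minimal Python sketch of scans that each carry their own configuration, scope, and pending work, and that can be paused independently. It is only an illustration of the idea described above, not Burp's actual API: the `Scan` class, its fields, and the `example.com` URLs are all hypothetical names chosen for this example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Scan:
    """Hypothetical model of one scan; not Burp's real API."""
    name: str
    scope: list                                   # URL prefixes this scan may touch
    config: dict = field(default_factory=dict)    # per-scan settings
    paused: bool = False
    pending: deque = field(default_factory=deque) # this scan's own work queue
    done: list = field(default_factory=list)

    def in_scope(self, url: str) -> bool:
        return any(url.startswith(prefix) for prefix in self.scope)

    def add(self, url: str) -> bool:
        """Queue an item only if it falls inside this scan's scope."""
        if not self.in_scope(url):
            return False
        self.pending.append(url)
        return True

    def step(self) -> Optional[str]:
        """Process one pending item, unless the scan is paused or idle."""
        if self.paused or not self.pending:
            return None
        url = self.pending.popleft()
        self.done.append(url)
        return url


# Two scans with different scopes and configurations (assumed values).
shop = Scan("shop", ["https://example.com/shop/"], {"mode": "crawl"})
admin = Scan("admin", ["https://example.com/admin/"], {"mode": "audit"})

shop.add("https://example.com/shop/cart")
admin.add("https://example.com/admin/users")
rejected = admin.add("https://example.com/shop/cart")  # outside admin's scope

shop.paused = True              # pausing one scan...
shop_result = shop.step()       # ...stops only that scan
admin_result = admin.step()     # the other scan keeps working

shop.paused = False             # resume the first scan
resumed_result = shop.step()
```

Because each `Scan` owns its queue and its `paused` flag, pausing `shop` has no effect on `admin`, which is the independence the new design is meant to provide.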

There are many obvious use cases for the new capability:

- You can create separate scans for different areas of an application, and separately monitor the progress and results of each.

- You can create a high priority task to audit items that you manually select as being interesting, and a lower priority task working through a larger backlog of work.

- You can create scans that are optimized for different purposes. For example, a quick scan for low-hanging fruit; a slow, thorough, and exhaustive scan; or a scan for a specific vulnerability like file path traversal.
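The second use case, a high priority task for hand-picked items alongside a lower priority backlog, can be sketched as a small scheduler. This is a simplified illustration under stated assumptions: the `Task` class and `next_item` function are hypothetical, and strict "highest priority first" selection is assumed here only for clarity; the post does not say how priorities are actually applied to resources.

```python
from collections import deque


class Task:
    """Hypothetical scan task; higher priority numbers are served first."""

    def __init__(self, name, priority):
        self.name = name
        self.priority = priority
        self.paused = False
        self.queue = deque()


def next_item(tasks):
    """Return (task name, item) from the highest-priority runnable task, or None."""
    runnable = [t for t in tasks if not t.paused and t.queue]
    if not runnable:
        return None
    task = max(runnable, key=lambda t: t.priority)
    return task.name, task.queue.popleft()


backlog = Task("backlog", priority=1)
manual = Task("manual", priority=10)
tasks = [backlog, manual]

backlog.queue.extend(["/a", "/b", "/c"])

order = [next_item(tasks)]        # only the backlog has work so far
manual.queue.append("/login")     # an interesting item is sent manually
order.append(next_item(tasks))    # the manual task is served first
order.append(next_item(tasks))    # then work returns to the backlog
```

In this sketch the manually selected item is handled as soon as it arrives, while the backlog continues to drain whenever the high priority queue is empty.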

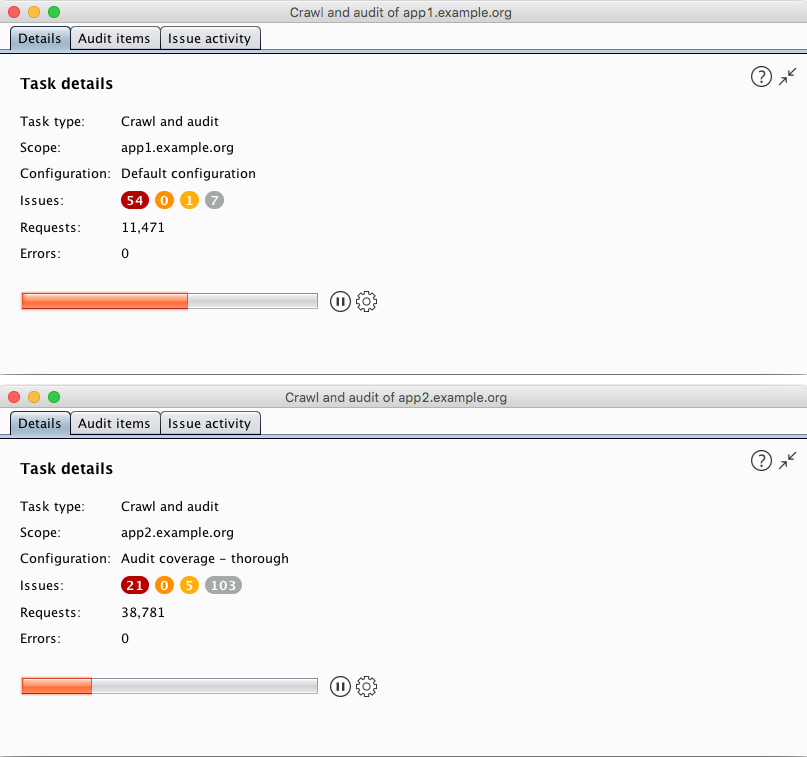

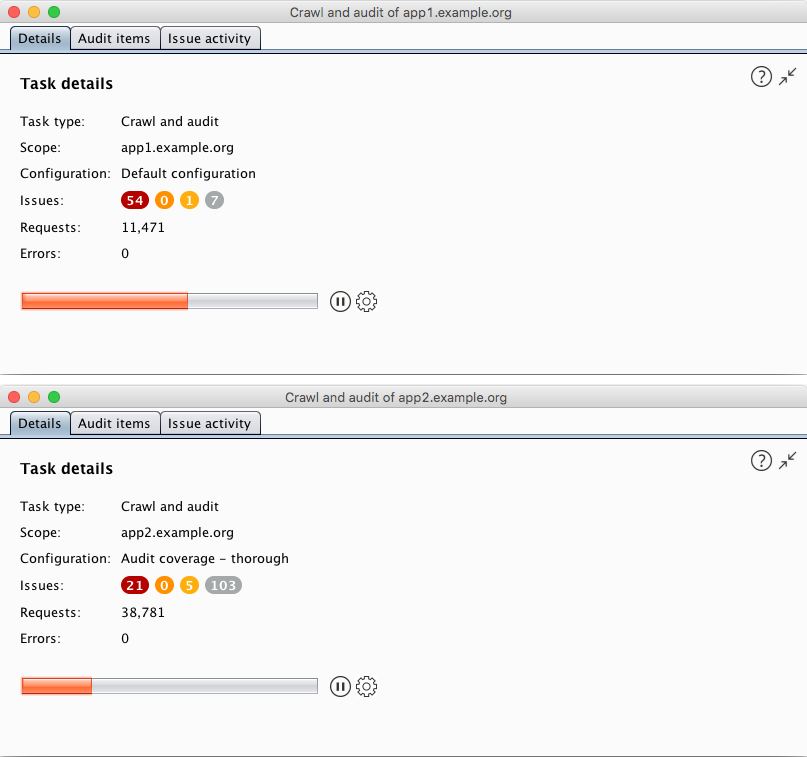

Here is one view in the new UI showing the details of two parallel scans in progress:

Over the next few days, we'll be describing some more upcoming features related to the new support for multiple parallel scans.
