2024-10-31 01:0:18 Author: hackernoon.com(查看原文) 阅读量:3 收藏

Table of Links

-

Introduction

TESTING PROCEDURES

Once a DRL agent is trained, a testing procedure is designed to assess the hedging performance of the agent. First, note that the final P&L of the hedging agent is computed by tracking a money-market account position, denoted as 𝐵, through each time step until maturity:

Given this defined performance metric, the main requirements for agent testing are:

1. The generation of asset price data, which is required for the DRL agent state and the P&L calculations.

2. The American put option price at the initial time step, used in the P&L calculation.

3. The construction of an exercise boundary to be used by the counterparty.

4. The design of benchmark hedging strategies for comparison.

Once again, this section will be grouped into GBM and stochastic volatility model experiments.

GBM Experiments: Testing Procedure

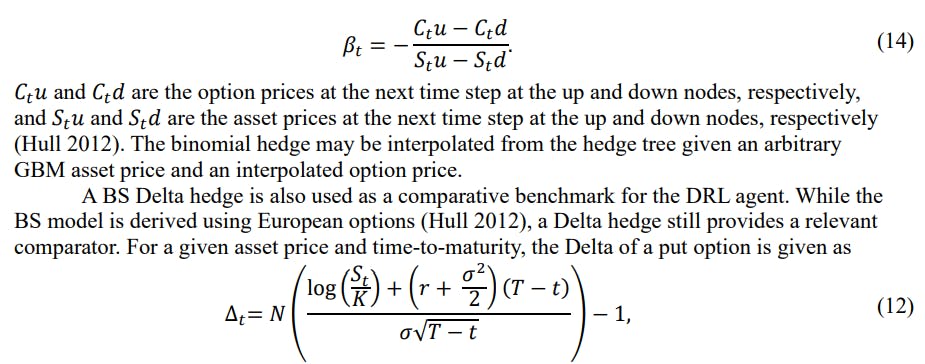

s in training, GBM tests use MC paths of the GBM process for testing. Moreover, given that an American option tree was computed for training, the tree is used to generate the initial option price in testing. Further, by indexing the nodes at which exercise is optimal, an exercise boundary to be used by the counterparty is constructed. Given that binomial trees are constructed for both the asset and option price processes, this study uses a binomial tree hedge as a testing benchmark for the DRL agent. At a given timestep, the binomial tree hedge for an American put option, which in this study is denoted 𝛽𝑡 , is given by

where 𝑁(∗) is the cumulative normal distribution function, 𝑟 is the risk-free rate (5% for all experiments), and 𝐾 is the strike price (Hull 2012). All GBM tests are conducted using 10000 GBM sample paths with 100 rebalance points each, and the initial asset price and strike price are both set at $100.

Stochastic Volatility Experiments: Testing Procedure

Recall that to establish a baseline, the first stochastic volatility experiment trains the DRL agent using arbitrary model coefficients. The option maturity is set to one month, and the DRL agent trained with paths from said stochastic volatility model with arbitrary coefficients is tested across 10000 episodes with 21 rebalance steps each (daily rebalancing), noting that the initial volatility is 20%, and the initial asset price and strike price are set to $100.

Upon completion of this experiment, the market calibrated DRL agents are tested by simulating 10000 asset paths using the respective calibrated model, the implied initial volatility, and the option maturity. The calibrated Chebyshev exercise boundary is used for determining counterparty decisions. Daily rebalancing is assumed, and therefore the number of rebalance points in each testing episode is the number of trading days between August 17th, 2023, and the option maturity date. To give insight into how a DRL agent trained with a market-calibrated stochastic volatility model will perform in practice, a final experiment in this study evaluates the performance of each of the 80 DRL agents on the true asset path that was realized between August 17th, 2023, and the maturity date. The price data between August 17th, 2023, and November 17th, 2023, for all 8 symbols used were retrieved from Yahoo finance and are listed in the Appendix. As for the design of a comparative benchmark strategy, only the BS Delta is used as a comparative benchmark as a binomial tree is not constructed for stochastic volatility experiments. The updated volatility at each time step is substituted into the BS Delta calculation.

Authors:

(1) Reilly Pickard, Department of Mechanical and Industrial Engineering, University of Toronto, Toronto, ON M5S 3G8, Canada ([email protected]);

(2) Finn Wredenhagen, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(3) Julio DeJesus, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(4) Mario Schlener, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(5) Yuri Lawryshyn, Department of Chemical Engineering and Applied Chemistry, University of Toronto, Toronto, ON M5S 3E5, Canada.

This paper is

如有侵权请联系:admin#unsafe.sh