2024-6-21 23:46:52 Author: flashpoint.io

Disinformation is the deliberate and systematic attempt to deceive and manipulate public opinion by spreading false or misleading information.

Active disinformation campaigns can take many forms, with nation states leveraging a wide array of tactics and techniques intended to introduce additional cyber, physical, and geopolitical threats. Perhaps no nation state has developed a systematic disinformation capability as sophisticated as Russia's.

In today's digitally connected world, both individuals and organizations can be frequent targets of disinformation campaigns. Here are three ways security experts can help their organizations navigate murky information waters:

- Anticipate the weaponization of new technologies

- Enhance situational awareness amid a widening threat landscape

- Integrate cyber and physical security measures

1. Anticipate the weaponization of new technologies

Understanding modern Russian disinformation campaigns and the technologies they use is critical for agencies and organizations across both governmental and commercial realms—especially if they are direct or indirect targets of the Russian government or state-sponsored threat actors.

Russia is notorious for its involvement in numerous disinformation campaigns around the world, particularly those targeting the United States and European countries such as Ukraine. Russian disinformation tactics have evolved significantly in sophistication and scope since the Soviet era of the 1920s. New technologies, such as AI-generated content and deepfakes in particular, have enabled disinformation actors to exploit legitimate social platforms, media, and research organizations to spread false information.

Deepfakes and synthetic media

Synthetic media content includes audio clips, photos, and videos that have been digitally manipulated or completely fabricated using artificial intelligence tools to mislead the viewer and promote false information.

There have been several instances of widely viewed deepfakes created to promote pro-Russian sentiment. In November 2023, a deepfake video depicting Moldovan President Maia Sandu was shared online. In it, the fabricated Sandu announced her resignation and pledged her support for the candidacy of pro-Russia fugitive oligarch Ilan Shor. Before it was removed, the video was reportedly viewed more than 155 million times.

Ukraine has also been a major target for deepfakes since Russia's full-scale invasion in 2022. In the early stages of the war, a deepfake video of Volodymyr Zelenskyy appeared on a compromised Ukrainian news website; in it, the fake Zelenskyy urged Ukrainian troops to lay down their weapons. As the war continued, Russian disinformation actors launched Operation Black Hole, a campaign built on the narrative that Ukraine is a “black hole” where military and financial aid completely disappears, aimed at European and US audiences. One deepfake promoting this narrative carried the watermark of a major news network and the logo of the American Museum of Natural History. The narrative proved so effective that some high-profile American politicians and influencers subsequently echoed it.

You have all probably heard of deepfake technology (English “deepfake,” a blend of “deep learning” and “fake”), a method of synthesizing a person’s image based on artificial intelligence.

Russia is preparing a provocation. https://t.co/XYyS9WsPkK

Defence intelligence of Ukraine (@DI_Ukraine), March 2, 2022

Exploiting information gaps, civil unrest, and prejudices

Russian disinformation actors frequently target divisive issues to polarize societies and destabilize political systems. Flashpoint observed this tactic in early 2024 around the Texas border dispute.

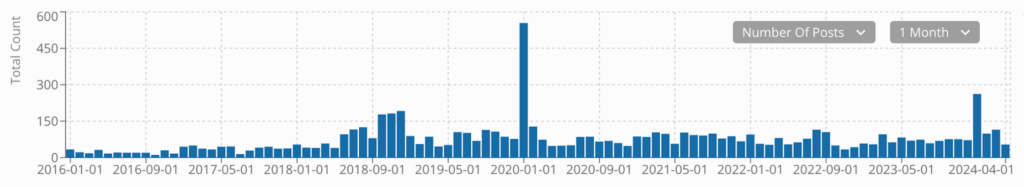

Number of illicit posts mentioning terms “immigration,” “Texas,” and “protest” or “rally/rallies” over time.

Beginning in January 2024, our analysts saw a noticeable spike in illicit posts from threat actors and pro-Russian social media accounts mentioning the terms “immigration,” “Texas,” and “protest” or “rally/rallies.” Coordinated activity on Telegram and X amplified the Texas border dispute and spread the narrative that a civil war could break out in the United States.
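The spike described above is essentially a keyword co-occurrence query counted over time. As a minimal sketch (not Flashpoint's actual tooling), assuming posts arrive as hypothetical `(timestamp, text)` pairs, the query and a naive spike check might look like this; the 3x-baseline threshold is an arbitrary assumption:

```python
import re
from collections import Counter
from datetime import datetime

# Hypothetical input shape: (ISO-8601 timestamp, post text).
Post = tuple[str, str]

RALLY = re.compile(r"\bral(?:ly|lies)\b")


def matches_query(text: str) -> bool:
    """'immigration' AND 'Texas' AND ('protest' OR 'rally'/'rallies'), case-insensitive."""
    t = text.lower()
    return (
        "immigration" in t
        and "texas" in t
        and ("protest" in t or RALLY.search(t) is not None)
    )


def monthly_counts(posts: list[Post]) -> dict[str, int]:
    """Count matching posts per month (YYYY-MM); months with no matches are omitted."""
    counts: Counter = Counter()
    for ts, text in posts:
        if matches_query(text):
            counts[datetime.fromisoformat(ts).strftime("%Y-%m")] += 1
    return dict(sorted(counts.items()))


def flag_spikes(counts: dict[str, int], factor: float = 3.0) -> list[str]:
    """Flag months whose count is at least `factor` times the mean of earlier months.

    The default factor of 3.0 is an illustrative assumption, not a tuned value.
    """
    spikes: list[str] = []
    history: list[int] = []
    for month, n in counts.items():
        if history and n >= factor * sum(history) / len(history):
            spikes.append(month)
        history.append(n)
    return spikes


posts = [
    ("2023-12-05T10:00:00", "Immigration rally planned in Texas"),
    ("2024-01-10T09:00:00", "Texas immigration protest grows"),
    ("2024-01-11T09:00:00", "Protest in Texas over immigration"),
    ("2024-01-12T09:00:00", "Texas rallies on immigration"),
    ("2024-01-13T09:00:00", "Weather in Texas"),
]
counts = monthly_counts(posts)  # {'2023-12': 1, '2024-01': 3}
print(flag_spikes(counts))      # ['2024-01']
```

Comparing each month to the mean of earlier months is the simplest possible baseline; a real pipeline would likely also normalize by total collected volume, since more matching posts can simply mean more posts were collected.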

Flashpoint analysts also observed a photo of a man holding a PMC Wagner flag being shared online, with a caption claiming it was taken near the US-Mexico border.

Russian actors have also previously posed as Black Lives Matter (BLM) activists, while Russian news outlets portrayed the movement as aggressive, amplifying negative coverage.

2. Enhance situational awareness

Situational awareness, a fundamental aspect of threat intelligence, means understanding your surroundings: knowing where you are, where you intend to go, and what risks nearby people or objects may pose. Its sources have traditionally included mobile radios, landlines, emails, cell and satellite phones, traffic cameras, and more; in today’s digital age, they also include social media, paste sites, and the deep and dark web.

Social media manipulation is a common element of Russian disinformation tradecraft. Threat actors often create bot networks that push a large volume of fake content through thousands of inauthentic accounts, creating the impression of widespread support for, or opposition to, a given message.
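One common heuristic for spotting bot-network amplification is to look for many distinct accounts posting near-identical text within a short time window. The sketch below assumes hypothetical `(account, timestamp, text)` tuples and illustrative thresholds (three accounts, ten minutes); real coordinated-behavior detection combines many more signals, such as account age and posting cadence.

```python
import re
from collections import defaultdict
from datetime import datetime, timedelta


def normalize(text: str) -> str:
    """Lowercase, drop URLs, and collapse whitespace so lightly varied copies match."""
    text = re.sub(r"https?://\S+", "", text.lower())
    return " ".join(text.split())


def coordinated_clusters(posts, min_accounts=3, window=timedelta(minutes=10)):
    """Find messages posted by >= min_accounts distinct accounts within `window`.

    `posts` is an iterable of hypothetical (account, ISO timestamp, text) tuples.
    Returns (normalized message, sorted accounts) for the first qualifying
    window of each message. Thresholds are illustrative assumptions.
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        by_text[normalize(text)].append((datetime.fromisoformat(ts), account))

    clusters = []
    for message, items in by_text.items():
        items.sort()  # chronological order
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                clusters.append((message, sorted(accounts)))
                break
    return clusters


posts = [
    ("acct_1", "2024-01-20T12:00:00", "Civil war is coming! https://t.co/abc"),
    ("acct_2", "2024-01-20T12:02:00", "civil war is   coming!"),
    ("acct_3", "2024-01-20T12:05:00", "Civil war is coming! https://t.co/xyz"),
    ("acct_4", "2024-01-20T15:00:00", "Nice weather today"),
]
print(coordinated_clusters(posts))
# [('civil war is coming!', ['acct_1', 'acct_2', 'acct_3'])]
```

Matching on exact normalized text keeps the sketch simple; near-duplicate techniques such as shingling would also catch paraphrased copies that this version misses.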

Russia is known to operate bot networks worldwide, but its efforts have lately been concentrated in Ukraine, Moldova, Kazakhstan, and Russia itself. Ukraine recently reported arresting two threat actors in Kyiv who ran a farm of social media and messenger accounts; the bot farm had generated up to 1,000 fake social media accounts per day.

Monitoring illicit forums and the deep and dark web

Russian disinformation narratives often start on Russian-owned or sponsored illicit forums, Telegram channels, and fake news sources. When new narratives or conspiracy theories begin to spike in popularity, the initial sharing and activity can often be traced back to Russian-owned channels.

Operation Black Hole illustrates this pattern: Flashpoint traced the first mentions of the term to pro-Russia Telegram channels. Our analysts then attributed the widespread sharing of the message to channels reportedly connected with the Russian Federal Security Service (FSB), as well as to the main state propaganda sources.
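Tracing a narrative back to its first mentions, and then identifying which channels amplified it, can be approximated with two simple queries over collected posts. This is a sketch under stated assumptions: the `(channel, timestamp, text)` input shape and the channel names are hypothetical, and counts alone cannot attribute a channel to a sponsor such as the FSB; that step requires analyst judgment.

```python
from collections import Counter
from datetime import datetime

# Hypothetical input shape: (channel, ISO timestamp, text).


def first_mentions(posts, term, n=3):
    """Return the n earliest (timestamp, channel) pairs whose text mentions `term`."""
    needle = term.lower()
    hits = sorted(
        (datetime.fromisoformat(ts), channel)
        for channel, ts, text in posts
        if needle in text.lower()
    )
    return hits[:n]


def top_amplifiers(posts, term, k=3):
    """Rank channels by how many of their posts mention `term`."""
    needle = term.lower()
    counts = Counter(channel for channel, _, text in posts if needle in text.lower())
    return counts.most_common(k)


posts = [
    ("channel_b", "2023-11-02T08:00:00", "Ukraine is a black hole for aid"),
    ("channel_a", "2023-11-01T07:00:00", "Aid vanishes into a Black Hole"),
    ("channel_c", "2023-11-03T09:00:00", "The black hole again"),
    ("channel_c", "2023-11-04T09:00:00", "Black hole, everyone says"),
    ("channel_d", "2023-11-04T10:00:00", "Unrelated post"),
]
print([ch for _, ch in first_mentions(posts, "black hole", n=2)])  # ['channel_a', 'channel_b']
print(top_amplifiers(posts, "black hole", k=1))                    # [('channel_c', 2)]
```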

3. Integrate cyber and physical security measures

Protecting against disinformation is critical because it can introduce additional and unforeseen physical and cyber threats.

Interconnected threats: The Russia-Ukraine War

The Russia-Ukraine War has been an unprecedented display of the convergence of physical, geopolitical, and cyber threats. Starting in 2014, Russia used propaganda and disinformation to recruit individuals into pro-Russia militias fighting in the self-proclaimed Donetsk and Luhansk People’s Republics (DPR and LPR). Before Russia’s 2022 invasion of Ukraine, Flashpoint reported a significant increase in DPR and LPR recruitment and fundraising efforts on various Russian-language social media and chat platforms. By monitoring Russian soldiers’ social media activity, Flashpoint was also able to track evidence of Russian military units mobilizing to strategic locations along the Ukrainian border.

On the cyber side, disinformation narratives also drew Russian-affiliated and state-sponsored threat actor groups into numerous DDoS attacks, website defacements, and destructive malware attacks, all carried out in the name of hacktivism.

Enabling a holistic defense strategy using OSINT

Looking at the general threat landscape, OSINT helps government agencies and private sector organizations better protect people, places, and assets. By leveraging both finished intelligence and tailored insights, they can enhance their security posture and proactively counter sophisticated threats. Improved situational awareness allows users to safeguard brick-and-mortar assets and ensure operational continuity amid potential civil unrest or attempts to incite protests or violence.

At the same time, OSINT empowers Cyber Threat Intelligence teams to be proactive in shutting down potential threats by identifying and taking down proxy accounts, dangerous websites, and malicious IP addresses associated with known disinformation campaigns.
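Taking down proxy accounts, websites, and IP addresses starts with matching what you observe against indicators tied to known campaigns. A minimal sketch using Python's standard `ipaddress` module, assuming a hypothetical blocklist of CIDR ranges and domains (the example values are reserved documentation ranges and names, not real indicators):

```python
import ipaddress


def load_networks(cidrs):
    """Parse CIDR strings into network objects (strict=False tolerates host bits)."""
    return [ipaddress.ip_network(c, strict=False) for c in cidrs]


def ip_is_listed(ip, networks):
    """True if `ip` falls inside any blocklisted network (IPv4 or IPv6)."""
    addr = ipaddress.ip_address(ip)
    # Membership tests across IP versions simply return False.
    return any(addr in net for net in networks)


def domain_is_listed(domain, blocked):
    """True if `domain` or any parent domain is blocklisted,
    so 'cdn.fake-news.example' matches a listed 'fake-news.example'."""
    labels = domain.lower().rstrip(".").split(".")
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


# Hypothetical indicators, using reserved documentation ranges and names.
networks = load_networks(["203.0.113.0/24", "2001:db8::/32"])
blocked_domains = {"fake-news.example"}

print(ip_is_listed("203.0.113.45", networks))                      # True
print(ip_is_listed("192.0.2.1", networks))                         # False
print(domain_is_listed("cdn.fake-news.example", blocked_domains))  # True
print(domain_is_listed("news.example", blocked_domains))           # False
```

Matching parent domains catches subdomains spun up under a known campaign domain, and `strict=False` lets an entry such as `203.0.113.7/24` parse as `203.0.113.0/24` instead of raising an error.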

Protect people, places, and assets with Flashpoint

Government agencies and organizations can leverage Flashpoint’s Echosec solution to integrate physical and cyber security measures. In addition, new AI-assisted features let customers ask plain-language questions and receive answers in real time about emerging narratives, disinformation campaigns, public sentiment, and threat actor involvement.

To learn more about how OSINT keeps organizations safe, check out our latest on-demand webinar “Protecting Yourself in OSINT: 10 Crucial Tips for Secure Investigations.” Or, sign up for a demo today.
