By Marek Surovič and Henrich Lauko

EuroLLVM is a developer meeting focused on projects under the LLVM Foundation umbrella that live in the LLVM GitHub monorepo, like Clang and—more recently, thanks to machine learning research—the MLIR framework. Trail of Bits, which has a history in compiler engineering and all things LLVM, sent a bunch of our compiler specialists to the meeting, where we presented on two of our projects: VAST, an MLIR-based compiler for C/C++, and PoTATo, a novel points-to analysis approach for MLIR. In this blog post, we share our takeaways and experiences from the developer meeting, which spanned two days and included a one-day pre-conference workshop.

Security awareness

A noticeable difference from previous years was the emerging focus on security. There appears to be a growing drive within the LLVM community to enhance the security of the entire software ecosystem. This represents a relatively new development in the compiler community, with LLVM leadership actively seeking expertise on the topic.

The opening keynote introduced the security theme, asserting it has become the third pillar of compilers alongside optimization and translation. Kristof Beyls of ARM delivered the keynote, providing a brief history of how the concerns and role of compilers have evolved. He emphasized that security is now a major concern, alongside correctness and performance.

The technical part of the keynote raised an interesting question: Does anyone verify that security mitigations are correctly applied, or applied at all? To answer this question, Kristof implemented a static binary analysis tool using BOLT. The mitigations Kristof picked to verify were -fstack-clash-protection and -mbranch-protection=standard, particularly its pac-ret mechanism.

The evaluation of the BOLT-based scanner was conducted on libraries within a Fedora 39 AArch64-linux distribution, comprising approximately 3,000 installed packages. For pac-ret, analysis revealed 2.5 million return instructions, with 46 thousand lacking proper protection. Scanning 1,920 libraries that use -fstack-clash-protection identified 39 as potentially vulnerable, although some could be false positives.

An intriguing discussion arose regarding the preference for BOLT over tools like IDA, Ghidra, or Angr from the reverse-engineering domain. The distinction lies in BOLT’s suitability for batch processing of binaries, unlike the user-interactivity focus of IDA or Ghidra. Furthermore, the advantage of BOLT is that it supports the latest target architecture changes since it is part of the compilation pipeline, whereas reverse engineering tools often lag behind, especially concerning more niche instructions.

For further details, Kristof’s RFC on the LLVM discourse provides additional information.

For those interested in compiler hardening, the OpenSSF guidelines offer a comprehensive overview. Additionally, for a more in-depth discussion of security for compiler engineers, we suggest reading the Low Level Software Security online book. It’s still a work in progress, and contributions to the guidelines are welcome.

One notable talk on program analysis and debugging was Incremental Symbolic Execution for the Clang Static Analyzer, which discussed how the Clang Static Analyzer can now cache results. This innovation helps keep diagnostic information relevant across codebase changes and minimizes the need to invoke the analyzer. Another highlight was Mojo Debugging: Extending MLIR and LLDB, which explored new developments in LLDB, allowing its use outside the Clang environment. This talk also covered the potential upstreaming of a debug dialect from the Modular warehouse.

MLIR is not (only) about machine learning

MLIR is a compiler infrastructure project that gained traction thanks to the machine learning (ML) boom. The ML in MLIR, however, stands for Multi-Level, and the project allows for much more than just tinkering with tensors. SiFive, renowned for their work on RISC-V, employs it in circuit design, among other applications. Compilers for general-purpose languages using MLIR are also emerging, such as JSIR Dialect for JavaScript, Mojo as a superset of Python, ClangIR, and our very own VAST for C/C++.

The MLIR theme of this developer meeting could be summarized as “Figuring out how to make the most of LLVM and MLIR in a shared pipeline.” A number of speakers presented work that, in one way or another, concluded that many performance optimizations are better done in MLIR thanks to its better abstraction. LLVM then is mainly responsible for code generation to the target machine code.

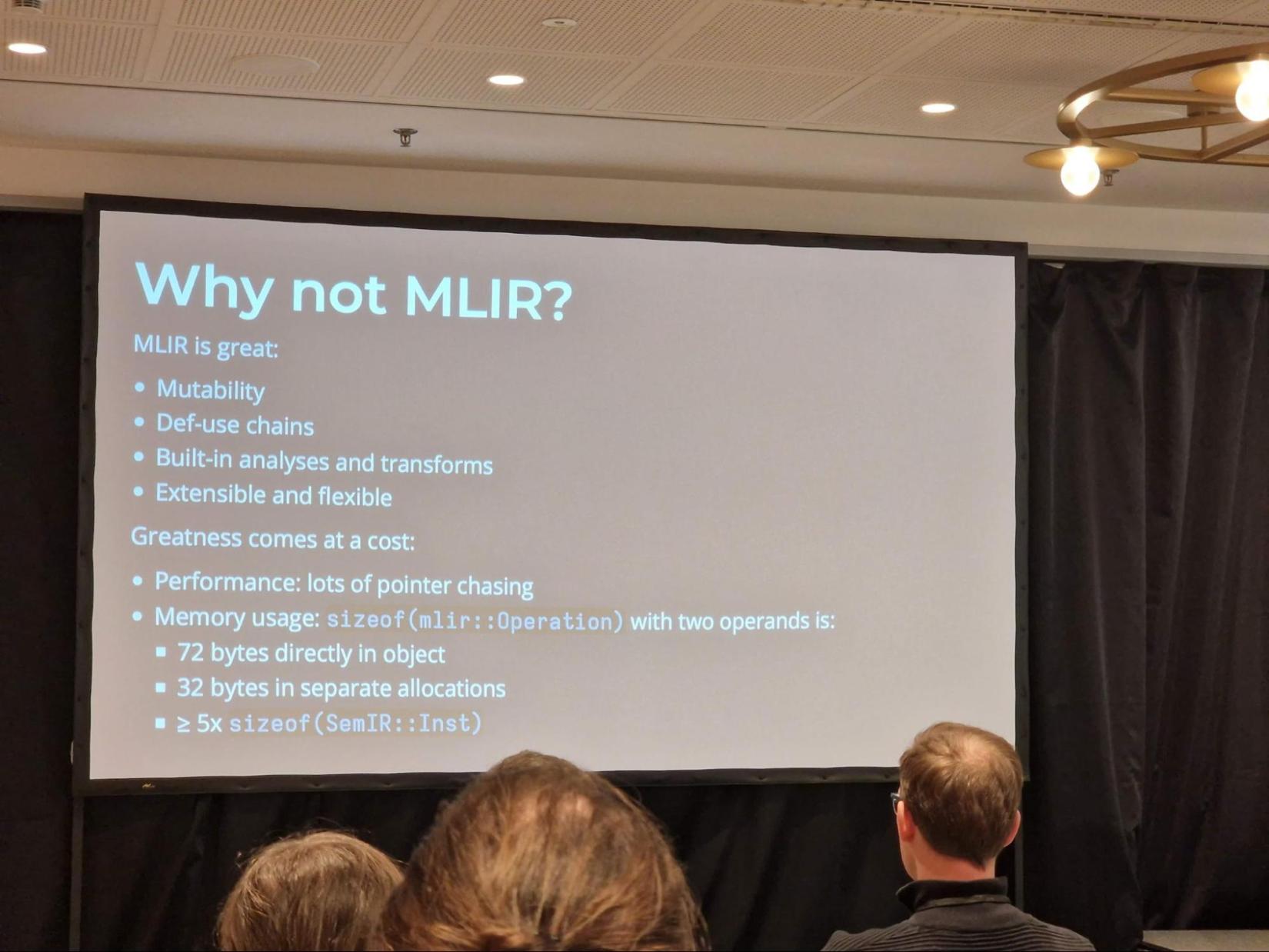

After going over all the ways MLIR is slow compared to LLVM, Jeff Niu (Modular) remarked that in the Mojo compiler, most of the runtime is still spent in LLVM. The reason is simple: there’s just more input to process when code gets compiled down to LLVM.

A team from TU Munich even opted to skip LLVM IR entirely and generate machine-IR (MIR) directly, yielding ~20% performance improvement in a Just-in-Time (JIT) compilation workload.

Those intrigued by MLIR internals should definitely catch the second conference keynote on Efficient Idioms in MLIR. The keynote delved into performance comparisons of different MLIR primitives and patterns. It gave developers a good intuition about the costs of performing operations such as obtaining an attribute or iterating or mutating the IR. On a similar topic, the talk Deep Dive on Interfaces Implementation gave a better insight into a cornerstone of MLIR genericity. These interfaces empower dialects to articulate common concepts like side effects, symbols, and control flow interactions. The talk elucidated their implementation details and the associated overhead incurred in striving for generality.

Region-based analysis

Another interesting trend we’ve noticed is that several independent teams have found that analyses traditionally defined using control flow graphs based on basic blocks may achieve better runtime performance when performed using a representation with region-based control flow. This improvement is mainly because analyses do not need to reconstruct loop information, and the overall representation is smaller and therefore quicker to analyze. The prime example presented was dataflow analysis done inside the Mojo compiler.

For cases like Mojo, where you’re starting with source code and compiling down an MLIR-based pipeline, switching to region-based control flow for analyses is only a matter of doing the analysis earlier in the pipeline. Other users are not so lucky and need to construct regions from traditional control flow graphs. If you’re one of those people, you’re not alone. Teams in the high-performance computing industry are always looking for ways to squeeze more performance from their loops, and having loops explicitly represented as regions instead of hunting for them in a graph makes a lot of things easier. This is why MLIR now has a pass to lift control flow graphs to regions-based control flow. Sounds familiar? Under the hood, our LLVM-to-C decompiler Rellic does something very similar.

Not everything is sunshine and rainbows when using regions for control flow, though. The regions need to have a single-entry and single-exit. Many programming languages, however, allow constructs like break and continue inside loop bodies. These are considered abnormal entries or exits. Thankfully, with so much chatter around regions, core MLIR developers have noticed and are cooking up a major new feature to address this. As presented during the MLIR workshop, the newly designed region-based control flow will allow specifying the semantics of constructs like continue or break. The idea is pretty simple: these operations will yield a termination signal and forward control flow to some parent region that captures this signal. Unfortunately, this still does not allow us to represent gotos in our high-level representation, as the signaling mechanism does allow users to pass control-flow only to parent regions.

C/C++ successor languages

The last major topic at the conference was, as is expected in light of recent developments, successor languages to C/C++. One such effort is Carbon, which had a dedicated panel. The panel questions ranged from technical ones, like how refactoring tools will be supported, to more managerial ones, like how Carbon will avoid being overly influenced by the needs of Google, which is currently the main supporter of the project. For a more comprehensive summary of the panel, check out this excellent blog post by Alex Bradbury.

Other C++ usurpers had their mentions, too—particularly Rust and Swift. Both languages recognize the authority of C++ in the software ecosystem and have their own C++ interoperability story. Google’s Crubit was mentioned for Rust during the Carbon panel, and Swift had a separate talk on interoperability by Egor Zhdan of Apple.

Our contributions

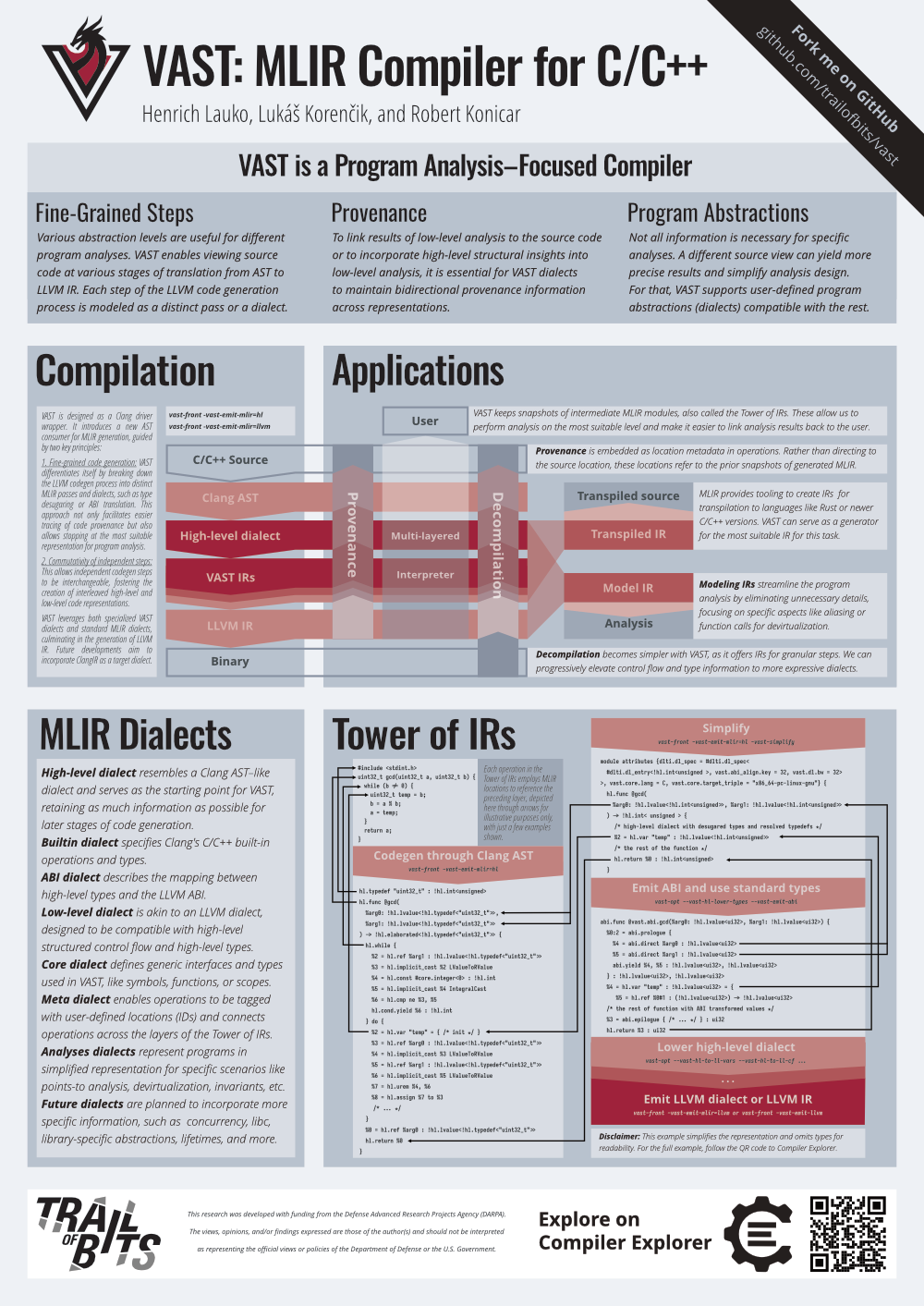

Our own Henrich Lauko gave a talk on a new feature coming to VAST, our MLIR-based compiler for C/C++: the Tower of IRs. The big picture idea here is that VAST is a MLIR-based C/C++ compiler IR project that offers many layers of abstraction. Users of VAST then can pick the right abstractions for their analysis or transformation use-case. However, there are numerous valuable LLVM-based tools, and it would be unfortunate if we couldn’t use them with our higher-level MLIR representation. This is precisely why we developed the Tower of IRs. It enables users to bridge low-level analysis with high-level abstractions.

The Tower of IRs introduces a mechanism that allows users to take snapshots of IR between and after transformations and link them together, creating a chain of provenance. This way, when a piece of code changes, there’s always a chain of references back to the original input. The keen reader already has a grin on their face.

The demo use case Henrich presented was repurposing LLVM analyses in MLIR by using the tower to bring the input C source all the way down to LLVM, perform a dependency analysis, and translate analysis results all the way back to C via the provenance links in the tower.

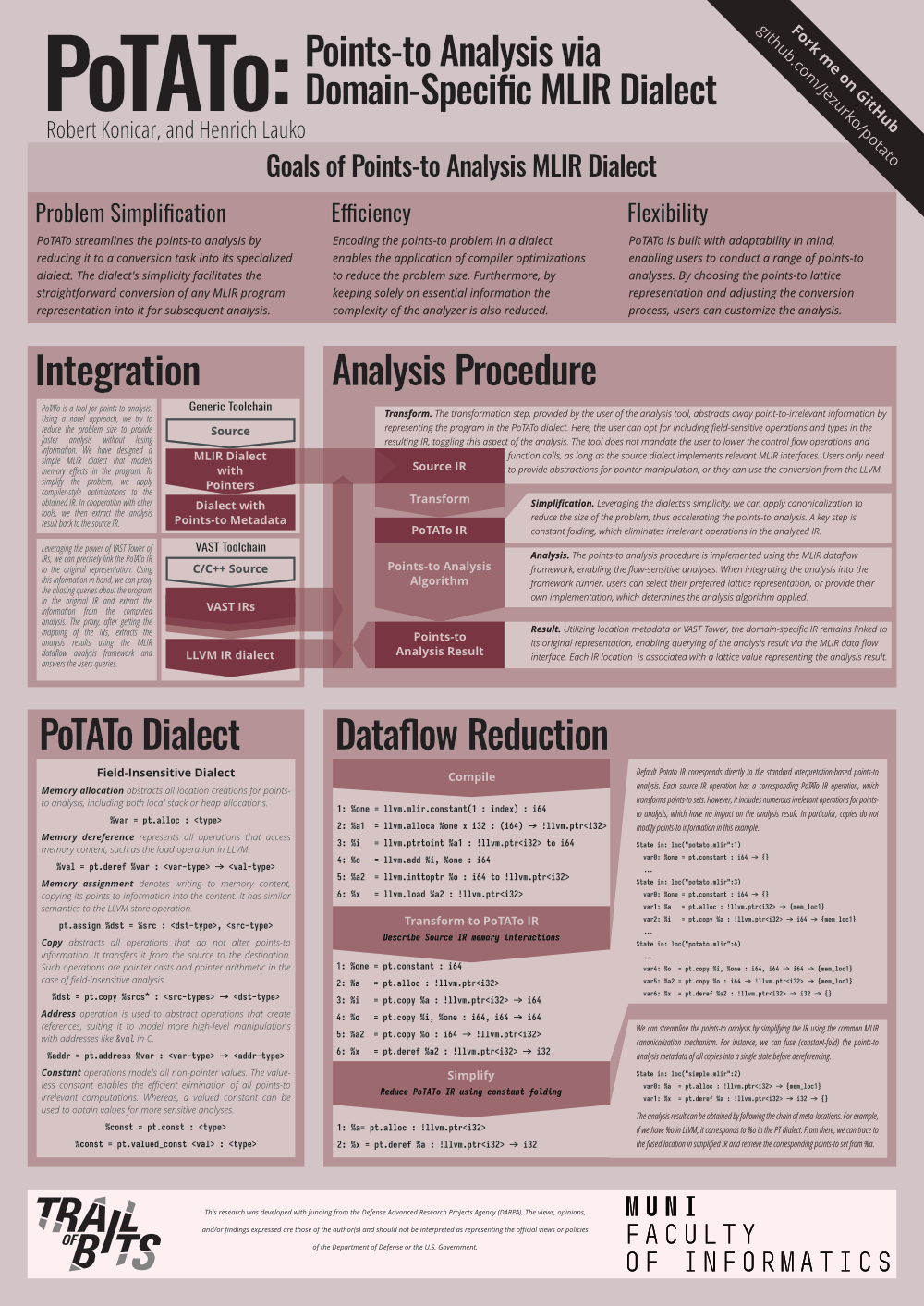

Along with Henrich, Robert Konicar presented the starchy fruits of his student labor in the form of PoTATo. The project implements a simple MLIR dialect tailored towards implementing points-to analyses. The idea is to translate memory operations from a source dialect to the PoTATo dialect, do some basic optimizations, and then run a points-to analysis of your choosing, yielding alias sets. To get relevant information back to the original code, one could of course use the VAST Tower of IRs. The results that Robert presented on his poster were promising: applying basic copy-propagation before points-to analysis significantly reduced the problem size.

AI Corridor talks

Besides attending the official talks and workshops, the Trail of Bits envoys spent a lot of time chatting with people during breaks and at the banquet. The undercurrent of many of those conversations was AI and machine learning in all of its various forms. Because EuroLLVM focuses on languages, compilers, and hardware runtimes, the conversations usually took the form of “how do we best serve this new computing paradigm?”. The hardware people are interested in how to generate code for specialized accelerators; the compiler crowd is optimizing linear algebra in every way imaginable; and languages are doing their best to meet data scientists where they are.

Discussions about projects that went the other way—that is, “How can machine learning help people in the LLVM crowd?”—were few and far between. These projects typically did research into various data gathered in the domains around LLVM in order to make sense out of them using machine learning methods. From what we could see, things like LLMs and GANs were not really mentioned in any way. Seems like an opportunity for fresh ideas!