2024-6-14 18:37:23 Author: securityboulevard.com

The evolution of phishing has always been characterized by a push and pull between quality and quantity. For decades, quantity prevailed, and the vast majority of phishing campaigns followed the same basic logic as spam. "Spray and pray," "scale over sophistication," and "quantity over quality" were the mantras of malicious actors who understood that even a 0.1% success rate, applied to millions of targets, could still pay off handsomely.
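As a rough illustration of that logic, assume a campaign sent to one million recipients (an arbitrary round figure, not one taken from any dataset). Writing $r$ for the success rate and $N_{\text{sent}}$ for the number of recipients, both labels introduced here for illustration, a 0.1% success rate still yields a thousand successful compromises:

```latex
N_{\text{victims}} = r \times N_{\text{sent}} = 0.001 \times 1{,}000{,}000 = 1{,}000
```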

More recently, however, advances in defensive security capabilities have made that brand of low-effort, high-volume phishing far less effective. Naturally, this fueled the growth of more sophisticated strategies, such as spear phishing and business email compromise (BEC), which rely on carefully crafted, convincing messages tailored to specific groups, organizations or individuals.

Although these types of attacks have been on the rise for some time, their growth has, until recently, been tempered by the inherent limitations of the strategy. Researching targets, personalizing messages and ensuring linguistic accuracy are all time-consuming processes, and they have served as natural constraints on how far such attacks can scale.

GenAI Renders Traditional Trade-Offs Obsolete

However, those constraints appear to be loosening. With the recent emergence of commercially available generative AI (GenAI) tools like ChatGPT, what once felt like a fundamental law of the universe is quickly becoming a thing of the past. With a single, sentence-length prompt, attackers can now generate elaborate, expertly written phishing emails in mere seconds. They can do so in virtually any language, style or format, even imitating a particular individual's writing style when given samples of that person's writing.

As if that weren't enough, malicious actors can also use GenAI to expedite and automate much of the work that goes into researching targets. Last November, OpenAI announced that users would be able to build and share custom AI agents (called GPTs) to automate or assist with any number of tasks, a capability that could just as easily be turned to researching potential targets for BEC attacks.

Websites like theorg.com, which lets companies publish their org charts for anyone to see, are proliferating on the web. One can easily imagine a bad actor creating a "GPT" that sifts through those charts for intel on potential targets: their role, who they report to, who reports to them, who their recurring partners and vendors are, and so on.

With research on autopilot and message crafting requiring nothing more than simple, single-sentence prompts, a high-profile BEC campaign that might once have taken days or weeks to prepare could now be developed and deployed in a couple of hours.

From Possibility to Reality: BEC Skyrockets the World Over

It would be hard to overstate the scale of GenAI's impact on cybersecurity over the past 14 months. However, to say it came as a total surprise would be disingenuous. Researchers have been studying AI's potential for harm in the realm of cybersecurity for years. In a 2021 study, for example, a group of researchers demonstrated how generative AI (using OpenAI's GPT-3 technology) could be used to generate dramatically more sophisticated, personalized and convincing phishing emails at greater frequency and scale, even developing a means of automating the personalization process to establish a "targeted phishing pipeline."

Roughly a year later, OpenAI released its large language model (LLM) to the world as ChatGPT, which quickly became the fastest-growing consumer application of all time. Almost immediately, malicious actors began looking for ways to turn this groundbreaking technology to their advantage. Although companies like OpenAI have implemented safeguards to prevent malicious use, there has been no shortage of research demonstrating how easily these guardrails can be circumvented through clever prompting alone. What's more, purpose-built black-hat tools such as FraudGPT and WormGPT have already been made widely available on the dark web.

IRONSCALES platform data suggests that this shift is already showing up in real-world attack volumes. From Q2 2022 to Q2 2023, the total volume of BEC attempts increased by 21% worldwide. Meanwhile, advanced email attacks of all kinds increased by 24% globally over just the first two quarters of 2023.

Text is Just the Beginning: AI-Generated Image-Based Phishing Hits Africa

When we talk about generative AI, we most often think of tools like ChatGPT or Bard, which focus on generating outputs in the form of written text. However, as the fervor around generative AI grows, we are seeing more and more consumer-facing applications using these same core technologies to develop a variety of media — including images, video, speech and even music. Programs like Midjourney and DALL-E, for example, allow users to generate professional-grade digital imagery using nothing more than simple, everyday language prompts.

Never the type to let a good opportunity pass by, threat actors are already seizing on these capabilities to enhance their phishing campaigns. And as AI-enabled defensive cybersecurity solutions get better at detecting even the more advanced forms of phishing, threat actors are turning to image files as a way to slip past organizations' defenses.

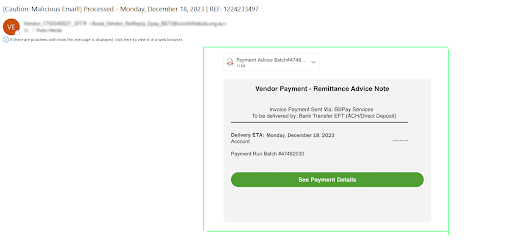

Below, you’ll see a textbook example of an image-based phishing attack taken from a real-world IRONSCALES customer:

Although the message appears to consist of nothing but text, everything inside the green box (added for emphasis) is in fact a .png file, and clicking anywhere within that area takes the user to a malicious website. Because the lure's wording exists only as pixels in an image, there is no text for the natural language processing (NLP) capabilities of today's AI-enabled defensive cybersecurity tools to analyze, which is how attackers evade them.
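One simple, defensive way to flag messages like this is to check whether an HTML email body contains a hyperlinked image while offering almost no visible text. The sketch below uses only Python's standard-library `html.parser`; the names `LinkedImageScanner` and `looks_like_image_only_lure` and the 40-character threshold are hypothetical choices for illustration, not IRONSCALES' detection logic.

```python
from html.parser import HTMLParser


class LinkedImageScanner(HTMLParser):
    """Collects visible text and the src of every <img> nested inside an <a href=...>."""

    def __init__(self) -> None:
        super().__init__()
        self.link_stack: list[bool] = []   # one entry per open <a>; True if it has an href
        self.skip_depth = 0                # > 0 while inside <script> or <style>
        self.linked_images: list[str] = []
        self.text_chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in ("script", "style"):
            self.skip_depth += 1
        elif tag == "a":
            self.link_stack.append(bool(attr_map.get("href")))
        elif tag == "img" and any(self.link_stack):
            # An image wrapped in a hyperlink: the whole picture is clickable.
            self.linked_images.append(attr_map.get("src") or "")

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == "a" and self.link_stack:
            self.link_stack.pop()

    def handle_data(self, data):
        # Ignore script/style contents and whitespace-only runs.
        if self.skip_depth == 0 and data.strip():
            self.text_chunks.append(data.strip())


def looks_like_image_only_lure(html_body: str, max_text_chars: int = 40) -> bool:
    """Hypothetical heuristic: flag bodies with at least one linked image
    but too little visible text for an NLP model to analyze."""
    scanner = LinkedImageScanner()
    scanner.feed(html_body)
    scanner.close()
    visible_text = " ".join(scanner.text_chunks)
    return bool(scanner.linked_images) and len(visible_text) <= max_text_chars
```

For example, a body consisting only of `<a href="https://example.com/verify"><img src="cid:notice.png"></a>` is flagged, while an ordinary paragraph with no linked image is not. The check is deliberately crude: legitimate newsletters often use linked banner images, so in practice it would likely be one signal among several (such as OCR of the image, URL reputation and sender history) rather than a verdict on its own.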

Unsurprisingly, attackers are doubling down on this strategy. To understand how the trend is playing out regionally, IRONSCALES examined the platform data of its entire African customer base. As illustrated in the chart below, IRONSCALES' African customers saw a dramatic increase in image-based phishing attempts over the past year.

Although the rise in image-based phishing is a decidedly global trend, the data above shows that African businesses are not immune and have seen sharper increases than many other regions. Attacks using .png files specifically show a similarly dramatic year-over-year increase of roughly 175% between 2022 and 2023.
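To put that percentage in concrete terms: a year-over-year increase of about 175% means the 2023 volume was roughly 2.75 times the 2022 volume. The absolute counts are not given, so $N_{2022}$ and $N_{2023}$ below are placeholder symbols for the yearly .png-based attack volumes:

```latex
\frac{N_{2023} - N_{2022}}{N_{2022}} \approx 1.75
\quad\Longrightarrow\quad
N_{2023} \approx (1 + 1.75)\,N_{2022} = 2.75\,N_{2022}
```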

No Matter What the Format, AI-Generated Phishing Spells Trouble for Global Businesses

Whether it's purely text-based social engineering or advanced, image-based attacks, one thing is certain: generative AI is fueling a whole new age of advanced phishing. And no matter where you look, organizations appear ill-prepared to take on this new flood of "spam-volume" BEC.

According to a recent analysis by ISC2, the world needs an additional four million accredited cybersecurity professionals to close the current global cybersecurity skills gap. These skilled-labor shortages are even more acute in certain regions of the world, Africa included.

Rather than viewing these gaps solely as a source of doom and gloom, however, we might be better off treating them as opportunities. A recent study by the International Finance Corporation (IFC) found that, by 2030, there will be approximately 230 million jobs across the continent requiring some form of digital skills. That demand could give rise to 650 million training opportunities and an estimated USD 130 billion in market value.

While AI-enabled defenses are undoubtedly the best weapon against AI-enabled attacks, those tools work best when they are used to augment human intelligence and improve efficiency. Given the current state of affairs, combining AI with employee education may well lead to meaningful, and very human, opportunities on the African continent and worldwide.
