2024-6-1 21:30:20 Author: hackernoon.com(查看原文) 阅读量:1 收藏

Authors:

(1) Praveen Tirupattur, University of Central Florida.

Table of Links

- Abstract

- Acknowledgements

- Chapter 1: Introduction

- Chapter 2: Related Work

- Chapter 3: Proposed Approach

- Chapter 4: Experiments and Results

- Chapter 5: Conclusions and Future Work

- Bibliography

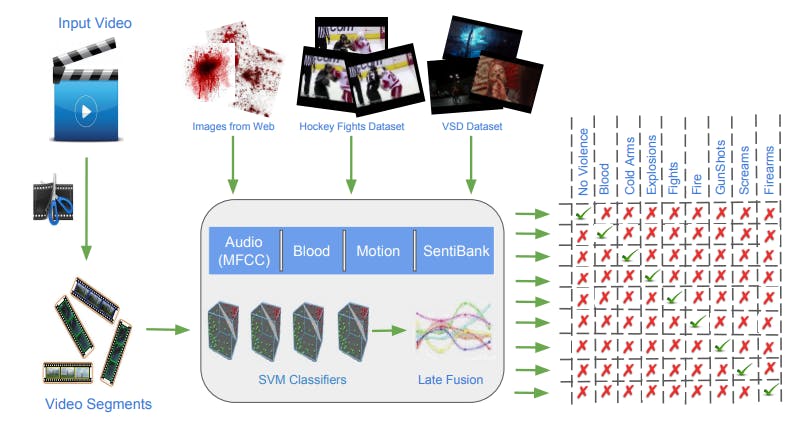

3. Proposed Approach

This chapter provides a detailed description of the approach followed in this work. The proposed approach consists of two main phases: Training and Testing. During the training phase, the system learns to detect the category of violence present in a video by training classifiers with visual and audio features extracted from the training dataset. In the testing phase, the system is evaluated by calculating the accuracy of the system in detecting violence for a given video. Each of these phases is explained in detail in the following sections. Please refer to Figure 3.1 for the overview of the proposed approach. Finally, a section describing the metrics used for evaluating the system is presented.

3.1. Training

In this section, the details of the steps involved in the training phase are discussed. The proposed training approach has three main steps: Feature extraction, Feature Classification, and Feature fusion. Each of these three steps is explained in detail in the following sections. In the first two steps of this phase, audio and visual features from the video segments containing violence and no violence are extracted and are used to train two-class SVM classifiers. Then in the feature fusion step, feature weights are calculated for each violence type targeted by the system. These feature weights are obtained by performing a grid search on the possible combination of weights and finding the best combination which optimizes the performance of the system on the validation set. The optimization criteria here is the minimization of EER (Equal Error Rate) of the system. To find these weights, a dataset disjoint from the training set is used, which contains violent videos of all targeted categories. Please refer to Chapter 1 for details of targeted categories.

Many researchers have tried to solve the Violence detection problem using different audio and visual features. A detailed information on violence detection related research is presented in Chapter 2. In the previous works, the most common visual features used to detect violence are motion and blood and the most common audio feature used is the MFCC. Along with these three common low-level features, this proposed approach also includes SentiBank (Borth et al. [4]), which is a visual feature representing sentiments in images. The details of each of the features and its importance in violence detection and the extraction methods used are described in the following sections.

3.1.1.1. MFCC-Features

Audio features play a very important role in detecting events such as gunshots, explosions etc, which are very common in violent scenes. Many researchers have used audio features for violence detection and have produced good results. Even though some of the earlier works looked at energy entropy [Nam et al. [41]] in the audio signal, most of them used MFCC features to describe audio content in the videos. These MFCC features are commonly used in voice and audio recognition.

In this work, MFCC features provided in the VSD2014 dataset are used to train the SVM classifier while developing the system. During the evaluation, MFCC features are extracted from the audio stream of the input video, with window size set to the number of audio samples per frame in the audio stream. This is calculated by dividing the audio sampling rate with fps (frames per second) value of the video. For example, if the audio sampling rate is 44,100 Hz and the video is encoded with 25 fps, then each window has 1,764 audio samples. The window overlap region is set to zero and 22 MFCC are computed for each window. With this setup, a 22-dimensional MFCC feature vector is obtained for each video frame.

3.1.1.2. Blood-Features

Blood is the most common visible element in scenes with extreme violence. For example, scenes containing beating, stabbing, gunfire, and explosions. In many earlier works on violence detection, detection of pixels representing blood is used as it is an important indicator of violence. To detect blood in a frame, a pre-defined color table is used in most of the earlier works, for example, Nam et al. [41] and Lin and Wang [38]. Other approaches to detecting blood, such as the use of Kohonen’s Self-Organizing Map (SOM)(Clarin et al. [12]), are also used in some of the earlier works.

In this work, a color model is used to detect pixels representing blood. It is represented using a three-dimensional histogram with one dimension each for red, green and blue values of the pixels. In each dimension, there are 32 bins with each bin having width of 8 (32 × 8 = 256). This blood model is generated in two steps. In the first step, the blood model is bootstrapped by using the RGB (Red, Green, Blue) values of the pixels containing blood. The 3 dimensional binned histogram is populated with the RGB values of these pixels containing blood. The value in the bin to which a blood pixel belongs to is incremented by 1 each time a new blood pixel is added to the model. Once a sufficient number of bloody pixels are used to fill the histogram, the values in the bins are normalized by the sum of all the values. The values in each of the bins now represent the probability of a pixel showing blood given its RGB values. To fill the blood model, pixel containing blood are cropped from various images containing blood which are downloaded from Google. Cropping of the regions containing only blood pixels is done manually. Please refer to the image Figure 3.2 for samples of the cropped regions, each of size 20 pixels × 20 pixels.

Once the model is bootstrapped, it is used to detect blood in the images downloaded from Google. Only pixels that have a high probability of representing blood are used to further extend the bootstrapped model. Downloading the images and extending the blood model is done automatically. To download images from Google which contain blood, search words such as “bloody images”, “bloody scenes”, “bleeding”, “real blood splatter”, “blood dripping” are used. Some of the samples of the downloaded images can be seen in the Figure 3.3. Pixel values with high blood probability are added to the blood model until it has, at least, a million pixel values.

This blood model alone is not sufficient to accurately detect blood. Along with this blood model, there is a need for a non-blood model as well. To generate this, similar to the earlier approach, images are downloaded from Google which do not contain blood and the RGB pixel values from these images are used to build the non-blood model. Some samples images used to generate this non-blood model are shown in Figure 3.3. Now using these blood and non-blood models, the probability of a pixel representing blood is calculated as follows

Using this formula, for a given image, the probability of each pixel representing blood is calculated and Blood Probability Map (BPM) is generated. This map has the same size as that of the input image and contains the blood probability values for every pixel. This BPM is binarized using a threshold value to generate the final binarized BPM. The threshold used to binarize the BPM is estimated (Jones and Rehg [35]). From this binarized BPM, a 1-dimensional feature vector of length 14 is generated which contains the values such as the blood ratio, blood probability ratio, size of the biggest connected component, mean, variance etc. This feature vector is extracted for each frame in the video and is used for training the SVM classifier. A sample image along with its BPM and binarized BPM are presented in Figure 3.4. It can be observed from this figure that this approach has performed very well in detecting pixels containing blood.

3.1.1.3. Motion-Features

Motion is another widely used visual feature for violence detection. The work of Deniz et al. [21], Nievas et al. [42] and Hassner et al. [28] are some of the examples in which motion is used as the main feature for violence detection. Here, motion refers to the amount of spatio-temporal variation between two consective frames in a video. Motion is considered a good indicator of violence as substantial amount of violence is expected in the scenes that contain violence. For example, in the scenes that contain person-on-person fights, there is fast movement of human body parts like legs and hands, and in scenes that contain explosions, there is a lot of movement from the parts that are flying apart because of the explosion.

The idea of using motion information for activity detection stems from psychology. Research on human perception has shown that the kinematic pattern of movement is sufficient for the perception of actions (Blake and Shiffrar [2]). Research studies in computer vision (Saerbeck and Bartneck [50], Clarke et al. [13], and Hidaka [29]) have also shown that relatively simple dynamic features such as velocity and acceleration correlate to emotions perceived by a human.

In this work, to calculate the amount of motion in a video segment, two different approaches are evaluated. The first approach is to use the motion information embedded inside the video codec and the next approach is to use optical flow to detect motion. These approahces are presented next.

3.1.1.3.1. Using Codec

In this method, the motion information is extracted from the video codec. The magnitude of motion at each pixel per frame called the motion vector is retrieved from the codec. This motion vector is a two-dimensional vector and has the same size as a frame from the video sequence. From this motion vector, a motion feature which represents the amount of motion in the frame is generated. To generate this motion feature, first the motion vector is divided into twelve sub-regions of equal sizes by slicing it along the x and y-axis into three and four regions respectively. The amount of motion along the x and y-axis at each pixel from each of these sub-regions are aggregated and these sums are used to generate a two-dimensional motion histogram for each frame. This histogram represents the motion vector for a frame. Refer to the image on the left in Figure 3.5 to see the visualization of the aggregated motion vectors for a frame from a sample video. In this visualization, the motion vectors are aggregated for sub-regions of size 16 × 16 pixels. The magnitude and direction of motion in these regions is represented using the length and orientation of the green dashed lines which are overlaid on the image.

3.1.1.3.2. Using Optical Flow

The next approach to detect motion uses Optical flow (Wikipedia [57]). Here, the motion at each pixel in a frame is calculated using Dense Optical Flow. For this, the implementation of Gunner Farneback’s algorithm (Farneb¨ack [24]) provided by OpenCV (Bradski [5]) is used. The implementation is provided as a function in OpenCV and for more details about the function and the parameters, please refer to the documentation provided by OpenCV (OpticalFlow [43]). The values 0.5, 3, 15, 3, 5, 1.2 and 0 are passed to the function parameters pyr scale, levels, win-size, iterations, poly n, poly sigma and flags respectively. Once the motion vectors at every pixel are calculated using Optical flow, the motion feature from a frame is extracted using the same process mentioned in the above Section 3.1.1.3.1. Refer to the image on the rights in Figure 3.5 to get an impression of the aggregated motion vectors extracted from a frame. The motion vectors are aggregated for sub-regions of size 16×16 pixels as in the previous approach to provide a better comparison between the features extracted by using Codec information and Optical flow.

After the evaluation of both these approaches to extract motion information from videos, the following observations are made. First, extracting motion from Codecs is much faster than using optical flow as the motion vectors are precalculated and stored in video codecs. Second, motion extraction using optical flow is not very efficient when there are blurred regions in a frame. This blur is usually caused by sudden motions in a scene, which is very common in scenes containing violence. Hence, the use of optical flow for extracting motion information to detect violence is not a promising approach. Therefore, in this work information stored in the video codecs is used to extract motion features. The motion features are extracted from each frame in the video and are used to train an SVM classifier.

3.1.1.4. SentiBank-Features

In addition to the aforementioned low-level features, the SentiBank feature introduced by Borth et al. [4] is also applied. SentiBank is a mid-level representation of visual content based on the large-scale Visual Sentiment Ontology (VSO) [1]. SentiBank consists of 1,200 semantic concepts and corresponding automatic classifiers, each being defined as an Adjective Noun Pair (ANP). Such ANPs combine strong emotional adjectives to be linked with nouns, which correspond to objects or scenes (e.g. “beautiful sky”, “disgusting bug”, or “cute baby”). Further, each ANP (1) reflects a strong sentiment, (2) has a link to an emotion, (3) is frequently used on platforms such as Flickr or YouTube and (4) has a reasonable detection accuracy. Additionally, the VSO is intended to be comprehensive and diverse enough to cover a broad range of different concept classes such as people, animals, objects, natural or man-made places and, therefore, provides additional insights about the type of content being analyzed. Because SentiBank demonstrated its superior performance as compared to low-level visual features on the analysis of sentiment Borth et al. [4], it is used now for the first time to detect complex emotion such as violence from video frames.

SentiBank consists of 1,200 SVMs, each trained to detect one of the 1,200 semantic concepts from an image. Each SVM is a binary classifier which gives a binary output 0/1 depending on whether or not the image contains a specific sentiment. For a given frame in a video, a vector containing the output of all 1,200 SVMs is considered as the SentiBank feature. To extract this feature, a python-based implementation is utilized. For training the SVM classifier, the SentiBank features extracted from each frame in the training videos are used. The SentiBank feature extraction takes few seconds as it involves collecting output from 1,200 pre-trained SVMs. To reduce the time taken for feature extraction, the SentiBank feature for each of the frame is extracted in parallel using multiprocessing.

3.1.2. Feature Classification

The next step in the pipeline after feature extraction is feature classification and this section provides the details of this step. The selection of classifier and the training techniques used play a very important role in getting good classification results. In this work, SVMs are used for classification. The main reason behind this choice is the fact that the earlier works on violence detection have used SVMs to classify audio and visual features and have produced good results. In almost all the works mentioned in Chapter 2 SVMs are used for classification, even though they may differ in the kernel functions used.

From all the videos available in the training set, audio and visual features are extracted using the process described in the Section 3.1.1. These features are then divided into two sets, one to train the classifier and the other to test the classification accuracy of the trained classifier. As the classifiers used here are SVMs, a choice has to be made about what kernel to use and what kernel parameters to set. To find the best kernel type and kernel parameters, a grid search technique is used. In this grid search, Linear, RBF (Radial Basis Function), and Chi-Square kernels along with a range of value for their parameters are tested, to find the best combination which gives the best classification results. Using this approach, four different classifiers are trained, one for each feature type. These trained classifiers are then used in finding the feature weights in the next step. In this work, the SVM implementation provided by scikit-learn (Pedregosa et al. [45]) and LibSVM (Chang and Lin [9]) are used.

3.1.3. Feature Fusion

In the feature fusion step, the output probabilities from each of the feature classifiers are fused to get the final score of the violence in a video segment along with the class of violence present in it. This fusion is done by calculating the weighted sum of the probabilities from each of the feature classifiers. To detect the class of violence to which a video belongs, the procedure is as follows. First, the audio and visual features are extracted from the videos belonging to each of the targeted violence classes. These features are then passed to the trained binary SVM classifiers to get the probabilities of each of the video containing violence. Now, these output probabilities from each of the feature classifiers are fused by assigning each feature classifier a weight for each class of violence and calculating the weighted sum. The weights assigned to each of the feature classifiers represent the importance of a feature in detecting a specific class of violence. These feature weights have to be adjusted appropriately for each violence class for the system to detect the correct class of violence.

There are two approaches to finding the weights. The first approach is to manually adjust weights of a feature classifier for each violence type. This approach needs a lot of intuition about the importance of a feature in detecting a class of violence and is very error prone. The other approach is to find the weights using a grid-search mechanism where a set of weights is sampled from the range of possible weights. In this case, the range of possible weights for each feature classifier is [0,1], subjected to the constraint that the sum of weights of all the feature classifiers amounts to 1. In this work, the latter approach is used and all the weight combinations which amount to 1 are enumerated. Each of these weight combinations is used to calculate the weighted sum of classifier probabilities for a class of violence and the weights from the weight combination which produces the highest sum is assigned to each of the classifiers for the corresponding class of violence. To calculate these weights, a dataset which is different from the training set is used, in order to avoid over-fitting of weights to the training set. The dataset used for weight calculation has videos from all the classes of violence targeted in this work. It is important to note that, even though each of the trained SVM classifiers are binary in nature, the output values from these classifiers can be combined using weighted sum to find the specific class of violence to which a video belongs.

3.2. Testing

In this stage, for a given input video, each segment containing violence is detected along with the class of violence present in it. For a given video, the following approach is used to detect the segments that contain violence and the category of violence in it. First, the visual and audio features are extracted from one frame every 1-second starting from the first frame of the video, rather than extracting features from every frame. These frames from which the features are extracted, represent a 1-second segment of the video. The features from these 1-second video segments are then passed to the trained binary SVM classifiers to get the scores for each video segment to be violent or non-violent. Then, weighted sums of the output values from the individual classifiers are calculated for each violence category using the corresponding weights found during fusion step. Hence, for a given video of length ‘X’ seconds, the system outputs a vector of length ‘X’. Each element in this vector is a dictionary which maps each violence class with a score value. The reason for using this approach is two fold, first to detect time intervals in which there is violence in the video and to increase the speed of the system in detecting violence. The feature extraction, especially extracting the Sentibank feature, is timeconsuming and doing it for every frame will make the system slow. But this approach has a negative effect on the accuracy of the system as it detects violence not for every frame but for every second.

3.3. Evaluation Metrics

There are many metrics that can be used to measure the performance of a classification systems. Some of the measures used for binary classification are Accuracy, Precision, Recall (Sensitivity), Specificity, F-score, Equal Error Rate (EER), and Area Under the Curve (AUC). Some other measures such as Average Precision (AP) and Mean Average Precision (MAP) are used for systems which return a ranked list as a result to a query. Most of these measures that are increasingly used in Machine Learning and Data Mining research are borrowed from other disciplines such as Information Retrieval (Rijsbergen [49]) and Biometrics. For a detailed discussion on these measures, refer to the works of Parker [44] and Sokolova and Lapalme [53]. The ROC (Receiver Operating Characteristic) curve is another widely used method for evaluating or comparing binary classification systems. Measures such as AUC and EER can be calculated from the ROC curve.

In this work, ROC curves are used to: (i) Compare the performance of individual classifiers. (ii) Compare the performance of the system in detecting different classes of violence in the Multi-Class classification task. (iii) Compare the performance of the system on Youtube and Hollywood-Test dataset in the Binary classification task. Other metrics that are used here are, Precision, Recall and EER. These measures are used as these are the most commonly used measures in the previous works on violence detection. In this system, the parameters (fusion weights) are adjusted to minimize the EER.

3.4. Summary

In this chapter, a detailed description of the approach followed in this work to detect violence is presented. The first section deals with the training phase and the second section deals with the testing phase. In the first section, different steps involved in the training phase are explained in detail. First the extraction of audio and visual features is discussed and the details of what features are used and how they are extracted are presented. Next, the classification techniques used to classify the extracted features are discussed. Finally, the process used to calculate feature weights for feature fusion is discussed. In the second section, the process used during the testing phase to extract video segments containing violence and to detect the class of violence in these segments is discussed.

To summarize, the steps followed in this approach are feature extraction, feature classification, feature fusion and testing. The first three steps constitute the training phase and the final step is the testing phase. In the training phase, audio and visual features are extracted from the video and they are used to train binary SVM classifiers one for each feature. Then, a separate dataset is used to find the feature weights which minimize the EER of the system on the validataion dataset. In the final testing phase, first the visual and audio features are extracted one per a 1-second video segment of the input test video. Then, these features are passed to the trained SVM classifiers to get the probabilities of these features representing violence. A weighted sum of these output probabilities is calculated for each violence type using the weights obtained in the feature fusion step. The violence type for which the weighted sum is maximum is assigned as a label to the corresponding 1-second video segment. Using these labels the segments containing violence and the class of violence contained in them is presented as an output by the system. The experimental setup and evaluation of this system are presented in the next chapter.

[1] http://visual-sentiment-ontology.appspot.com

如有侵权请联系:admin#unsafe.sh