2024-5-27 20:40:3 Author: hackernoon.com (view original)

AWS WorkMail is a powerful email and calendar service that combines security, reliability, and ease of use. Its integration with AWS services and compatibility with existing email clients make it an attractive choice for businesses looking to streamline their email and calendar management while ensuring security and compliance. By leveraging AWS WorkMail, organizations can focus on their core activities without worrying about the complexities of managing email infrastructure.

Setting up a reliable backup system for your AWS WorkMail is crucial to ensure that your emails are secure and easily recoverable. In this tutorial, I'll walk you through the process of automating AWS WorkMail backups for multiple users, handling up to 10 concurrent mailbox export jobs. We will use AWS services and Python to create a robust backup solution.

Ah! I remember the time I was using a cPanel mail server and then meddling with my own mail server. In the early 2010s, those single-instance mail servers with a limited number of IP addresses ran smoothly, but as more and more people worldwide started exploiting them (come on, we still get a lot of spam and marketing), it became tough to make sure your email did not land in the spam box.

The choice between AWS WorkMail, a self-hosted mail server, and a cPanel mail server depends on several factors, including budget, technical expertise, scalability needs, and security requirements.

- AWS WorkMail is ideal for businesses seeking a scalable, secure, and managed email solution with minimal maintenance overhead.

- Self-Hosted Mail Server is suitable for organizations with in-house technical expertise and specific customization needs but involves higher costs and maintenance efforts.

- cPanel Mail Server offers a balanced approach for small to medium-sized businesses that need a user-friendly interface and are comfortable with some level of maintenance, benefiting from the bundled hosting services.

My main concerns were always the following:

Security

- AWS WorkMail: Provides built-in security features, including encryption in transit and at rest, integration with AWS Key Management Service (KMS), and compliance with various regulatory standards.

- Self-Hosted Mail Server: Security depends entirely on the expertise of the administrator. Requires configuration for encryption, spam filtering, and regular security updates to protect against threats.

- cPanel Mail Server: Offers security features, but the responsibility for implementing and maintaining security measures falls on the user. cPanel provides tools for SSL/TLS configuration, spam filtering, and antivirus, but proper setup and regular updates are essential.

Then AWS came to the rescue, and since I started using AWS in 2015, things have been a breeze, except when I needed to back up my AWS account's mail to move to a new account. I searched the internet for a reliable solution because, as of this writing, AWS WorkMail doesn't provide a straightforward way of backing up your email to your local computer or to S3. That is understandable given its security compliance, but I still expect AWS to provide a GUI or a tool for it. While browsing, I did come across tools, all of them paid, so I decided to develop my own. After rigorous testing, and based on my experience with AWS, 9 out of 10 problems occur when one service is unable to communicate with another due to policy or IAM role issues.

This is my contribution to all those people who are having a hard time taking backups of AWS WorkMail accounts.

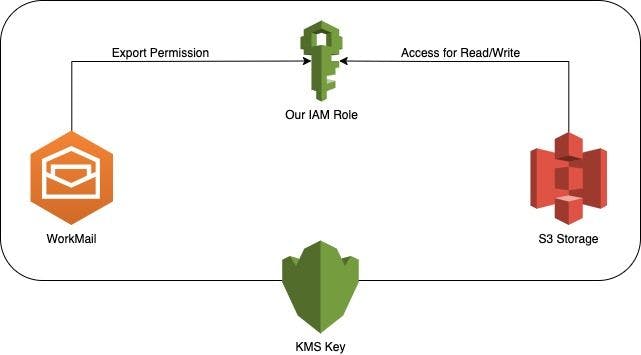

How does the backup work?

We create an IAM role in your account that the AWS WorkMail export service can assume, and attach a policy to that role allowing it to access S3. We then create a KMS key whose key policy gives the IAM role access to it. The S3 bucket policy also needs to grant access to the IAM role for the export to function properly.
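To make the relationship between these pieces concrete, here is a minimal Python sketch that builds the same trust policy and role policy as plain dictionaries. The function names `build_trust_policy` and `build_role_policy` are made up for this illustration and are not part of any AWS SDK; the service principal, the external ID condition, and the actions mirror the setup script in Step 2.

```python
import json


def build_trust_policy(account_id):
    """Trust policy that lets the WorkMail export service assume the role.

    The external ID condition ties the role to export jobs started
    from this account, as in the setup script of this tutorial.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "export.workmail.amazonaws.com"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": account_id}},
            }
        ],
    }


def build_role_policy(bucket_name):
    """Permissions for the role: the backup bucket plus KMS data key operations."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            },
            {
                "Effect": "Allow",
                "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
                "Resource": "*",
            },
        ],
    }


# Placeholder account ID, used only to show the generated document
print(json.dumps(build_trust_policy("123456789012"), indent=2))
```

Building the documents with `json.dumps` instead of a heredoc has one practical benefit: the output is always syntactically valid JSON, so a stray or missing comma cannot slip in.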

Step 1: Configure AWS CLI

First, ensure that the AWS CLI is installed and configured with appropriate credentials. Open your terminal and run:

aws configure

Follow the prompts to set your AWS Access Key ID, Secret Access Key, default region, and output format.
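`aws configure` stores the keys in `~/.aws/credentials`. If you want a quick sanity check that the profile was written, a small sketch like the one below can help. The helper name `profile_has_keys` is hypothetical, and the check only covers the credentials file; credentials supplied through environment variables or SSO are outside what it looks at.

```python
import configparser


def profile_has_keys(credentials_text, profile="default"):
    """Return True if the given profile defines both access key fields.

    credentials_text is the content of an AWS credentials file,
    which uses INI syntax with one section per profile.
    """
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    if not parser.has_section(profile):
        return False
    section = parser[profile]
    return "aws_access_key_id" in section and "aws_secret_access_key" in section
```

A typical call would read the file first, for example `profile_has_keys(Path.home().joinpath(".aws", "credentials").read_text())` with `Path` imported from `pathlib`.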

Step 2: Set Up Required AWS Resources

We need to create an IAM role, policies, an S3 bucket, and a KMS key. Save the following bash script as setup_workmail_export.sh.

#!/bin/bash

# Configuration

ROLE_NAME="WorkMailExportRole"

POLICY_NAME="workmail-export"

S3_BUCKET_NAME="s3.bucket.name"

AWS_REGION="your-region"

ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

# Create Trust Policy

cat <<EOF > trust-policy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "export.workmail.amazonaws.com"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "$ACCOUNT_ID"

}

}

}

]

}

EOF

# Create IAM Role

aws iam create-role --role-name $ROLE_NAME --assume-role-policy-document file://trust-policy.json

# Create IAM Policy

cat <<EOF > role-policy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "s3:*",

"Resource": [

"arn:aws:s3:::$S3_BUCKET_NAME",

"arn:aws:s3:::$S3_BUCKET_NAME/*"

]

},

{

"Effect": "Allow",

"Action": [

"kms:Decrypt",

"kms:GenerateDataKey"

],

"Resource": "*"

}

]

}

EOF

# Attach the Policy to the Role

aws iam put-role-policy --role-name $ROLE_NAME --policy-name $POLICY_NAME --policy-document file://role-policy.json

# Create S3 Bucket

aws s3api create-bucket --bucket $S3_BUCKET_NAME --region $AWS_REGION --create-bucket-configuration LocationConstraint=$AWS_REGION

# Create Key Policy

cat <<EOF > key-policy.json

{

"Version": "2012-10-17",

"Id": "workmail-export-key",

"Statement": [

{

"Sid": "Enable IAM User Permissions",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::$ACCOUNT_ID:root"

},

"Action": "kms:*",

"Resource": "*"

},

{

"Sid": "Allow administration of the key",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::$ACCOUNT_ID:role/$ROLE_NAME"

},

"Action": [

"kms:Create*",

"kms:Describe*",

"kms:Enable*",

"kms:List*",

"kms:Put*",

"kms:Update*",

"kms:Revoke*",

"kms:Disable*",

"kms:Get*",

"kms:Delete*",

"kms:ScheduleKeyDeletion",

"kms:CancelKeyDeletion"

],

"Resource": "*"

}

]

}

EOF

# Create the KMS Key and get the Key ID and ARN using Python for JSON parsing

KEY_METADATA=$(aws kms create-key --policy file://key-policy.json)

KEY_ID=$(python3 -c "import sys, json; print(json.load(sys.stdin)['KeyMetadata']['KeyId'])" <<< "$KEY_METADATA")

KEY_ARN=$(python3 -c "import sys, json; print(json.load(sys.stdin)['KeyMetadata']['Arn'])" <<< "$KEY_METADATA")

# Update S3 Bucket Policy

cat <<EOF > s3_bucket_policy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::$ACCOUNT_ID:role/$ROLE_NAME"

},

"Action": "s3:*"

"Resource": [

"arn:aws:s3:::$S3_BUCKET_NAME",

"arn:aws:s3:::$S3_BUCKET_NAME/*"

]

}

]

}

EOF

# Apply the Bucket Policy

aws s3api put-bucket-policy --bucket $S3_BUCKET_NAME --policy file://s3_bucket_policy.json

# Clean up temporary files

rm trust-policy.json role-policy.json key-policy.json s3_bucket_policy.json

# Display the variables required for the backup script

cat <<EOF

Setup complete. Here are the variables required for the backup script:

# Print out the Variables

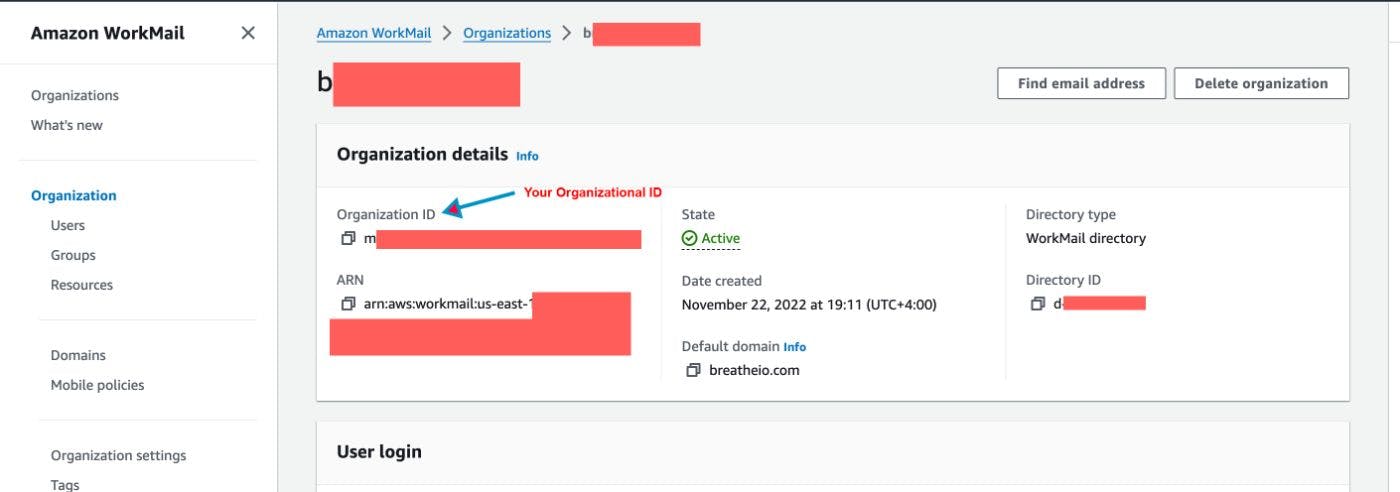

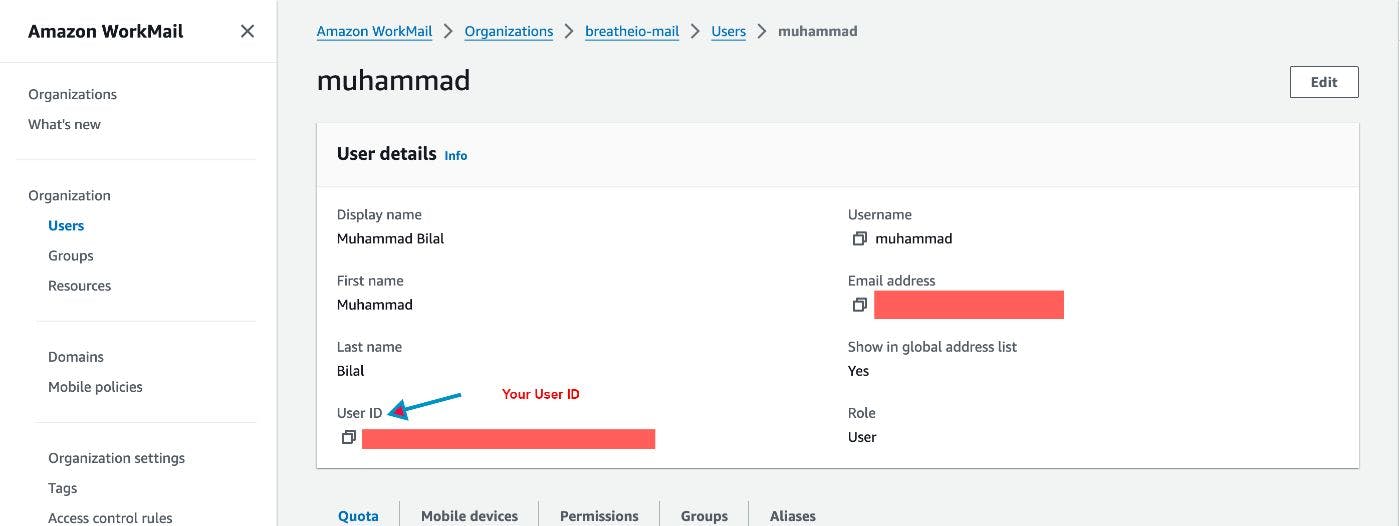

organization_id = 'your-organization-id'

user_id = 'your-user-id'

s3_bucket_name = '$S3_BUCKET_NAME'

s3_prefix = 'workmail-backup/'

region = '$AWS_REGION'

kms_key_arn = '$KEY_ARN'

role_name = '$ROLE_NAME'

account_id = '$ACCOUNT_ID'

EOF

Make the script executable and run it:

chmod +x setup_workmail_export.sh

./setup_workmail_export.sh
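Since most failures in this setup trace back to policy documents, it can be worth checking a policy's JSON before handing it to the AWS CLI. The sketch below is a deliberately simple check and not a full IAM validator: the function name `check_policy_document` is hypothetical, and it assumes `Statement` is a list, as in every policy used in this tutorial.

```python
import json


def check_policy_document(text):
    """Return a list of problems found in an IAM-style policy document."""
    try:
        policy = json.loads(text)
    except json.JSONDecodeError as exc:
        # Typical cause: a missing comma between two keys
        return [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]

    if not isinstance(policy, dict):
        return ["top level must be a JSON object"]

    problems = []
    if "Version" not in policy:
        problems.append("missing 'Version'")

    statements = policy.get("Statement")
    if not isinstance(statements, list) or not statements:
        problems.append("'Statement' must be a non-empty list")
        return problems

    for index, statement in enumerate(statements):
        for field in ("Effect", "Action"):
            if field not in statement:
                problems.append(f"statement {index}: missing '{field}'")
    return problems
```

Because the setup script deletes its temporary JSON files at the end, you would run this on the policy text before that cleanup step, for example by reading `s3_bucket_policy.json` and printing whatever the function returns. An empty list means none of these basic problems were found.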

Step 3: Write the Backup Script

Now, let's write the Python script to export mailboxes in batches of 10. Save the following script as workmail_export.py.

import time
import uuid
from datetime import datetime

import boto3

# Configuration
organization_id = 'your-organization-id'

# Map each WorkMail user (entity) ID to that user's email address
user_emails = {
    'user-id-1': '[email protected]',
    'user-id-2': '[email protected]',
    'user-id-3': '[email protected]',
    'user-id-4': '[email protected]',
    'user-id-5': '[email protected]',
    'user-id-6': '[email protected]',
    'user-id-7': '[email protected]',
    'user-id-8': '[email protected]',
    'user-id-9': '[email protected]',
    'user-id-10': '[email protected]',
    'user-id-11': '[email protected]',
    'user-id-12': '[email protected]'
    # Add more user ID to email mappings as needed
}

s3_bucket_name = 's3.bucket.name'
region = 'your-region'
kms_key_arn = 'arn:aws:kms:your-region:your-account-id:key/your-key-id'
role_name = 'WorkMailExportRole'
account_id = 'your-account-id'

# Get the current date
current_date = datetime.now().strftime('%Y-%m-%d')

# Set the S3 prefix with the date included
s3_prefix_base = f'workmail-backup/{current_date}/'

# Initialize the AWS client
workmail = boto3.client('workmail', region_name=region)


def start_export_job(entity_id, user_email):
    """Start an export job for one mailbox and return its job ID, or None on failure."""
    client_token = str(uuid.uuid4())  # Unique idempotency token for this request
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    s3_prefix = f"{s3_prefix_base}{user_email}/"
    try:
        response = workmail.start_mailbox_export_job(
            ClientToken=client_token,
            OrganizationId=organization_id,
            EntityId=entity_id,
            Description='Backup job',
            RoleArn=role_arn,
            KmsKeyArn=kms_key_arn,
            S3BucketName=s3_bucket_name,
            S3Prefix=s3_prefix
        )
        return response['JobId']
    except Exception as e:
        print(f"Failed to start export job for {entity_id}: {e}")
        return None


def check_job_status(job_id):
    """Poll the export job every 30 seconds until it reaches a terminal state."""
    while True:
        try:
            response = workmail.describe_mailbox_export_job(
                OrganizationId=organization_id,
                JobId=job_id
            )
            print(f"Full Response: {response}")  # Log full response for debugging
            state = response.get('State', 'UNKNOWN')
            print(f"Job State: {state}")
            # CANCELLED is also terminal; without it a cancelled job would poll forever
            if state in ['COMPLETED', 'FAILED', 'CANCELLED']:
                break
        except Exception as e:
            print(f"Error checking job status for {job_id}: {e}")
        time.sleep(30)  # Wait for 30 seconds before checking again
    return state


def export_mailboxes_in_batches(user_emails, batch_size=10):
    """Start up to batch_size export jobs, wait for all of them, then move on."""
    user_ids = list(user_emails.keys())
    for i in range(0, len(user_ids), batch_size):
        batch = user_ids[i:i + batch_size]
        job_ids = []

        # Start every job in the batch first so they run concurrently
        for user_id in batch:
            user_email = user_emails[user_id]
            job_id = start_export_job(user_id, user_email)
            if job_id:
                print(f"Started export job for {user_email} with Job ID: {job_id}")
                job_ids.append((user_email, job_id))

        # Then wait for each job in the batch to finish
        for user_email, job_id in job_ids:
            state = check_job_status(job_id)
            if state == 'COMPLETED':
                print(f"Export job for {user_email} completed successfully")
            else:
                print(f"Export job for {user_email} failed with state: {state}")


def main():
    export_mailboxes_in_batches(user_emails)


if __name__ == "__main__":
    main()

Replace the placeholders with your actual AWS WorkMail organization ID, user ID to email mappings, S3 bucket name, region, KMS key ARN, role name, and AWS account ID.

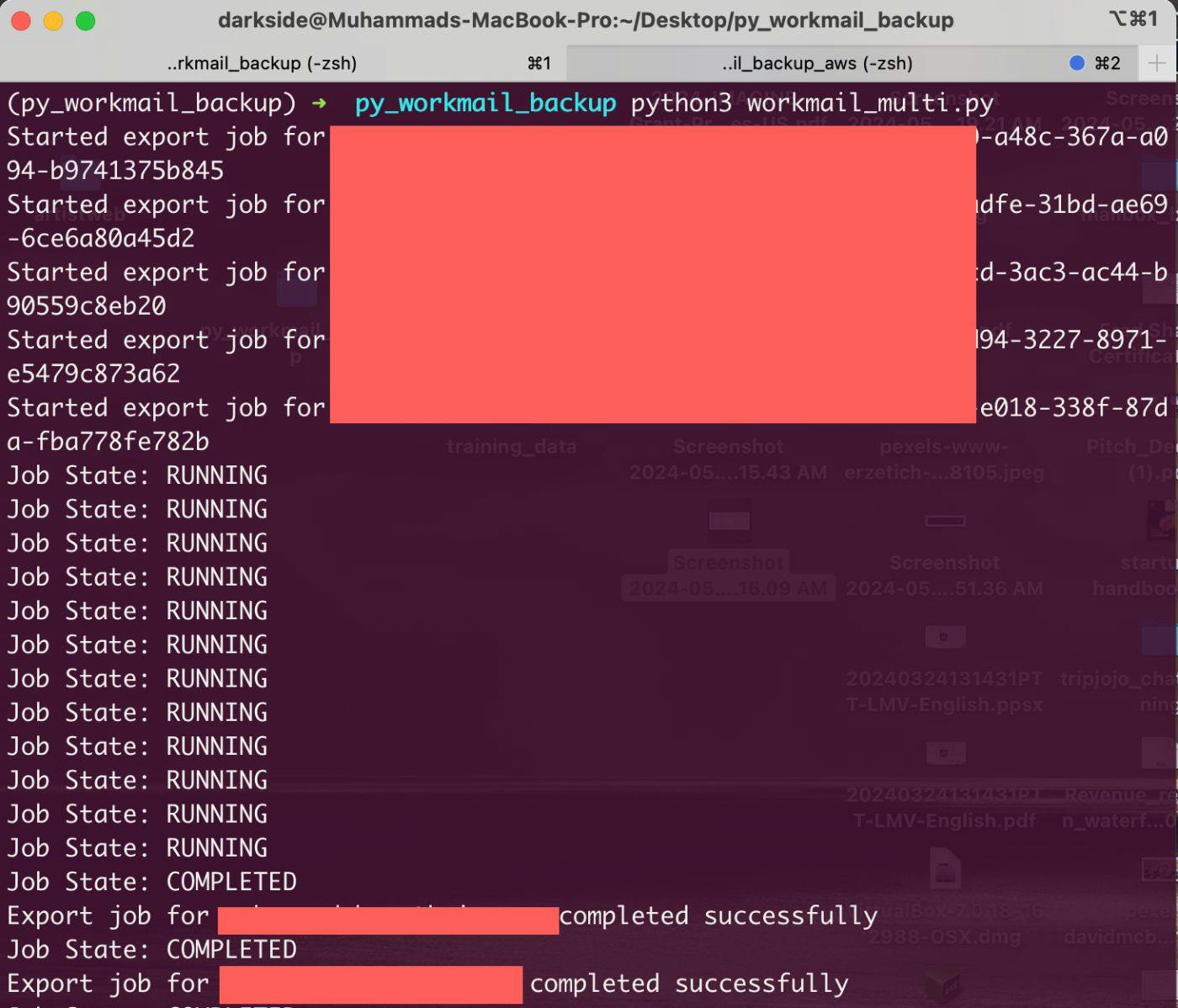

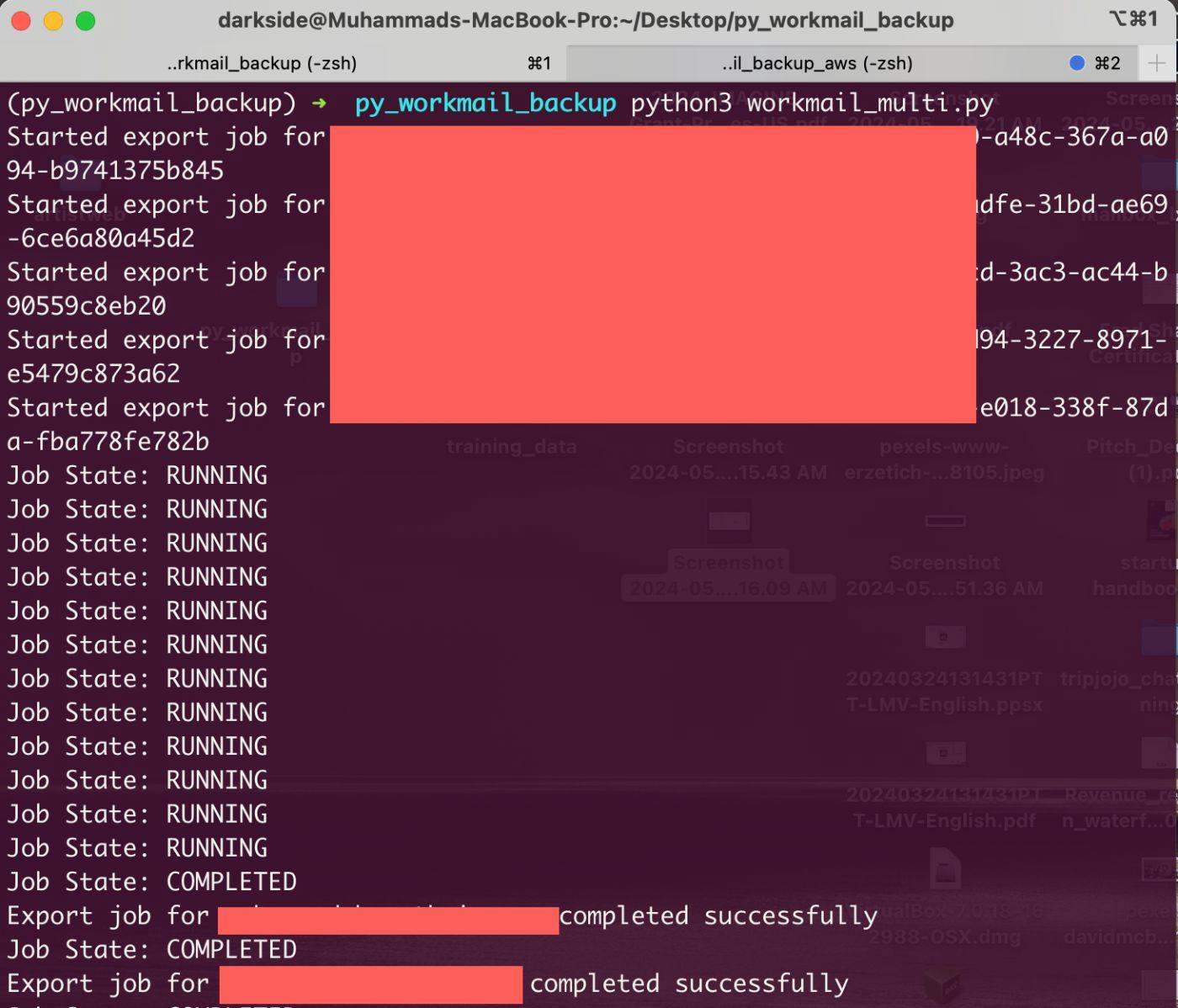
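The batching and the date-based S3 layout used by the export script can be isolated into two small helpers, which makes the behaviour easy to check without touching AWS. This is only a sketch of that logic; the names `chunked` and `export_prefix` are illustrative and do not appear in the script itself.

```python
from datetime import date


def chunked(items, size):
    """Yield consecutive slices of items, each with at most size elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def export_prefix(user_email, day, base="workmail-backup"):
    """Build the per-user S3 prefix: <base>/<YYYY-MM-DD>/<email>/."""
    return f"{base}/{day.isoformat()}/{user_email}/"


# Twelve mailboxes with a batch size of 10 give one batch of 10 and one of 2
user_ids = [f"user-id-{n}" for n in range(1, 13)]
for batch in chunked(user_ids, 10):
    print(len(batch), batch[0], "...", batch[-1])
```

With the 12 sample users from the script, the second batch only starts after all 10 jobs of the first batch have finished, so one slow mailbox delays the remaining two.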

Step 4: Run the Backup Script

Ensure you have Boto3 installed:

pip install boto3

Then, execute the Python script:

python workmail_export.py
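The script's `check_job_status` polls with no upper bound on how long it waits, so a job that never reaches a terminal state keeps the run alive indefinitely. One possible variation is a polling helper with a deadline, sketched below. The name `wait_for_job` and the `TIMED_OUT` return value are inventions of this example, not WorkMail job states; `describe` is any zero-argument callable that returns the job description as a dictionary.

```python
import time

# Job states after which polling can stop
TERMINAL_STATES = {"COMPLETED", "FAILED", "CANCELLED"}


def wait_for_job(describe, timeout_seconds=3600, poll_seconds=30,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll describe() until the job is finished or the deadline passes.

    Returns the terminal state, or the local sentinel "TIMED_OUT"
    if the job is still running when the deadline is reached.
    """
    deadline = clock() + timeout_seconds
    while True:
        state = describe().get("State", "UNKNOWN")
        if state in TERMINAL_STATES:
            return state
        if clock() >= deadline:
            return "TIMED_OUT"
        sleep(poll_seconds)
```

In the export script this could be called as `wait_for_job(lambda: workmail.describe_mailbox_export_job(OrganizationId=organization_id, JobId=job_id))`. The `sleep` and `clock` parameters exist only so the loop can be exercised in a test without real waiting.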

Import Mailbox

import time
import uuid

import boto3

# Note: this script assumes the WorkMail client exposes
# start_mailbox_import_job and describe_mailbox_import_job.
# Confirm that your boto3 version provides them before relying on it.
# It also uses a role named WorkMailImportRole, which the setup script
# above does not create.

# Configuration
organization_id = 'your-organization-id'

# Map each WorkMail user (entity) ID to that user's email address
user_import_data = {
    'user-id-1': '[email protected]',
    'user-id-2': '[email protected]',
    'user-id-3': '[email protected]',
    'user-id-4': '[email protected]',
    'user-id-5': '[email protected]',
    'user-id-6': '[email protected]',
    'user-id-7': '[email protected]',
    'user-id-8': '[email protected]',
    'user-id-9': '[email protected]',
    'user-id-10': '[email protected]',
    'user-id-11': '[email protected]',
    'user-id-12': '[email protected]'
    # Add more user ID to email mappings as needed
}

s3_bucket_name = 's3.bucket.name'
# Prefix for S3 objects (folders). The export script writes under
# workmail-backup/<date>/<email>/, so adjust this to where your export landed.
s3_object_prefix = 'workmail-backup/'
region = 'your-region'
role_name = 'WorkMailImportRole'
account_id = 'your-account-id'

# Initialize the AWS client
workmail = boto3.client('workmail', region_name=region)


def start_import_job(entity_id, user_email):
    """Start an import job for one mailbox and return its job ID, or None on failure."""
    client_token = str(uuid.uuid4())  # Unique idempotency token for this request
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    s3_object_key = f"{s3_object_prefix}{user_email}/export.zip"
    try:
        response = workmail.start_mailbox_import_job(
            ClientToken=client_token,
            OrganizationId=organization_id,
            EntityId=entity_id,
            Description='Import job',
            RoleArn=role_arn,
            S3BucketName=s3_bucket_name,
            S3ObjectKey=s3_object_key
        )
        return response['JobId']
    except Exception as e:
        print(f"Failed to start import job for {entity_id}: {e}")
        return None


def check_job_status(job_id):
    """Poll the import job every 30 seconds until it completes or fails."""
    while True:
        try:
            response = workmail.describe_mailbox_import_job(
                OrganizationId=organization_id,
                JobId=job_id
            )
            state = response.get('State', 'UNKNOWN')
            print(f"Job State: {state}")
            if state in ['COMPLETED', 'FAILED']:
                break
        except Exception as e:
            print(f"Error checking job status for {job_id}: {e}")
        time.sleep(30)  # Wait for 30 seconds before checking again
    return state


def import_mailboxes_in_batches(user_import_data, batch_size=10):
    """Start up to batch_size import jobs, wait for all of them, then move on."""
    user_ids = list(user_import_data.keys())
    for i in range(0, len(user_ids), batch_size):
        batch = user_ids[i:i + batch_size]
        job_ids = []

        # Start every job in the batch first so they run concurrently
        for user_id in batch:
            user_email = user_import_data[user_id]
            job_id = start_import_job(user_id, user_email)
            if job_id:
                print(f"Started import job for {user_email} with Job ID: {job_id}")
                job_ids.append((user_email, job_id))

        # Then wait for each job in the batch to finish
        for user_email, job_id in job_ids:
            state = check_job_status(job_id)
            if state == 'COMPLETED':
                print(f"Import job for {user_email} completed successfully")
            else:
                print(f"Import job for {user_email} failed with state: {state}")


def main():
    import_mailboxes_in_batches(user_import_data)


if __name__ == "__main__":
    main()
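Before running either script, it is worth confirming that your installed boto3 WorkMail client actually exposes the operations the script calls. A missing method only surfaces at run time, where the broad `except Exception` turns it into a "Failed to start ..." message for every user. The helper below is a small sketch for that check; `missing_operations` is a hypothetical name, and the `SimpleNamespace` object merely stands in for a real client so the example runs without AWS access.

```python
from types import SimpleNamespace


def missing_operations(client, names):
    """Return the method names that the client does not provide."""
    return [name for name in names if not callable(getattr(client, name, None))]


# Stand-in object for demonstration only; with boto3 you would pass
# boto3.client("workmail", region_name=region) instead.
demo_client = SimpleNamespace(start_mailbox_export_job=lambda **kwargs: {})

print(missing_operations(
    demo_client,
    ["start_mailbox_export_job", "start_mailbox_import_job"],
))
```

If the returned list is not empty for your real client, the corresponding script cannot work as written with that boto3 version, and the restore would need a different route.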

By following these steps, you have set up an automated system to back up your AWS WorkMail mailboxes, handling up to 10 concurrent export jobs. This solution ensures that your emails are securely stored in an S3 bucket, organized by user email and date, and encrypted with a KMS key. This setup provides a robust and scalable backup strategy for your organization's email data.

Github Repo: https://github.com/th3n00bc0d3r/AWS-WorkMail-Backup
