2024-05-26 23:00:12 Author: hackernoon.com

This article is inspired by the need for more Software Engineers in quantum computing. Not to mention the other Developers, UX Designers, QA Testers, Product Managers and all the rest of the talent that makes it possible to ship a real product to real customers. Especially products in Deep Tech and Frontier Tech like quantum computing.

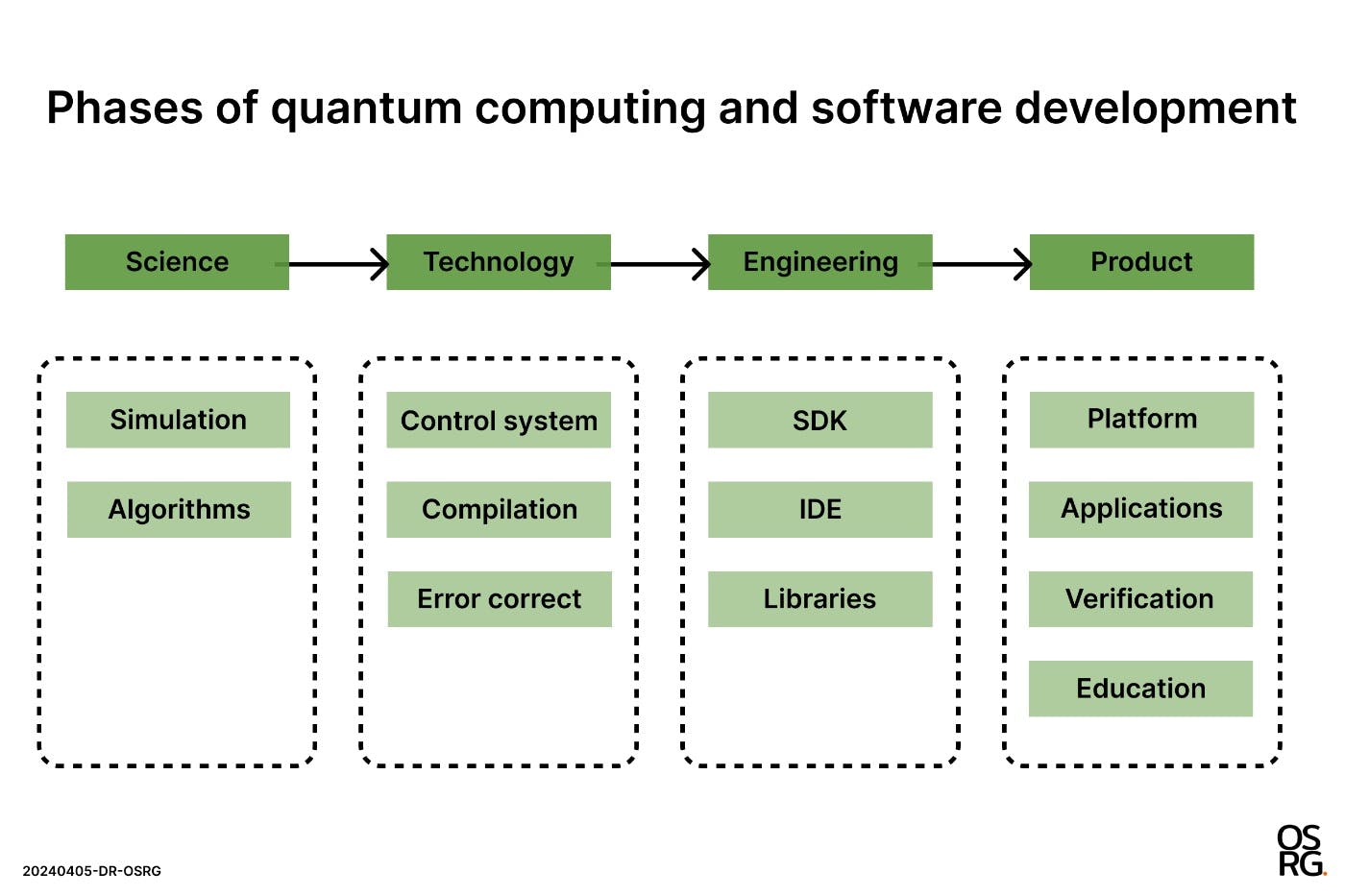

These products and the teams attempting to create them are subject to a long and challenging journey from "Science to Technology to Engineering to Product". A series of phase shifts that are more about organisational (and community) evolution than just a linear progression through technology readiness.

This evolution doesn't just happen. For those of us working in these teams, we face the challenge of reinventing the organisation as it shifts from academic to technical to something more broadly engaged with the marketplace. Which means sourcing and collaborating with a growing community of talent as well as continually evolving and growing our own skills.

This was something I touched on in my "Open Source Your Way into a Quantum Computing Career" talk back in 2022 at the Linux Foundation's Open Source Summit. And it's grown even further in the year or so since, with a noticeable industry shift toward "quantum utility" (a term we use to focus on real-world usefulness rather than theoretical supremacy) and some massive projects kicking off. Such as the Australian government's nearly $1B investment in PsiQuantum setting up a commercial quantum computer back in my home town of Brisbane (said with a little homesickness from here in Seattle).

So yes, there is a lot going on. Which makes this a very good time to understand what these quantum systems are actually composed of and where your talent and curiosity fits in. I've included some recommendations at the end for how to get involved. And I should add a quick disclaimer that there's not really one "quantum computer" paradigm. I've abstracted the most common elements of the various systems we work on for educational value, but welcome any challenges or rebuttals as this model evolves over time.

The quantum stack at a glance

In many ways the quantum computing stack matches the pattern of a modern high-performance computing (HPC) stack. And to a lesser degree will be familiar enough to anyone working in the cloud computing space. We go from a high-level user experience down to some form of platform that takes our workload and converts it to something that will then run on the hardware. Simple enough to get our head around.

The nuance is a lot more complicated. For example, a quantum computer is only really as good as the quantum algorithm being used. All the fancy stuff you've heard about superposition and entanglement really just comes down to a way to reliably run useful algorithms that, at the hardware level, use phase and interference to perform the "computation". Each run ends in a measurement that returns a single outcome drawn from a probability distribution, and a good algorithm arranges the interference so that the right answer is the most likely outcome. Running the same circuit many times (each run is usually called a "shot") builds up the statistics that let the right answer stand out. Doing this at all requires a useful algorithm and a reliable system implementation.
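To make the phase-and-interference point concrete, here is a toy single-qubit simulation in plain Python. It is not any vendor's SDK, and the function names are hypothetical. One Hadamard gate gives a 50/50 outcome, while a second Hadamard makes the two paths to |1> cancel out, so every shot returns 0.

```python
import math
import random

# A single-qubit state as two real amplitudes [amp_0, amp_1].
# Real numbers are enough here because the Hadamard gate has no complex entries.
ZERO = [1.0, 0.0]

def hadamard(state):
    """Apply H: |0> -> (|0>+|1>)/sqrt(2), |1> -> (|0>-|1>)/sqrt(2)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return [s * (a0 + a1), s * (a0 - a1)]

def probabilities(state):
    """Born rule: each outcome's probability is its squared amplitude."""
    return [amp * amp for amp in state]

def sample(state, shots, rng):
    """Simulate repeated measurement: each shot yields 0 or 1 at random."""
    p1 = probabilities(state)[1]
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[1 if rng.random() < p1 else 0] += 1
    return counts

one_h = hadamard(ZERO)            # equal superposition of |0> and |1>
two_h = hadamard(hadamard(ZERO))  # the two contributions to |1> interfere destructively

print(probabilities(one_h))  # approximately [0.5, 0.5]
print(probabilities(two_h))  # approximately [1.0, 0.0]
rng = random.Random(7)
print(sample(one_h, 1000, rng))  # roughly half 0s and half 1s
print(sample(two_h, 1000, rng))  # {0: 1000, 1: 0}
```

A single shot of `one_h` is just a coin flip; it is the repeated shots that turn random outcomes into usable statistics.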

Simulation also plays a crucial role. You might see this talked about as having to do with "saving from expensive hardware purchases", but this isn't really the case (and often a clue someone is just using AI to write their quantum clickbait). We rely on simulation to not only help develop new and interesting algorithms, but to explore the various ways to set up a workload. It's also a core part of the workflows many of us are building towards, where a truly hybrid system would use classical computing resources to handle the workload and scheduling, along with acceleration via GPUs (or newer chips like TPUs and LPUs), and effectively push certain workloads to the quantum processing unit (QPU) where a quantum algorithm may be useful to the task at hand. While some, like myself, are focused on integrating quantum computing with existing infrastructure, others are dedicated to building the most powerful standalone quantum system. Hence the wide range of exploration in the industry.
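The hybrid pattern described above can be sketched as a classical loop that repeatedly calls out to a quantum routine. In this minimal sketch the "QPU" is simulated exactly on the CPU, and the one-qubit Ry(theta) ansatz, learning rate, and function names are all illustrative assumptions. It is a VQE-style loop, not any vendor's actual workflow.

```python
import math

def qpu_expectation_z(theta):
    """Stand-in for a QPU call (simulated exactly here).

    Prepares Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> and returns the
    expectation value of Pauli Z, i.e. cos^2(theta/2) - sin^2(theta/2).
    """
    a0 = math.cos(theta / 2)
    a1 = math.sin(theta / 2)
    return a0 * a0 - a1 * a1

def parameter_shift_gradient(f, theta):
    """Gradient via the parameter-shift rule, valid for Pauli-rotation gates like Ry."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2

def hybrid_minimise(f, theta=0.1, learning_rate=0.4, steps=100):
    """Classical optimiser: the CPU updates theta, the 'QPU' evaluates f."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)

theta_opt, energy = hybrid_minimise(qpu_expectation_z)
print(round(theta_opt, 4), round(energy, 4))
```

The split of responsibilities is the point: only the expectation-value evaluation would run on quantum hardware, while scheduling and optimisation stay on classical resources.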

If all you take away from this is that a quantum computer is a specialised system that includes a QPU in addition to the existing compute stack to run specialised quantum algorithms then that's a win. No cats, slits, or spooky hand-waving required.

The quantum stack in detail

The following sections move from the top user layer down through the platform, and ultimately into the hardware layer. While the boundaries between these layers can be blurred in practice, we'll follow a model based on a typical workload or workflow for clarity (and sanity).

1. Quantum programming languages and developer tools

At the highest level of the quantum system is the human punching away at the keyboard. Quantum programming languages provide the high level of abstraction required for exploring quantum algorithms and creating programs in a manageable form. The experience of working with these languages is expanded by Software Development Kits (SDKs) that offer the libraries and tools required to develop quantum software.

There is some blurring of the distinction between SDKs and frameworks and Integrated Development Environments (IDEs). This is shaped by the diverse approaches of quantum vendors and the integration of platforms and product verticals tailored to specific end-users. A researcher wanting full local access and pulse-level control will differ from an enterprise team developing hybrid workloads, which will differ from a fintech startup building on top of a cloud-based quantum platform. This pattern is familiar in enterprise or cloud-based projects, though it will develop its own nuances as the commercial value of quantum systems becomes more apparent and influences product design. Meanwhile, the most prevalent SDKs and their associated programming languages are as follows.

- IBM Quantum and (Python-based) Qiskit

- Microsoft Quantum Development Kit (QDK) and Q#

- Google Cirq and Python

- Amazon Braket and Python

- Intel Quantum SDK for Python, and C/C++

- Rigetti Forest and (Python-based) pyQuil
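The SDKs above differ in syntax, but most of them let you describe a program as an ordered list of gates on numbered qubits, and several (Qiskit and Cirq among them) can export circuits to the OpenQASM interchange format. The `Circuit` class below is a hypothetical minimal builder, not any SDK's real API, although its method names echo common conventions. It builds a two-qubit Bell-state circuit and serialises it to OpenQASM 2.0 text.

```python
class Circuit:
    """Hypothetical minimal circuit builder in the style of SDK APIs."""

    def __init__(self, num_qubits):
        self.num_qubits = num_qubits
        self.ops = []  # ordered list of (gate_name, qubit_indices)

    def h(self, q):
        self.ops.append(("h", (q,)))
        return self

    def cx(self, control, target):
        self.ops.append(("cx", (control, target)))
        return self

    def measure_all(self):
        for q in range(self.num_qubits):
            self.ops.append(("measure", (q,)))
        return self

    def to_qasm2(self):
        """Serialise to OpenQASM 2.0 text using gates from qelib1.inc."""
        lines = [
            "OPENQASM 2.0;",
            'include "qelib1.inc";',
            f"qreg q[{self.num_qubits}];",
            f"creg c[{self.num_qubits}];",
        ]
        for name, qubits in self.ops:
            if name == "measure":
                q = qubits[0]
                lines.append(f"measure q[{q}] -> c[{q}];")
            else:
                args = ",".join(f"q[{q}]" for q in qubits)
                lines.append(f"{name} {args};")
        return "\n".join(lines)

# Bell-state circuit: Hadamard on qubit 0, then CNOT from qubit 0 to qubit 1.
bell = Circuit(2).h(0).cx(0, 1).measure_all()
print(bell.to_qasm2())
```

Keeping the program as plain data like this is what lets the lower layers of the stack (simulators, compilers, cloud platforms) consume it without caring which front-end language produced it.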

2. Quantum algorithms and applications

Moving down the stack we come to the algorithms at the heart of any desired quantum workload. As the various competing approaches to creating quantum computers improve, so do the opportunities for real-world applications. A range of software libraries and packages are being built towards specific functional areas of use (such as IBM's Qiskit Machine Learning for quantum machine learning or Google's OpenFermion for quantum chemistry), and the existing libraries of known quantum algorithms are being extended and optimised by researchers and commercial vendors (such as Stephen Jordan's Quantum Algorithm Zoo and Classiq's library).

Some quantum algorithms have achieved near-celebrity status. Others are quantum adaptations of classical algorithms or serve as building blocks for larger workloads. There are even some quantum algorithms that are functionally useless in the real world (insert a joke about physicists here if you dare), but are important examples of quantum advantage. For a deeper dive, refer to my feature on quantum algorithms, but here are some notable examples.

- Shor’s Algorithm is the “encryption cracking” algorithm proposed as a way to factor large numbers superpolynomially faster than the best known classical algorithm (it is often described as exponentially faster).

- Grover’s Search Algorithm is a useful starting point for algorithmic speed-up, offering a quadratic speed-up for unstructured data searches (on the order of √N queries rather than N).

- Deutsch-Jozsa Algorithm isn’t technically useful in and of itself, but was an early example of showing quantum advantage over classical methods.

- Quantum Fourier Transform (QFT) is the quantum analogue of the discrete Fourier transform (the transform the classical Fast Fourier Transform computes) and sits at the heart of many powerful algorithms, including Shor’s.

- Variational Quantum Eigensolver (VQE) is a hybrid algorithm being explored for near-term applications in quantum chemistry, materials simulation, and optimization problems.
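Grover's search algorithm from the list above is small enough to simulate directly. This is a plain-Python state-vector sketch with real amplitudes; `grover_search` is a hypothetical name, and the code runs on a classical CPU, so it shows the mechanism (an oracle that flips the marked item's phase, then a reflection about the mean amplitude) rather than any actual speed-up.

```python
import math

def grover_search(num_qubits, marked, iterations=None):
    """State-vector sketch of Grover's algorithm; returns outcome probabilities."""
    n = 2 ** num_qubits
    if iterations is None:
        # Near-optimal iteration count for one marked item: about (pi/4) * sqrt(N).
        iterations = math.floor(math.pi / 4 * math.sqrt(n))
    # Hadamards on every qubit of |0...0> give a uniform superposition.
    amps = [1 / math.sqrt(n)] * n
    for _ in range(iterations):
        # Oracle: flip the sign (phase) of the marked basis state.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]
    return [a * a for a in amps]

print(grover_search(2, marked=2))     # [0.0, 0.0, 1.0, 0.0]
print(grover_search(3, marked=5)[5])  # approximately 0.945
```

With four items, a single iteration lands on the marked item with certainty; with eight, two iterations give roughly 94.5%, which is why real runs still rely on repeated shots.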

3. Quantum simulators and emulators

Quantum simulators are the software tools used to replicate the behaviour of quantum systems on classical computers. They form an essential part of our workflow developing algorithms and optimising potential workloads (especially with simulators that feature the same set of gates or other elements of the specific hardware). The role of simulators has evolved as the industry itself evolves from pure academic research to potential commercial utility. The accuracy of the simulation for specific quantum hardware has improved to the point of modelling the system's unique noise and errors. Note that the following examples are subject to change or deprecation as vendors (looking at you, Qiskit 1.0) iterate on or streamline their product ranges as the industry matures.

- Google Cirq and qsim

- Intel Quantum Simulator

- Microsoft Quantum Simulators (and novel Resource Estimator)

- IonQ hardware noise model simulation

- Quantum Brilliance Qristal Emulator

- IBM Qiskit Aer
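Hardware-specific noise models of the kind described above cover gate errors, decoherence, crosstalk and more. The sketch below shows only the simplest ingredient, under a loudly stated assumption: a symmetric readout error where each measured bit is independently reported flipped with probability `p_flip`. The function name is hypothetical and the numbers are illustrative, not any real device's calibration data.

```python
from itertools import product

def apply_readout_error(ideal_probs, p_flip):
    """Apply independent bit-flip readout error to an ideal outcome distribution.

    ideal_probs maps bitstrings (e.g. "00") to probabilities. Assumption: each
    bit is misreported with probability p_flip, independently of the others.
    """
    n_bits = len(next(iter(ideal_probs)))
    noisy = {"".join(bits): 0.0 for bits in product("01", repeat=n_bits)}
    for true_bits, p_true in ideal_probs.items():
        for observed in noisy:
            flips = sum(t != o for t, o in zip(true_bits, observed))
            noisy[observed] += p_true * (p_flip ** flips) * ((1 - p_flip) ** (n_bits - flips))
    return noisy

# Ideal Bell-state statistics: only "00" and "11" should ever appear.
bell_ideal = {"00": 0.5, "11": 0.5}
print(apply_readout_error(bell_ideal, p_flip=0.02))
```

Even a 2% readout error makes "01" and "10" appear about 2% of the time each, outcomes an ideal simulator would never produce. Spotting that kind of signature is much of what a noise-aware simulator is for.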

4. Quantum cloud platforms

Before we move further down the stack we need to make a small side note on the topic of quantum cloud platforms. In the current era there are a handful of major vendors with a few hardware systems in operation. Each faces the same question of whether to attempt to sell hardware units directly, host on their own campus, sell access via the internet, or some combination of the above. And then add private interconnects, public cloud vendors, sovereign capability and research labs to the mix. It's not a given that the cloud platform model will prove to be the defining economic model for quantum computing, although it occupies the greatest mindshare from outside of the sector given the patterns of cloud computing that came prior.

Having said all of that, pay attention also to the companies who choose not to provision their systems for cloud platform access. At Quantum Brilliance my focus was on highly-parallelised edge-compute clusters using the diamond NV-center approach that enables small form factor and room-temperature QPUs (with the first ). In speaking with other quantum startups, the use cases for all forms of fixed or mobile deployment seem to apply, and there's a lot of interesting (and often undisclosed) work being done away from the web. Among those accessible online, here are some to watch.

- IBM Quantum Experience

- Amazon Braket

- Microsoft Azure Quantum

- Google Quantum Computing Service

- Strangeworks (multi-vendor platform)

- Xanadu Cloud

- Quantinuum (H-Series via multiple vendors)

5. Quantum compilers and circuit optimisation

The role of the quantum compiler is to translate high-level quantum programs into the low-level instructions to be executed on quantum hardware. While the specifics are beyond this article's scope, the process involves gate decomposition (to express the program's abstract gates in the hardware's native gate set), mapping and scheduling (to assign the logical qubits of the algorithm to physical qubits and order the operations) and details specific to the vendor and their particular system (such as fidelity, error rates and connectivity).
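Connectivity is where mapping starts to cost gates. As a minimal sketch, assume a hypothetical device whose qubits sit in a line (0-1-2-3) with CNOTs allowed only between neighbours. A CNOT between distant qubits then needs SWAPs to bring them together, and each SWAP is itself decomposed into three CNOTs. Real compilers such as TKET or the Quil compiler use far more sophisticated placement and routing heuristics; the greedy function here is purely illustrative.

```python
def route_cx_linear(control, target):
    """Route one CNOT on a hypothetical linear device (neighbour-only coupling).

    Returns native gates as ("cx", a, b) tuples. SWAPs walk the control qubit's
    state towards the target; each SWAP is decomposed into three CNOTs.
    For simplicity the SWAPs are not undone, so the logical qubit that started
    on `control` ends up on a different physical qubit afterwards.
    """
    if control == target:
        raise ValueError("control and target must differ")
    gates = []
    pos = control
    step = 1 if target > control else -1
    while abs(target - pos) > 1:
        nxt = pos + step
        # SWAP(pos, nxt) == CX(pos, nxt) CX(nxt, pos) CX(pos, nxt)
        gates += [("cx", pos, nxt), ("cx", nxt, pos), ("cx", pos, nxt)]
        pos = nxt
    gates.append(("cx", pos, target))
    return gates

for gate in route_cx_linear(0, 3):
    print(gate)
```

One logical CNOT between qubits 0 and 3 becomes seven physical CNOTs, which is why connectivity appears alongside fidelity and error rates in the compiler's concerns.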

To simplify this example stack, we will roll into this level the various forms of quantum circuit optimization, which apply techniques to minimize the number of quantum gates, circuit depth, or other resource elements without changing the underlying function. This can occur before compilation, as part of the compilation process, or later on as part of hardware-specific fine-tuning. For clarity, let's group it here within our workflow. Here are some examples to be aware of.

- Quantinuum TKET

- inQWIRE SQIR and VOQC

- Rigetti Quil Compiler

- Berkeley Quantum Synthesis Toolkit

- Microsoft QDK Compiler
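One of the simplest circuit optimizations is peephole cancellation: two identical self-inverse gates (such as H H or CX CX on the same qubits) in a row multiply to the identity and can be deleted. The tools listed above apply much richer rewrite rules; this sketch, with a hypothetical tuple format and function name, only shows the basic idea. A stack lets cancellations cascade, since removing one pair can make another pair adjacent.

```python
SELF_INVERSE = {"h", "x", "y", "z", "cx"}

def cancel_adjacent_inverses(gates):
    """Remove back-to-back pairs of identical self-inverse gates.

    Each gate is a tuple (name, *qubits). Only gates that are consecutive in the
    list are compared. This is conservative: it never changes the circuit's
    function, but it misses pairs separated by gates on unrelated qubits.
    """
    out = []
    for gate in gates:
        if out and out[-1] == gate and gate[0] in SELF_INVERSE:
            out.pop()  # the pair multiplies to the identity
        else:
            out.append(gate)
    return out

circuit = [("h", 0), ("x", 1), ("x", 1), ("h", 0), ("cx", 0, 1)]
print(cancel_adjacent_inverses(circuit))  # [('cx', 0, 1)]
```

Note that CX(0, 1) followed by CX(1, 0) is left alone: the tuples differ, and those two gates do not cancel.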

6. Quantum error correction software

The role of quantum error correction in the current era of "noisy" quantum systems is especially important. To the point that there are companies specialising in this layer of the stack. The need for these companies and the wider error correction effort is due to the fragile nature of quantum systems. While superconducting quantum computers dominate popular imagination, error correction is vital across all methods (trapped ion, photonic, NV center, etc.).

Regardless of qubit generation method, challenges arise in preparation, workload execution, and measurement. Decoherence affects all methods, along with gate errors, measurement errors, and individual qubit quality. Quantum error correction is understandably complex, but can incorporate techniques such as system redundancy (spreading the quantum information across multiple qubits), syndrome measurement (using ancillary qubits to detect errors without disturbing the encoded information), and profiling the performance of individual or clustered qubits over time. These techniques will remain important if truly fault-tolerant quantum computing is achieved, and they are an exciting topic of research in the present Noisy Intermediate-Scale Quantum (NISQ) era we find ourselves in. Major vendors and examples include the following.
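The redundancy and syndrome ideas described above can be illustrated with a classical analogue of the three-qubit bit-flip code. This sketch makes a strong simplifying assumption: it only models bit-flip errors on basis states. A real quantum code must also handle phase errors and must obtain the parity checks through ancilla qubits without measuring the data qubits directly. The function names are hypothetical.

```python
def encode(bit):
    """Redundancy: spread one logical bit across three physical bits."""
    return [bit, bit, bit]

def syndrome(code):
    """Two parity checks, analogous to measuring Z0Z1 and Z1Z2 via ancillas."""
    return (code[0] ^ code[1], code[1] ^ code[2])

# Which single position each syndrome points to (None means no error detected).
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(code):
    position = SYNDROME_TO_FLIP[syndrome(code)]
    fixed = list(code)
    if position is not None:
        fixed[position] ^= 1
    return fixed

def logical_error_rate(p):
    """Probability that two or three bits flip, which defeats this code."""
    return 3 * p ** 2 * (1 - p) + p ** 3

# Every single-bit error on either logical value is detected and corrected.
for logical in (0, 1):
    for position in range(3):
        noisy = encode(logical)
        noisy[position] ^= 1
        assert correct(noisy) == encode(logical)

print(logical_error_rate(0.01))  # roughly 3e-4, versus 1e-2 unprotected
```

The syndrome identifies which bit flipped without revealing the logical value itself, and redundancy only pays off while the physical error rate is low enough that double errors stay rare.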

7. Quantum control systems

The quantum control system is responsible for managing and controlling the operations of the quantum hardware. It handles tasks at the hardware level such as calibration, pulse shaping, and qubit control. Given the different forms of quantum computing being developed, and the range of implementations of monolithic or networked quantum systems, we can consider the term "quantum control system" to be inclusive rather than specific. Likewise there is no hard and fast definition of a "quantum operating system".

This will likely change over time as the phase of development moves from the "Science to Technology" phases and into "Engineering to Product". Especially where closer alignment with existing product patterns and the terminology of the users and/or marketplace are desirable. For the most part, the control system will be an internal resource (something between an OS and firmware) but the following vendors and products are notable.

- Quantum Machines OPX+

- Q-CTRL Quantum Control (resource

- Qblox and Orange Quantum Systems Quantify-Core
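To give a flavour of the pulse shaping and calibration a control system handles, here is a deliberately idealised sketch. It assumes a resonant drive where the qubit's rotation angle equals the time integral of the drive's Rabi rate, and scales a sampled Gaussian envelope so that the pulse area produces a pi rotation (a bit flip). The sample count, width, and 1 ns sample time are illustrative assumptions, and the function names are hypothetical. Real control stacks calibrate empirically (for example by sweeping amplitude in a Rabi experiment) and use refined shapes such as DRAG pulses to suppress leakage.

```python
import math

def gaussian_envelope(num_samples, sigma):
    """Unit-peak Gaussian envelope sampled at integer time steps."""
    centre = (num_samples - 1) / 2
    return [math.exp(-((t - centre) ** 2) / (2 * sigma ** 2)) for t in range(num_samples)]

def calibrate_amplitude(envelope, dt, target_angle=math.pi):
    """Scale factor (in rad/s) so the pulse area equals the target rotation angle.

    Idealised model: theta = sum(amplitude * envelope[t] * dt) for a resonant drive.
    """
    area = sum(envelope) * dt
    return target_angle / area

envelope = gaussian_envelope(num_samples=160, sigma=40)
dt = 1e-9  # seconds per sample (an assumed, illustrative value)
amp = calibrate_amplitude(envelope, dt)
rotation = sum(amp * e * dt for e in envelope)
print(f"peak Rabi rate: {amp:.3e} rad/s, rotation: {rotation:.6f} rad")
```

Shaping the pulse (rather than switching a square pulse on and off) limits its frequency content, which is one reason pulse-level control is exposed to researchers who want it.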

8. Quantum hardware

The final layer in our stack is the quantum hardware itself. It's useful to remember that there is no single or right way to generate and work with qubits. Nor is there a clear market leading approach. Each method or approach has its own challenges, and there may prove to be multiple approaches with benefits for specific scenarios.

The hardware layer is what many think of when they hear the term "quantum computing", much in the same way that the early tube and valve computing devices defined the terminology and language by sheer force of mechanical interaction. Switches and punch cards gave way to instructions and programs over time, in turn being extended by memory and storage, and then connected by networks and servers.

These patterns are present in the research and development of quantum devices and, as we can see from the above, each layer of this example quantum stack gives existing expertise an opportunity to contribute new and interesting ideas. Hopefully in ways we might never have considered, given the nuance that each underlying technology and layer in the stack provides.

TL;DR?

The work being done by quantum physicists, electrical and electronic engineers, fabricators and all kinds of manipulators of atoms is not only supported but enabled by those of us who wrangle the bits and (zetta)bytes. It's a great time to get involved.

A good start for new learners is Q-CTRL's Black Opal, Delft University's high-level MOOC on edX, and the inevitable path into IBM's Qiskit. For those of you from the C# side of things, this Microsoft introduction has a quick look at their approach with Q# and how it's rolled into the Azure ecosystem. And the Classiq algorithm library is worth bookmarking.

I'll do a dedicated article on learning quantum for software engineers soon, as this is a topic that I get emailed about nearly weekly since my Open Source Summit talk (and the rolling layoffs of engineering talent that have occurred since). While any industry shift is hard for the people and families affected by the colder edge of the economics, it can in turn be an opportunity to unlock the talent (and the career paths) that would otherwise be locked away. So if you're considering further exploration, please do so, and drop me a line if there's anything I can do to help. And be sure to sign up to the Product In Deep newsletter where we dig into the strategy and craft that goes into shipping real products in the Deep Tech and Frontier Technology realms.
