Introduction

Multithreading is an important concept in programming that allows the creation and management of multiple threads of execution within a single application. By leveraging multithreading, programs can perform multiple tasks simultaneously, making them faster and more efficient. In this article, we will explore what multithreading is, why it's important to learn, what processes and threads are, and how modern programming languages like Golang support this technology.

What Is Multithreading and Why Learn It?

Multithreading is the ability of a program to execute multiple threads (parts of code) simultaneously. It’s similar to how a chef in a kitchen can chop vegetables while also preparing a sauce. The benefits of using multithreading include:

- Increased program speed through parallel task execution.

- Efficient use of processor resources, especially on multi-core systems.

- Improved responsiveness of programs, especially those performing long-running operations like downloading data from the internet.

Learning multithreading is crucial for developing high-performance and efficient applications, particularly in today’s environment, where users expect software to be fast and responsive.

What Is a Process?

A process is a running program that includes the program code, data, and resources such as files and memory needed for its execution. When you launch an application on your computer, the operating system creates a process for it.

Example: When you open a browser, the operating system creates a process that manages the browser and its tabs.

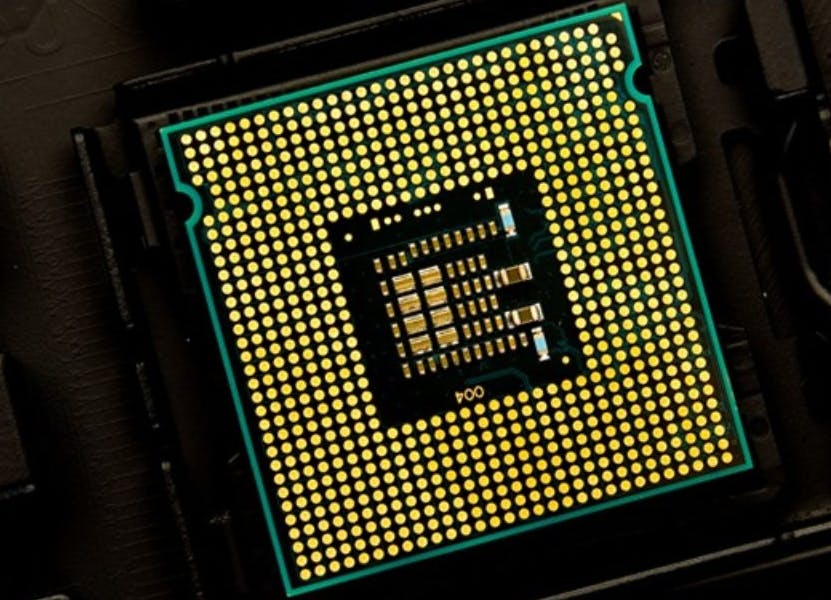

What Is a Process on a Processor?

A processor (or CPU) is the main component of a computer that executes program instructions. A process on a processor represents the execution of a program, broken down into a sequence of commands executed by the processor. A process can run on one or more processor cores at a time, depending on how many threads it has and how the operating system schedules them.

Example: If you have a multi-core processor, the operating system can distribute different processes across various cores to ensure more efficient task execution.

What Are Clock Cycles in a Processor?

Clock cycles (or cycles) in a processor are the smallest units of time in which the processor performs one or more operations.

The processor's clock speed is measured in hertz (Hz), typically in gigahertz (GHz), indicating the number of cycles per second.

Example: If a processor has a clock speed of 3 GHz, it means it performs 3 billion cycles per second. Each cycle involves performing one or more elementary operations, such as reading or writing data, performing arithmetic operations, etc.
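The arithmetic behind the 3 GHz example can be worked through in a few lines of Go. This is only an illustrative sketch: the helper names cycleNanos and cyclesIn are made up for this article, and a real processor does not necessarily complete exactly one instruction per cycle.

```go
package main

import "fmt"

// cycleNanos returns the duration of one clock cycle in nanoseconds
// for a clock frequency given in hertz (hypothetical helper).
func cycleNanos(hz float64) float64 {
	return 1e9 / hz
}

// cyclesIn returns how many clock cycles elapse in the given number of seconds.
func cyclesIn(hz, seconds float64) float64 {
	return hz * seconds
}

func main() {
	const clock = 3e9 // 3 GHz, as in the example above

	fmt.Printf("one cycle lasts about %.3f ns\n", cycleNanos(clock))
	fmt.Printf("cycles in one millisecond: %.0f\n", cyclesIn(clock, 0.001))
}
```

At 3 GHz a single cycle lasts roughly a third of a nanosecond, which is why even a very short delay corresponds to millions of cycles.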

How Clock Cycles Relate to Processes on a Processor

Clock cycles are directly related to the execution of processes. Each process on a processor is carried out through a series of cycles during which the processor handles the process's instructions. In each cycle, the processor can perform one or more operations, such as:

- Reading an instruction from memory.

- Decoding the instruction.

- Executing an arithmetic or logical operation.

- Reading or writing data.

Therefore, the execution speed of a process depends on the processor's clock speed: the more cycles per second, the more instructions can be executed in the same period.

Example: Imagine the processor is a factory and the cycles are the work shifts. In each shift (cycle), the factory performs a certain amount of work (process instructions). The more shifts per day (higher clock speed), the more work can be completed.

Let’s see the details with a small table:

- Fetch: The instruction is retrieved from memory.

- Decode: The instruction is interpreted to determine what actions are required.

- Execute: The processor performs the required actions.

- Writeback: The result of the execution is written back to memory or a register.

| Clock Cycle 1 | Clock Cycle 2 | Clock Cycle 3 | Clock Cycle 4 | Clock Cycle 5 |
| --- | --- | --- | --- | --- |
| Fetch Instruction 1 | Decode Instruction 1 | Execute Instruction 1 | Writeback Instruction 1 | Fetch Instruction 2 |

- Clock Cycle 1, Fetch Instruction 1: The processor reads the instruction from memory.

- Clock Cycle 2, Decode Instruction 1: The processor decodes the fetched instruction to understand what needs to be done.

- Clock Cycle 3, Execute Instruction 1: The processor performs the operation specified by the instruction.

- Clock Cycle 4, Writeback Instruction 1: The processor writes the result of the operation back to a register or memory.

- Clock Cycle 5, Fetch Instruction 2: The processor reads the next instruction from memory (and starts the cycle again for the next instruction).

In a pipelined processor, multiple instructions can be in different stages of execution simultaneously, which increases efficiency. For example, while one instruction is being executed, another can be fetched, and yet another can be decoded. This overlap improves the throughput of the processor, allowing it to execute more instructions per unit of time.
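The effect of this overlap can be estimated with simple arithmetic. The sketch below assumes an idealized four-stage pipeline (Fetch, Decode, Execute, Writeback) with one cycle per stage and no stalls; real pipelines are more complicated, and the function names are hypothetical.

```go
package main

import "fmt"

// sequentialCycles returns the cycles needed when every instruction must
// pass through all stages before the next instruction starts.
func sequentialCycles(instructions, stages int) int {
	return instructions * stages
}

// pipelinedCycles returns the cycles needed in an idealized pipeline:
// the first instruction takes `stages` cycles, and each following
// instruction completes one cycle after the previous one.
func pipelinedCycles(instructions, stages int) int {
	if instructions == 0 {
		return 0
	}
	return stages + instructions - 1
}

func main() {
	const stages = 4 // Fetch, Decode, Execute, Writeback

	for _, n := range []int{1, 4, 100} {
		fmt.Printf("%d instructions: sequential=%d cycles, pipelined=%d cycles\n",
			n, sequentialCycles(n, stages), pipelinedCycles(n, stages))
	}
}
```

Under these assumptions, 100 instructions need 400 cycles one after another but only 103 cycles when pipelined, so throughput approaches one instruction per cycle.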

What Is a Thread, and How Is It Different?

A thread is the smallest unit of processing that can be executed independently within a process. A single process can have multiple threads running in parallel, sharing common resources such as memory and files.

The main difference between a process and a thread:

- Processes are independent and have their own resources.

- Threads within the same process share resources and can interact directly with each other.

Example: Imagine a process as an office building (program) and threads as individual employees (parts of the program) working in the building. They can work on different tasks simultaneously but share common resources like the internet or printer.

Multithreading in Different Programming Languages

Multithreading is essential in modern computing because it enables applications to execute multiple tasks concurrently, enhancing performance and responsiveness. This section explores how various programming languages address multithreading, why it's important, and the types of threads they support.

Why Multithreading is Important

Multithreading allows programs to perform several operations simultaneously, which can lead to significant performance improvements, particularly on multi-core processors. By breaking down tasks into smaller threads that can run concurrently, programs can make better use of the available CPU cores, leading to faster and more efficient execution.

Benefits of Multithreading

- Increased Performance: By running multiple threads in parallel, applications can process data more quickly. This is especially useful for tasks that can be divided into independent units, such as processing large datasets or handling multiple network requests.

- Improved Responsiveness: In user-facing applications, multithreading ensures that the user interface remains responsive even while performing intensive background tasks. For example, a web browser can continue to respond to user input while loading multiple web pages simultaneously.

- Resource Utilization: Multithreading makes efficient use of system resources by keeping the CPU busy with multiple threads, rather than allowing it to sit idle while waiting for one task to complete.
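As a rough illustration of dividing work into independent units, the sketch below sums a slice by giving each worker its own chunk. It uses Go, the language this article focuses on later; parallelSum is a hypothetical helper, and whether this is actually faster than a plain loop depends on the data size and the machine.

```go
package main

import (
	"fmt"
	"sync"
)

// parallelSum splits nums into at most `workers` chunks and sums each chunk
// in its own goroutine. Each goroutine writes only to its own slot in
// `partial`, so no locking is needed.
func parallelSum(nums []int, workers int) int {
	if workers < 1 {
		workers = 1
	}
	partial := make([]int, workers)
	chunk := (len(nums) + workers - 1) / workers // ceiling division

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		start := w * chunk
		if start >= len(nums) {
			break // fewer elements than workers
		}
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		wg.Add(1)
		go func(w, start, end int) {
			defer wg.Done()
			sum := 0
			for _, v := range nums[start:end] {
				sum += v
			}
			partial[w] = sum
		}(w, start, end)
	}
	wg.Wait() // all partial sums are written once Wait returns

	total := 0
	for _, p := range partial {
		total += p
	}
	return total
}

func main() {
	nums := make([]int, 1000)
	for i := range nums {
		nums[i] = i + 1 // 1, 2, ..., 1000
	}
	fmt.Println(parallelSum(nums, 4)) // prints 500500
}
```

The chunks do not overlap, which is what makes the units independent: no goroutine ever reads or writes data that another one is modifying.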

Challenges in Multithreading

Despite its benefits, multithreading introduces complexity. Managing multiple threads requires careful handling of shared resources to avoid issues such as race conditions, deadlocks, and synchronization problems. Different programming languages offer various mechanisms to manage these challenges.
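One common mechanism is a mutex, which lets only one thread at a time touch a shared value. The sketch below shows the idea in Go with a hypothetical Counter type; without the lock, concurrent increments could overwrite each other and the final count would be unpredictable.

```go
package main

import (
	"fmt"
	"sync"
)

// Counter is a hypothetical shared resource protected by a mutex.
type Counter struct {
	mu sync.Mutex
	n  int
}

// Inc increments the counter while holding the lock.
func (c *Counter) Inc() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.n++
}

// Value returns the current count while holding the lock.
func (c *Counter) Value() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.n
}

// countConcurrently starts `goroutines` goroutines that each call Inc
// `perGoroutine` times, and returns the final count.
func countConcurrently(goroutines, perGoroutine int) int {
	var c Counter
	var wg sync.WaitGroup
	for i := 0; i < goroutines; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perGoroutine; j++ {
				c.Inc()
			}
		}()
	}
	wg.Wait()
	return c.Value()
}

func main() {
	fmt.Println(countConcurrently(100, 1000)) // prints 100000
}
```

Go's race detector (go run -race) can help find places where such protection is missing.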

Types of Threads

- Kernel-Level Threads: These threads are managed directly by the operating system kernel. They offer good performance in multi-core systems but are heavier in terms of resource consumption compared to user-level threads.

- User-Level Threads: Managed by a user-level library rather than the OS kernel, these threads are lighter and faster to create but might not be as efficient on multi-core systems because the kernel is unaware of their existence.

- Green Threads: A type of user-level thread that is scheduled by a runtime library or virtual machine instead of the operating system. Languages like Go use green threads (goroutines) to achieve efficient multithreading without the overhead associated with kernel-level threads.
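To give a feel for how lightweight runtime-scheduled threads can be, here is a sketch that starts ten thousand goroutines and waits for all of them. The function name runMany and the count are arbitrary choices for illustration; the example says nothing precise about memory use or timing.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// runMany starts n goroutines that each atomically increment a shared
// counter, waits for all of them, and returns the final count.
func runMany(n int) int64 {
	var done int64
	var wg sync.WaitGroup
	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer wg.Done()
			atomic.AddInt64(&done, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&done)
}

func main() {
	fmt.Println(runMany(10000)) // prints 10000
}
```

Creating the same number of kernel-level threads would typically be far more expensive, which is the trade-off the list above describes.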

Multithreading in Various Programming Languages

C/C++

C and C++ provide low-level control over multithreading through libraries like POSIX threads (pthreads) on Unix-like systems and the Windows threads API on Windows. These libraries offer extensive control but require meticulous management to prevent concurrency issues. Developers must manually handle synchronization and resource sharing, which can be error-prone and complex.

Java

Java integrates multithreading into its core language features through the java.lang.Thread class and the java.util.concurrent package. Java’s multithreading model is built on top of the JVM, which abstracts many complexities of thread management. The JVM manages thread scheduling and provides high-level concurrency utilities, making it easier for developers to write and manage multithreaded applications.

Java's approach helps in creating scalable applications, particularly in server-side and enterprise environments.

Python

Python supports multithreading through the threading module, which provides a higher-level interface for working with threads. However, Python's Global Interpreter Lock (GIL) limits true parallel execution in multithreaded programs. The GIL ensures that only one thread executes Python bytecode at a time, which simplifies memory management but can be a bottleneck in CPU-bound tasks.

To circumvent this limitation, Python developers often use the multiprocessing module, which achieves true parallel execution by using separate processes instead of threads, each with its own interpreter and its own GIL.

C#

C# offers robust support for multithreading through the System.Threading namespace and the Task Parallel Library (TPL). These frameworks provide high-level abstractions for creating and managing threads, as well as tools for parallel programming. The TPL simplifies the development of parallel and asynchronous code, making it easier to write responsive and scalable applications, particularly in the .NET ecosystem.

Golang

Golang (or Go) is a modern programming language developed by Google. It has built-in support for multithreading using goroutines – lightweight threads managed by the Go runtime rather than the operating system.

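Goroutines in Go are implemented on top of system threads with the help of a scheduler that efficiently distributes goroutines across available processor cores. The Go scheduler uses an "M:N" model, in which M goroutines are multiplexed onto N system threads, with N usually much smaller than M. (The letter M in "M:N" is a count of goroutines and is unrelated to the "M" that stands for machine in the scheduler's terminology.)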

Key components of Go's scheduler:

- Goroutines – Lightweight threads that run concurrently. They are launched using the `go` keyword.

- Machines (M) – System threads that execute goroutines. One M can execute multiple goroutines sequentially.

- Processors (P) – Abstraction representing the resources needed to execute goroutines. Each P is associated with one M and manages a queue of goroutines to be executed.

The Go scheduler distributes goroutines among processors and machines, ensuring efficient use of all available processor cores.
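Some of these scheduler values can be inspected from a running program through the standard runtime package. The sketch below is only a quick look at them; schedulerInfo is a hypothetical helper, and the numbers it reports depend on the machine and on what else the program is doing.

```go
package main

import (
	"fmt"
	"runtime"
)

// schedulerInfo reports the number of logical CPUs, the current GOMAXPROCS
// setting (the number of Ps), and the number of goroutines that exist now.
func schedulerInfo() (cpus, procs, goroutines int) {
	cpus = runtime.NumCPU()
	procs = runtime.GOMAXPROCS(0) // an argument below 1 only queries the setting
	goroutines = runtime.NumGoroutine()
	return cpus, procs, goroutines
}

func main() {
	cpus, procs, goroutines := schedulerInfo()
	fmt.Println("logical CPUs:", cpus)
	fmt.Println("GOMAXPROCS (number of Ps):", procs)
	fmt.Println("goroutines right now:", goroutines)
}
```

By default GOMAXPROCS matches the number of logical CPUs available to the program, so goroutines can run in parallel on every core.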

Example of creating a goroutine in Go:

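```go
package main

import (
	"fmt"
	"time"
)

// sayHello is not shown in the original example; this body is an assumed minimal version.
func sayHello() { fmt.Println("Hello from a goroutine!") }

func main() {
	go sayHello()           // Launch the function in a separate goroutine
	time.Sleep(time.Second) // Crude wait so main does not exit before the goroutine runs
}
```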

In this example, the sayHello function is launched as a goroutine and will run concurrently with the main function. Using goroutines allows writing efficient and parallel programs with minimal overhead.
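The time.Sleep call in the example is only a rough way to wait: it guesses how long the goroutine needs. A more dependable pattern, sketched here with sync.WaitGroup from the standard library, blocks until every goroutine has reported that it is done. The function greetAll and its messages are invented for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// greetAll starts n goroutines, lets each one write a message into its own
// slot, and returns the messages once all goroutines have finished.
func greetAll(n int) []string {
	msgs := make([]string, n)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done() // signal completion even if the goroutine returns early
			msgs[i] = fmt.Sprintf("hello from goroutine %d", i)
		}(i)
	}
	wg.Wait() // block until every goroutine has called Done
	return msgs
}

func main() {
	for _, m := range greetAll(3) {
		fmt.Println(m)
	}
}
```

Because each goroutine writes to a different slice element and main reads only after Wait returns, the messages come back in index order even though the goroutines may run in any order.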

Rust

Rust provides safe and efficient multithreading through its ownership model and type system, which prevents data races at compile time. Rust’s concurrency model leverages threads provided by the standard library, and the language’s strict compile-time checks ensure that shared resources are accessed safely. This reduces the likelihood of concurrency bugs and makes multithreaded Rust programs both performant and reliable.

Why Different Approaches?

The varying approaches to multithreading across programming languages are influenced by factors such as language design philosophy, target applications, and the need for abstraction versus control. High-level languages like Java and C# abstract many threading details to simplify development, making them suitable for large-scale enterprise applications.

In contrast, languages like C and C++ offer fine-grained control, catering to systems programming and applications requiring high performance and low-level resource management.

Understanding these different approaches and their respective benefits and trade-offs is crucial for developers to choose the right tools and techniques for their specific use cases, leading to the development of efficient, high-performance applications.

Conclusion

Multithreading is a powerful tool that enables programs to perform multiple tasks simultaneously, significantly enhancing their speed and efficiency. In this guide, we've explored several key concepts fundamental to understanding multithreading: processes, threads, clock cycles, and how different programming languages implement multithreading.

By grasping these concepts, we gain a deeper insight into how operating systems and applications manage multiple tasks concurrently. Understanding processes helps us see how applications run in isolated environments while understanding threads reveals how these processes can perform multiple operations at once. Knowing about clock cycles provides a glimpse into the fundamental workings of a CPU, illustrating how it executes instructions in a series of timed steps.

Moreover, we've seen how modern programming languages like Golang simplify the use of multithreading. Golang's lightweight goroutines and efficient scheduler demonstrate how advanced languages provide robust tools for developers to harness the full power of multithreading with ease.

Armed with this knowledge, we can more effectively read and write code, especially in multithreaded environments. Whether we're debugging complex multithreaded applications or optimizing code for better performance, our understanding of these foundational concepts enables us to make informed decisions and implement solutions that are both efficient and scalable.

Multithreading opens up new possibilities for creating high-performance and responsive applications. By learning and utilizing these techniques, we can develop software that better leverages modern hardware capabilities, ultimately delivering better user experiences and more powerful computing solutions.

Take care!
