2023-11-28 · Author: www.bellingcat.com

Warning: This article discusses explicit adult content and child sexual abuse material (CSAM).

A US artificial intelligence company surreptitiously used financial services company Stripe, which bans processing payments for adult material, to collect money for a service that can create nonconsensual pornographic deepfakes, an investigation by Bellingcat can reveal.

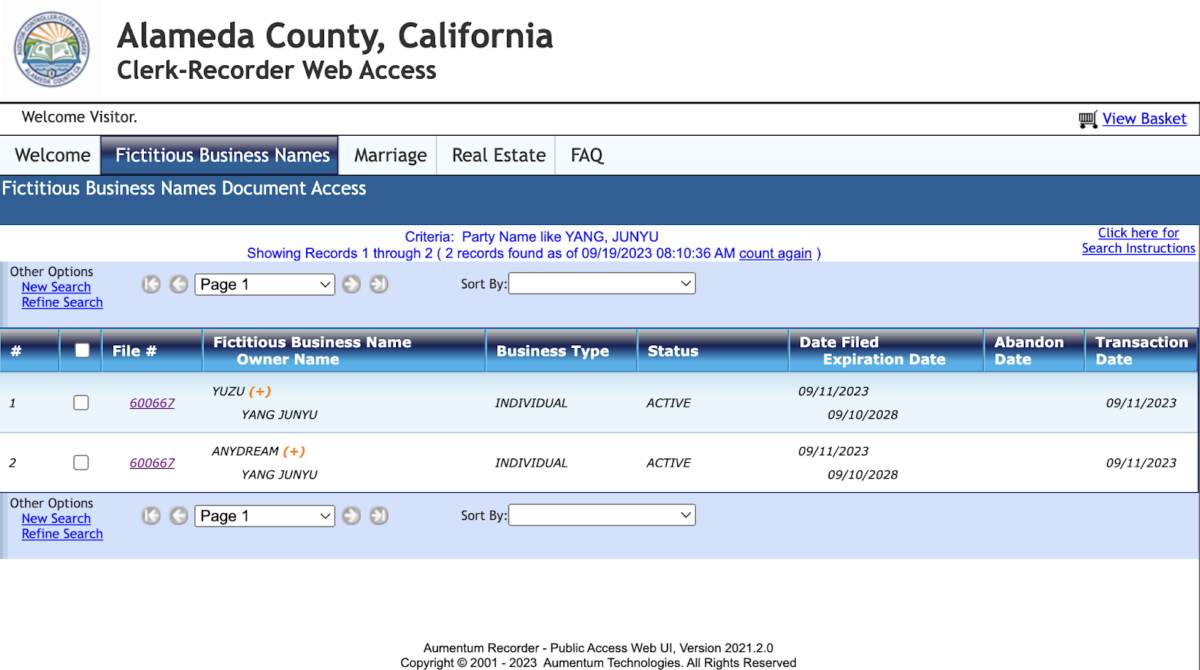

California-based AnyDream routed users through a third-party website posing as a remote hiring network to a Stripe account in the name of its founder, Junyu Yang, likely to avoid detection of its violation of Stripe's adult content ban. Stripe said it could not comment on individual accounts but, shortly after the payments provider was contacted for comment last week, the account was deleted.

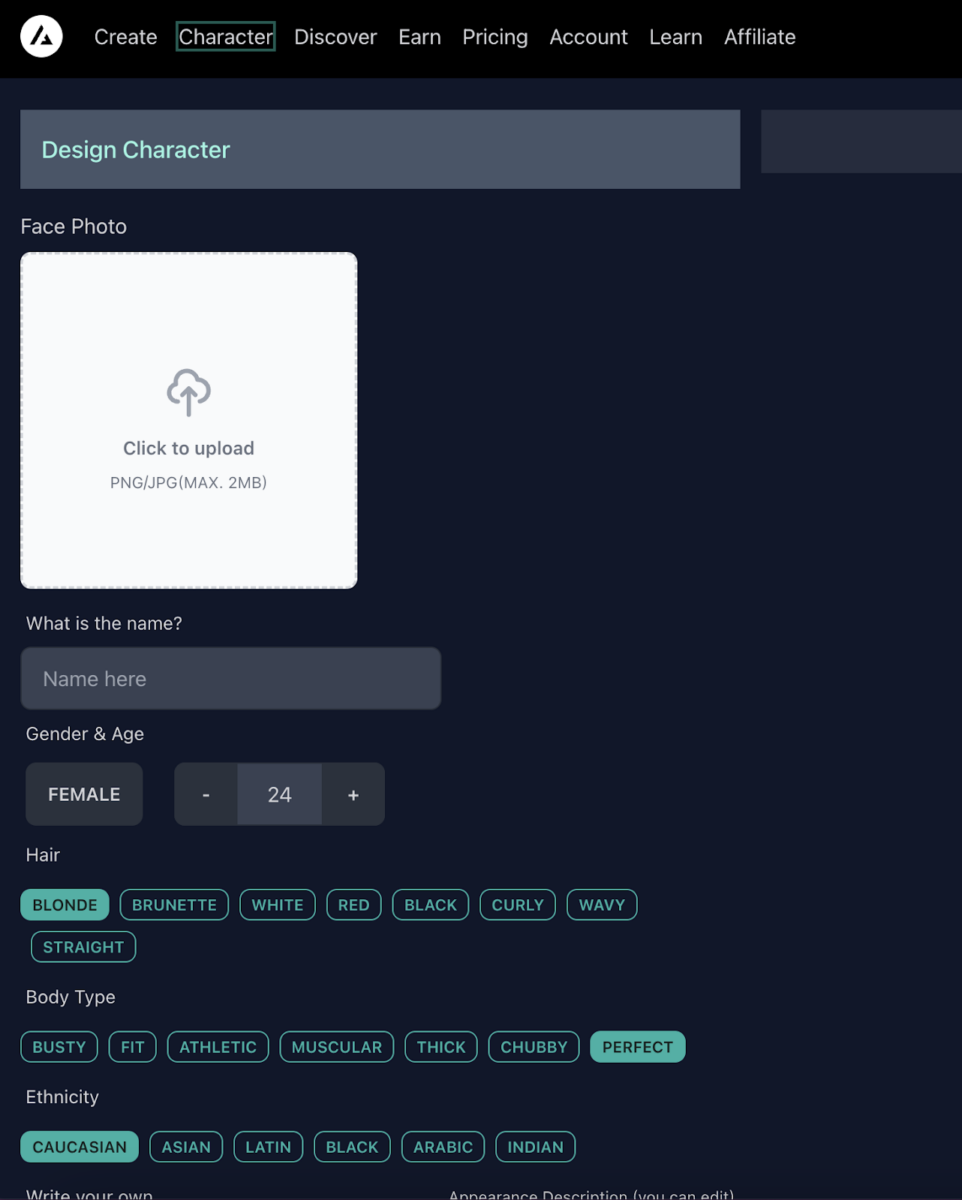

AnyDream, which allows users to upload images of faces to incorporate into AI-image generation, has been used in recent weeks to create nonconsensual pornographic deepfakes with the visage of a schoolteacher in Canada, a professional on the US East Coast, and a 17-year-old actress.

Bellingcat is not naming any of the women who were targeted to protect their privacy but was able to identify them because AnyDream posted the images to its website along with the prompts used to create them, which included their names.

The company has over ten thousand followers on Instagram and over three thousand members in its Discord server, where many users share their AI-generated creations.

AnyDream claimed, in an email sent from an account bearing the name "Junyu Y", that Yang is no longer with the company. This email was sent 121 minutes after an administrator account in the company's Discord server, which identified itself as "Junyu" in April and gave out his personal Gmail address earlier this month, handled a user request on the messaging platform.

Asked about this, AnyDream said that it had taken control of Yang's Discord account, which also linked out to his personal account on X, formerly Twitter, until the link was removed after Bellingcat contacted the company. The name of the email account previously bearing his name was then changed to "Any Dream."

AnyDream claimed its purported new owners, who it refused to identify but said took over sometime this month, left Yang’s name on the Stripe account “during the testing process by mistake.”

However, the Stripe account in Yang's name was used to collect payments via surreptitious third-party routing as early as October 10. Yang's personal email address was given out to users in the company's Discord server weeks later, suggesting that, whether or not he remains with the company, he was involved when the routing was set up.

“We recognized the issue of processing through Stripe during the due diligence of our transition,” the company said, in an email, adding there “will be a new payment provider replacing Stripe very soon.”

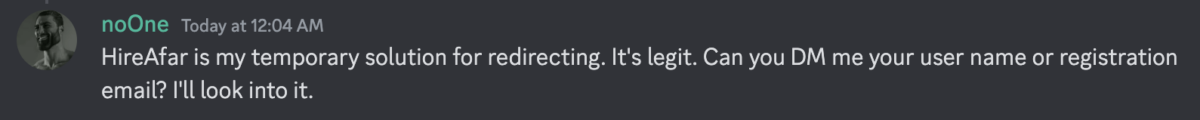

The company avoided answering questions about why it had continued to surreptitiously route Stripe payments through the third-party site. Yang, whose Discord account referred to the routing as "my temporary solution" for collecting payments on October 16, did not reply to multiple requests for comment.

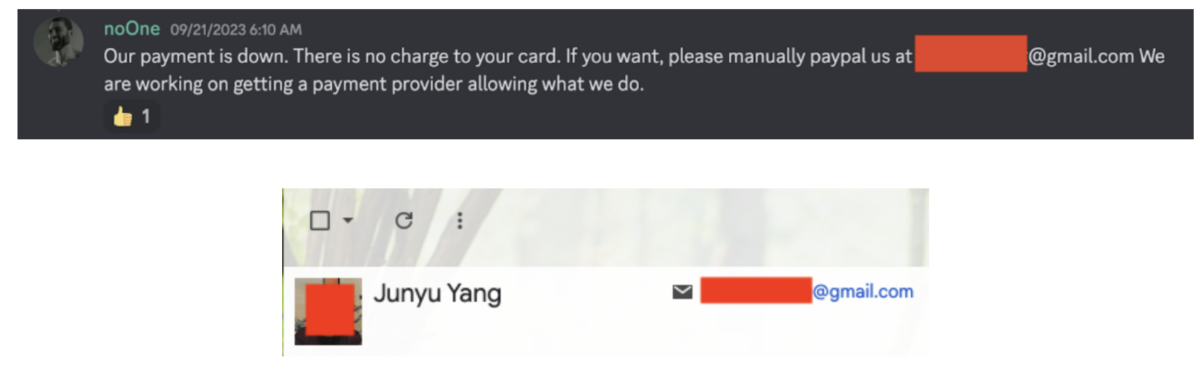

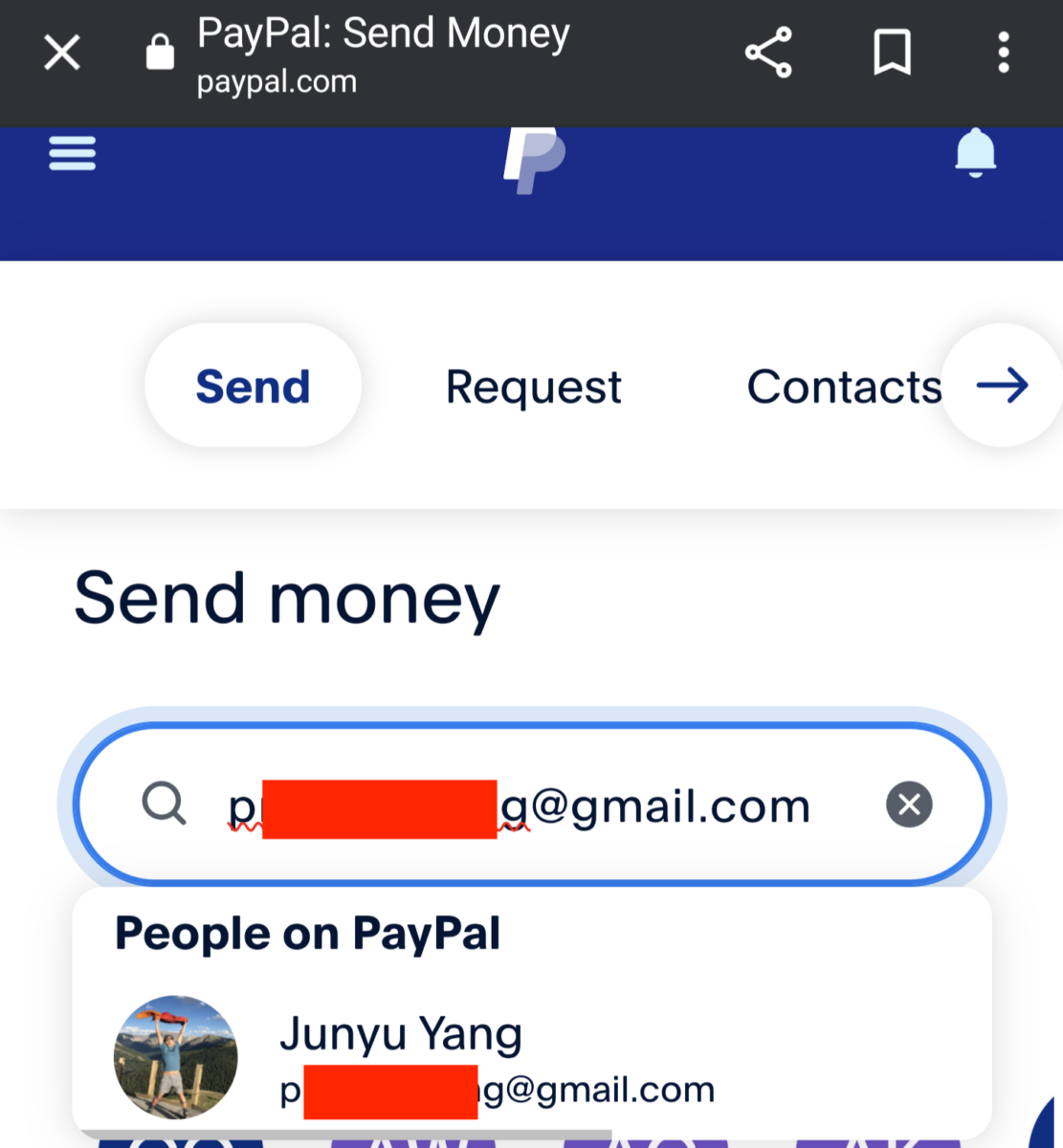

Bellingcat also found that Yang directed users to make payments to his personal PayPal account, potentially violating PayPal's terms, which ban adult content. AnyDream said it has stopped using PayPal; the company last directed a user to make a payment to Yang's personal email via PayPal on November 2.

While AnyDream declined to provide any information on its purported new owners, Bellingcat was able to identify a man who lists himself as co-founder, a serial entrepreneur named Dmitriy Dyakonov, who also uses the aliases Matthew Di, Matt D.Y., Matt Diakonov, and Matthew Heartful.

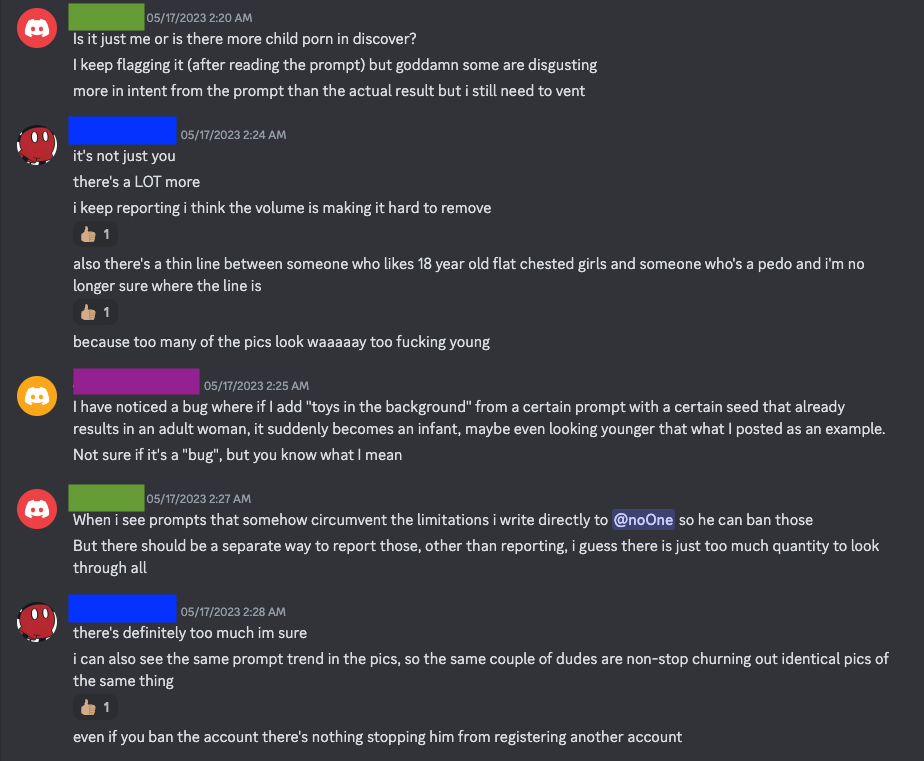

Meanwhile, payment issues are not the only concern at AnyDream. Users of the platform, which can generate pornographic material with AI using uploaded images of someone's face, alleged earlier this year that the company's site and Discord server contained depictions of girls who, in their view, looked underage.

In a statement to Bellingcat, AnyDream stressed that it opposes and bans the generation of CSAM, but acknowledged it "did have some issues in the beginning."

“We have since banned thousands of keywords from being accepted by the AI image generator,” the company added. “We also have banned thousands of users from using our tool. Unfortunately, AI creates a new challenge in age verification. With AI, there are images where the character obviously looks too young. But some are on the border.”

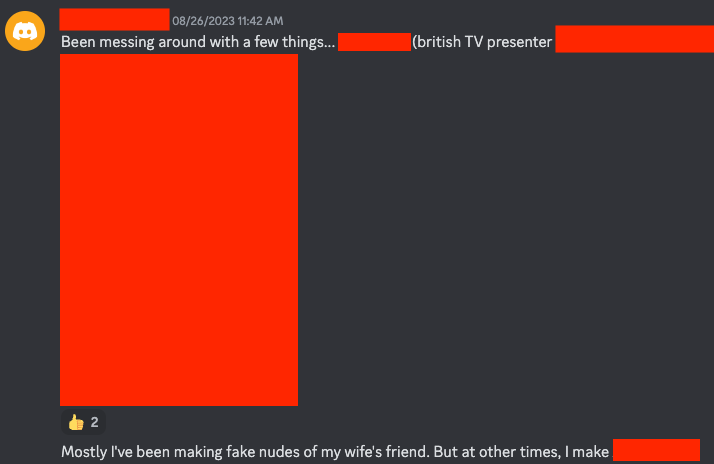

“Mostly I’ve been making fake nudes of my wife’s friend”

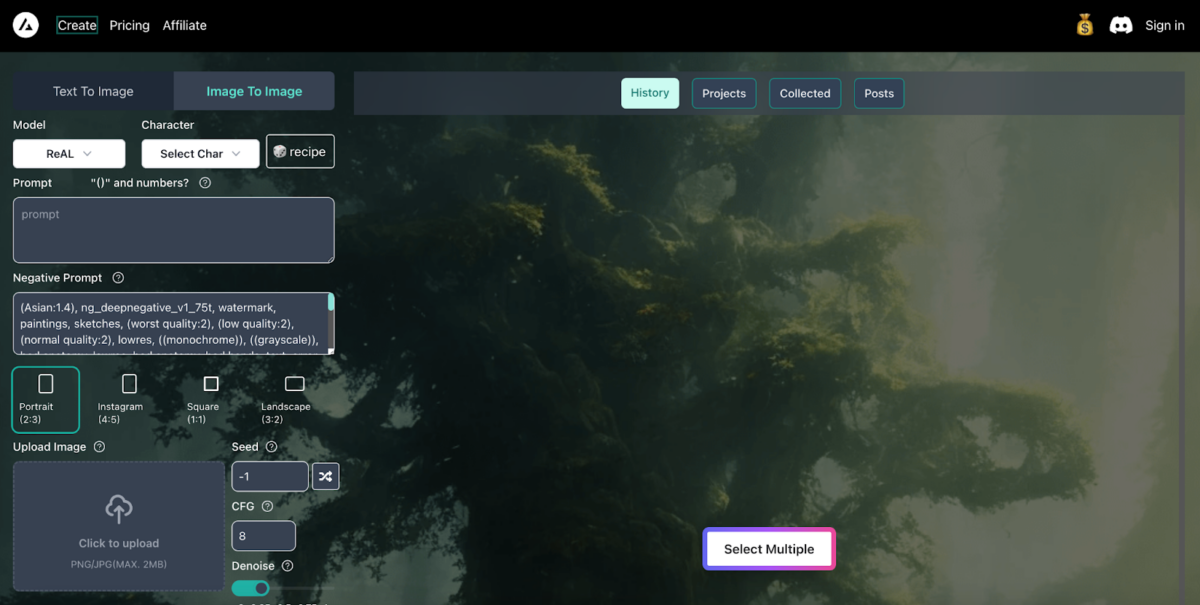

AnyDream is one of dozens of platforms for generating pornographic content that have proliferated alongside the recent boom in AI tech. Founded earlier this year by Yang, a former data scientist at LinkedIn and Nike according to his LinkedIn profile, it lets users generate pornographic images based on text prompts. Users buy “tokens” which allow them to create AI-generated images, including the option of uploading photos of a face to incorporate.

This allows anyone to instantly generate photorealistic explicit images featuring the facial likeness of nearly any person of their choosing, with or without their consent. Users often share images they have made in the company’s Discord server, many of them pornographic, and some are also published on a “Discover” page on the company’s website that highlights new and popular content.

Bellingcat found multiple incidents of AnyDream being used to generate nonconsensual pornographic deepfakes of private citizens. One user publicly posted nonconsensual AI-generated porn of his ex-girlfriend, a professional on the US East Coast, on social media. He named and tagged her personal accounts in the posts. AnyDream's website also prominently displayed the photos.

Last week, a user generated images using the name and likeness of a grade school teacher in western Canada; those images also appeared on the company's website.

On 26 August, another user shared in the company’s Discord server that they had generated AI nudes of their wife’s friend. On 22 October another solicited advice, “like i have a picture of this girl and i want to use her face for the pictures.” A moderator replied with detailed guidance.

AnyDream said in a statement that it is working to limit deepfaking to "only users who have verified their identities in the near future," though it did not offer a specific timeline. The company said its primary business case is for Instagram and OnlyFans creators who can make deepfake images of themselves, "saving thousands of dollars and time per photoshoot." For now, however, users can still create nonconsensual pornographic deepfakes.

These findings add to growing concerns about AI content generation, especially the unchecked creation of pornographic images using women’s likenesses without their consent.

“Image manipulation technology of this kind is becoming highly sophisticated and easily available, making it almost difficult to distinguish ‘real’ nudes from deep fakes,” Claire Georges, a spokesperson for the European Union law enforcement agency Europol, told Bellingcat about the broader issue of nonconsensual deepfakes.

Many countries, including the US, have no national law governing deepfake porn. In the US, a handful of states, including California, New York, Texas, Virginia, and Wyoming, have made sharing such images illegal.

Ninety-six per cent of deepfake images are pornography, and 99 per cent of those target women, according to a report by the Centre for International Governance Innovation. Seventy-four per cent of deepfake pornography users told a survey by security firm Home Security Heroes they don’t feel guilty about using the technology.

Of note, the most common targets of nonconsensual AI porn are female celebrities. Some image generator platforms that can produce pornography, such as Unstable Diffusion, ban celebrity deepfakes.

On AnyDream, pornographic deepfakes of celebrities remain commonplace. Shared on the platform’s website and Discord in recent days and weeks are pornographic deepfake images using the face of a sitting US congresswoman and the faces of several multiplatinum Grammy Award-winning singer-songwriters and Academy Award-winning actresses.

AnyDream said it has banned thousands of celebrity names from image prompts, but acknowledged, “People can still misspell it to a minor degree to get AI to create it.”

“We are in the process of creating a better moderation to counter that,” the company added, in a statement. “Unfortunately, it’s an iterative process. I personally am not very plugged into pop culture so I actually have a hard time telling who is a celebrity and who is not.”

However, because the text prompts used to generate images are publicly shared on AnyDream's website, where anyone can review them, Bellingcat was able to determine that the correctly spelt names of several celebrities, including two actresses who have starred in multibillion-dollar film franchises, were used to create nonconsensual pornographic deepfakes in recent days and weeks.

“Too many of the pics look waaaaay too f—king young”

One of the greatest concerns among experts regarding the proliferation of AI image generation technology is the potential for creating pornographic material depicting underage subjects. AnyDream, by the company’s own admission, has struggled to contain this material despite banning it, and the company’s filters to prevent the generation of underage content have sometimes failed. This has led some of the platform’s own users to flag inappropriate material on the “Discover” page of AnyDream’s website.

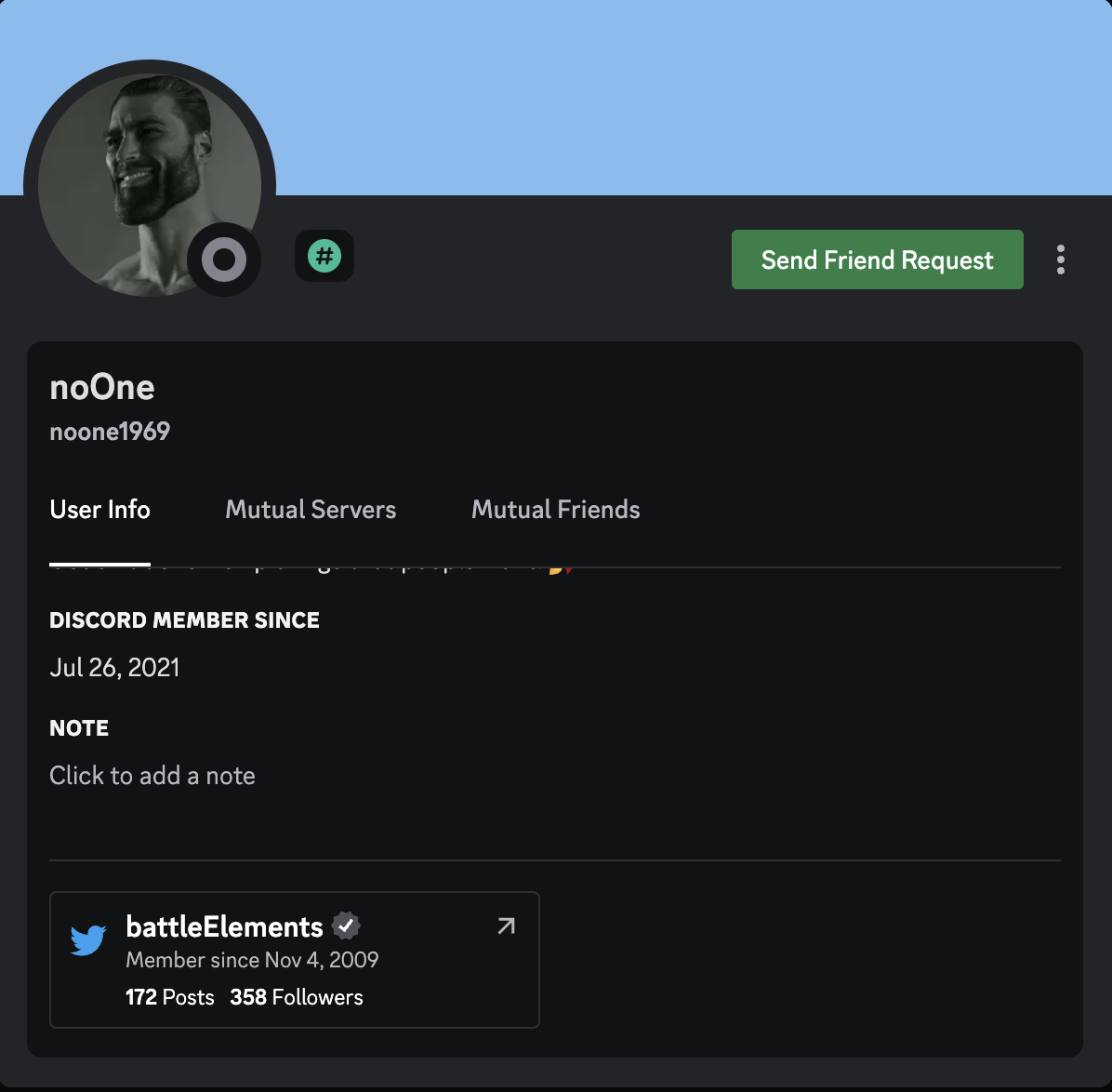

The AnyDream Discord server's chief administrator, who uses the handle @noOne, has responded to past complaints by removing offending images, sometimes within hours and sometimes only after as long as a day, and by pledging to strengthen filters designed to prevent the creation of illicit material. The account also acknowledged that the company was using minimal safeguards: "Sorry, I have a relatively rudimentary CP [child pornography] filter based on keywords," it wrote to one user on Discord in June.

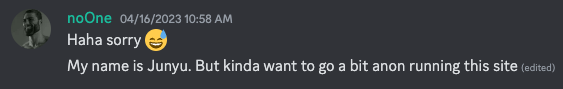

We believe Yang was @noOne. The account was the first administrator when the server was created in March, provides updates about AnyDream, and is the primary account that handles technical and payment issues.

In addition, @noOne wrote in the Discord on 16 April, “My name is Junyu. But kinda want to go a bit anon running this site.” AnyDream claimed the account is no longer controlled by Yang, though avoided answering questions about who now controls it.

The administrator account has also given out a personal Gmail address that displays with Yang’s name when entered into the e-mail provider’s search function.

“Most major tech platforms will proactively ban anything resembling CSAM, but those that do not—as well as platforms that are decentralized—present problems for service administrators, moderators and law enforcement,” reads a June report by the Stanford Internet Observatory’s Cyber Policy Centre.

AnyDream can easily create pornographic images based on prompts and uploads of faces because it runs on Stable Diffusion, a deep learning AI model developed by the London- and San Francisco–based startup Stability AI. Stable Diffusion was open-sourced last year, meaning anyone can modify its underlying code.

One of the authors of the Stanford report, technologist David Thiel, told Bellingcat that AI content generators like AnyDream which rely on Stable Diffusion are using a technology with inherent flaws that allow for the potential creation of CSAM. “I consider it wrong the way that Stable Diffusion was trained and distributed — there was not much curation in the way of what data was used to train it,” he said, in an interview. “It was happily trained on explicit material and images of children and it knows how to conflate those two concepts.”

Thiel added that a “rush to market” among AI companies meant that some technologies were distributed to the public before sufficient safeguards were developed. “Basically, all of this stuff was released to the public far too soon,” he said. “It should have been in a research setting for the majority of this time.”

A spokesperson for Stability AI said, in a statement to Bellingcat, that the company “is committed to preventing the misuse of AI. We prohibit the use of our image models and services for unlawful activity, including attempts to edit or create illicit content.”

The spokesperson added that Stability AI has introduced enhanced safety measures to Stable Diffusion including filters to remove unsafe content from training data and filters to intercept unsafe prompts or unsafe outputs.

In AnyDream's case, not all user-flagged material that allegedly depicts possibly underage subjects has been deleted. In May, one user in AnyDream's Discord server posted nonconsensual pornographic AI-generated images of a popular actress dressed as a film character she first played when she was a minor. "That's pretty dodgy IMO," wrote another user. "You've sexualised images of someone who was a child in those films."

The images remain live and publicly available on the Discord server.

Pay from Afar

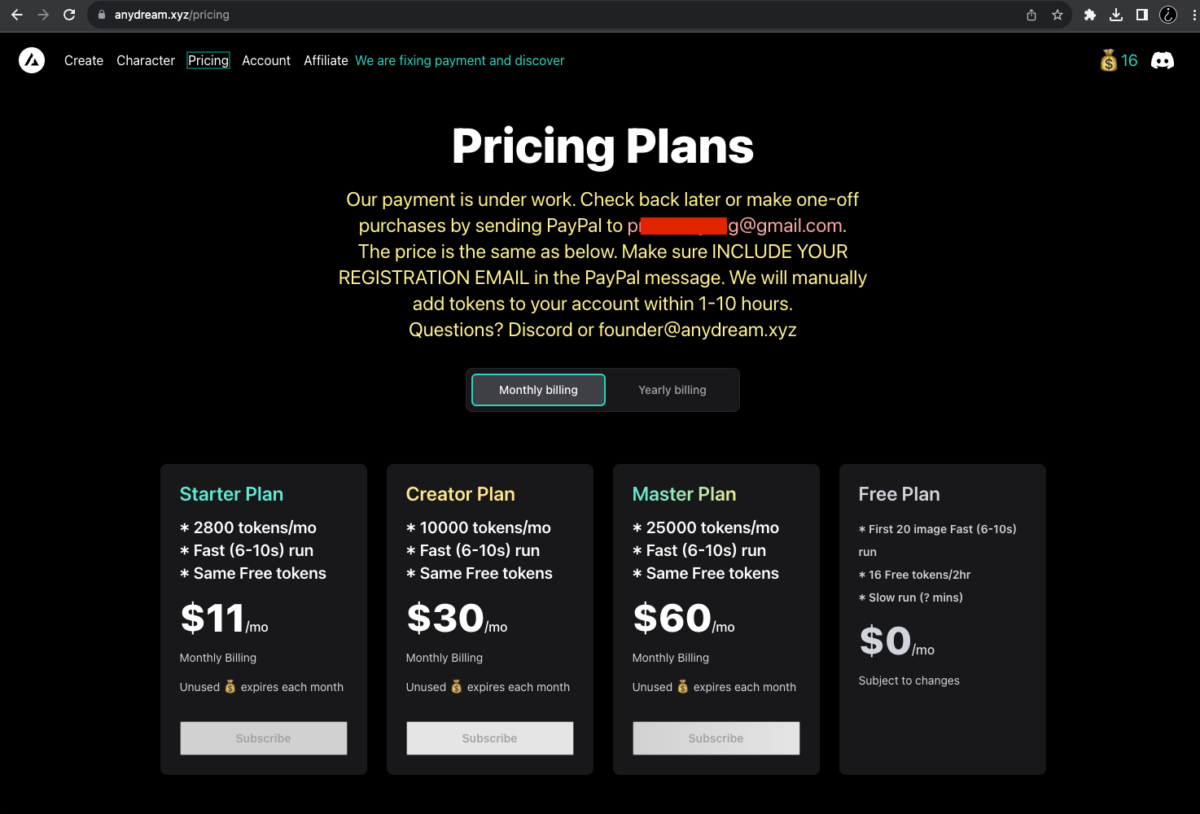

Finding a way to process payments for a website that generates nonconsensual pornographic deepfakes has proven difficult for AnyDream. To get around this, the company resorted to violating the terms of two payment providers, Stripe and PayPal.

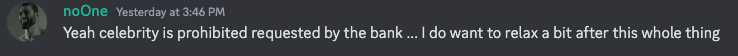

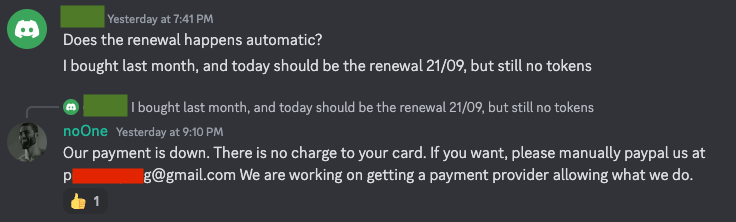

The company wrote in its Discord server on 6 September that it lost access to Stripe because the payment provider bans adult services and material. The main administrator account has frequently bemoaned the difficulty of securing and maintaining payment providers, which they said have objected to the creation of nonconsensual celebrity deepfakes and to AI-generated nudity.

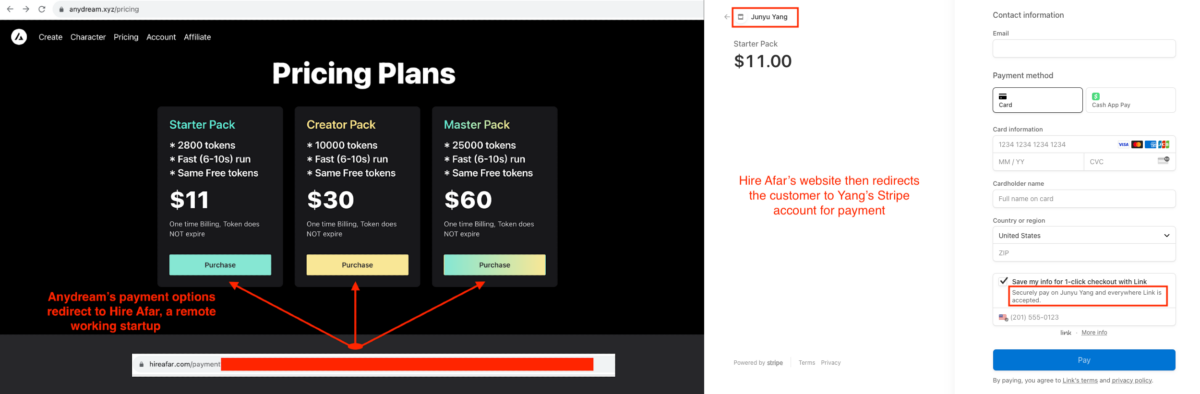

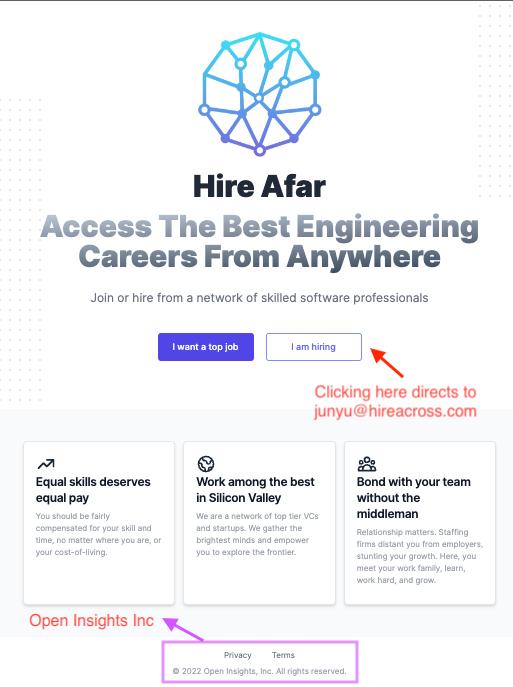

This did not stop AnyDream from using Stripe. At some point after 6 September, the company began collecting payments surreptitiously by routing them through a third-party site called Hire Afar — which describes itself as a networking platform for remote software workers — to a Stripe account in Yang’s name.

When a user clicked on payment options on the AnyDream website, they were redirected to Hire Afar, which redirected them to Stripe.

@noOne admitted in the AnyDream Discord server on 15 October that "HireAfar is my temporary solution for redirecting". Users who made purchases with AnyDream confirmed on the server that their credit cards were charged to "AnyDream www.hireafar.ca".

Further information on AnyDream's earnings from Stripe was made public in a Reddit post last month by a user whose username is Yang's first and last name and who identifies as the founder of an unrelated company, one that Yang also lists himself as founding on his LinkedIn profile. In the Reddit post, Yang asked for advice about selling his business, noting his "AI NSFW image gen site" was making $10,000 in revenue per month and $6,000 in profit. He said "all income is coming from stripe" in a comment below the post.

The Reddit account has also posted about owning an AI girlfriend service called Yuzu.fan, which local records show Yang registered as a business name in Alameda County, California. It also links out to a defunct X handle, @ethtomato; searching on X reveals this was Yang's previous handle before it was changed to @battleElements.

Stripe isn’t alone among payment providers whose terms and conditions AnyDream has skirted around. Before the company began rerouting payments via the Hire Afar site, it posted a personal Gmail address for Yang on its payments page and solicited payments via PayPal there.

AnyDream’s administrator account in its Discord server also directed customers to send funds via PayPal to the same Gmail account. Users have been directed to send payments to this address since at least June 16 and as recently as November 2.

When entered into PayPal’s website, the email auto-populated Yang’s name and photo, indicating it is his personal account. This is potentially a violation of PayPal’s terms of service that ban the sale of “sexually oriented digital goods or content delivered through a digital medium.”

AnyDream is also likely to have violated the policies of a third external vendor, in this case its domain registrar. According to ICANN records, AnyDream's domain registrar is Squarespace, which bans the publishing of sexually explicit or obscene material under its acceptable use policy.

AnyDream did not directly address questions about why it continued routing Stripe payments through a third party, but said it has stopped using PayPal and may seek alternative website services. AnyDream has begun accepting payment via cryptocurrency with the promise of offering credit card purchasing in the future.

Serial Founder

A LinkedIn account that includes his name and image says Yang is a former data scientist at LinkedIn and Nike.

Last month, he identified himself as AnyDream’s founder on X in an appeal to a Bay Area venture capitalist who put out a call for entrepreneurs “working on AI for adult content.”

He also flagged that he is developing a "chatbot for virtual influencers," linking out to a site at the address Yuzu.fan. A search of online records in Alameda County, California, confirms that Yang has registered AnyDream and Yuzu as fictitious business names, a legal term for a name, other than their own, under which a person, company, or organisation conducts business.

AnyDream, asserting again that Yang is not involved in the company, said in an e-mail that it plans to deregister the fictitious business name.

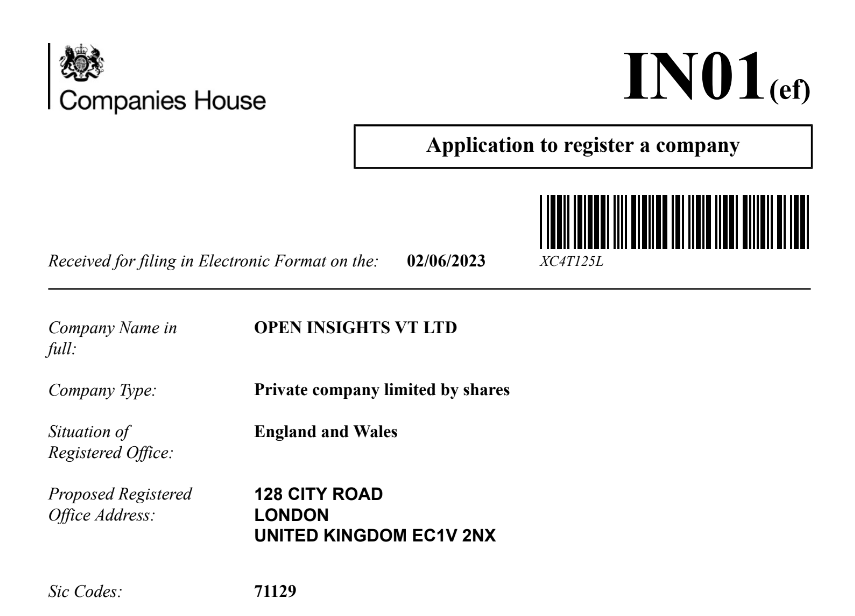

Records also suggest Yang has grouped these and other entities under an umbrella company called Open Insights, Inc. The websites for AnyDream, Yuzu and Hire Afar (the website used to redirect Stripe payments for AnyDream) all contain a copyright claim to Open Insights at the bottom of their homepages. So does a fourth website, Hireacross.com, whose domain appears in a contact e-mail address listed on Hire Afar; that address uses Yang's first name.

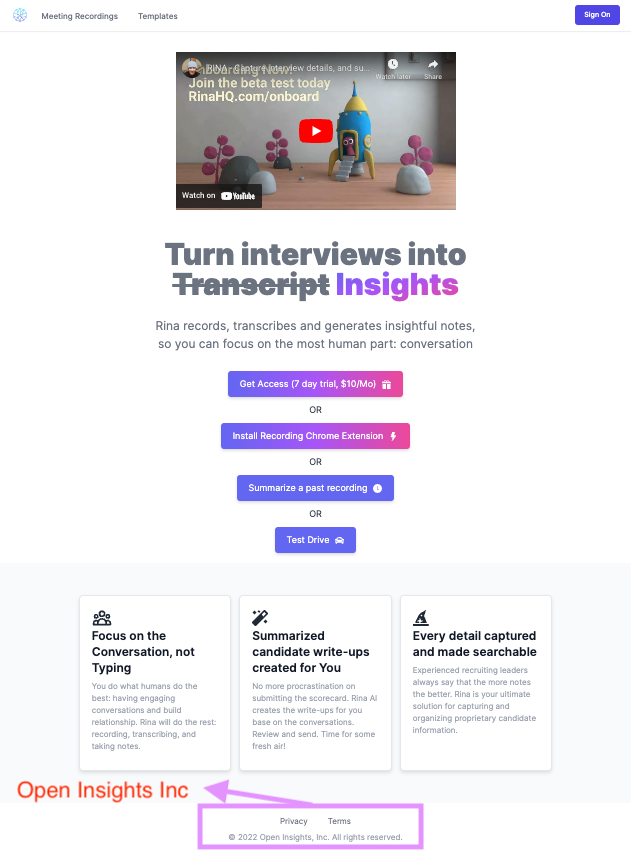

A fifth site that lists Open Insights can be found on Yang's LinkedIn: he lists himself as the co-founder of RinaHQ, an AI transcription startup whose website carries the same copyright notice. The YouTube video on RinaHQ's webpage also links to Yang's YouTube page. AnyDream, which again declined to specify its purported new owners, claimed the new ownership had bought this group of entities. It would only refer to the person or people behind them as "the management team at Open Insight, inc."

Additionally, two of the sites, AnyDream and Hireacross, use the same background image and layout. The similarities may be because the sites were built on the same platform; most are registered through Squarespace, according to ICANN records.

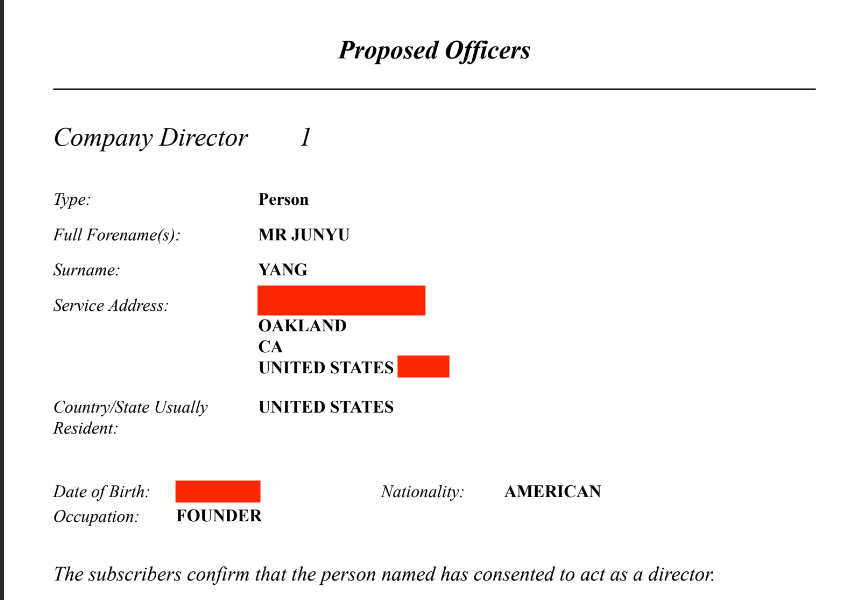

Open Insights, meanwhile, is incorporated in the United Kingdom, according to Companies House, the United Kingdom's public registry of business entities. It was incorporated in the UK in June 2023 as Open Insights VT Ltd using a California address with the same zip code as one listed on AnyDream's website. It lists Yang as its sole director. In an e-mail, AnyDream denied any knowledge of this UK entity.

The UK is a popular jurisdiction for offshore companies to register in because such companies are taxed only on profits made in the UK. Open Insights registered at a London address affiliated with a company formation service provider. These UK firms provide services, including physical addresses to register at, that allow foreign businesses to be domiciled in Britain.

The sharing of deepfake pornographic images generated without consent is set to become a criminal offence in England and Wales under the Online Safety Act (introduced as the Online Safety Bill), which received Royal Assent on 26 October 2023. (The legislation does not impose regulations on companies like AnyDream that allow for the generation of these images.)

A Co-founder Emerges

While AnyDream declined to offer information on its purported new ownership, Bellingcat was able to identify another Bay Area entrepreneur who lists himself as a co-founder of the company.

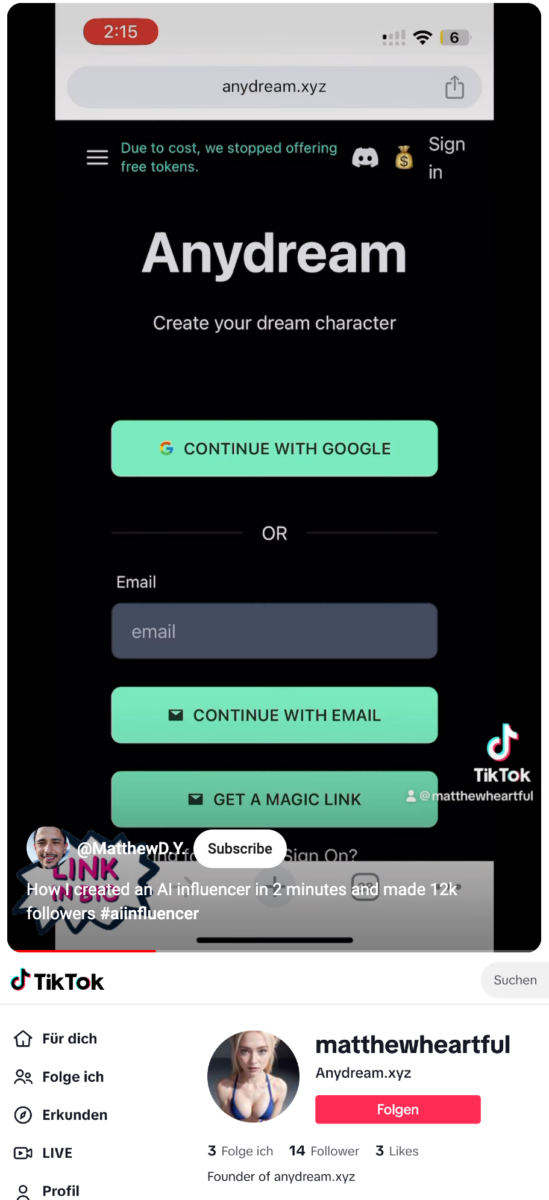

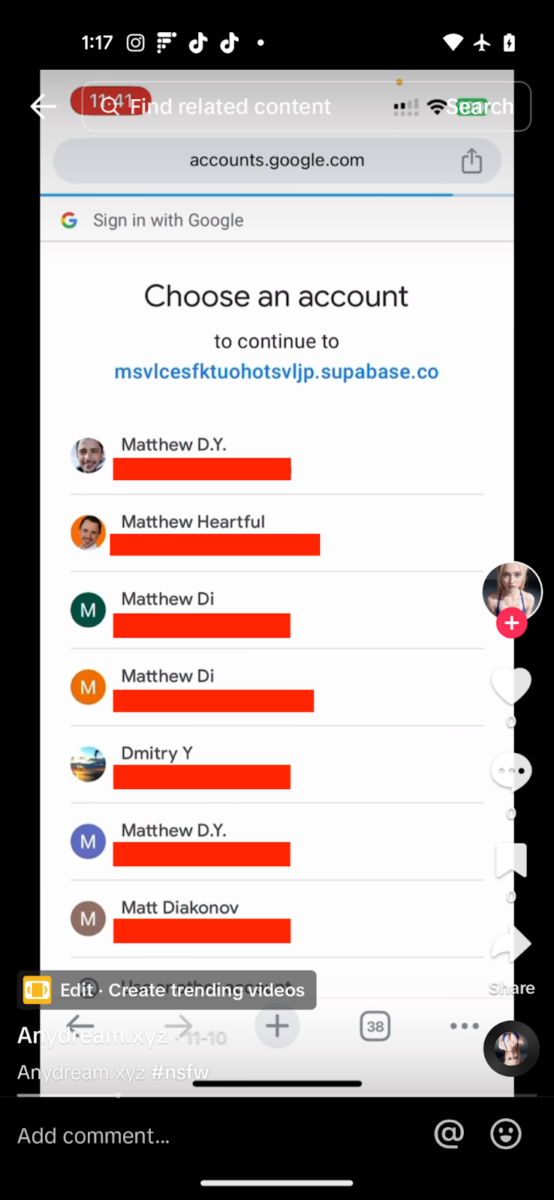

Dmitriy Dyakonov, who also uses the aliases Matthew Di, Matt D.Y., Dmitry Y, Matt Diakonov, and Matthew Heartful, stated that he is an AnyDream co-founder on his TikTok profile, which uses his Matthew Heartful alias. The TikTok profile was deleted after Dyakonov was contacted for comment.

A video posted to this TikTok profile on October 30 that includes directions for how to use AnyDream was crossposted to a YouTube account, which uses Dyakonov’s Matthew D.Y. alias, on the same day.

The TikTok video also identified Dyakonov’s various aliases, displaying them during the login process of its AnyDream demo.

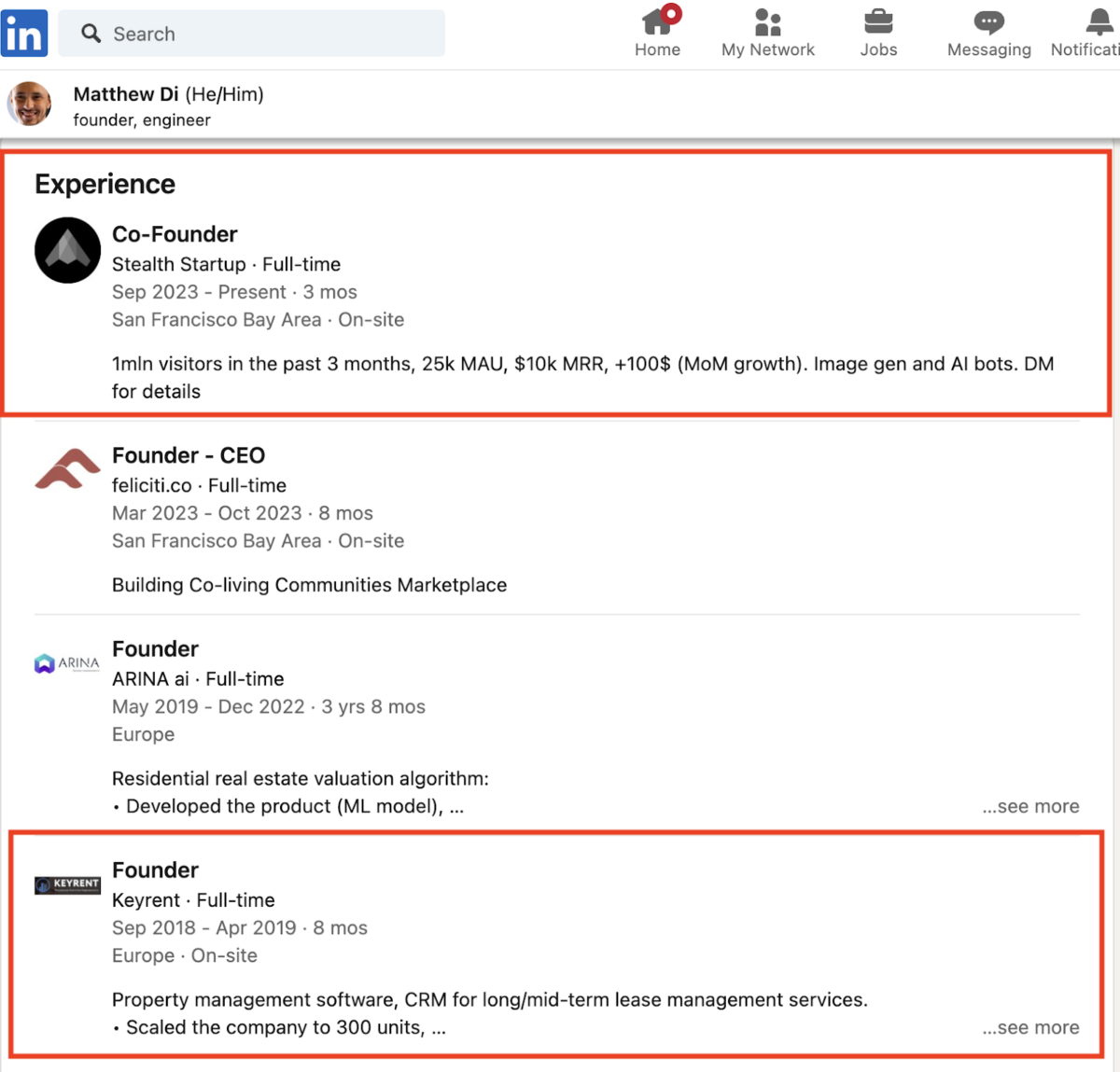

Bellingcat was able to further link Dyakonov to AnyDream via a LinkedIn account under his Matthew Di alias. His profile lists the role of Founder at a company called “Keyrent” among his past experience. The account also states he is currently the co-founder of a “stealth startup” working in image generation — “stealth startup” is a tech industry term for companies that avoid public attention. He also states this startup earns $10,000 in monthly revenue, the same amount Yang said AnyDream was earning in his Reddit post.

With the information on this LinkedIn account, Bellingcat was able to find videos Dyakonov posted on another YouTube account, this one under his Dmitry Y alias. In one of those videos, he introduces himself as the co-founder of Keyrent under the name Dmitriy Dyakonov. (This video was deleted after Bellingcat contacted Dyakonov for comment.)

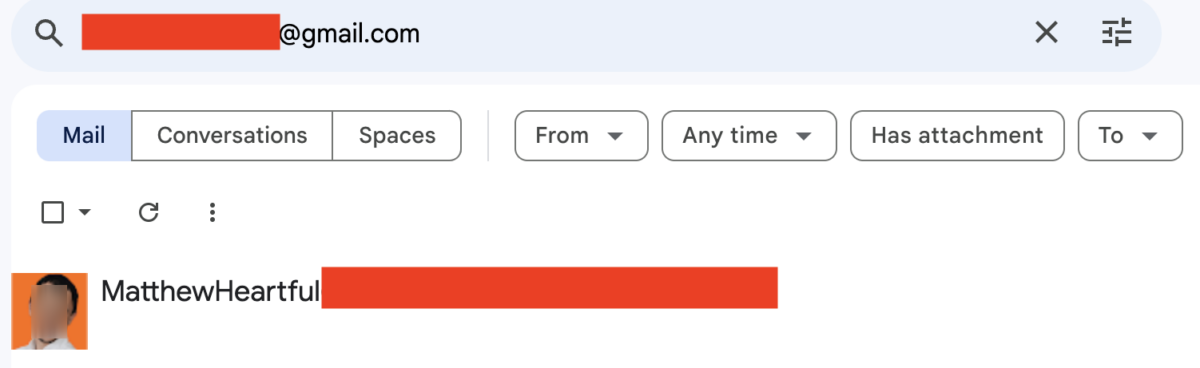

Additionally, entering Dyakonov’s TikTok username with “@gmail.com” into Gmail reveals an account with an image of his face as the main display image. This account uses his “Matthew Heartful” alias.

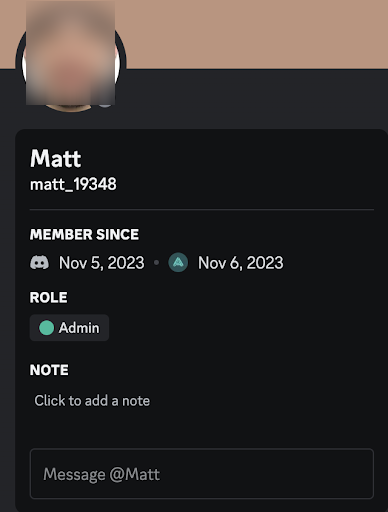

Dyakonov’s face also appears on the account of an administrator in the AnyDream Discord server which goes by the name “Matt.”

The Matt account joined Discord on November 5. Dyakonov's LinkedIn account under the Matthew Di alias says he joined the stealth startup he currently works at in September. He did not reply to a request for comment and AnyDream did not reply to questions about his involvement.

AnyDream's long-term plans go beyond deepfake images. AnyDream shared on 21 October in the company's Discord server, "We are developing faceswap for videos. Just waiting for the bank to clear us. Don't want to add more 'risky' features before that." As AnyDream looks to expand its services, legal regimes around the world are rushing to keep up with the proliferation of AI-generated nonconsensual deepfake content.

“Women have no way of preventing a malign actor from creating deepfake pornography,” warned a report by the US Department of Homeland Security last year. “The use of the technology to harass or harm private individuals who do not command public attention and cannot command resources necessary to refute falsehoods should be concerning. The ramifications of deepfake pornography have only begun to be seen.”

Michael Colborne and Sean Craig contributed research.

Bellingcat is a non-profit and the ability to carry out our work is dependent on the kind support of individual donors. If you would like to support our work, you can do so here. You can also subscribe to our Patreon channel here. Subscribe to our Newsletter and follow us on Instagram here, X here and Mastodon here.
