2023-4-20 18:0:4 Author: unit42.paloaltonetworks.com


Executive Summary

Unit 42 researchers are monitoring the trending topics, newly registered domains and squatting domains related to ChatGPT, as it is one of the fastest-growing consumer applications in history. The dark side of this popularity is that ChatGPT is also attracting the attention of scammers seeking to benefit from using wording and domain names that appear related to the site.

From November 2022 through early April 2023, we noticed a 910% increase in monthly registrations for domains related to ChatGPT. In the same time frame, we observed a 17,818% growth in related squatting domains in DNS Security logs. We also saw up to 118 daily detections of ChatGPT-related malicious URLs in the traffic seen by our Advanced URL Filtering system.

We now present several case studies to illustrate the various methods scammers use to entice users into downloading malware or sharing sensitive information. As OpenAI released its official API for ChatGPT on March 1, 2023, we’ve seen an increasing number of suspicious products using it. Thus, we highlight the potential dangers of using copycat chatbots, in order to encourage ChatGPT users to approach such chatbots with a defensive mindset.

Palo Alto Networks Next-Generation Firewall and Prisma Access customers with Advanced URL Filtering, DNS Security and WildFire subscriptions receive protections against ChatGPT-related scams. All mentioned malicious indicators (domains, IPs, URLs and hashes) are covered by these security services.

| Related Unit 42 Topics | Phishing, Cybersquatting |

Trends in ChatGPT-Themed Suspicious Activities

Case Studies of ChatGPT Scams

The Risks of Copycat Chatbots

Conclusion

Acknowledgments

Indicators of Compromise

Trends in ChatGPT-Themed Suspicious Activities

While OpenAI was beginning its rapid rise to become one of the most famous brands in the field of artificial intelligence, we observed several instances of threat actors registering and using squatting domains in the wild that use “openai” and “chatgpt” as their domain name (e.g., openai[.]us, openai[.]xyz and chatgpt[.]jobs). Most of these domains are not hosting anything malicious as of early April 2023, but it is concerning that they are not controlled by OpenAI, or other authentic domain management companies. They could be abused to cause damage at any time.
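As a rough illustration of how such lookalike registrations can be flagged, the sketch below checks whether a domain contains a brand keyword without belonging to an allowlisted parent domain. It is not the detection logic Unit 42 uses. The allowlist, the keyword tuple and the function names are assumptions made for this example, and a plain substring match will produce false positives on real data.

```python
# Hypothetical allowlist of domains assumed to be operated by the brand owner.
OFFICIAL_DOMAINS = {"openai.com"}
# Brand keywords taken from the examples in the paragraph above.
BRAND_KEYWORDS = ("openai", "chatgpt")


def refang(domain: str) -> str:
    """Turn a defanged indicator such as openai[.]us back into openai.us."""
    return domain.replace("[.]", ".").strip().lower()


def is_official(domain: str) -> bool:
    """True if the domain is an allowlisted domain or one of its subdomains."""
    return any(domain == d or domain.endswith("." + d) for d in OFFICIAL_DOMAINS)


def is_possible_squat(domain: str) -> bool:
    """Flag a domain that contains a brand keyword but is not allowlisted."""
    name = refang(domain)
    if is_official(name):
        return False
    return any(keyword in name for keyword in BRAND_KEYWORDS)


candidates = [
    "openai[.]us",
    "openai[.]xyz",
    "chatgpt[.]jobs",
    "chat.openai.com",  # subdomain of the allowlisted domain
    "example.org",      # no brand keyword
]
flagged = [d for d in candidates if is_possible_squat(d)]
print(flagged)
```

A flagged domain is only a candidate for review. As noted above, most of these domains were not hosting anything malicious at the time of writing.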

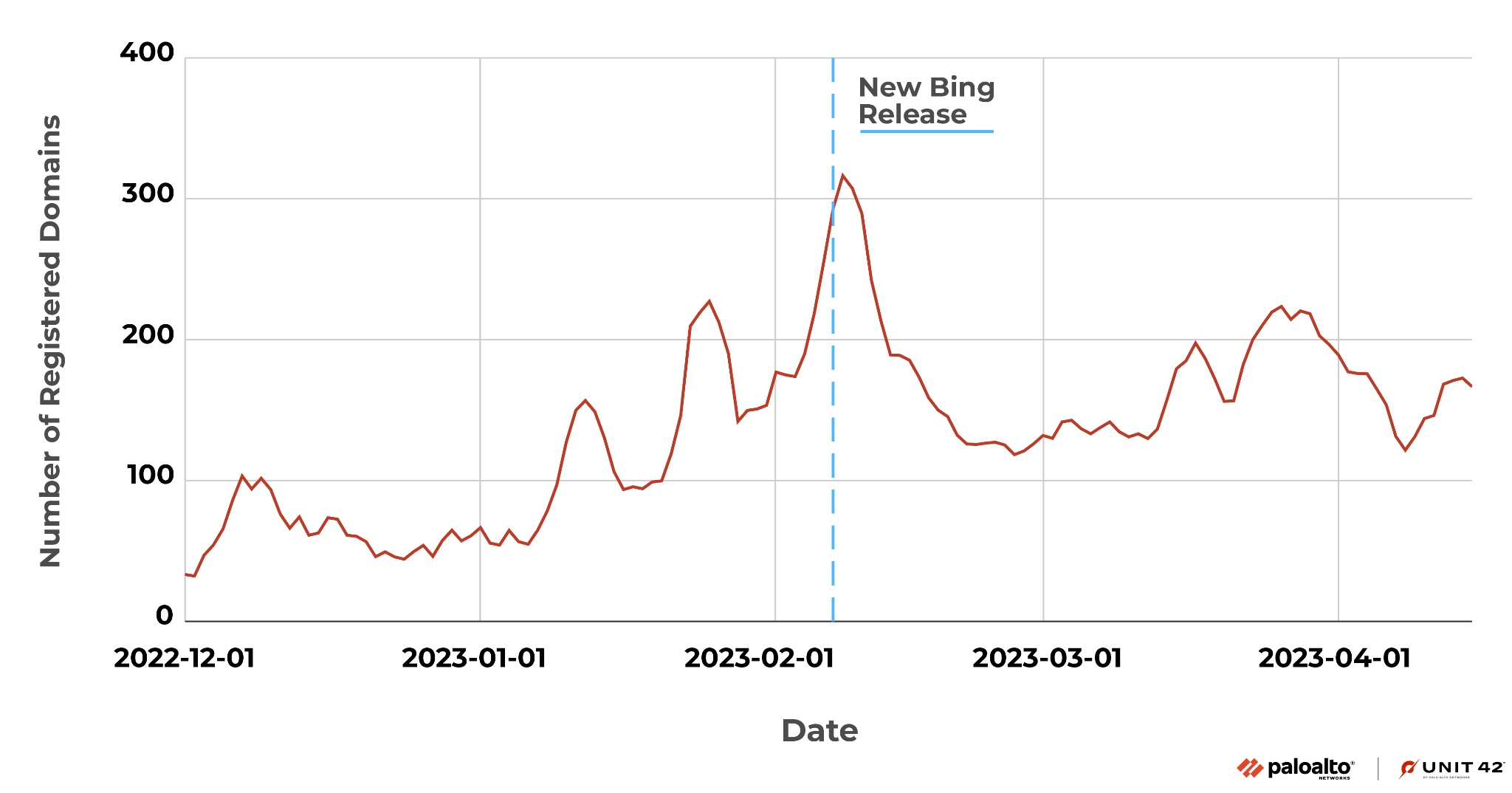

Figure 1 shows the trend of squatting domain registration related to ChatGPT, after its release. We noticed a significant increase in the volume of daily domain registrations during our research period. Shortly after Microsoft announced their new Bing version on Feb. 7, 2023, more than 300 domains related to ChatGPT were registered.

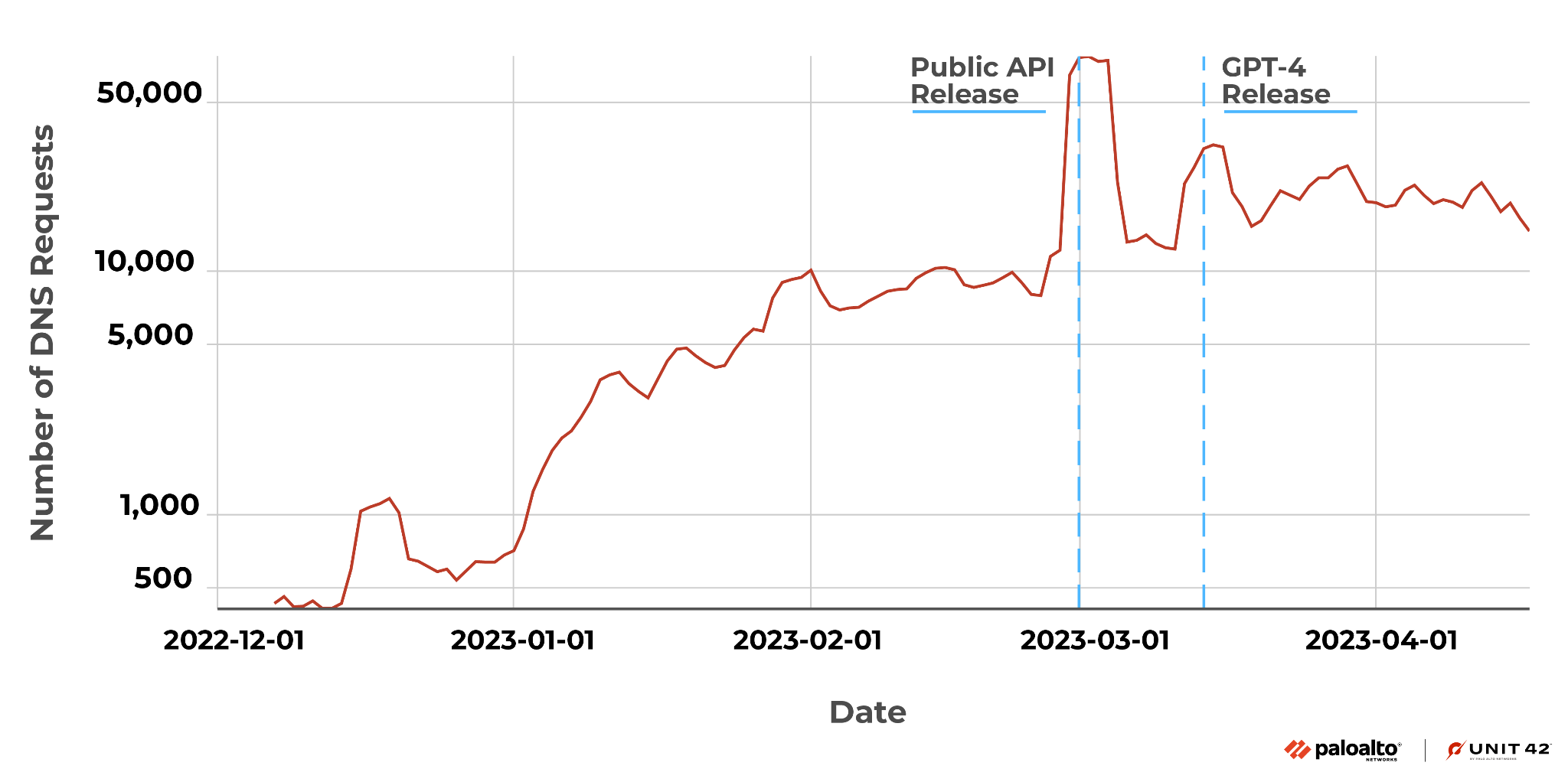

Figure 2 shows a similar pattern where the recognition and popularity of ChatGPT have resulted in a significant rise in logs from the DNS Security product.

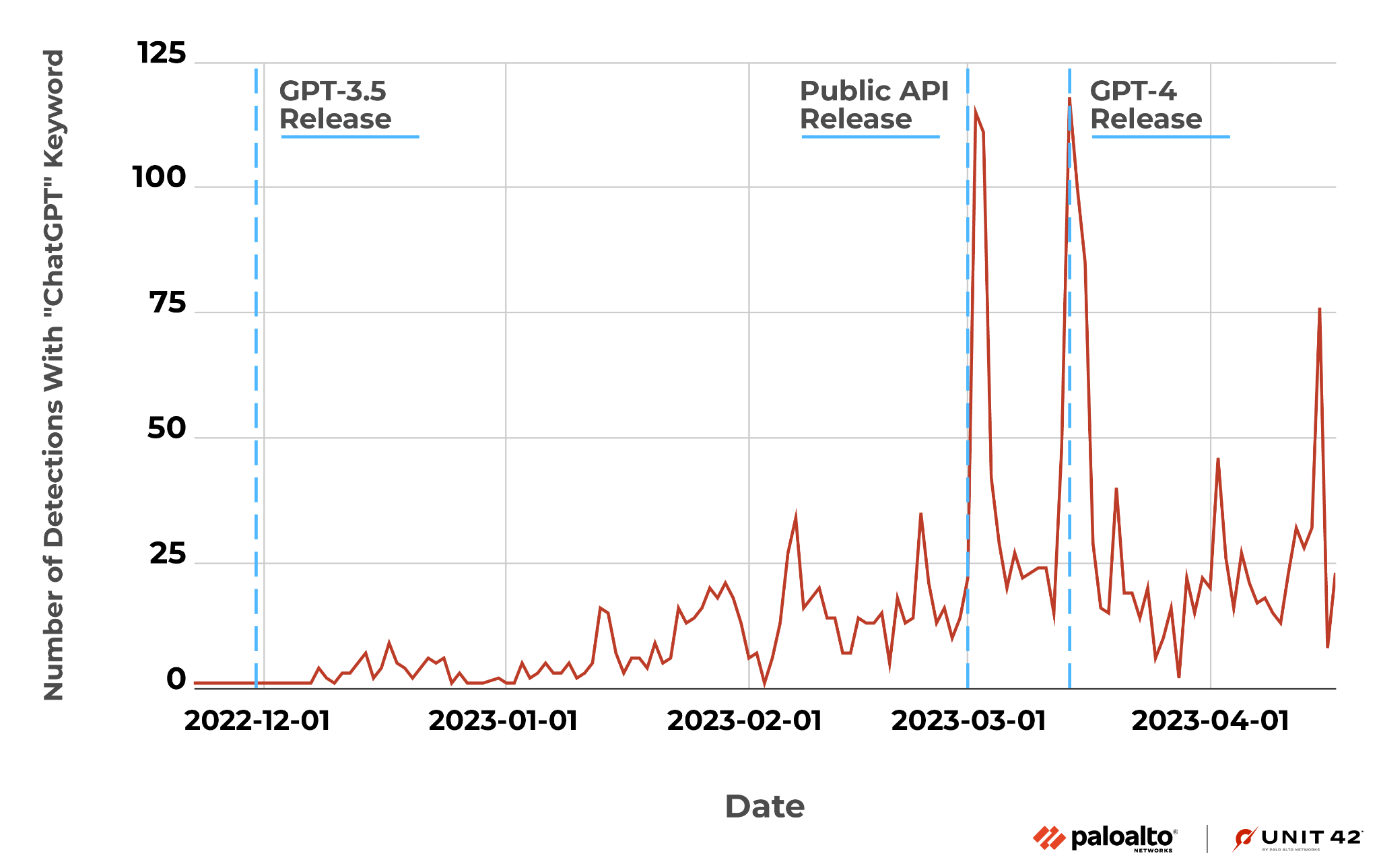

We also ran a keyword search over the traffic seen by the Advanced URL Filtering system. Figure 3 shows two large spikes, on the days when OpenAI released the official ChatGPT API and GPT-4.

Case Studies of ChatGPT Scams

While conducting our research, we observed multiple phishing URLs attempting to impersonate official OpenAI sites. Typically, scammers create a fake website that closely mimics the appearance of the ChatGPT official website, then trick users into downloading malware or sharing sensitive information.

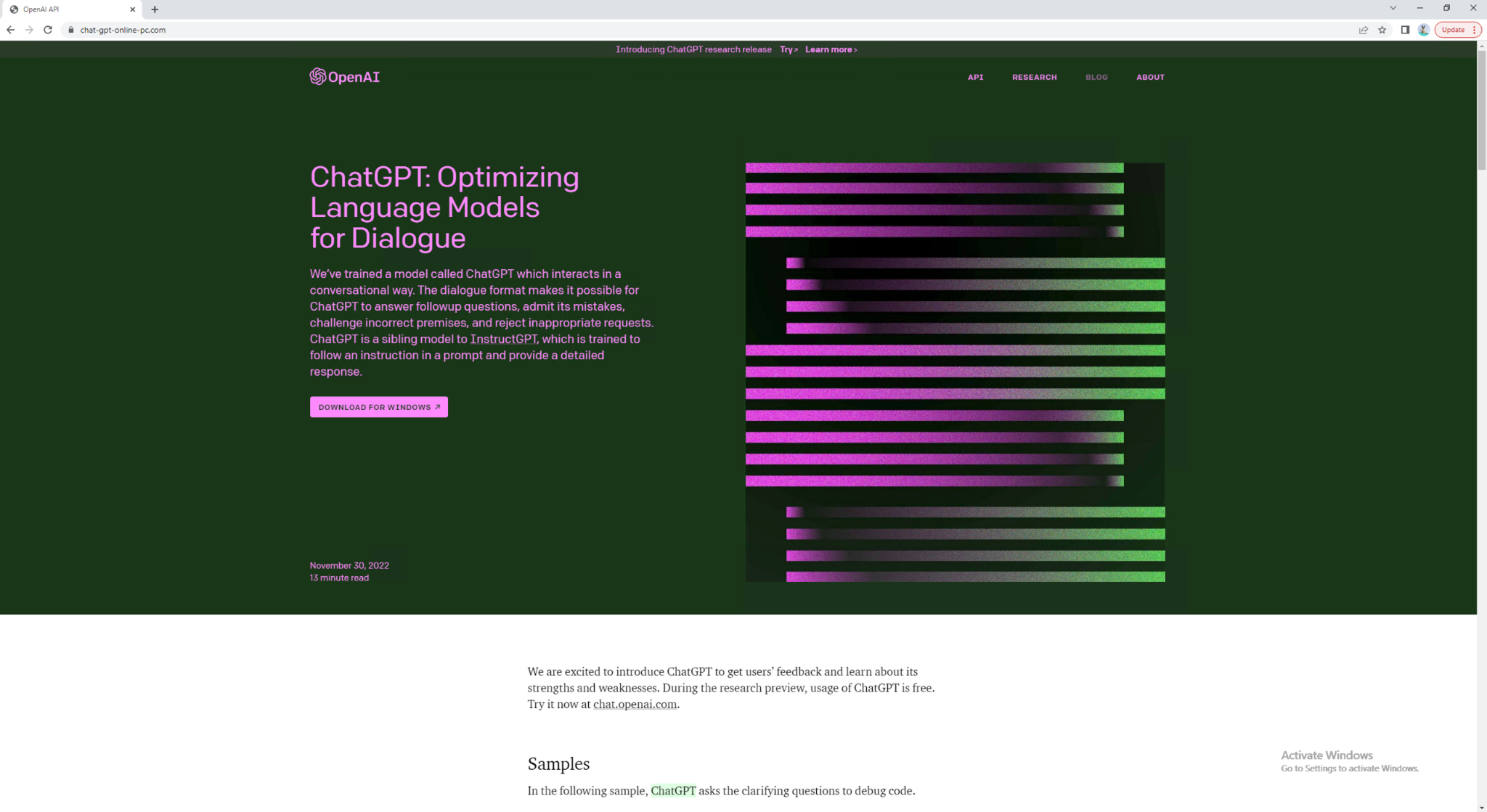

For example, Figure 4 shows a common technique that scammers use to deliver malware. It presents users with a “DOWNLOAD FOR WINDOWS” button that, once clicked, downloads the Trojan malware (SHA256: ab68a3d42cb0f6f93f14e2551cac7fb1451a49bc876d3c1204ad53357ebf745f) to their devices without the victims realizing the risk.
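One way to act on a published file hash is to compute the SHA256 digest of a downloaded file and compare it with known indicators before opening it. The sketch below assumes the two SHA256 values listed in this article's Indicators of Compromise section. The function names and the temporary sample file are hypothetical, and a hash match only catches byte-identical files, so a miss does not mean a file is safe.

```python
import hashlib
import tempfile
from pathlib import Path

# SHA256 values published in this article's Indicators of Compromise section.
KNOWN_BAD_SHA256 = {
    "ab68a3d42cb0f6f93f14e2551cac7fb1451a49bc876d3c1204ad53357ebf745f",
    "94a064bf46e26aafe2accb2bf490916a27eba5ba49e253d1afd1257188b05600",
}


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large downloads do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_known_bad(path):
    """True if the file's digest matches one of the published indicators."""
    return sha256_of_file(path) in KNOWN_BAD_SHA256


# Usage example with a harmless temporary file standing in for a download.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.bin"
    sample.write_bytes(b"harmless test content")
    sample_digest = sha256_of_file(sample)
    sample_is_bad = is_known_bad(sample)

print(sample_digest, sample_is_bad)
```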

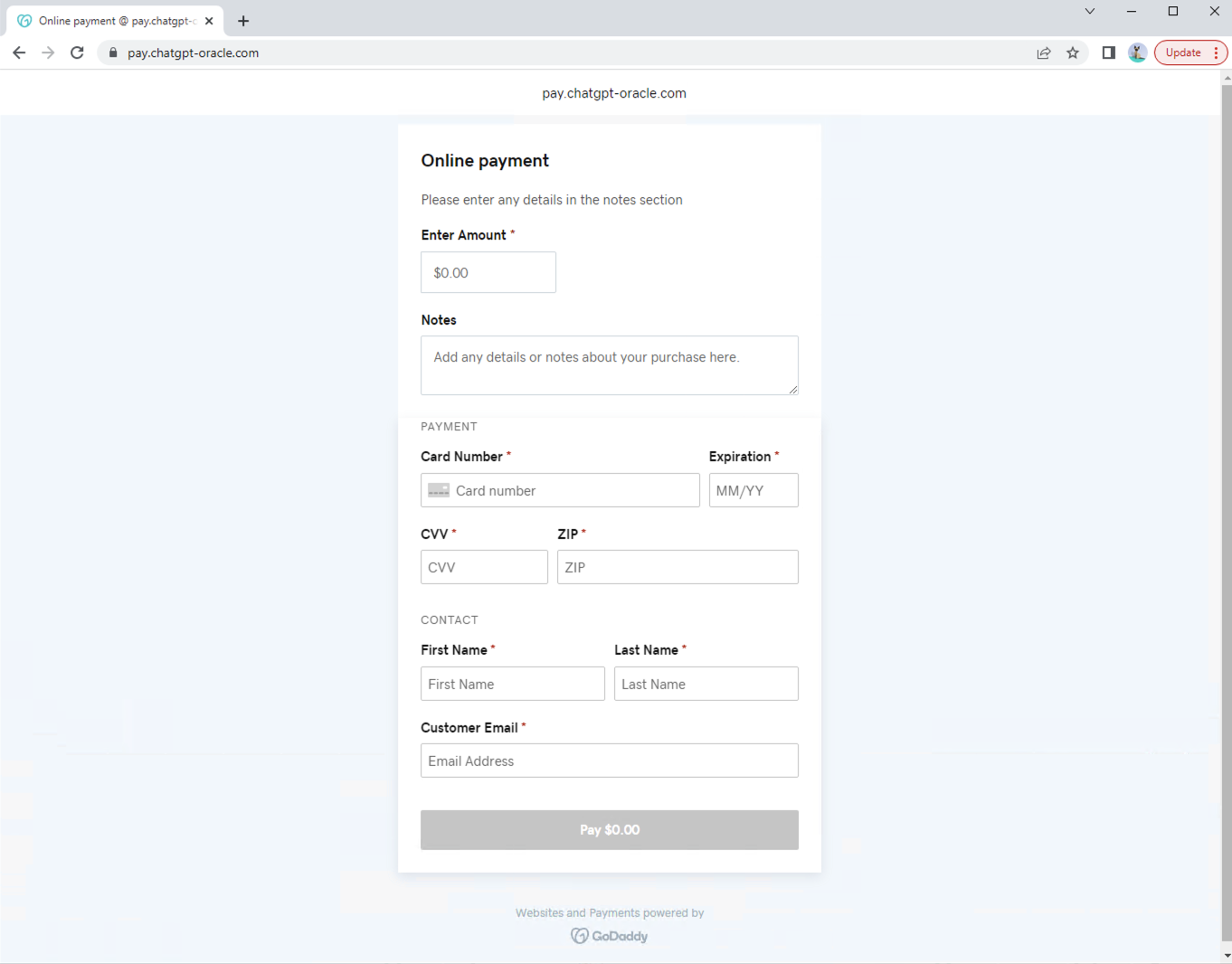

Additionally, scammers might use ChatGPT-related social engineering for identity theft or financial fraud. Although OpenAI offers a free version of ChatGPT, scammers lead victims to fraudulent websites and claim that they must pay for the service. For instance, as shown in Figure 5, the fake ChatGPT site tries to lure victims into providing confidential information, such as credit card details and an email address.

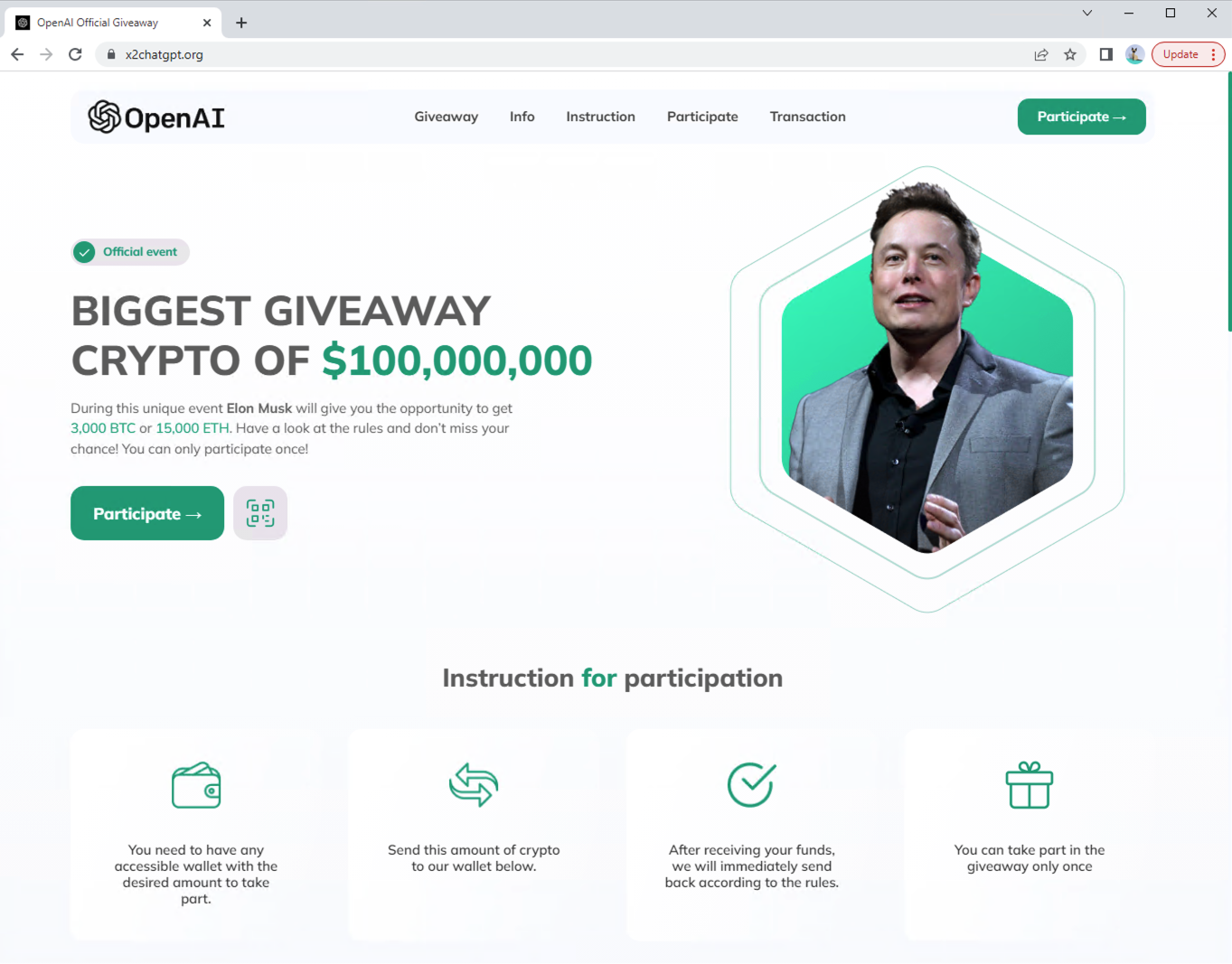

We also noticed that some scammers are exploiting the growing popularity of OpenAI for crypto fraud. Figure 6 shows an example of a scammer abusing the OpenAI logo and Elon Musk’s name to attract victims to a fraudulent crypto giveaway event.

The Risks of Copycat Chatbots

While ChatGPT has become one of the most popular applications this year, an increasing number of copycat AI chatbot applications have also appeared on the market. Some of these applications offer their own large language models, and others claim that they offer ChatGPT services through the public API that was announced on March 1. However, the use of copycat chatbots could increase security risks.

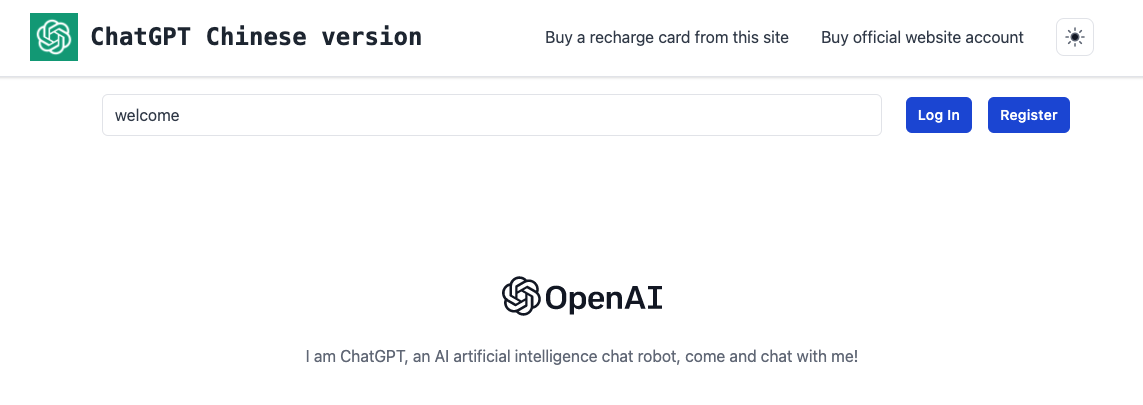

Before the release of the ChatGPT API, there were several open-source projects that allowed users to connect to ChatGPT via various automation tools. Because ChatGPT is not accessible in certain countries or regions, websites created with these automation tools or the API could attract a considerable number of users from these areas. This also gives threat actors the opportunity to monetize ChatGPT by proxying its service. For example, Figures 7a and 7b show a Chinese website providing a paid chatbot service.

Whether or not they’re offered free of charge, these copycat chatbots are not trustworthy. Many of them are actually based on GPT-3 (released June 2020), which is less powerful than the recent GPT-4 and GPT-3.5.

Moreover, there is another significant risk of using these chatbots. They might collect and steal the input you provide. In other words, providing anything sensitive or confidential could put you in danger. The chatbot’s responses could also be manipulated to give you incorrect answers or misleading information.

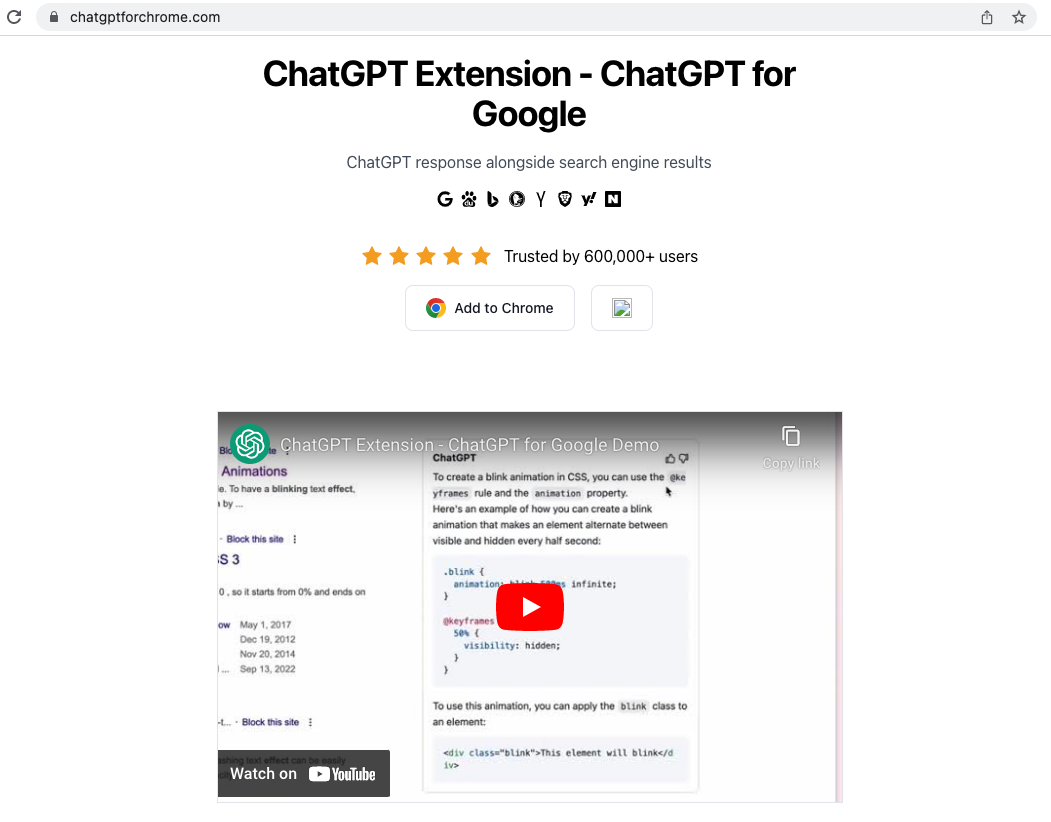

For example, as shown in Figure 8, the squatting domain chatgptforchrome[.]com hosts an introduction page for the ChatGPT Chrome Extension. It uses the information and video from the official OpenAI extension.

The “Add to Chrome” link leads to a different extension URL chrome[.]google[.]com/webstore/detail/ai-chatgpt/boofekcjiojcpcehaldjhjfhcienopme, while the authentic URL should be chrome[.]google[.]com/webstore/detail/chatgpt-chrome-extension/cdjifpfganmhoojfclednjdnnpooaojb.
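The comparison above can be reduced to checking the extension ID, which is the final path segment of a Chrome Web Store detail URL. The following is a minimal sketch of that check, using the two URLs quoted in the paragraph above. The function names are hypothetical, and the parsing assumes the `chrome.google.com/webstore/detail/...` layout shown in this article.

```python
from urllib.parse import urlparse

# Extension ID that the article gives for the authentic listing.
AUTHENTIC_EXTENSION_ID = "cdjifpfganmhoojfclednjdnnpooaojb"


def refang(url: str) -> str:
    """Turn a defanged URL (chrome[.]google[.]com/...) into a normal one."""
    return url.strip().replace("[.]", ".")


def extension_id(url: str):
    """Return the last path segment of a Web Store detail URL, or None."""
    cleaned = refang(url)
    if "://" not in cleaned:
        cleaned = "https://" + cleaned  # urlparse needs a scheme to find the host
    parsed = urlparse(cleaned)
    parts = [segment for segment in parsed.path.split("/") if segment]
    if parsed.hostname != "chrome.google.com":
        return None
    if len(parts) < 3 or parts[:2] != ["webstore", "detail"]:
        return None
    return parts[-1]


def matches_authentic(url: str) -> bool:
    """True only if the link points at the authentic extension ID."""
    return extension_id(url) == AUTHENTIC_EXTENSION_ID


suspicious = "chrome[.]google[.]com/webstore/detail/ai-chatgpt/boofekcjiojcpcehaldjhjfhcienopme"
authentic = "chrome[.]google[.]com/webstore/detail/chatgpt-chrome-extension/cdjifpfganmhoojfclednjdnnpooaojb"

print(extension_id(suspicious), matches_authentic(suspicious))
print(extension_id(authentic), matches_authentic(authentic))
```

Comparing IDs rather than names matters here because the listing name and the page content can be copied, as this case shows.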

We downloaded the “AI ChatGPT” extension shown in Figure 8 (SHA256: 94a064bf46e26aafe2accb2bf490916a27eba5ba49e253d1afd1257188b05600) and found that it adds a background script to the victim’s browser, which contains highly obfuscated JavaScript. Our analysis of this JavaScript shows that it calls the Facebook Graph API to steal the victim’s account details, and it might gain further access to their Facebook account. Other researchers have also reported similar campaigns involving malicious browser extensions.

Conclusion

The growing popularity of ChatGPT worldwide has made it a target for scammers. We have noticed a significant increase in the number of newly registered domains and squatting domains related to ChatGPT, which could potentially be exploited by scammers for malicious purposes.

To stay safe, ChatGPT users should exercise caution with suspicious emails or links related to ChatGPT. Copycat chatbots also bring additional security risks, so users should always access ChatGPT through the official OpenAI website.

Palo Alto Networks Next-Generation Firewall and Prisma Access customers with Advanced URL Filtering, DNS Security and WildFire subscriptions are protected against ChatGPT-related scams. All the mentioned malicious indicators (domains, IPs, URLs and hashes) are covered by these security services.

Acknowledgments

The authors would like to thank Nabeel Mohamed, Shehroze Farooqi and Shresta Bellary Seetharam for providing data sources and examples used in this blog. We would also like to thank Jun Javier Wang, Alex Starov, Harsha Srinath, Laura Novak, Daniel Prizmant and Erica Naone for their advice and help with improving the blog.

Indicators of Compromise

Squatting Domains

- openai[.]us

- openai[.]xyz

- chatgpt[.]jobs

ChatGPT Scams

- chat-gpt-online-pc[.]com

- ab68a3d42cb0f6f93f14e2551cac7fb1451a49bc876d3c1204ad53357ebf745f

- pay[.]chatgpt-oracle[.]com

- x2chatgpt[.]org

ChatBot

- chatgpt[.]appleshop[.]top

Chrome Extensions

- chatgptforchrome[.]com

- chrome[.]google[.]com/webstore/detail/ai-chatgpt/boofekcjiojcpcehaldjhjfhcienopme

- 94a064bf46e26aafe2accb2bf490916a27eba5ba49e253d1afd1257188b05600
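The indicators above are defanged with `[.]` so they cannot be clicked by accident. To load them into a blocklist or a search tool, they need to be restored and sorted by type. The sketch below shows one possible way to do that. The classification rules and function names are assumptions made for this example, not part of any Palo Alto Networks product.

```python
import re

# A SHA256 digest is exactly 64 hexadecimal characters.
SHA256_RE = re.compile(r"[0-9a-f]{64}")

# Indicators copied from the lists above, still in defanged form.
INDICATORS = [
    "openai[.]us",
    "openai[.]xyz",
    "chatgpt[.]jobs",
    "chat-gpt-online-pc[.]com",
    "ab68a3d42cb0f6f93f14e2551cac7fb1451a49bc876d3c1204ad53357ebf745f",
    "pay[.]chatgpt-oracle[.]com",
    "x2chatgpt[.]org",
    "chatgpt[.]appleshop[.]top",
    "chatgptforchrome[.]com",
    "chrome[.]google[.]com/webstore/detail/ai-chatgpt/boofekcjiojcpcehaldjhjfhcienopme",
    "94a064bf46e26aafe2accb2bf490916a27eba5ba49e253d1afd1257188b05600",
]


def refang(indicator: str) -> str:
    """Restore a defanged indicator, for example openai[.]us to openai.us."""
    return indicator.strip().replace("[.]", ".")


def classify(indicator: str) -> str:
    """Label an indicator as 'sha256', 'url' or 'domain' (simplified rules)."""
    value = refang(indicator).lower()
    if SHA256_RE.fullmatch(value):
        return "sha256"
    if "/" in value:
        return "url"
    return "domain"


grouped = {"sha256": [], "url": [], "domain": []}
for item in INDICATORS:
    grouped[classify(item)].append(refang(item))

for kind, values in grouped.items():
    print(kind, len(values))
```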
