2026-1-7 12:39:4 Author: securityboulevard.com

TL;DR

- Kovrr’s AI Governance Suite gives organizations the structure needed to move beyond fragmented oversight and manage GenAI with discipline and accountability.

- AI Asset Visibility reveals where GenAI operates across the enterprise, creating a unified inventory that supports ownership, risk evaluation, and audit-ready reporting.

- The AI Risk Register organizes scenarios consistently, links them to affected systems, and helps teams prioritize actions using likelihood, impact, and mapped safeguards.

- AI Compliance Readiness and AI Risk Quantification evaluate safeguard maturity, align programs with emerging standards, and translate exposure into financial and operational terms.

- The AI Assurance Plan and Third-Party Monitoring capability turn assessments into measurable roadmaps and extend governance across vendors.

The Market Challenges Driving a New Approach to AI Governance

AI systems are being woven into the fabric of business operations at a pace that outstrips the structures needed to safely scale them. McKinsey’s latest State of AI report shows that nearly two-thirds of organizations are still stuck in experimentation or pilot mode, unable to systematically expand AI usage across the business. Although leaders cite early benefits in efficiency, revenue gains, and innovation, only 39% report enterprise-level impact.

Without the fundamental building blocks of a robust governance program, AI initiatives will remain stuck in these early, precarious stages of adoption. The problem worsens when new AI tools surface in teams without prior approval. Data flows expand in ways traditional oversight methods don’t track, and business decisions are increasingly influenced by systems that risk managers have little insight into. No one has a dependable view of where risks are forming or how quickly they’re compounding.

Regulatory expectations add another dimension of pressure. With legislation emerging across the global market, from the EU AI Act to guidance in the US, Canada, Singapore, and beyond, organizations are expected to demonstrate where AI sits and how it’s being managed. That demand is difficult, if not impossible, to meet when visibility is limited and risk management practices differ across business units, creating a pattern of gaps that leaders can’t justify under scrutiny.

This combination of rapid experimentation, opaque deployment, and rising regulatory pressure has created an inflection point: robust AI governance is no longer optional. Stakeholders need a reliable way to understand where GenAI operates, measure exposure, and build a strategic roadmap that stands up to both internal expectations and external review. With Kovrr’s AI Risk Governance suite, that capability is now within reach.

AI Asset Visibility: Illuminating the Enterprise’s AI Footprint

Most enterprises have far more AI activity underway than any formal inventory reflects. Tools are introduced by individual teams, embedded within existing platforms, or adopted informally as productivity shortcuts, making it nearly impossible for security and GRC leaders to understand the scope of their AI exposure or verify that safeguards match actual usage. Kovrr’s AI Asset Visibility module brings that blurred picture into focus.

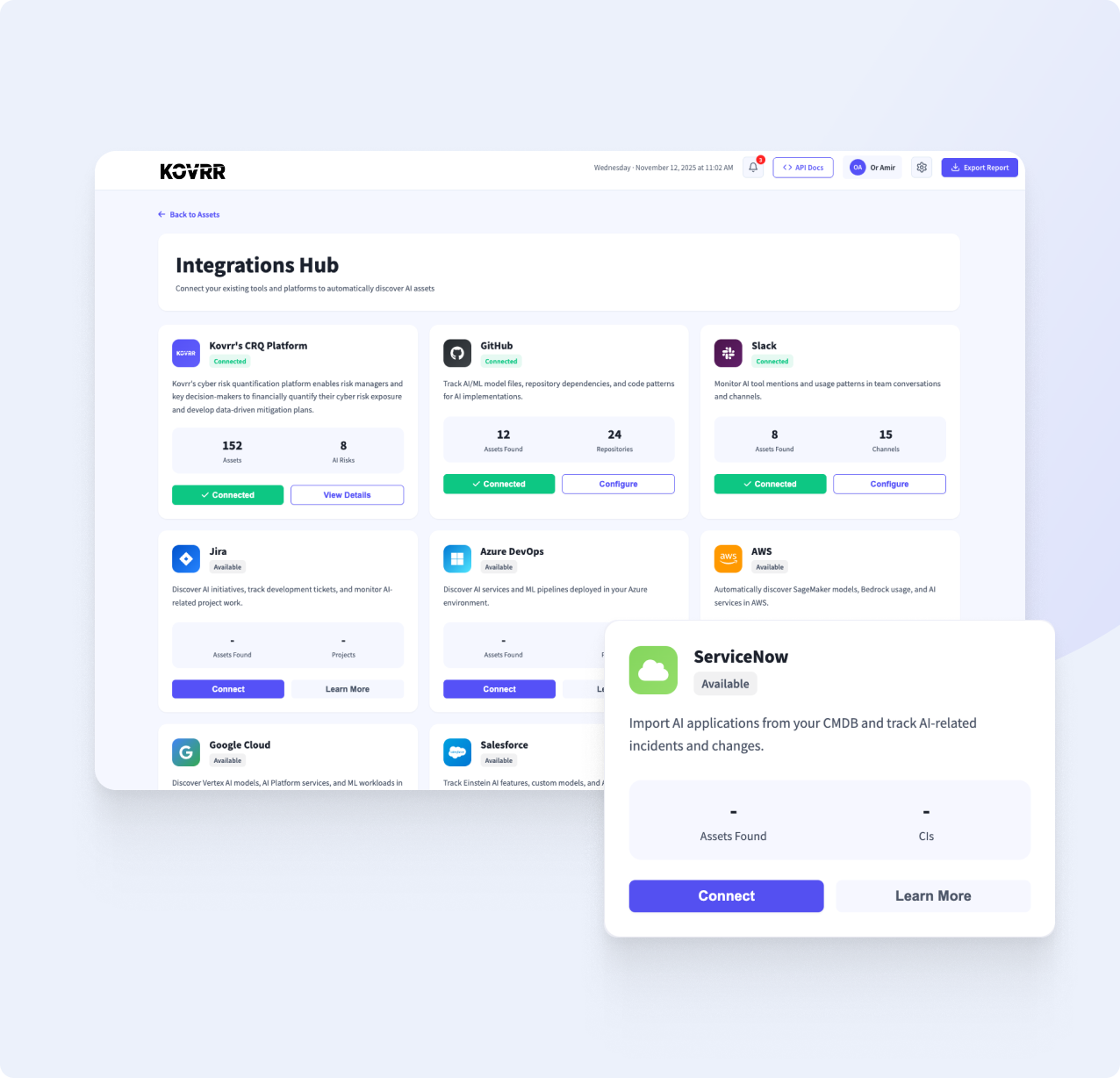

The system continuously discovers sanctioned, shadow, and embedded GenAI tools across the enterprise, consolidating everything into a comprehensive, living inventory. Automated data collection replaces manual reporting, pulling records from integrations, surveys, and secure uploads to create a verified view of each asset, its users, its department, and the data it interacts with.

Once captured, every asset is classified according to its purpose, sensitivity, and business function. User relationships, workflows, and data flows are mapped in a way that makes ownership and accountability unmistakable. Oversight workflows guide teams through approval and review, while exportable reports support audits and leadership briefings. This foundation transforms fragmented insights into a dependable operational map.
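A consolidated inventory is, at bottom, a collection of structured records that can be queried for gaps such as missing owners. The minimal Python sketch below is one way to picture it. Everything in it is an illustrative assumption rather than Kovrr's data model: the `AIAsset` and `Deployment` types, the 1 to 4 sensitivity scale, and the `unowned_sensitive_assets` and `count_by_deployment` helpers.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Deployment(Enum):
    """How a GenAI tool entered the enterprise (hypothetical categories)."""
    SANCTIONED = "sanctioned"
    SHADOW = "shadow"
    EMBEDDED = "embedded"


@dataclass(frozen=True)
class AIAsset:
    """One entry in a consolidated AI inventory (illustrative fields only)."""
    name: str
    deployment: Deployment
    department: str
    purpose: str
    data_sensitivity: int        # assumed scale: 1 (public) to 4 (restricted)
    owner: Optional[str] = None  # None means no accountable owner assigned yet


def unowned_sensitive_assets(inventory: list[AIAsset], min_sensitivity: int = 3) -> list[str]:
    """Return names of assets that handle sensitive data but lack an owner."""
    return sorted(
        asset.name
        for asset in inventory
        if asset.owner is None and asset.data_sensitivity >= min_sensitivity
    )


def count_by_deployment(inventory: list[AIAsset]) -> dict[str, int]:
    """Summarize how many assets are sanctioned, shadow, or embedded."""
    counts = {d.value: 0 for d in Deployment}
    for asset in inventory:
        counts[asset.deployment.value] += 1
    return counts


inventory = [
    AIAsset("chat-assistant", Deployment.SANCTIONED, "Support", "customer replies", 3, "j.doe"),
    AIAsset("notes-summarizer", Deployment.SHADOW, "Sales", "meeting notes", 3),
    AIAsset("crm-copilot", Deployment.EMBEDDED, "Sales", "lead scoring", 4),
    AIAsset("image-gen", Deployment.SHADOW, "Marketing", "ad drafts", 1),
]

print(unowned_sensitive_assets(inventory))  # ['crm-copilot', 'notes-summarizer']
print(count_by_deployment(inventory))       # {'sanctioned': 1, 'shadow': 2, 'embedded': 1}
```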

AI Risk Register: Organizing AI Scenarios and Their Business Impact

Structured oversight depends on understanding how individual AI risks connect to the enterprise’s systems and workflows, yet most organizations lack a consistent method for documenting these relationships. The result is a jumbled portfolio of exposure details that doesn’t support governance programs. Kovrr’s AI Risk Register replaces that patchwork with a single, organized workspace for every AI-related risk.

Teams can define scenarios in a consistent format, categorize them by domain, and link each one directly to the GenAI tools and business processes it affects. Ownership can be assigned at the start of scenario creation, ensuring accountability is clear from identification through mitigation. Each scenario can include the likelihood and potential loss, generating an in-depth profile that captures both technical and business context.

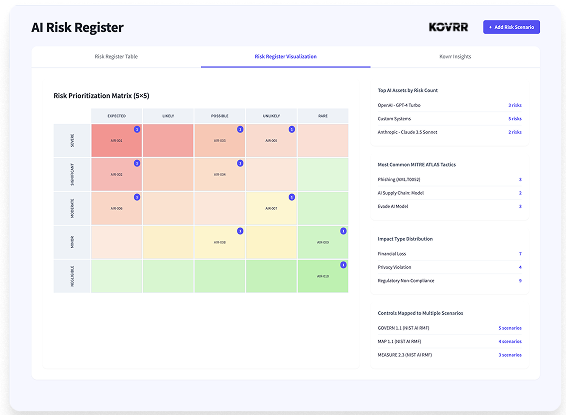

A 5×5 matrix translates these inputs into an at-a-glance view of severity and priority, helping teams understand which risks require immediate focus and how mitigation efforts move each scenario across the matrix over time. Scenario pages also support notes and evidence attachments, giving teams a reliable place to document findings, add context, and prepare for audits without maintaining separate files.
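A 5×5 risk matrix conventionally multiplies a 1 to 5 likelihood rating by a 1 to 5 impact rating and bands the product into priority levels. The sketch below assumes that convention. The band thresholds and scenario ratings are illustrative only, not the values the register uses.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Return the cell score on a 5x5 matrix (1 to 25)."""
    for value in (likelihood, impact):
        if not 1 <= value <= 5:
            raise ValueError("ratings must be integers from 1 to 5")
    return likelihood * impact


def severity_band(score: int) -> str:
    """Map a 1-25 score to a priority band (assumed thresholds)."""
    if score >= 15:
        return "critical"
    if score >= 8:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


# Hypothetical scenarios: name -> (likelihood, impact)
scenarios = {
    "prompt injection via support bot": (4, 4),
    "training-data leakage": (2, 5),
    "hallucinated legal advice": (3, 2),
}

# Highest score first, i.e. the order in which risks need attention.
ranked = sorted(scenarios.items(), key=lambda kv: risk_score(*kv[1]), reverse=True)
for name, (likelihood, impact) in ranked:
    score = risk_score(likelihood, impact)
    print(f"{name}: score={score}, band={severity_band(score)}")
```

Suppose mitigation lowers the prompt-injection likelihood from 4 to 2. Its score drops from 16 to 8, moving it from the critical band to the high band. That is the kind of shift over time the matrix is meant to make visible.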

Framework alignment adds another layer of discipline, mapping scenarios to NIST AI RMF, ISO 42001, and MITRE ATLAS with full traceability. Relevant governance controls are listed directly within each entry as well, showing exactly which safeguards apply and how they support the scenario’s mitigation plan. The register transforms scattered risk notes into a coherent system that leaders can use to evaluate exposure, plan responses, and build governance practices that evolve with the organization.

AI Compliance Readiness: Reviewing Controls Against Emerging Standards

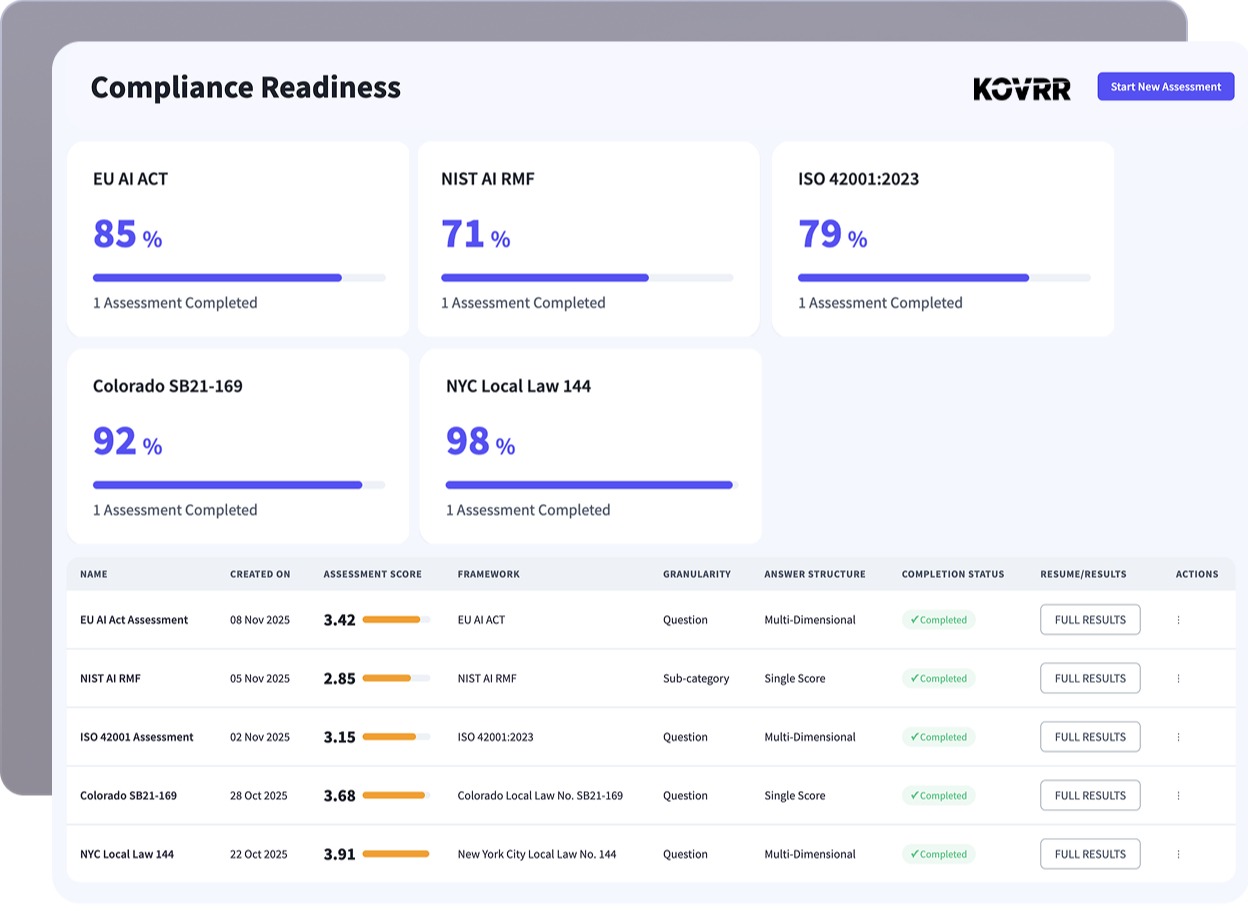

Regulators are sharpening their focus on AI, introducing new expectations for how organizations govern and document its use. Frameworks such as the NIST AI RMF and ISO 42001 are quickly becoming reference points, even for organizations not yet subject to formal regulation. In the EU, the AI Act has set a more formal baseline, and governments across the rest of the world are following suit. These developments make it essential for teams to understand how their current controls measure up.

Kovrr’s AI Compliance Readiness module gives organizations the necessary structure, guiding teams through a framework-aligned assessment that examines governance maturity across domains, control categories, and subcategories. Each question connects to a defined expectation, supported by evidence workflows that help teams capture documentation, attach records, and maintain audit-ready proof of compliance.

Flexible scoring options accommodate stakeholders’ preferences, allowing teams to leverage scale-based scoring and current-versus-target maturity comparisons. Results flow into visual dashboards that highlight strengths, gaps, and trends across entities, turning raw data into insight leadership can rely on. The gap identification and progress tracking features ensure improvements are measurable and easy to validate over time.
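A current-versus-target comparison boils down to a per-category gap. The sketch below assumes a 0 to 5 maturity scale and invented scores. It borrows the NIST AI RMF's four function names (Govern, Map, Measure, Manage) only as category labels and does not reproduce the module's actual scoring.

```python
from statistics import mean

# Hypothetical results: category -> (current, target) maturity on an assumed 0-5 scale.
# Category names follow the four NIST AI RMF functions purely for illustration.
ASSESSMENT = {
    "Govern": (2.0, 4.0),
    "Map": (3.0, 4.0),
    "Measure": (1.5, 4.0),
    "Manage": (3.5, 4.0),
}


def maturity_gaps(results: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return target minus current per category, floored at 0 once the target is met."""
    return {cat: max(target - current, 0.0) for cat, (current, target) in results.items()}


gaps = maturity_gaps(ASSESSMENT)
largest_gap = max(gaps, key=gaps.get)
overall_current = mean(current for current, _ in ASSESSMENT.values())
overall_target = mean(target for _, target in ASSESSMENT.values())

print(gaps)         # {'Govern': 2.0, 'Map': 1.0, 'Measure': 2.5, 'Manage': 0.5}
print(largest_gap)  # Measure
print(overall_current, overall_target)  # 2.5 4.0
```

Re-running the same calculation after each assessment cycle gives a simple, verifiable measure of progress: the gaps should shrink toward zero.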

The module’s multi-entity support capabilities also keep evaluations consistent across business units and subsidiaries, enabling a unified compliance posture even in complex environments. Each assessment is exportable in executive-ready format, helping teams support audits, board reviews, and regulatory inquiries with structured, defensible evidence. The AI Compliance Readiness assessment turns compliance preparedness into an ongoing governance discipline.

AI Risk Quantification: Translating Exposure Into Tangible Insights

Although listing scenarios and describing potential outcomes is a necessary foundation of governance, understanding GenAI risk requires more. Leaders need data showing how often incidents might occur, how severe they could be, and which safeguards meaningfully reduce that exposure. Without that level of insight, governance decisions rest on assumptions rather than evidence.

Kovrr’s AI Risk Quantification module provides the modeling depth needed to close that gap. The process begins by capturing how a specific GenAI system operates inside the business, including model access, data sensitivity, reliance factors, and mapped controls. Each model is evaluated in its own environment, allowing teams to quantify exposure for the individual GenAI tools they rely on.

Using industry benchmarks, tailored threat intelligence, and established frequency–severity distributions, the engine calculates incident likelihood and potential losses with measurable precision. The results are then presented in metrics that support executive decision-making, including Annualized Loss Expectancy and loss exceedance curves. Views of loss are likewise broken down by access vector, event type, and underlying risk drivers, giving teams a clear sense of what contributes most to exposure.
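A common way to derive Annualized Loss Expectancy and a loss exceedance curve from frequency and severity distributions is Monte Carlo simulation. Each simulated year draws an incident count, then draws a loss for each incident, and the process repeats for many years. The sketch below assumes a Poisson frequency and a lognormal severity with made-up parameters. The post does not describe the distributions or benchmark data Kovrr's engine actually uses.

```python
import math
import random


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw a Poisson count with Knuth's multiplication method (fine for small rates)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_annual_losses(rate: float, mu: float, sigma: float,
                           years: int, seed: int = 7) -> list[float]:
    """Simulate total loss per year: Poisson incident count, lognormal loss per incident."""
    rng = random.Random(seed)
    totals = []
    for _ in range(years):
        incidents = sample_poisson(rate, rng)
        totals.append(sum(rng.lognormvariate(mu, sigma) for _ in range(incidents)))
    return totals


def loss_exceedance(totals: list[float], thresholds: list[float]) -> dict[float, float]:
    """Estimate P(annual loss > x) for each threshold x."""
    n = len(totals)
    return {x: sum(t > x for t in totals) / n for x in thresholds}


# Hypothetical scenario: 0.8 incidents per year, median loss per incident of $200k.
losses = simulate_annual_losses(rate=0.8, mu=math.log(200_000), sigma=1.0, years=20_000)
ale = sum(losses) / len(losses)  # Annualized Loss Expectancy = mean simulated annual loss
curve = loss_exceedance(losses, [100_000, 500_000, 1_000_000, 5_000_000])

print(f"ALE ~ ${ale:,.0f}")
for threshold, prob in curve.items():
    print(f"P(annual loss > ${threshold:,}) ~ {prob:.3f}")
```

As a sanity check, the simulated ALE should land near the analytical value λ·exp(μ + σ²/2). For these inputs that is about $264k.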

Scenario comparison tools then help teams evaluate alternative mitigation strategies, while control impact modeling shows which safeguards deliver the greatest reduction in modeled loss. Each quantified scenario evolves as GenAI deployments or controls change, reinforcing governance programs with a living source of financial and operational insight.
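Control impact modeling and scenario comparison can also be sketched analytically. Expected annual loss equals the expected incident count times the expected loss per incident. With Poisson rate λ and lognormal severity, that gives ALE = λ·exp(μ + σ²/2). The sketch assumes each control simply scales frequency or severity by a fixed factor. The control names, factors, and costs are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    """A candidate safeguard and its assumed effect (hypothetical values)."""
    name: str
    frequency_factor: float  # multiplier on incident rate, e.g. 0.6 means 40% fewer incidents
    severity_factor: float   # multiplier on the loss of each incident
    annual_cost: float


def expected_annual_loss(rate: float, mu: float, sigma: float) -> float:
    """E[annual loss] = E[N] * E[X]; the lognormal mean is exp(mu + sigma**2 / 2)."""
    return rate * math.exp(mu + sigma ** 2 / 2)


def evaluate(control: Control, rate: float, mu: float, sigma: float) -> tuple[float, float]:
    """Return (modeled loss reduction, reduction net of the control's cost)."""
    baseline = expected_annual_loss(rate, mu, sigma)
    # Multiplying a lognormal loss by c is the same as shifting mu by log(c).
    mitigated = expected_annual_loss(
        rate * control.frequency_factor, mu + math.log(control.severity_factor), sigma
    )
    reduction = baseline - mitigated
    return reduction, reduction - control.annual_cost


RATE, MU, SIGMA = 0.8, math.log(200_000), 1.0  # hypothetical scenario inputs
candidates = [
    Control("prompt filtering", 0.6, 1.0, 30_000),
    Control("DLP on model inputs", 1.0, 0.5, 60_000),
    Control("vendor SLA review", 0.9, 0.9, 10_000),
]

results = {c.name: evaluate(c, RATE, MU, SIGMA) for c in candidates}
by_reduction = sorted(results, key=lambda n: results[n][0], reverse=True)
by_net_value = sorted(results, key=lambda n: results[n][1], reverse=True)
print(by_reduction)  # ['DLP on model inputs', 'prompt filtering', 'vendor SLA review']
print(by_net_value)  # ['prompt filtering', 'DLP on model inputs', 'vendor SLA review']
```

The ranking flips once cost is included. The control with the largest modeled loss reduction is not necessarily the best investment.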

MITRE ATLAS–based recommendations further guide the interpretation of results, aligning modeled risks with real-world tactics and expected behaviors. With this foundation, organizations can communicate exposure to leadership, prioritize investments, and demonstrate measurable improvement over time. Quantification turns GenAI risk into a practical asset for planning and long-term resilience.

AI Assurance Plan: Building a Roadmap for Measurable Oversight

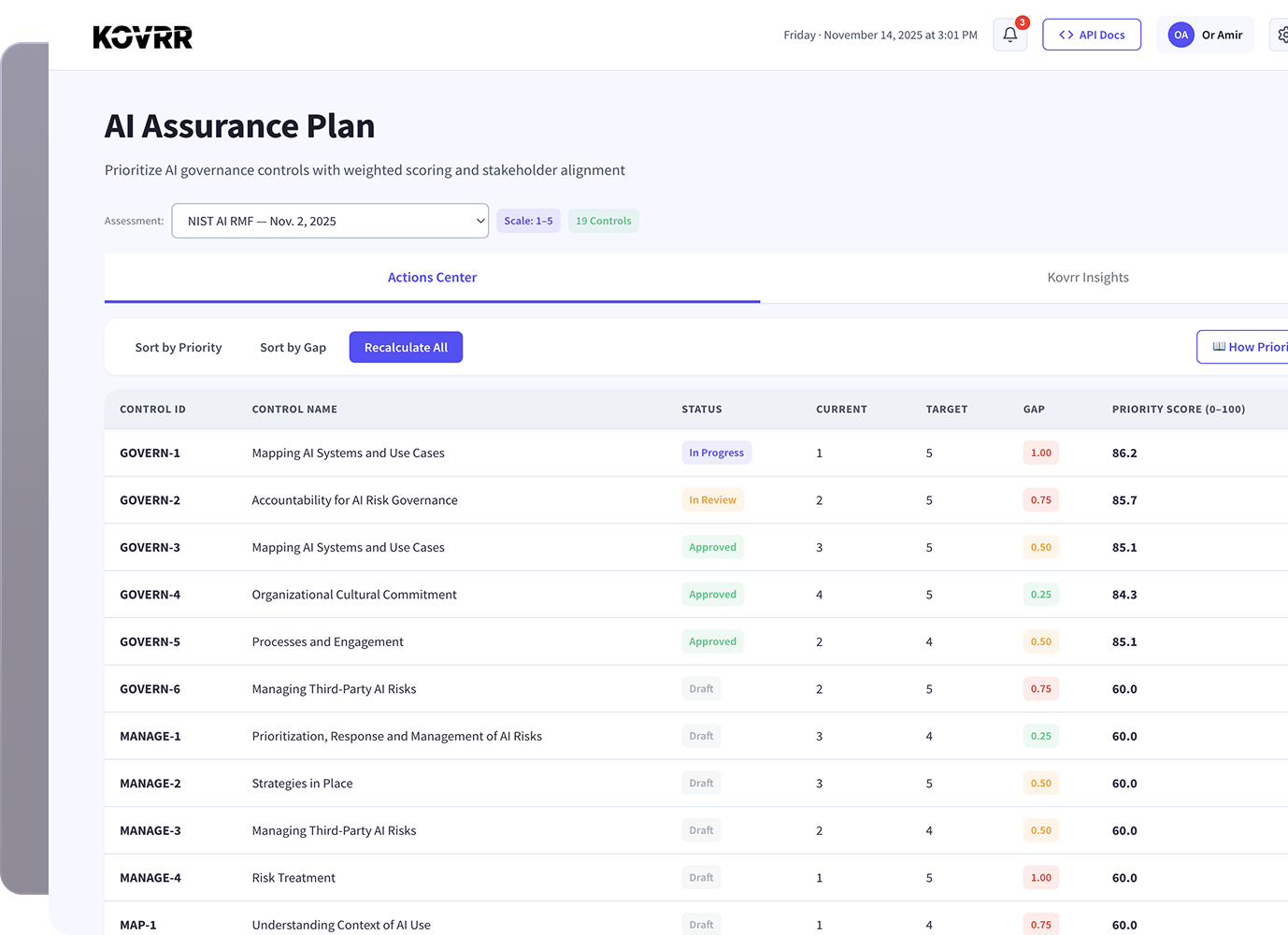

Assessment results only create value when they can be translated into actionable steps. Indeed, many governance programs stall after identifying gaps because teams lack a structured, quantitative way to prioritize improvements. Kovrr’s AI Assurance Plan closes that gap by turning safeguard maturity findings into a measurable roadmap that ensures resources are directed toward the actions with the greatest impact.

The module evaluates governance controls against frameworks and regulations such as NIST AI RMF, ISO 42001, and the EU AI Act, and then applies weighted scoring to reveal where weaknesses exist and how much each one matters. Every control is tied to its influence on maturity and modeled exposure, helping teams understand which improvements meaningfully advance oversight and reduce risk. This capability creates a direct connection between safeguard performance and measurable outcomes, making prioritization defensible.
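Weighted scoring of this kind can be approximated by multiplying each control's maturity gap by a weight reflecting its importance. The controls, the 0 to 5 scale, and the point weights below are hypothetical. The post does not publish Kovrr's weighting scheme.

```python
# Hypothetical controls: name -> (current maturity, target maturity, weight in points)
CONTROLS = {
    "AI use policy": (2, 4, 2),
    "model access reviews": (1, 4, 7),
    "output monitoring": (3, 4, 5),
    "incident response playbook": (2, 3, 6),
}


def weighted_maturity(controls: dict[str, tuple[int, int, int]]) -> float:
    """Weighted average of current maturity on the assumed 0-5 scale."""
    total_weight = sum(w for _, _, w in controls.values())
    return sum(cur * w for cur, _, w in controls.values()) / total_weight


def prioritize(controls: dict[str, tuple[int, int, int]]) -> list[tuple[str, int]]:
    """Rank controls by weighted gap, weight * (target - current), largest first."""
    scored = {name: w * max(tgt - cur, 0) for name, (cur, tgt, w) in controls.items()}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


print(weighted_maturity(CONTROLS))  # 1.9
for name, gap in prioritize(CONTROLS):
    print(f"{name}: weighted gap {gap}")
```

Weighting changes the order. The AI use policy has a larger raw gap (2 levels) than output monitoring (1 level), yet it ranks below it because it carries less weight.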

Teams can also review each control’s details, attach notes and evidence, and document audit materials directly within the platform. Interactive dashboards visualize maturity progression, gap severity, and expected improvement over time, allowing leaders to track progress through quantifiable indicators. Dependency sequencing and value-based rankings support long-term planning, ensuring that resources flow toward initiatives that deliver the strongest return.
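Dependency sequencing combined with value-based ranking can be sketched with Python's standard-library `graphlib.TopologicalSorter`. A control becomes eligible once its prerequisites are done, and among eligible controls the highest-value one goes next. This is a simple greedy heuristic, not necessarily the platform's method. The control names, dependencies, and value scores are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical roadmap: control -> set of prerequisite controls.
PREREQUISITES = {
    "AI asset inventory": set(),
    "AI use policy": set(),
    "model access reviews": {"AI asset inventory"},
    "output monitoring": {"AI asset inventory"},
    "incident response playbook": {"output monitoring", "AI use policy"},
}

# Hypothetical value scores, e.g. modeled loss reduction per unit of cost.
VALUE = {
    "AI asset inventory": 5,
    "AI use policy": 3,
    "model access reviews": 9,
    "output monitoring": 6,
    "incident response playbook": 8,
}


def sequence(prereqs: dict[str, set[str]], value: dict[str, int]) -> list[str]:
    """Greedy order: among controls whose prerequisites are done, pick the highest value."""
    sorter = TopologicalSorter(prereqs)
    sorter.prepare()  # also raises graphlib.CycleError if dependencies are circular
    ready: list[str] = []
    order: list[str] = []
    while sorter.is_active():
        ready.extend(sorter.get_ready())
        ready.sort(key=lambda c: value[c])  # highest value ends up last
        nxt = ready.pop()
        order.append(nxt)
        sorter.done(nxt)
    return order


print(sequence(PREREQUISITES, VALUE))
```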

By linking readiness results to a practical, data-driven roadmap, the AI Assurance Plan moves governance programs from observation to sustained advancement. It gives security and GRC leaders a structured path forward, ensuring teams are aligned while reinforcing accountability across the enterprise. With this module, building resilience in the age of AI becomes a much more feasible task that teams can implement step by step.

AI Third-Party Risk Monitoring: Identifying Vendor AI Exposure

External partners are adopting GenAI at a pace that mirrors internal teams, often embedding models into workflows that influence critical business functions. However, vendor activity frequently falls outside direct oversight mechanisms. The result is additional exposure that organizations either struggle to manage or fail to track at all. Kovrr’s AI Third-Party Risk Monitoring capability extends the same discovery and visibility foundation used for internal AI systems, giving teams a unified view of how suppliers and partners deploy GenAI across their business ecosystem.

The module maintains a centralized inventory of vendors and sub-vendors, capturing their GenAI activity and governance safeguards. Continuous monitoring detects changes in vendor usage, updates to AI models, and shifts in control effectiveness, ensuring organizations stay aware of developments that may alter risk. Interactive network views map supply-chain dependencies, helping teams uncover otherwise hidden vulnerabilities and understand where GenAI is driving high-impact or data-sensitive processes.
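Mapping vendors and sub-vendors is essentially a graph traversal. Starting from a direct supplier, you follow its dependencies to find every downstream party that could touch your data. The sketch below runs a breadth-first search over a hypothetical vendor graph and returns the shortest path to each GenAI-using vendor it can reach. The vendor names and the `uses_genai` set are invented.

```python
from collections import deque

# Hypothetical supply chain: vendor -> vendors it depends on (sub-vendors).
DEPENDS_ON = {
    "PayrollCo": ["CloudHost", "DocAI"],
    "DocAI": ["ModelAPI"],
    "CloudHost": [],
    "ModelAPI": [],
    "HelpdeskCo": ["ModelAPI"],
}
USES_GENAI = {"DocAI", "ModelAPI", "HelpdeskCo"}


def genai_paths(root: str) -> list[list[str]]:
    """Shortest dependency path from root to each reachable GenAI vendor (root included)."""
    parent: dict[str, str | None] = {root: None}
    queue = deque([root])
    while queue:
        vendor = queue.popleft()
        for sub in DEPENDS_ON.get(vendor, []):
            if sub not in parent:  # visited check also protects against cycles
                parent[sub] = vendor
                queue.append(sub)
    paths = []
    for vendor in parent:
        if vendor in USES_GENAI:
            path, node = [], vendor
            while node is not None:  # walk back to the root
                path.append(node)
                node = parent[node]
            paths.append(path[::-1])
    return paths


print(genai_paths("PayrollCo"))
# [['PayrollCo', 'DocAI'], ['PayrollCo', 'DocAI', 'ModelAPI']]
```

The two-hop path to ModelAPI illustrates the kind of indirect GenAI exposure that a list of direct suppliers alone would miss.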

Vendor scorecards provide detailed insight into safeguards, incidents, and maturity alignment with frameworks such as NIST AI RMF and ISO 42001. Due diligence workflows streamline onboarding and renewal, while contract intelligence tracks documentation, certifications, and policy gaps in one place. Real-time updates keep assessments current, giving organizations a dependable view of third-party exposure as their ecosystem evolves.
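A vendor scorecard can be reduced to a weighted average over assessed dimensions. Hard findings, such as a missing certification or a poor incident history, are better surfaced as separate flags than averaged away. The dimensions, weights, 0 to 100 scale, and flag threshold below are assumptions for illustration only.

```python
from dataclasses import dataclass, field

# Assumed dimension weights (sum to 100); each dimension is scored 0-100.
WEIGHTS = {"safeguards": 40, "framework_alignment": 35, "incident_history": 25}


@dataclass
class VendorAssessment:
    vendor: str
    scores: dict[str, int]  # dimension -> 0-100
    missing_certifications: list[str] = field(default_factory=list)


def scorecard(a: VendorAssessment) -> dict:
    """Weighted score plus flags that should not be averaged away."""
    missing = set(WEIGHTS) - set(a.scores)
    if missing:
        raise ValueError(f"{a.vendor}: unscored dimensions {sorted(missing)}")
    weighted = sum(a.scores[d] * w for d, w in WEIGHTS.items()) / sum(WEIGHTS.values())
    flags = [f"missing certification: {c}" for c in a.missing_certifications]
    if a.scores["incident_history"] < 50:  # assumed threshold
        flags.append("poor incident history")
    return {"vendor": a.vendor, "score": round(weighted, 1), "flags": flags}


card = scorecard(VendorAssessment(
    "DocAI",
    {"safeguards": 70, "framework_alignment": 60, "incident_history": 40},
    ["ISO/IEC 42001"],
))
print(card)
```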

By integrating vendor monitoring directly into Kovrr’s AI Risk Governance foundation, teams gain a consistent way to evaluate external dependencies and maintain a transparent, measurable view of GenAI use beyond the enterprise. The module helps stakeholders strengthen oversight practices where they have the least direct control, reducing the blind spots that often carry the most risk and expose the organization to potentially material losses.

Establishing Stronger Oversight With Kovrr’s AI Governance Suite

Organizations still relying on fragmented reviews and ad hoc controls to guide AI adoption are falling behind. The pace of GenAI implementation demands a governance approach built on robust structures, measurement, and transparent ownership. Kovrr’s AI Governance suite provides that foundation by giving GRC and security teams a unified system for identifying their AI footprint, documenting risk, evaluating safeguards, quantifying exposure, planning improvements, and monitoring vendor activity.

Each of the modules strengthens a different layer of AI oversight, yet all operate from a common data core. AI Asset Visibility creates the baseline that governance programs depend on, while the AI Risk Register organizes scenarios in a format that supports accountability and defensible reporting. The AI Compliance Readiness Assessment reveals whether safeguards match emerging standards, and AI Risk Quantification converts those risk insights into practical information that leaders, regardless of technical expertise, can act on.

The AI Assurance Plan transforms findings into a structured roadmap for ongoing improvement, and finally, the Third-Party Monitoring module extends these capabilities across the external ecosystem so that oversight keeps pace with how suppliers use GenAI. When harnessed in combination, these elements give organizations the means to govern AI with conviction. Kovrr’s suite of AI modules ultimately offers enterprises a clear path to building governance programs that can scale as their AI footprint continues to expand.

To understand how Kovrr’s AI Governance Suite can strengthen your oversight program, walk through the capabilities firsthand. Schedule a free demo today.
