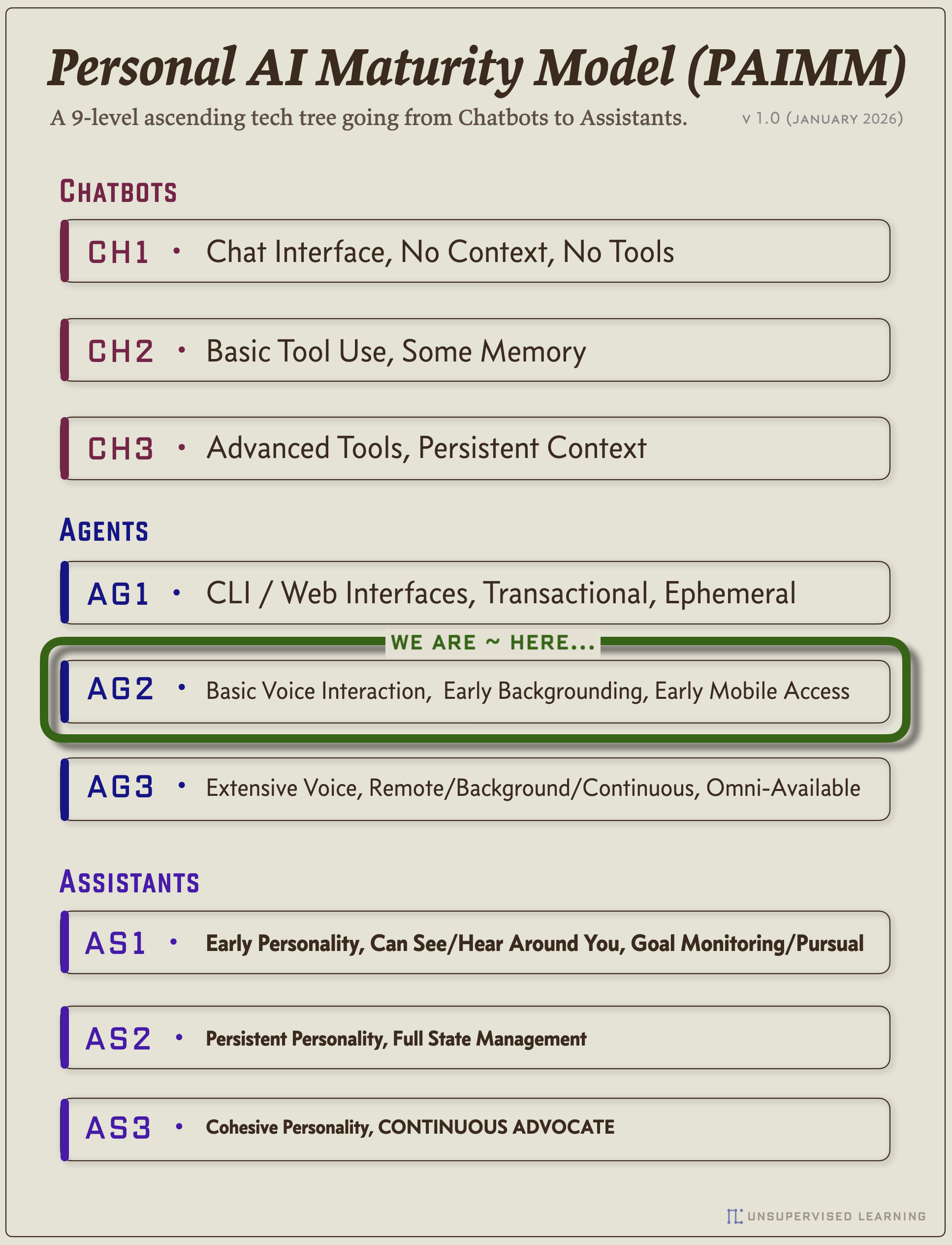

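This article describes nine levels of personal AI progress, from basic Chatbots to a complete AI companion system with autonomy and understanding. Across the nine levels, grouped into three tiers, it traces AI's evolution from simple question-and-answer interaction, to Agents that can coordinate complex tasks, to comprehensive digital Assistants with personality and proactivity that help users become safer, healthier, and more effective. 2025-12-15 05:30:0 Author: danielmiessler.com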

9 tiers of personal AI progress, from chatbots to a full AI companion

December 14, 2025

As we think about what's happening with Agents, Agent frameworks like Claude Code, voice interfaces, etc., I invite you to ask a simple question:

Where is this all going?

- What are we actually building towards?

- How far along are we?

- How many more steps are there?

- What is the current next step?

My overall approach to answering these is to imagine the ideal capabilities of an AI companion system—through the lens of what humans eternally strive for—and work backwards.

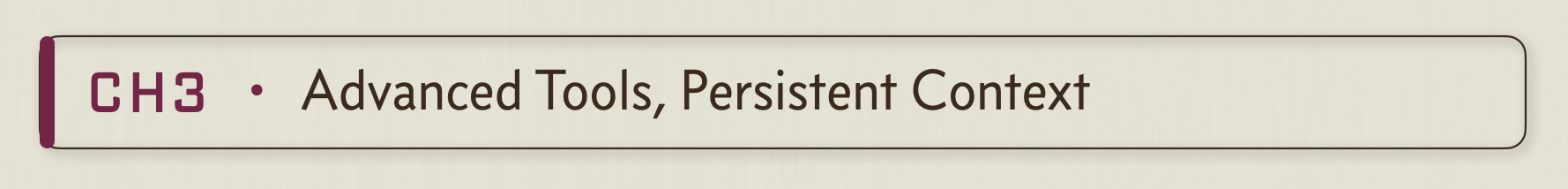

So that's what this maturity model does, in 9 ascending levels.

The idea is to have handles for talking about—and working on—progression towards the destination.

So here are the tiers: from past, through current, to future states.

TIER 1 - CHATBOTS

The first interface to modern AI. You ask a question and you get back an extraordinarily useful answer. And you take it from there.

This was ChatGPT when it first came out. Brilliant. Limited.

- Just a chat interface

- No tools

- No knowledge of you whatsoever

- Mind-blowing compared to not having anything, but definitely early days

The beginning of some tools and some rudimentary memory features.

- Still just chat

- Tools are still basic

- Limited knowledge of you and your goals

Chatbots' final form before moving into Agents.

- Tooling far more advanced now

- Additional context and memory features

- Agents are still mostly experimental

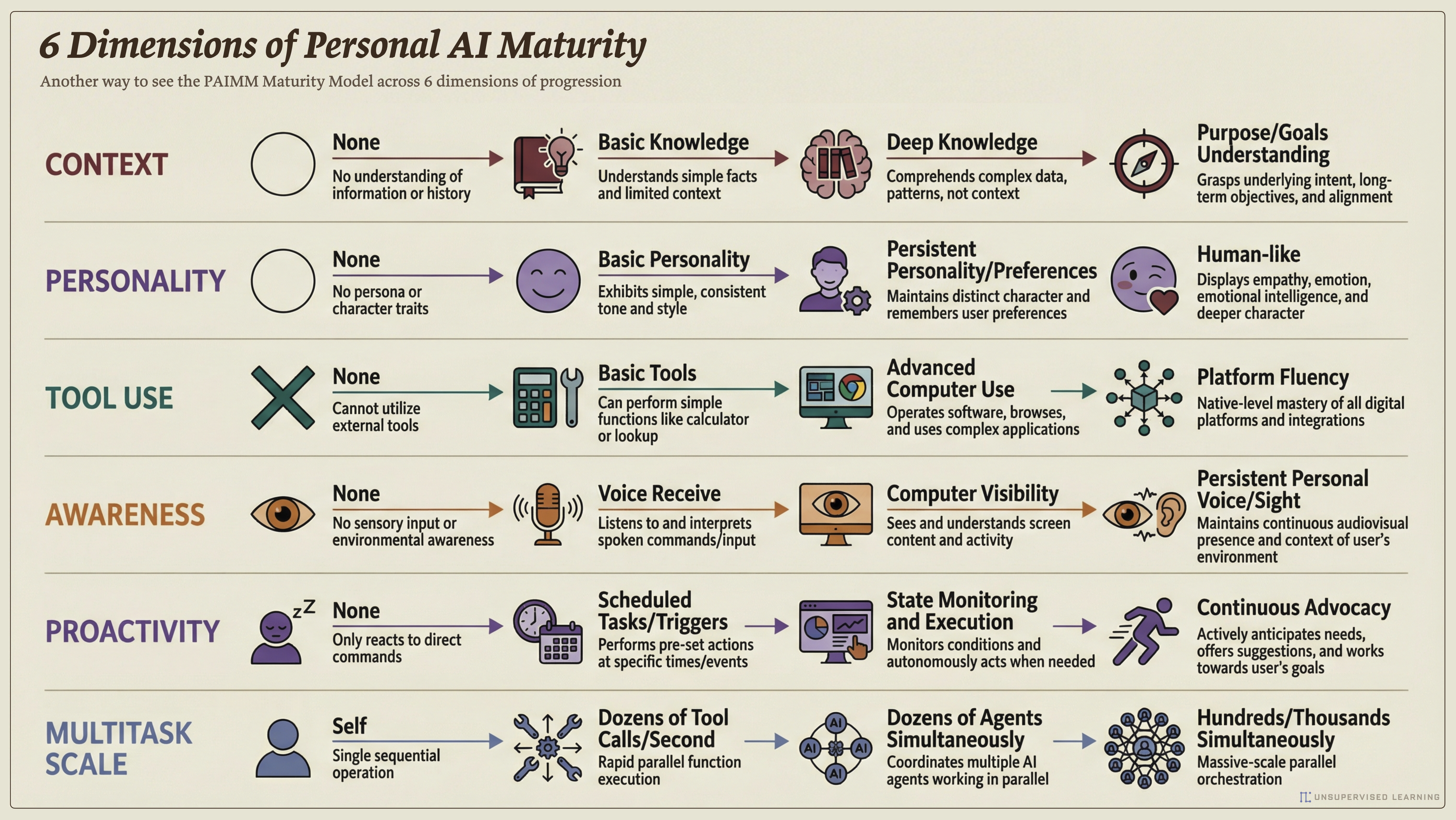

Breaking these across 6 dimensions

Another way to break down the transitions is as a set of spectrums across 6 dimensions.

- Context [ None -> Basic Knowledge -> Deep Knowledge -> Understanding of Purpose/Goals ]

- Personality [ None -> Basic Personality -> Persistent Personality/Preferences -> Human-like ]

- Tool Use [ None -> Basic Tools -> Advanced Computer Use -> Platform Fluency ]

- Awareness [ None -> Voice Receive -> Computer Visibility -> Persistent Personal Voice/Sight ]

- Proactivity [ None -> Scheduled Tasks/Triggers -> State Monitoring and Execution -> Continuous Advocacy ]

- Multitask Scale [ Self -> Dozens of Tool Calls/Second -> Dozens of Agents Simultaneously -> Hundreds/Thousands Simultaneously ]
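One way to make these six spectrums concrete is to treat each one as an ordered scale and place a given system on it. The Python sketch below does that. The stage labels are copied from the list above; the data layout, the 0.0 to 1.0 scoring, and the helper names (`stage_index`, `profile`, `weakest_dimension`) are hypothetical illustrations, not part of the model itself.

```python
# Stage labels copied from the six dimensions above; the structure and
# helper functions are hypothetical, for illustration only.
DIMENSIONS: dict[str, list[str]] = {
    "Context": ["None", "Basic Knowledge", "Deep Knowledge",
                "Understanding of Purpose/Goals"],
    "Personality": ["None", "Basic Personality",
                    "Persistent Personality/Preferences", "Human-like"],
    "Tool Use": ["None", "Basic Tools", "Advanced Computer Use",
                 "Platform Fluency"],
    "Awareness": ["None", "Voice Receive", "Computer Visibility",
                  "Persistent Personal Voice/Sight"],
    "Proactivity": ["None", "Scheduled Tasks/Triggers",
                    "State Monitoring and Execution", "Continuous Advocacy"],
    "Multitask Scale": ["Self", "Dozens of Tool Calls/Second",
                        "Dozens of Agents Simultaneously",
                        "Hundreds/Thousands Simultaneously"],
}


def stage_index(dimension: str, stage: str) -> int:
    """Position of `stage` on its dimension's spectrum (0 = lowest)."""
    return DIMENSIONS[dimension].index(stage)


def profile(assessment: dict[str, str]) -> dict[str, float]:
    """Convert a {dimension: stage} assessment into 0.0-1.0 progress scores."""
    return {
        dim: stage_index(dim, stage) / (len(DIMENSIONS[dim]) - 1)
        for dim, stage in assessment.items()
    }


def weakest_dimension(assessment: dict[str, str]) -> str:
    """Return the dimension furthest from its end state (first one on ties)."""
    scores = profile(assessment)
    return min(scores, key=lambda dim: scores[dim])
```

Treating the lowest-scoring dimension as the thing to work on next is just one possible reading of "What is the current next step?"; the article itself doesn't define a scoring rule.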

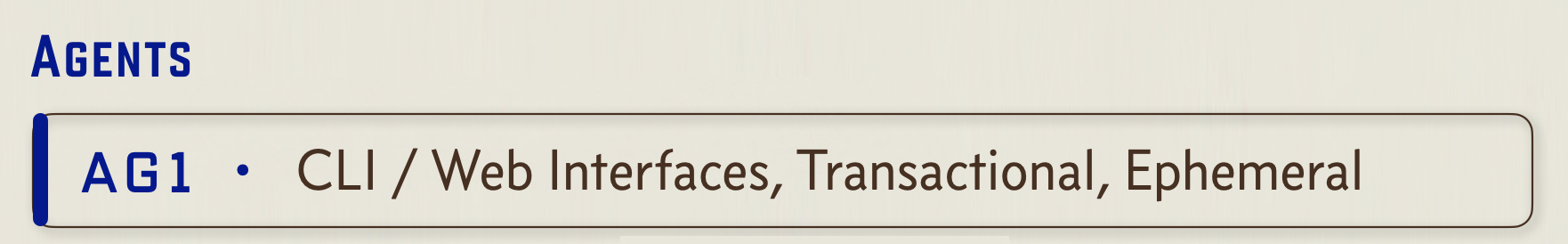

TIER 2 - AGENTS

Instead of us asking questions and getting answers from Chatbots, we assign tasks to Agents that carry them out autonomously on our behalf.

The initial transition from Chatbots to Agents as the main way of thinking about and using AI.

- Standalone agents via LangGraph and other frameworks

- Early 2025 acceleration

- Claude Code and n8n make them mainstream

- Claude Code best/most-used example, but CLI-based

- Agents are mostly ephemeral (n8n/similar being the exception)

- Early voice interaction stuff

- Experimental computer usage

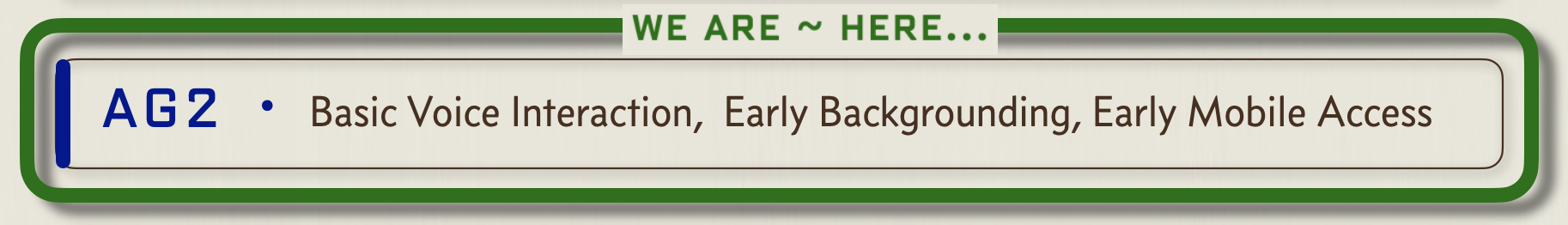

Agents start to become the main mental model for AI work.

- Agents become a lot more controllable and deterministic due to scaffolding of Agentic Systems like Claude Code

- Background and scheduled agents starting to materialize

- Voice accelerates as a usage pattern

- Early signs of universal accessibility, e.g., you can interact with your agents via a mobile app or OS assistant

- Computer usage gets more serious but still isn't mainstream

"Predictions are hard, especially about the future." (Niels Bohr)

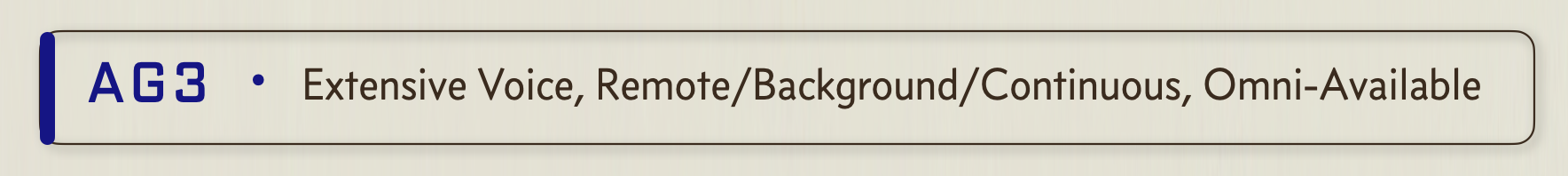

The final form of Agents. What still separates them from Assistants is mainly personality and trust.

- Agents are now interactive and also run continuously in the background, locally and in the cloud

- Agents easily manageable from mobile / device when not on main system / traveling / etc.

- Extensive use of voice as the interface

- Computer usage becomes viable and adoption begins

- Very advanced and steerable, but still mostly reactive vs. proactive

TIER 3 - ASSISTANTS

Instead of random Agents performing work, we have a named, trusted Assistant that works with us to further our goals.

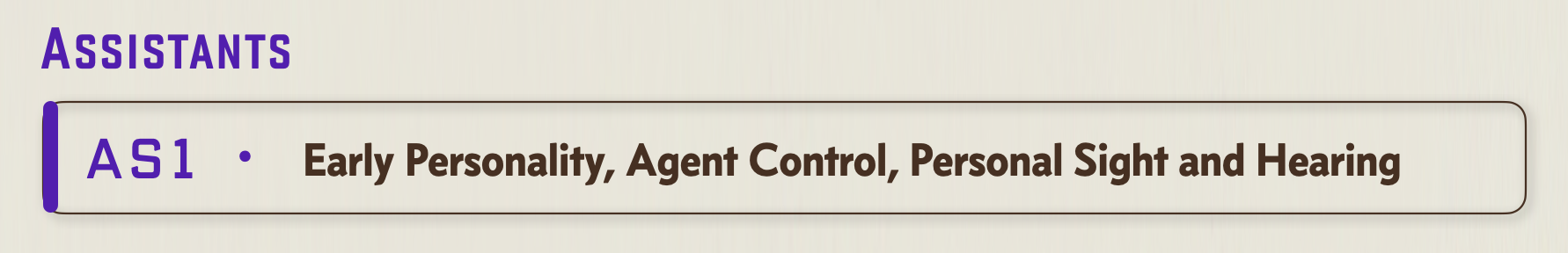

The focus begins to shift from Agents to an Assistant that uses Agents in the background to proactively pursue your goals.

- The first transition from Reactive to Proactive

- Assistants start to have initial personalities, both through the providers and also through custom scaffolding (persistent personality context)

- Assistants start to become our primary interface to AI

- Agents start to become less prominent: background elements working to do your Assistant's bidding

- Your Assistant's context about you starts including things like your Goals, Challenges, Metrics, Projects, and other aspects of your life

- Voice overtakes typing as primary interface

- Your Assistant can now (significantly) see and hear what's happening on your computer

- Early signs of personal cameras and microphones that you can wear on your body (not just while on your computer) to give your Assistant awareness of your environment, i.e., seeing and hearing what you're seeing and hearing, watching behind you and around you, etc.

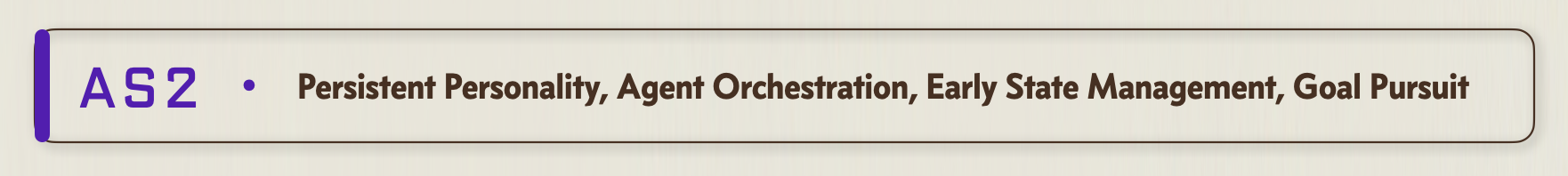

Assistant personalities start to crystallize, and they begin acting as Proactive Advocates for us and our goals, rather than as reactive helpers.

- Initial unification of all inputs into a cohesive picture that your Assistant can see and understand

- Full Agent orchestration, including spin up and spin down, custom task assignment, etc., all happening transparently in the background without your knowledge
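The spin-up/spin-down orchestration described above can be sketched with Python's standard `asyncio` library: the Assistant fans out one short-lived worker per subtask, caps how many run at once, and collects the results. This is a minimal sketch under stated assumptions: `run_agent` is a hypothetical placeholder for whatever actually does the work (a model call, a tool, a script), and the default cap of 3 concurrent agents is arbitrary.

```python
import asyncio


async def run_agent(task: str) -> str:
    """Hypothetical placeholder for a real agent (model call, tool use, etc.)."""
    await asyncio.sleep(0)  # yield to the event loop, as real I/O-bound work would
    return f"done: {task}"


async def orchestrate(tasks: list[str], max_concurrent: int = 3) -> dict[str, str]:
    """Spin up one short-lived agent per task and collect its result.

    Each agent exists only while its task runs (spin up, work, spin down),
    and a semaphore keeps at most `max_concurrent` agents active at once.
    Task strings are used as result keys, so they are assumed to be unique.
    """
    limit = asyncio.Semaphore(max_concurrent)

    async def supervised(task: str) -> tuple[str, str]:
        async with limit:  # wait for a free slot before starting this agent
            return task, await run_agent(task)

    results = await asyncio.gather(*(supervised(t) for t in tasks))
    return dict(results)
```

Calling `asyncio.run(orchestrate(["research couch", "check calendar"]))` returns a dict mapping each task to its result; the caller never manages individual agents, which is the "transparently in the background" part.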

Your Assistant begins to understand what you're trying to do in life, your goals, the metrics that matter to you, etc., which allows him/her/it to start thinking proactively about how to help you accomplish them.

We start to see the concept of Managing State, i.e., your Assistant periodically takes inventory of all inputs and assesses Current State relative to Desired State, in order to plan actions that move you towards Desired State.

Nearly full use of all your computing environments and interfaces.
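The Managing State idea described above (take inventory, compare Current State to Desired State, plan actions) can be sketched as a simple gap calculation. This assumes every metric is numeric and higher-is-better; the `Metric` class, the 10% on-track threshold, and the action strings are all hypothetical, and a real Assistant would need far richer state and planning.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    current: float
    desired: float

    @property
    def gap(self) -> float:
        """Fraction of the target still missing (0.0 = on or above target)."""
        if self.desired == 0:
            return 0.0  # no meaningful target to measure against
        return max(0.0, (self.desired - self.current) / self.desired)


def plan(metrics: list[Metric], threshold: float = 0.1) -> list[str]:
    """Compare Current State to Desired State; list actions, largest gap first.

    Metrics whose gap is at or below `threshold` are treated as on track.
    """
    off_track = [m for m in metrics if m.gap > threshold]
    off_track.sort(key=lambda m: m.gap, reverse=True)
    return [f"close gap on {m.name}: {m.current:g} -> {m.desired:g}"
            for m in off_track]
```

Fed the quarterly-review numbers from the example later in the piece (12% course completion against a 100% target, zero of three partnerships), it would rank the partnerships gap (100%) ahead of the course gap (88%).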

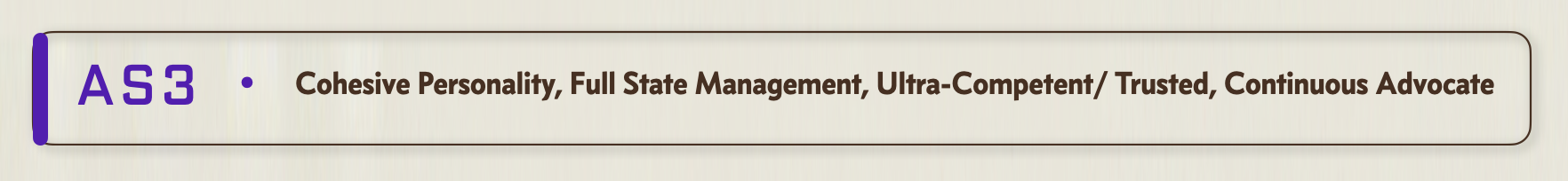

AS3 is the final level of this maturity model. This is the Digital Assistant (DA) I described in 2016, and one that has been partially depicted in various ways in sci-fi books and films for decades.

There are many more features that could be added as you go further out in time and tech, but I'm really only thinking 5-10 years ahead. Even going beyond 5 years is nutty given how fast things are changing.

Here are the main characteristics of AS3.

Trusted Companion—AS3-level Assistants feel more like trusted companions, partners, protectors, friends, and confidants than technology, managing both life and work while becoming (for both better and worse) many people's closest relationships

Loved Ones Monitoring—Continuous monitoring of everyone you care about who can't monitor themselves (children, elderly parents, those with special needs), watching all cameras, security systems, and logs 24/7 for signs of danger or distress

Continuous Advocate—Works continuously, without rest, as an Advocate. Constantly scanning the world for opportunities, threats, better deals, useful information, and ways to optimize your life according to your goals

Building Partner—When you sit down at a computer, your Assistant has full access to everything the computer can do, can see all your screens, can hear everything. You can speak, type, and gesture, and have your DA do most of the work using all the power of the connected systems

Environmental Customization—Automatically adjusts environments you enter into such as lighting, temperature, music, displays, and ambient settings in any space you enter based on preferences, current mood, time of day, and what you're trying to accomplish

Enhanced Perception—Will grant superhuman-like senses through available feeds: seeing through walls via building cameras, hearing specific conversations across rooms by filtering audio, accessing available mics, zooming into distant objects, accessing thermal vision and other sensory augmentation

Active Protection—Rewrites abusive messages before you see them, summarizes manipulative communications to extract what's really being asked, removes extremist propaganda from feeds, fact-checks claims in real-time during conversations, performs live character analysis on people you're interacting with

Universal Authentication—Handles all authentication continuously through multi-factor streams (voice, gait, location, behavior patterns), automatically enrolling new devices into your ecosystem with proper security settings and managing permissions across all connected systems

Deep Understanding—Deep understanding of your full context and history as a person: your upbringing, your relationship with your parents and family, your education, your traumas (optional, of course), your journal, your goals, your aspirations, etc. All in service of better helping you become who you are trying to become

Sometimes the best way to tell is to show, so here are some examples of what it'll be like to use an AS3-level Assistant.

Protecting You and Your Loved Ones

- Your mom still hasn't left her bedroom three hours past her usual wake time. Your DA calls her and alerts the neighbor and emergency services with her location and medical history

- You're walking at night when your DA, monitoring 47 nearby cameras, notices concerning behavior ahead: "Take the next right, safer route, you'll still make it on time"
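The first scenario is essentially a trigger rule, the "Scheduled Tasks/Triggers" rung of the Proactivity dimension. Here is a minimal sketch, assuming a hypothetical sensor signal for the last time she was seen up and out of her bedroom and a fixed three-hour grace period; the escalation list simply mirrors the steps in the scenario.

```python
from datetime import datetime, timedelta

# Escalation steps taken from the scenario above, in order.
ESCALATION = [
    "call her",
    "alert the neighbor",
    "alert emergency services with her location and medical history",
]


def check_inactivity(last_seen_up: datetime, usual_wake: datetime,
                     now: datetime,
                     grace: timedelta = timedelta(hours=3)) -> list[str]:
    """Return escalation steps if she hasn't been seen up by usual_wake + grace.

    `last_seen_up` is a hypothetical signal: the most recent time sensors saw
    her up and out of the bedroom. Any sighting at or after today's wake time
    counts as normal activity, so no escalation is returned.
    """
    seen_since_wake = last_seen_up >= usual_wake
    overdue = now - usual_wake >= grace
    if overdue and not seen_since_wake:
        return list(ESCALATION)  # copy so callers can't mutate the template
    return []
```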

Outsourcing Research

- You mention wanting a new couch—Six minutes later your DA interrupts: "Found the perfect one, your roommate loves it, on sale tomorrow at 4am for $1,100 less." You: "Order it." Your DA: "Done. Delivery Thursday between 2-4pm, I've already cleared your calendar."

Detecting and Filtering Influence Campaigns

- Propaganda campaign targeting your 16-year-old son, and marketing campaign trying to get you to dislike a certain product—Your DA: "Heads up, there's a coordinated propaganda campaign targeting teens in your area. I've been filtering it from your son's feeds. Also detected astroturfing trying to tank Brand X's reputation. Want the analysis or just the cleaned feed?" You: "Just keep it clean." Your DA: "Done."

Freelance Work

- You do bug bounties on the side, and a new program just opened—While you're eating dinner, Kai messages you: "New program just launched. I'm doing recon right now and already found something juicy. Just submitted the report. Team's response time is fast, so you might hear back before dessert."

Monitoring Mental State and Energy

- You've been doing negative self-talk for the past hour, energy levels dropping—Your DA notices the pattern and adjusts your lighting to warmer tones, starts playing your "getting unstuck" playlist. Then: "Hey, I've noticed you're being pretty hard on yourself today. You've actually shipped three major features this week. Want to take a walk? I can reschedule your 3pm."

Routine Management and Optimization

- Your entire morning needs coordinating—Optimal wake time, coffee started, news queued, 9am meeting moved to 10am, vitamins ordered, bills paid, 127 spam emails dismissed. Fifteen minutes in, zero decisions made

Tactical vs. Strategic Goal Monitoring

- Quarterly review time—Your DA: "We shipped 47 features and closed $280K in consulting this quarter, but we're off track on your 2026 goals. Your Q1 target was launching the AI Security Fundamentals course and signing three enterprise partnerships. We're at 12% course completion and zero partnerships. Here's the fix: transition Acme Corp and TechStart to Ryan as referrals, block Tuesdays/Thursdays for course recording starting next week, and I'll schedule intro calls with the four target companies from your January strategy doc—Microsoft, Google, Anthropic, and OpenAI."

The power of AS3-level Assistants comes from their combination of continuous awareness, proactive action, and deep understanding of your goals and context.

This shifts the relationship from tool to partner—one that actively works to make you safer, healthier, more focused on what matters, and more effective at becoming who you want to be.

- There's nothing wrong with the various companies and builders stumbling randomly towards something that ends up looking like Digital Assistants. That's fun too. I just prefer knowing—at least roughly—where it's heading and where we are along the path.

- The idea that such a thing is predictable at all is based on my belief/conjecture that tech (and the future more generally) is not predictable, but human desires are, and that they're mostly stable over time. So if we know people consistently want more safety, more connection, more capability, etc., you can stochastically anticipate that this is what will get built and selected for. From there I start with what an ultimate form of that might look like, and work backwards.

- Combining that with how modern AI has progressed since late 2022, I see the rough evolution of personal AI as: Chatbots -> Agents -> Assistants. Chatbots are basic call-and-response, leaving all the work to the user. Agents are autonomous workers who can do tasks on their own. And Assistants are what we're actually building towards, i.e., competent companions that make us safer, healthier, happier, etc., and generally more effective at whatever we're trying to do in life and work.

- This model basically argues that 1) it is actually possible to know (roughly) where we are going, and that 2) it's actually useful to know this because it serves the purpose of Sensemaking. It gives order to the seemingly random, noisy tech developments along the way. And most importantly, for builders like us, it provides focus and direction on what to create next, and why.

I hope it's useful to you.
