文章指出被动互联网扫描数据在现代动态基础设施中存在局限性,因其静态快照特性无法及时反映快速变化的云环境和新部署服务,导致安全团队决策基于过时信息。主动持续侦察通过每日自动化检查,可实时跟踪DNS、证书和主机变化,及时发现新暴露服务和配置错误,帮助组织有效管理不断演变的攻击面。 2025-12-5 16:45:20 Author: www.bleepingcomputer.com(查看原文) 阅读量:4 收藏

AUTHOR: Topher Lyons, Solutions Engineer at Sprocket Security

The Limits of Passive Internet-Scan Data

Most organizations are familiar with the traditional approach to external visibility: rely on passive internet-scan data, subscription-based datasets, or occasional point-in-time reconnaissance to understand what they have facing the public internet. These sources are typically delivered as static snapshots of lists of assets, open ports, or exposures observed during a periodic scan cycle.

While useful for broad trend awareness, passive datasets are often misunderstood. Many security teams assume they provide a complete picture of everything attackers can see. But in today’s highly dynamic infrastructure, passive data ages quickly.

Cloud footprints shift by the day, development teams deploy new services continuously, and misconfigurations appear (and disappear) far faster than passive scans can keep up.

As a result, organizations relying solely on passive data often make decisions based on stale or incomplete information.

To maintain an accurate, defensive view of the external attack surface, teams need something different: continuous, automated, active reconnaissance that verifies what’s actually exposed every day.

Today’s Attack Surface: Fast-Moving, Fragmented, and Hard to Track

Attack surfaces used to be relatively static. A perimeter firewall, a few public-facing servers, and a DNS zone or two made discovery manageable. But modern infrastructure has changed everything.

- Cloud adoption has decentralized hosting, pushing assets across multiple providers and regions.

- Rapid deployment cycles introduce new services, containers, or endpoints.

- Asset sprawl grows quietly as teams experiment, test, or automate.

- Shadow IT emerges from marketing campaigns, SaaS tools, vendor-hosted environments, and unmanaged subdomains.

Even seemingly insignificant changes can create material exposure. A DNS record that points to the wrong host, an expired TLS certificate, or a forgotten dev instance can all introduce risk. And because these changes occur constantly, visibility that isn’t refreshed continuously will always fall out of sync with reality.

If the attack surface changes daily, then visibility must match that cadence.

Why Passive Data Fails Modern Security Teams

Stale Findings

Passive scan data becomes outdated quickly. An exposed service may disappear before a team even sees the report, while new exposures emerge that weren’t captured at all. This leads to a common cycle where security teams spend time chasing issues that no longer exist while missing the ones that matter today.

Context Gaps

Passive datasets tend to be shallow. They often lack:

- Ownership

- Attribution

- Root-cause detail

- Impact context

- Environmental awareness

Without context, teams can’t prioritize effectively. A minor informational issue may look identical to a severe exposure.

Missed Ephemeral Assets

Modern infrastructure is full of short-lived components. Temporary testing services, auto-scaled cloud nodes, and misconfigured trail environments might live for only minutes or hours. Because passive scans are periodic, these fleeting assets often never appear in the dataset, yet attackers routinely find and exploit them.

Duplicate or Irrelevant Artifacts

Passive data commonly includes leftover DNS records, reassigned IP space, or historical entries that no longer reflect the environment. Teams must manually separate false positives from real issues, increasing alert fatigue and wasting time.

Continuous Reconnaissance: What It Is (and Isn’t)

Automated, Active Daily Checks

Continuous visibility relies on recurring, controlled reconnaissance that automatically verifies external exposure. This includes:

- Detecting newly exposed services

- Tracking DNS, certificate, and hosting changes

- Identifying new reachable hosts

- Classifying new or unknown assets

- Validating current exposure and configuration state

This is not exploitation, or intrusive actions. It’s safe, automated enumeration built for defense.

Environment-Aware Discovery

As infrastructure shifts, continuous recon shifts with it. New cloud regions, new subdomains, or new testing environments naturally enter and exit the attack surface. Continuous visibility keeps pace automatically with no manual refresh required.

What Continuous Visibility Reveals (That Passive Data Can’t)

Newly Exposed Services

These exposures often appear suddenly and unintentionally:

- A forgotten staging server coming online

- A developer opening RDP or SSH for testing

- A newly created S3 bucket left public

Daily verification catches these before attackers do.

Misconfigurations Introduced During Deployments

Rapid deployments introduce subtle errors:

- Certificates misapplied or expired

- Default configurations restored

- Ports opened unexpectedly

Daily visibility surfaces them immediately.

Shadow IT and Rogue Assets

Not every externally exposed asset originates from engineering. Marketing microsites, vendor-hosted services, third-party landing pages, and unmanaged SaaS instances often fall outside traditional inventories, yet remain publicly reachable.

Real-Time Validation

Continuous recon ensures findings reflect today’s attack surface. This dramatically reduces wasted effort and improves decision-making.

Turning Reconnaissance into Decision Making

Prioritization Through Verification

When findings are validated and current, security teams can confidently determine which exposures pose the most immediate risk.

Triage Without Hunting Through Noise

Continuous recon removes stale, duplicated, or irrelevant findings before they ever reach an analyst’s queue.

Clear Ownership Paths

Accurate attribution helps teams route issues to the correct internal group, like engineering, cloud, networking, marketing, or a specific application team.

Reduced Alert Fatigue

Security teams stay focused on real, actionable issues rather than wading through thousands of unverified scan entries.

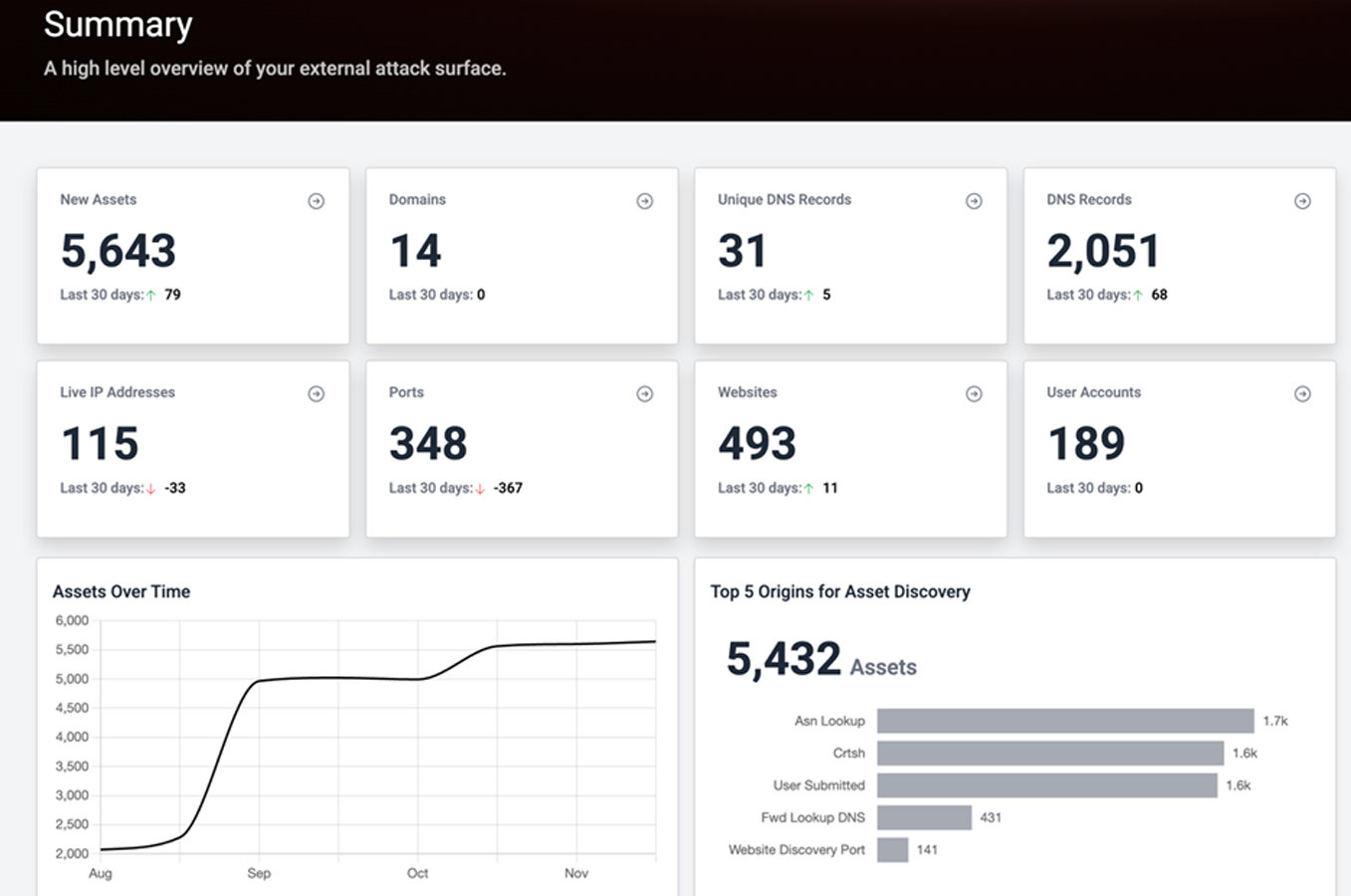

How Sprocket Security Approaches ASM

Daily Reconnaissance at Scale

Sprocket Security performs automated, continuous checks across your entire external footprint. Exposures are discovered and validated as they appear, whether they persist for hours or minutes.

Actionable Findings

Through our ASM framework, each finding is classified, verified, attributed, and prioritized. This ensures clarity, context, and impact without overwhelming volume.

Removing Guesswork from ASM

A validated, contextualized finding tells teams:

- What changed

- Why it matters

- How severe it is

- Who owns it

- What action to take

Compared to raw scan data, this eliminates ambiguity and reduces the time it takes to resolve issues.

Getting a Handle on Your Attack Surface

Here are some of the ways that organizations can ensure thorough monitoring of their attack surface:

- Maintain an accurate asset inventory.

- Implement continuous monitoring.

- Prioritize vulnerabilities based on risk.

- Automate where possible.

- Regularly update and patch systems.

For a deeper dive into improving you attack surface know-how see our full blog on Attack Surface Monitoring: Core Functions, Challenges, and Best Practices.

Modern Security Demands Continuous Visibility

Today’s attack surfaces evolve constantly. Static, passive datasets simply cannot keep up. To stay ahead of emerging exposures and prevent easily avoidable incidents, security teams need continuous, automated reconnaissance that reflects the real state of their environment.

Relying solely on passive data creates blind spots. Continuous visibility closes them. As organizations modernize their infrastructure and accelerate deployment cycles, continuous reconnaissance becomes the foundation of attack surface hygiene, prioritization, and real-world risk reduction.

Sponsored and written by Sprocket Security.

如有侵权请联系:admin#unsafe.sh