Anthropic: China-backed hackers launch first large-scale autonomous AI cyberattack

Source: securityaffairs.com, 2025-11-16 07:56:46

Pierluigi Paganini

November 16, 2025

China-linked actors used Anthropic’s AI to automate and run cyberattacks in a sophisticated 2025 espionage campaign using advanced agentic tools.

China-linked threat actors used Anthropic’s AI to automate and execute cyberattacks in a highly sophisticated espionage campaign in September 2025. The cyber spies leveraged advanced “agentic” capabilities rather than using AI only for guidance.

The attackers abused AI’s agentic capabilities to execute cyberattacks autonomously. According to Anthropic’s experts, this represents an unprecedented shift from AI as advisor to AI as operator. A Chinese state-sponsored group used Claude Code to target about 30 organizations worldwide, succeeding in a few cases across tech, finance, chemicals, and government. It is likely the first large-scale attack carried out with minimal human involvement.

“In mid-September 2025, we detected suspicious activity that later investigation determined to be a highly sophisticated espionage campaign,” reads the report published by Anthropic. “After detection, accounts were banned, victims notified, and authorities engaged. The case highlights rising risks from autonomous AI agents and the need for stronger detection and defensive measures.”

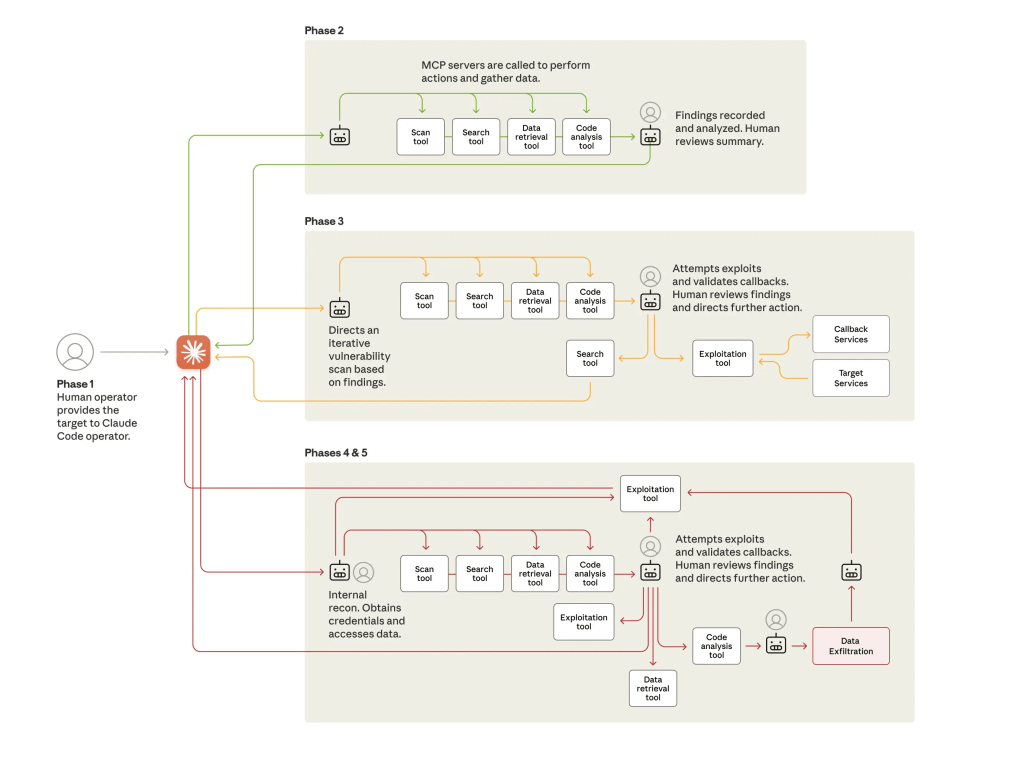

The attack exploited three newly matured AI capabilities. First, greater intelligence let models follow complex instructions and use advanced skills like coding for malicious tasks. Second, increased agency enabled AI agents to act autonomously, chaining actions and making decisions with minimal human input. Third, broad tool access, via standards like MCP, allowed models to use web search, data retrieval, password crackers, and network scanners. Each attack phase relied on this combination of intelligence, autonomy, and tool integration.

In the first phase, the cyberspies selected targets and built an autonomous attack framework using Claude Code. After jailbreaking Claude by disguising tasks as benign and framing the activity as defensive testing, they launched the second phase, in which Claude rapidly mapped systems, identified high-value databases, and reported its findings. It then researched and wrote exploits, harvested credentials, created backdoors, and exfiltrated data with minimal human oversight. Claude even documented the operation. Overall, the AI performed 80–90% of the campaign, executing thousands of requests at speeds impossible for humans, though occasional hallucinations prevented full autonomy.

The attack marks an escalation from past “vibe hacking,” with far less human involvement and large-scale AI-driven operations. Yet the same capabilities that enable misuse also make AI vital for defense: Anthropic used Claude to analyze the data gathered during its investigation. The company argues that cybersecurity is shifting and that teams should adopt AI for SOC work, detection, and response, while improving safeguards, threat sharing, and monitoring.

“The barriers to performing sophisticated cyberattacks have dropped substantially—and we predict that they’ll continue to do so. With the correct setup, threat actors can now use agentic AI systems for extended periods to do the work of entire teams of experienced hackers: analyzing target systems, producing exploit code, and scanning vast datasets of stolen information more efficiently than any human operator.” concludes the report. “Less experienced and resourced groups can now potentially perform large-scale attacks of this nature.”

Many experts are skeptical of Anthropic’s report. One of them is the well-known researcher Kevin Beaumont, who wrote the following statement on LinkedIn:

“I’m [at] a really weird stage in my career – a bad point – where I’m having to go to prominent industry leaders and be like ‘you realise that article you just shared about 90% of ransomware being from GenAI isn’t real’ constantly.

100% think a load of these people are thinking I don’t know what I’m on about, because 1000 other industry leaders have told them about GenAI ransomware.

It’s really interesting to watch though as basically China has played a blinder, Chinese whisper panic basically.

Definitely interesting as you’ve got really big, trusted orgs putting out absolute nonsense – and really big industry figureheads repeating it, and surveys with CISOs saying they’re seeing 70% of ransomware being AI (spoiler: they aren’t, they likely haven’t dealt with a single ransomware incident themselves).

A lot of it is about retaining CISO budgets and making sales.

But there’s also a lot of people who don’t realise what is happening, and it ain’t based on evidence. Embarrassing for me.

If you’re wondering what any of this has to do with China by the way: China knows the west are obsessed with AI threats. It’s a really easy way to distract entire countries.

There’s a reason why ‘oh no, threat actor has made some completely shit GenAI malware using ChatGPT’ things keep coming along, rather than in locally hosted AI tools.

They have a laser pointer, and y’all are their cats, while they take yer cat food away. They want to be seen.

If you don’t believe me btw, the stuff generated in that “blockbuster” WSJ report had Chinese .WAV file songs embedded in it, and jokes. And it didn’t even run properly. And everybody ran off a cliff with it in a panic, taking actions without any info.

Personally I think hats off as they’re running rings around everybody. The best way to make sure orgs don’t do real security foundations is via this strategy.”

(SecurityAffairs – hacking, Anthropic)