2025-11-13 · Author: www.tbray.org

There was recently a flurry of attention and dismay over Sarah Kendzior having been suspended from Bluesky by its moderation system. Since the state of the art in trust and safety is evolving fast, this is worth a closer look. In particular, Mastodon has a really different approach, so let’s see how the Kendzior drama would have played out there.

Disclosures · I’m a fan of Ms Kendzior; for example, check out her recent When I Loved New York: fine writing and incisive politics. I like the Bluesky experience and have warm feelings toward the team there, although my long-term social media bet is on Mastodon.

Back story · Back in early October, the Wall Street Journal published It’s Finally Time to Give Johnny Cash His Due, an appreciation of Johnny’s music that I totally agreed with. In particular I liked its praise for American IV: The Man Comes Around, which, recorded while he was more or less on his deathbed, is a masterpiece. It also said that, relative to other rockers, Johnny “can seem deeply uncool”.

Ms Kendzior, who is apparently also a Cash fan and furthermore thinks he’s cool, posted to Bluesky: “I want to shoot the author of this article just to watch him die.” Which is pretty funny, because one of Johnny’s most famous lines, from Folsom Prison Blues, is “I shot a man in Reno just to watch him die.” (Just so you know: In 1968 Johnny performed the song at a benefit concert for the prisoners at Folsom, and on the live record, which is good, there is a burst of applause from the audience after the “shot a man” lyric. It was apparently added in postproduction.)

Subsequently, per the Bluesky Safety account: “The account owner of @sarahkendzior.bsky.social was suspended for 72 hours for expressing a desire to shoot the author of an article.”

There was an outburst of fury on Bluesky about the sudden vanishing of Ms Kendzior’s account, and the explanation quoted above didn’t seem to reduce the heat much. Since I know nothing about the mechanisms used by Bluesky Safety, I’m not going to dive any deeper into the Bluesky story.

On Mastodon · I do know quite a bit about Mastodon’s trust-and-safety mechanisms, having been a moderator on CoSocial.ca for a couple of years now. So I’m going to walk through how the same story might have unfolded on Mastodon, assuming Ms Kendzior had made the same post about the WSJ article. There are a bunch of forks in this story’s path, where it might have gone one way or another depending on the humans involved.

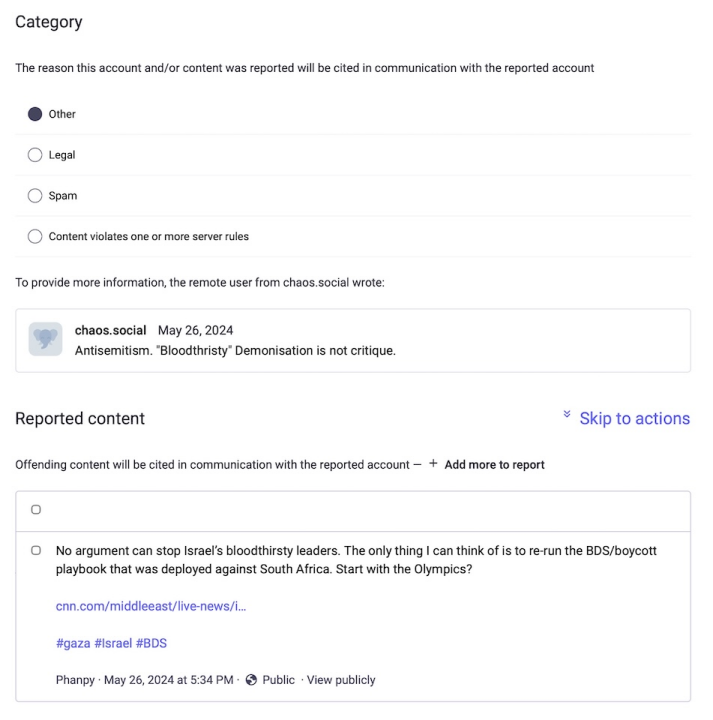

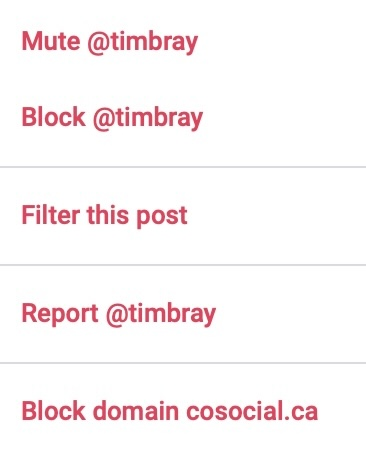

Reporting · The Mastodon process is very much human-driven. Anyone who saw Ms Kendzior’s post could pull up the per-post menu and hit the “Report” button. I’ve put a sample of what that looks like on the right, assuming someone wanted to report yours truly.

By the way, there are many independent Mastodon clients; some of them have “Report” screens that are way cooler than this. I use Phanpy, which has a hilarious little animation of a rubber stamp that leaves a red-ink “Spam” or whatever on the post you’re reporting.

We’ll get into what happens with reports, but here’s the first fork in the road: Would the Kendzior post have been reported? I think three categories of people are interesting here. First, Kendzior fans who are hip to Johnny Cash, get the reference, snicker, and move on. Second, followers who think “ouch, that could be misinterpreted”; they might throw a comment onto the post or just maybe report it. Third, Reply Guys who’ll jump at any chance to take a vocal woman down; they’d gleefully report her en masse. There’s no way to predict what would have happened, but it wouldn’t be surprising if there were reports from both the worried followers and the Reply Guys, or from only one of those groups, or none at all.

Moderating · When you file a report, it goes both to the moderators on your own instance and to those on the instance where the reported person has their account. I dug up a 2024 report someone filed against me to give you a feel for what the moderator experience is like.

I think it’s reasonably self-explanatory. Note that the account that filed the report is not identified, but that the server it came from is.

A lot of reports are just handled quickly by a single moderator and don’t take much thought: Bitcoin scammer or Bill Gates impersonator or someone with a swastika in their profile? Serious report, treated seriously.

Others require some work. In the moderation screen, just below the part on display above, there’s space for moderators to discuss what to do. (In this particular case they decided that criticism of political leadership wasn’t “antisemitism” and resolved the report with no action.)

In the Kendzior case, what might the moderators have done? The answer, as usual, is “it depends”. If there were just one or two reports and they leaned on terminology like “bitch” and “woke”, quite possibly they would have been dismissed.

If one or more reports were a heartfelt expression of revulsion or trauma at what seemed to be a hideous death threat, the moderators might well have decided to take action. The same goes for reports from people who got the reference and snickered, but then decided there really should have been a “just kidding” addendum.

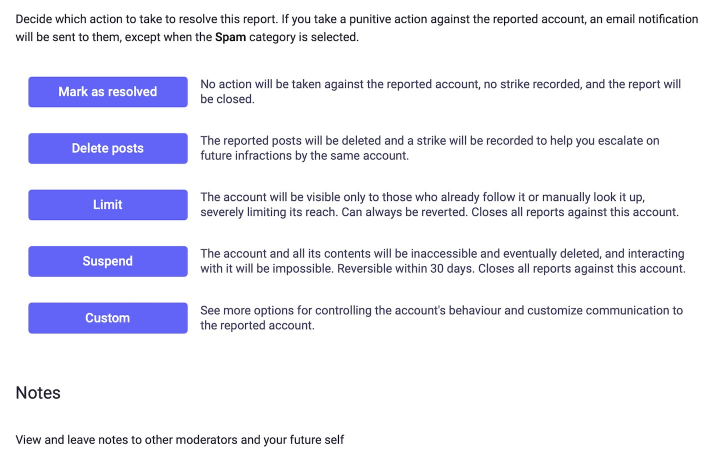

Action · Here are the actions a moderator can take.

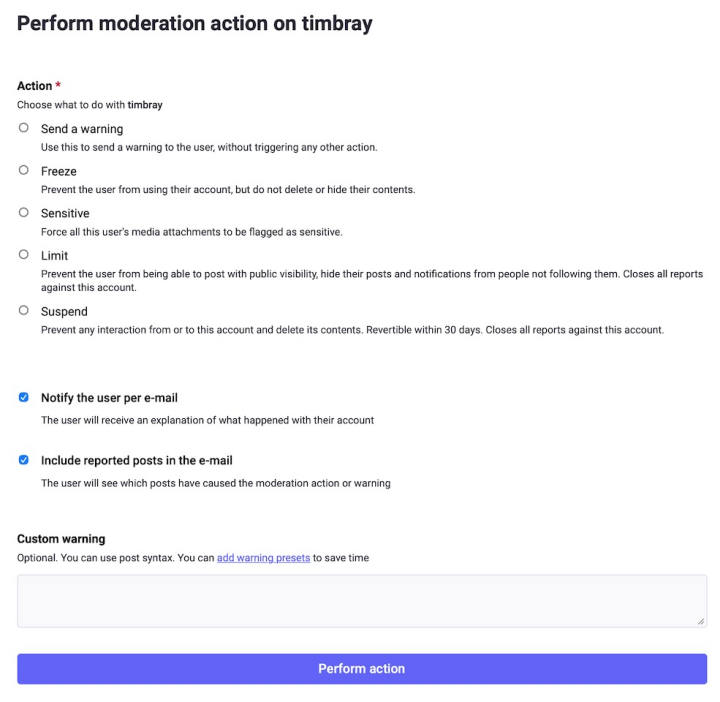

If you select “Custom”, you get this:

Once again, I think these are self-explanatory. Before taking up the question of what might happen in the Kendzior case, I should grant that moderators are just people, and sometimes they’re the wrong people. There have been servers with a reputation for draconian moderation on posts that are even moderately controversial. They typically haven’t done very well in terms of attracting and retaining members.

OK, what might happen in the Kendzior case? I’m pretty sure there are servers out there where the post would just have been deleted. But my bet is on that “Send a warning” option. The warning might go something like: “That post of yours really shook up some people who didn’t get the Folsom Prison Blues reference, and you should update it somehow to make it clear you’re not serious.”

Typically, people who get that kind of moderation message take it seriously. If not, the moderator can just delete the post. And if the person makes it clear they’re not going to co-operate, that creates a serious risk: If you let them go on shaking people up, your server could get mass-defederated, which is the death penalty. So, after some discussion, the moderators would delete the account. Everyone has the right to free speech, but nobody has a right to an audience courtesy of our server.

Bottom line · It is very, very unlikely that in the Mastodon universe, Sarah Kendzior’s account would suddenly have globally vanished. It is quite likely that the shot-a-man post would have been edited appropriately, and possible that it would have just vanished.

Will it scale? · I think the possible outcomes I suggested above are, well, OK. I think the process I’ve described is also OK. The question is whether this will hold together as the Fediverse grows by orders of magnitude.

I think so? People are working hard on moderation tools. I think this could be an area where AI would help, by highlighting possible problems for moderators in the same way that it highlights spots-to-look-at today for radiologists. We’ll see.

There are also a couple of background realities that we should be paying more attention to. First, bad actors tend to cluster on bad servers, simply because non-bad servers take moderation seriously. The defederation scalpel needs to be kept sharp and kept nearby.

Second, I’m pretty convinced that the open-enrollment policy many servers currently use, where anyone can have an account just by asking for it, will eventually have to be phased out. Even a tiny barrier to entry (a few words on why you want to join or, even better, a small payment) is going to reduce the frequency of troublemakers to an amazing degree.

Take-aways · Well, now you know how moderation works in the Fediverse. You’ll have to make up your own mind about whether you like it.
