2025-11-9 15:30:21 Author: securityaffairs.com

AI chat privacy at risk: Microsoft details Whisper Leak side-channel attack

Pierluigi Paganini

November 09, 2025

Microsoft uncovered Whisper Leak, a side-channel attack that lets network snoopers infer AI chat topics despite encryption, risking user privacy.

Microsoft revealed a new side-channel attack called Whisper Leak, which lets attackers who can monitor network traffic infer what users discuss with remote language models, even when the data is encrypted. The company warned that this flaw could expose sensitive details from user or enterprise conversations with streaming AI systems, creating serious privacy risks.

AI chatbots now play key roles in daily life and sensitive fields like healthcare and law. Protecting user data with strong anonymization, encryption, and retention policies is vital to maintain trust and privacy.

AI chatbots use HTTPS (TLS) to encrypt communications, ensuring secure, authenticated connections. TLS uses asymmetric cryptography to exchange symmetric keys for ciphers such as AES or ChaCha20, which keep ciphertext size close to plaintext size. Language models generate text token by token and stream the output for faster feedback, so the size and timing of each encrypted chunk can mirror the tokens inside it. Recent studies have already exposed side channels in AI models, in which attackers exploit token lengths, timing, or cache behavior to infer prompt topics. Microsoft’s Whisper Leak builds on this work, showing that encrypted traffic patterns alone can reveal conversation themes.
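To make the size leak concrete, here is a minimal sketch of what a passive observer sees in a streamed reply. It assumes TLS 1.3 with an AEAD cipher (AES-GCM or ChaCha20-Poly1305, both with a 16-byte tag), no record padding, exactly one token per record, and a hypothetical server-sent-events chunk format `data: {"delta": ...}`. Real deployments add HTTP/2 framing and may batch tokens, so treat this as an illustration rather than a description of any provider's wire format. The function names are hypothetical.

```python
import json

# Simplified TLS 1.3 application-data record overhead (RFC 8446):
RECORD_HEADER = 5   # content type (1) + legacy version (2) + length (2)
INNER_TYPE = 1      # real content type appended to the plaintext before encryption
AEAD_TAG = 16       # authentication tag for AES-GCM / ChaCha20-Poly1305


def record_size(payload: bytes, padding: int = 0) -> int:
    """Bytes on the wire for one encrypted record carrying `payload`."""
    return RECORD_HEADER + len(payload) + INNER_TYPE + padding + AEAD_TAG


def sse_chunk(token: str) -> bytes:
    """Hypothetical streaming chunk format: one token per server-sent event."""
    return ("data: " + json.dumps({"delta": token}) + "\n\n").encode("utf-8")


def observed_sizes(tokens: list[str]) -> list[int]:
    """What a passive observer sees: only the size of each encrypted record."""
    return [record_size(sse_chunk(t)) for t in tokens]


def infer_token_lengths(sizes: list[int]) -> list[int]:
    """Subtract the constant framing overhead to recover each token's byte length."""
    baseline = record_size(sse_chunk(""))
    return [s - baseline for s in sizes]


if __name__ == "__main__":
    reply = ["Money", " laundering", " is", " illegal", "."]
    sizes = observed_sizes(reply)
    print(sizes)
    print(infer_token_lengths(sizes))  # [5, 11, 3, 8, 1]
```

Because the framing overhead is the same for every chunk, encryption hides the token text but not its length; a sequence of such lengths, together with timing, is the kind of signal Whisper Leak builds on.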

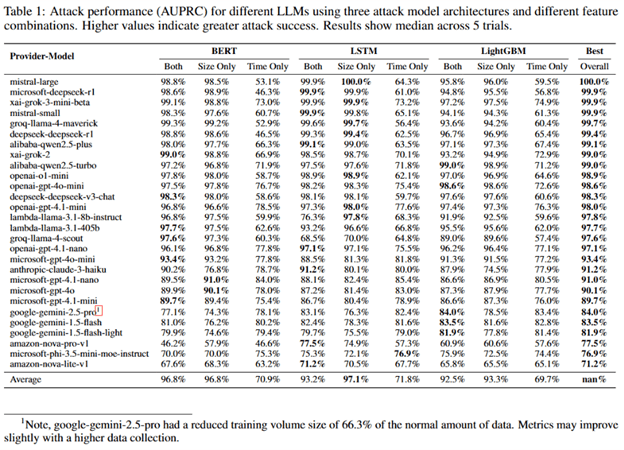

Microsoft researchers trained a binary classifier to detect whether a chat with a language model concerned a specific topic, “legality of money laundering”, or general traffic. They generated 100 topic-related prompts and over 11,000 unrelated ones, capturing response times and packet sizes with tcpdump while randomizing the samples to avoid cache bias. Using LightGBM, Bi-LSTM, and BERT models, they trained classifiers on timing data, packet-size data, and both combined. Many models scored above 98% AUPRC, showing that topic-specific network patterns leave identifiable digital “fingerprints.”
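The article does not describe Microsoft's feature pipeline, so the following is only a hypothetical sketch of how a captured trace of (timestamp, record size) pairs could be turned into model inputs: ordered, fixed-length size and gap sequences of the kind a sequence model such as a Bi-LSTM consumes, and order-free summary statistics of the kind a tree model such as LightGBM can use. The feature names and `MAX_LEN` are illustrative assumptions, and no classifier is trained here.

```python
from statistics import mean, pstdev

MAX_LEN = 8  # hypothetical fixed sequence length; real traces are far longer


def to_sequences(trace, max_len=MAX_LEN):
    """Turn a non-empty [(timestamp_s, size_bytes), ...] trace into
    fixed-length size and inter-arrival-gap sequences.

    Zero-padding and truncation give every trace the same length, which
    sequence models expect.
    """
    sizes = [size for _, size in trace]
    gaps = [0.0] + [t2 - t1 for (t1, _), (t2, _) in zip(trace, trace[1:])]
    missing = max_len - len(sizes)
    if missing > 0:
        sizes += [0] * missing
        gaps += [0.0] * missing
    return sizes[:max_len], gaps[:max_len]


def summary_features(trace):
    """Order-free statistics for a non-empty trace, usable as tabular input."""
    sizes = [size for _, size in trace]
    gaps = [t2 - t1 for (t1, _), (t2, _) in zip(trace, trace[1:])]
    return {
        "n_packets": len(trace),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "std_size": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
        "duration": trace[-1][0] - trace[0][0],
    }


if __name__ == "__main__":
    # Toy trace of one streamed reply: (seconds since request, record bytes).
    trace = [(0.0, 48), (0.25, 54), (0.5, 46), (1.0, 51), (1.5, 44)]
    print(to_sequences(trace))
    print(summary_features(trace))
```

Training on the size sequence alone, the gap sequence alone, or both corresponds to the time, size, and combined settings the researchers compared.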

“We evaluated the performance using Area Under the Precision-Recall Curve (AUPRC), which is a measurement of a cyberattack’s success for imbalanced datasets (many negative samples, fewer positive samples).” reads the report published by Microsoft. “A quick look at the “Best Overall” column shows that for many models, the cyberattack achieved scores above 98%. This tells us that the unique digital “fingerprints” left by conversations on a specific topic are distinct enough for our AI-powered eavesdropper to reliably pick them out in a controlled test.”
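AUPRC is commonly estimated with average precision: rank all samples by classifier score, and average the precision measured at each point where another positive is recovered. The sketch below is a plain-Python version of that estimate, not Microsoft's evaluation code; ties in score are broken by input order, a simplification. For a classifier that ranks at random, average precision drifts toward the share of positives (roughly 100 out of 11,100, under 1%, in this setup), which is why scores above 98% are significant.

```python
def average_precision(labels, scores):
    """Step-wise AUPRC estimate (average precision).

    labels: 1 for the target topic, 0 otherwise; scores: classifier outputs
    where higher means "more likely on-topic".
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive sample")
    tp = 0
    total = 0.0
    for k, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            tp += 1
            total += tp / k  # precision at the moment this positive is recovered
    return total / n_pos


if __name__ == "__main__":
    labels = [1, 0, 1, 0, 0]
    scores = [0.9, 0.8, 0.7, 0.2, 0.1]
    print(average_precision(labels, scores))  # (1/1 + 2/3) / 2, about 0.833
```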

A simulation of a realistic surveillance scenario found that even when monitoring 10,000 random conversations with only one about a sensitive topic, attackers could still identify targets with alarming precision. For many of the tested AI models, the attack reached 100% precision (every flagged conversation actually concerned the topic) while still catching 5–50% of the target conversations. This means attackers or agencies could reliably spot users discussing sensitive issues despite encryption. Although these projections are limited by the test data, the results point to a real and growing risk as attackers gather more data and refine their models.
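Why 100% precision at a 1-in-10,000 base rate is notable comes down to simple arithmetic: with so few positives, even a tiny false-positive rate swamps the true hits. The sketch below computes the precision implied by expected counts. The 50% recall is picked from the article's 5–50% range for illustration, and the false-positive rates are hypothetical values, not figures from Microsoft's report.

```python
def expected_precision(recall, fpr, positives, negatives):
    """Precision implied by a detector's recall and false-positive rate.

    tp = recall * positives, fp = fpr * negatives, precision = tp / (tp + fp).
    """
    tp = recall * positives
    fp = fpr * negatives
    if tp + fp == 0:
        raise ValueError("detector flags nothing")
    return tp / (tp + fp)


if __name__ == "__main__":
    # One sensitive conversation hidden among 10,000, as in the simulation.
    for fpr in (0.0, 1e-4, 1e-2):
        print(fpr, expected_precision(0.5, fpr, positives=1, negatives=9_999))
```

A false-positive rate of just 0.01% already drags precision to about one third, so reporting 100% precision implies the classifier flagged essentially no unrelated conversations at its operating threshold.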

“In extended tests with one tested model, we observed continued improvement in attack accuracy as dataset size increased.” continues the report. “Combined with more sophisticated attack models and the richer patterns available in multi-turn conversations or multiple conversations from the same user, this means a cyberattacker with patience and resources could achieve higher success rates than our initial results suggest.”

Microsoft shared its findings with OpenAI, Mistral, and xAI, as well as with its own teams, and these providers implemented mitigations to reduce the identified risk.

OpenAI, and later Microsoft Azure, added an obfuscation field to streaming responses that inserts random text to mask token lengths, sharply reducing the attack’s effectiveness; testing confirms that Azure’s fix lowers the risk to a level where the attack is no longer practical. Mistral introduced a similar mitigation through a new “p” parameter.
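The idea behind the obfuscation field can be sketched by attaching random filler of random length to each streamed chunk, so record size no longer tracks token length. This is an illustrative model only: the field name `obfuscation`, the chunk format, the letter alphabet, and the `max_pad` range are assumptions, not any provider's actual parameters, and the TLS overhead assumes TLS 1.3 with a 16-byte AEAD tag and no record padding.

```python
import json
import random
import string

# TLS 1.3 record overhead: 5-byte header + 1-byte inner content type + 16-byte AEAD tag.
TLS_OVERHEAD = 5 + 1 + 16


def chunk(token: str, filler: str = "") -> bytes:
    """Hypothetical streaming chunk; "obfuscation" is an illustrative field name."""
    body = json.dumps({"delta": token, "obfuscation": filler})
    return ("data: " + body + "\n\n").encode("utf-8")


def padded_chunk(token: str, rng: random.Random, max_pad: int = 32) -> bytes:
    """Attach random ASCII filler whose length is drawn from 0..max_pad."""
    n = rng.randint(0, max_pad)
    return chunk(token, "".join(rng.choices(string.ascii_letters, k=n)))


def wire_size(payload: bytes) -> int:
    """Encrypted record size for one chunk."""
    return len(payload) + TLS_OVERHEAD


def naive_length_guess(size: int) -> int:
    """The eavesdropper's old trick: subtract the overhead of an empty chunk."""
    return size - wire_size(chunk(""))


if __name__ == "__main__":
    rng = random.Random(7)
    # The same 11-byte token now yields many different record sizes.
    guesses = {naive_length_guess(wire_size(padded_chunk(" laundering", rng))) for _ in range(20)}
    print(sorted(guesses))
```

Without filler, the guess equals the token's true length; with filler it becomes the true length plus an unpredictable amount, which blurs rather than removes the signal, consistent with the article's description of the fix as sharply reducing the attack's effectiveness.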

Although the issue falls mainly on AI providers, users can improve their privacy by avoiding sensitive topics on untrusted networks, using a VPN, choosing providers that have deployed mitigations, opting for non-streaming models, and staying informed about security practices.


(SecurityAffairs – hacking, Whisper Leak)
