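LASCON XV explored key application security topics, including Identity Threat Detection and Response (ITDR), risk-based vulnerability management, the impact of AI tooling, and the importance of context in security. The conference stressed the need to focus on production environments, make asset ownership explicit, and bring in business context to meet cybersecurity challenges. 2025-10-29 15:00:08 Author: securityboulevard.com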

In Austin, you’ll find the Cathedral of Junk, a handmade structure built by one local out of bicycle frames, bottles, air‑conditioning vents, and assorted scrap pieces. It's all stacked into a chaotic, yet surprisingly coherent whole. It seems a fitting metaphor for where application security often finds itself, wading through piles of signals, parts, and artifacts until a pattern emerges. This landmark made an apt backdrop for LASCON XV, where the OWASP community came together to talk about how we defend our systems by getting enough context.

This year's event saw 450 attendees come together at the Norris Center for two full days of training, two days of sessions, a CTF, and many, many meaningful conversations. In total, 52 speakers brought their unique perspectives to the stage in Austin. While there were a lot of themes covered, and many AI-focused talks, at the heart of so many of the conversations was the urgency to connect signals to business context and alerts to human judgments that let security teams make sense of the sprawling challenges of cybersecurity.

Here are just a few highlights from this fifteenth edition of the Lonestar Application Security Conference.

Moving Beyond Endpoints

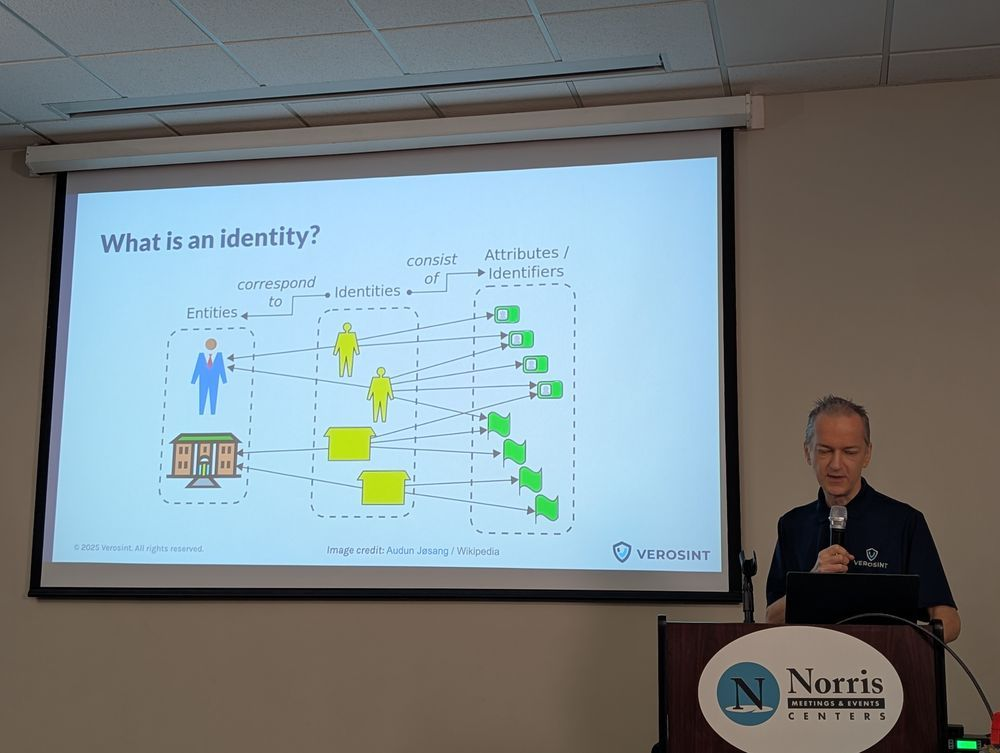

In the session “Improving Account Security with ITDR,” Bertold Kolics, Principal Quality Engineer at Imprivata, told us that credential theft, session sharing, and account takeover are first‑order threats. In his view, identity is now the perimeter. This has led to the emergence of Identity Threat Detection and Response (ITDR) to detect anomalous login behavior, session sharing, impossible travel, and non‑human identity (NHI) usage.

He walked through identity profiles that cover both human and non‑human actors, differences in behavior by identity type, and contextual indicators such as geo‑location, time‑of‑day, device type, ISP/VPN usage, and browser fingerprinting. The attack surface has shifted, and adversaries are now far more likely to log in with stolen credentials than to breach a network using old playbooks. Challenges include the scale of automated traffic, outdated operating systems still in use, and look‑alike domains (e.g., Outlokk[.]com).
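To make one of those indicators concrete, here is a minimal sketch of an impossible travel check. It is not from the talk: the `Login` record, the 900 km/h speed ceiling, and the 50 km tolerance for near-simultaneous logins are all assumptions chosen for illustration, and real geo‑IP data is far noisier than two clean coordinates.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
# Assumed ceiling: roughly the cruising speed of a commercial jet.
MAX_PLAUSIBLE_SPEED_KMH = 900.0
# Assumed tolerance for geo-IP jitter when two logins share a timestamp.
SAME_TIME_TOLERANCE_KM = 50.0


@dataclass
class Login:
    """Hypothetical login record; field names are illustrative."""
    user: str
    when: datetime
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlambda = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev: Login, curr: Login) -> bool:
    """True if getting from prev to curr would need an implausible speed."""
    hours = (curr.when - prev.when).total_seconds() / 3600
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if hours <= 0:
        # Simultaneous or out-of-order events: flag only a meaningful distance.
        return distance > SAME_TIME_TOLERANCE_KM
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH
```

A login from Austin followed an hour later by one from London would be flagged, while the same pair a day apart would not. VPN exits and mobile carriers can trip a check like this on their own, which is one reason it works better as one input among several than as a standalone verdict.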

Bertold said we need to build risk‑scoring frameworks based on identity events, such as account creation, login failures, session sharing, and MFA fatigue. Anomaly detection is good, but it is better to deploy prevention flows upstream so that risky activity is stopped before it needs to be detected. He also urged us to deploy honeypots and canary accounts as early warnings.
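As a rough illustration of event‑based risk scoring, the sketch below adds up weights per identity and surfaces the ones that cross a threshold. The event names, weights, and threshold are hypothetical placeholders rather than anything presented in the session; a real framework would tune them against incident data and decay scores over time.

```python
from collections import defaultdict

# Hypothetical weights per identity event type; tune against real incidents.
EVENT_WEIGHTS = {
    "account_created": 5,
    "login_failure": 10,
    "session_sharing": 30,
    "mfa_fatigue": 40,
    "canary_account_login": 100,  # any use of a decoy account is suspicious
}
REVIEW_THRESHOLD = 50  # assumed cut-off for escalating to a human


def score_identities(events):
    """Sum event weights per identity.

    `events` is an iterable of (identity, event_type) pairs.
    Unknown event types contribute nothing to the score.
    """
    scores = defaultdict(int)
    for identity, event_type in events:
        scores[identity] += EVENT_WEIGHTS.get(event_type, 0)
    return dict(scores)


def identities_to_review(events):
    """Return (identity, score) pairs at or above the threshold, highest first."""
    scores = score_identities(events)
    flagged = [(ident, s) for ident, s in scores.items() if s >= REVIEW_THRESHOLD]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)
```

With these assumed weights, a single login to a canary account outranks everything else, which matches the early‑warning role of a decoy: nobody has a legitimate reason to use it.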

Shifting Left But With Context

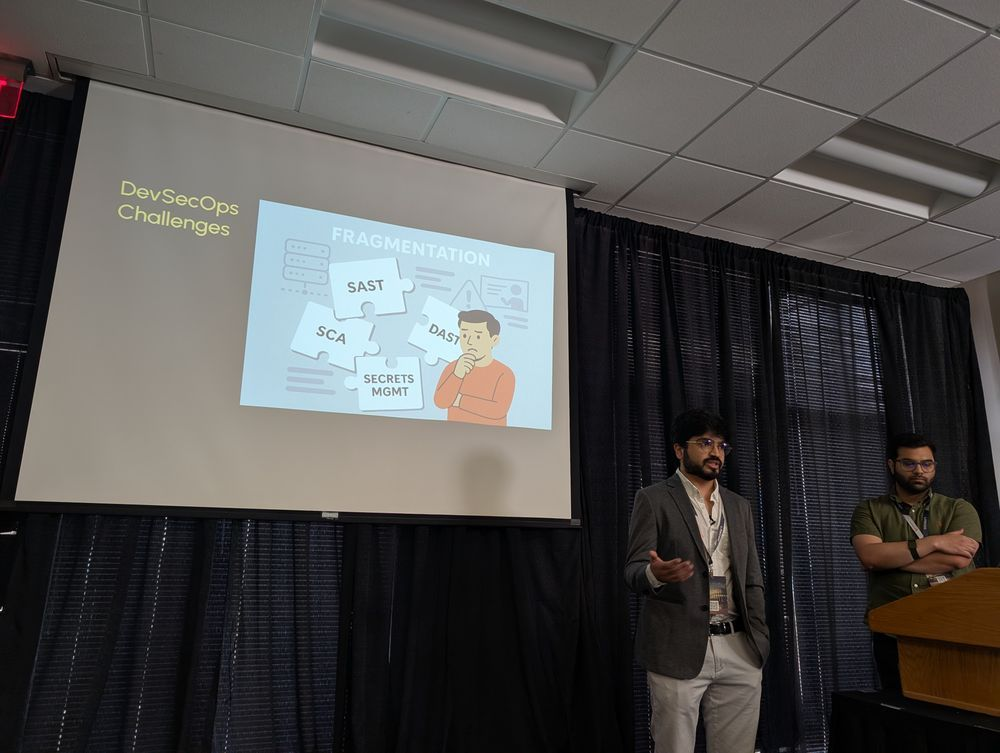

In “DevSecOps as a Launchpad,” Milind Daftari, Cybersecurity Engineer, and Akash Rajeev Bhatia, Governance, Risk and Compliance, both at VISA, focused on how development workflows are changing under pressure from regulation and AI tooling. They began with "shifting left," noting that it has not, on its own, solved security issues. Instead, they argued, the emphasis must be on embedded automation, shared responsibility, and reducing false positives to maintain developer trust.

The talk covered SAST, DAST, and SCA scanning, as well as secrets management, all framed in terms of developer experience. Context and feedback loops are key for any security tooling to work, they explained. They described a “false positive crisis,” in which security tools that produce too many alerts with too little remediation guidance lead dev teams to turn them off or ignore them. They pointed out that regulation, including the EU AI Act and India's Digital Personal Data Protection Act, adds requirements for auditability, traceability, and resilience to the DevSecOps pipeline.

The duo also had a section about “Model Context Protocol” (MCP) and how they are seeing it integrate with code scanners and orchestration layers. They stressed that MCP is not AI; it is the infrastructure around it. In their view, security must help design the pipelines, connect the tooling, and govern the automation so that developer speed and security posture do not pull in opposite directions.

Risk‑Based Vulnerability Management

Mauve Hed, Sr. Manager, Security Engineering & Operations at Bazaarvoice, and Francesco Cipollone, CEO & Co-Founder of Phoenix Security, led a session called “Navigating the Challenges of Risk‑Based Vulnerability Management in a Cloud‑Native World.” They introduced the concept of Continuous Threat Exposure Management (CTEM) as the evolved form of vulnerability management, in which asset ownership, intelligent attribution, contextual correlation, and code‑to‑cloud visibility are core. Remediation is the metric that matters, not mere scanning counts.

They emphasized that you must move from “how many issues do we have” to “how many risks do we mitigate” and that the only way to get there is by enriching vulnerability data with business context and owning the remediation pipeline end‑to‑end. For security operations teams, they said, this means accepting that scanning alone is insufficient. We must act on what is reachable, exploitable, and connected to mission-critical data.

Navigating AI's Automation Paradox

In “AI, AppSec and You: A Practitioner’s Diary,” Matt Tesauro, CTO at DefectDojo, explored how AI tooling is entering the AppSec and DevSecOps domain. He explained how he has seen it both empower and complicate security’s role. Tooling that uses generative AI and agents is currently brittle and requires human governance; otherwise, there is a good chance it will amplify risk.

Matt offered cautionary tales of “vibe coding” by developers who lean on AI without proper context. He explained that “sloponomics” is a term that has emerged to describe the dilution of quality as AI‑generated code floods pipelines. He flagged the “lethal trifecta” of risk when AI systems gain access to untrusted sources, legitimate secrets, and the ability to communicate externally. Prompt injection is a feature, not a bug.

He argued that probabilistic LLMs do not easily slot into existing deterministic security assessment models, and that we must design for failure. The future will be shaped by how AI tools are integrated and governed. There is a lot of promise in AI tools, he thinks, but the dangers are also very real.

Production context, not castle theory

Throughout the whole event, speakers pushed hard on a production-first model for AppSec. Sensors, telemetry, and a knowledge graph should map infrastructure to assets to the business context. The goal must be to surface the small slice of issues that are both reachable and exploitable, not drown in theoretical lists. You cannot fix everything, so prioritize what runs, what attackers touch, and what connects to sensitive assets.

Identity is the front line

Identity is the new perimeter for people and non-human identities. ITDR, or Identity Threat Detection and Response, earned the spotlight with anomaly scoring, session analysis, impossible travel checks, and honeypots like canary accounts. Criminals often log in rather than break in. Detection speed matters, and ownership plus reachability must be explicit so risky logins are contained without breaking legitimate users.

Context beats noise

Alert fatigue and dashboard sprawl are symptoms of missing context. We need to lean into data-driven prioritization that leverages signals like EPSS and the Known Exploited Vulnerabilities (KEV) catalog. Automation helps, but only after you deduplicate, correlate, and tie every risk to a clear owner and control. Metrics have to tell a business story with baselines, plain language, and evidence of improvement. Otherwise, people will invent their own narrative when they see your charts.
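The order of operations described here (deduplicate, correlate, then rank with exploit signals and a named owner) can be sketched in a few lines. This is an illustrative assumption, not a tool shown at the conference: the `Finding` fields are hypothetical, the CVE identifiers in any test data would be placeholders, and the ranking rule (KEV‑listed first, then descending EPSS) is just one reasonable choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical normalized scanner finding."""
    cve: str
    asset: str
    owner: str    # team accountable for remediation; "" if unknown
    epss: float   # EPSS exploit probability in [0, 1]
    in_kev: bool  # listed in the Known Exploited Vulnerabilities catalog
    scanner: str  # which tool reported it


def deduplicate(findings):
    """Collapse reports of the same CVE on the same asset from different scanners."""
    unique = {}
    for f in findings:
        # Keep the first report seen for each (CVE, asset) pair.
        unique.setdefault((f.cve, f.asset), f)
    return list(unique.values())


def prioritize(findings):
    """Order findings: KEV-listed first, then by descending EPSS."""
    return sorted(deduplicate(findings), key=lambda f: (not f.in_kev, -f.epss))


def unowned(findings):
    """Findings that cannot be routed because no owner is recorded."""
    return [f for f in deduplicate(findings) if not f.owner]
```

The `unowned` list is arguably the more useful output early on, since a finding without an owner cannot be remediated no matter how high it ranks.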

AI raises the stakes, not the bar

AI showed up as both accelerator and adversary. Models and agents expand scale and context when harnessed through the MCP, yet they also introduce brittle logic, prompt risk, and supply-chain surprises like malicious pickle (.pkl) files. We can use AI to enrich detection, triage, and patch suggestions, but we very much need to keep human judgment at the center. Focus on what matters in production and measure progress in terms that the business can trust.
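On the pickle point specifically, one way to triage a model file before loading it is to walk its opcode stream with the standard library's `pickletools` and look for opcodes that import names or call objects. The sketch below is an assumption about how such a check might look, not something demonstrated at LASCON; the function name and the opcode list are illustrative.

```python
import pickletools

# Opcodes that import names or call/construct objects during unpickling.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> set[str]:
    """Return the import/call opcodes present in a pickle stream.

    The stream is only parsed, never loaded, so nothing in it is executed.
    """
    found = set()
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.add(opcode.name)
    return found
```

A pickle of plain dictionaries, lists, numbers, and strings should come back empty. Many legitimate model files also use these opcodes to rebuild arrays and classes, so a non‑empty result is a prompt for a closer look rather than proof of malice.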

Victory Through Vigilance

The throughline at LASCON XV was that context turns noise into action. Speakers agreed we need to make ownership explicit, and measure progress by remediation, not scan or vuln counts. Identity is where attackers meet your business, and it is time to treat accounts, sessions, and non-human identities as first-class assets. Your author was able to give a talk on how to go about it by collaborating across the enterprise to get a handle on non-human identity access, working with all teams who build, pay for, and manage identities.

LASCON attendees left with the message that we need to design for observability and resilience rather than perfect, unbreachable defenses. Let AI help with triage and suggestions, while the metrics tell a plain-language business story.

*** This is a Security Bloggers Network syndicated blog from GitGuardian Blog - Take Control of Your Secrets Security authored by Dwayne McDaniel. Read the original post at: https://blog.gitguardian.com/lascon-xv/
