2025-10-29 04:53:31 Author: isc.sans.edu

I've been doing Unix/Linux IR and Forensics for a long time. I logged into a Unix system for the first time in 1983. That's one of the reasons I love teaching FOR577[1]: I have stories from before some of my students were even born that are still relevant today.

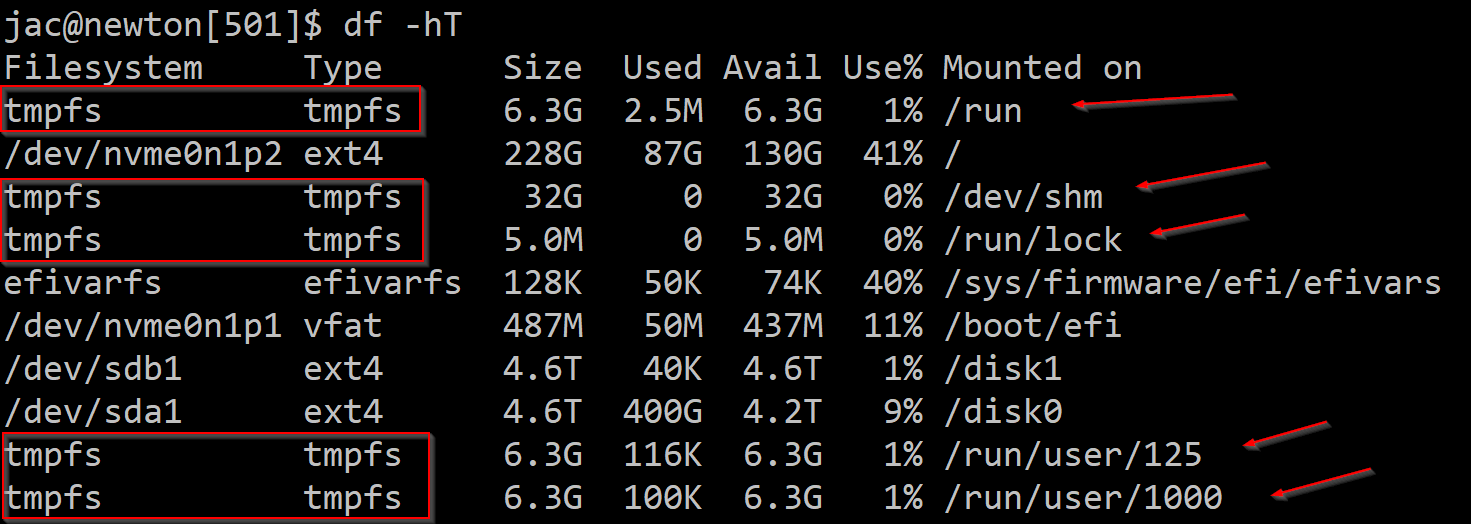

In recent years, I've noticed a lot of attackers try to hide their tools or stage their data exfiltration in memory-only filesystems like /dev/shm or other tmpfs locations.

Unfortunately, you can't just dd these tmpfs filesystems: there is no block device backing them from which you could take a forensically sound image. So if I want all of the metadata and the contents of any files the attacker may have stashed there, I'm going to need to try something else. Fortunately, after thinking about it a bit, I came up with a method that worked for me. I even talked it over briefly with Hal Pomeranz, and we couldn't come up with anything better. When I was thinking about this about a year ago, I did a quick Google search and didn't see anyone else discussing it, but I'd be surprised if others haven't come up with the same idea.
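
Attackers are not limited to /dev/shm, so it can help to enumerate every tmpfs mount before collecting. A minimal sketch for Linux, assuming /proc/mounts is readable; the function name `list_tmpfs_mounts` is hypothetical and not from the post:

```sh
# Hypothetical helper (not from the original post): print the mount point of
# every tmpfs filesystem listed in a Linux mounts table.
# Each line of /proc/mounts is: device mountpoint fstype options dump pass
list_tmpfs_mounts() {
    mounts_file=${1:-/proc/mounts}
    # Field 3 is the filesystem type, field 2 the mount point.
    # The kernel escapes spaces in mount points as \040; they are not decoded here.
    awk '$3 == "tmpfs" { print $2 }' "$mounts_file"
}
```

Each mount point it prints could be substituted for /dev/shm in the commands below. On systems with util-linux, `findmnt -t tmpfs` gives similar information.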

The basic idea is to collect the metadata (inode contents) first and the file contents second, because reading the files first would update the access timestamp in their inodes. Since I came up with this technique, I've used it on dozens (probably 100+) of systems with pretty good success. I have run into a handful that didn't have the stat command, so I could collect only the contents, not the inode metadata. You deal with what the system has available.
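
The ordering, including the fallback when stat is missing, can be sketched as a small POSIX sh function. This is an illustration under assumptions, not the author's script: it assumes GNU coreutils stat (for `-c`) and a tar that supports `-z` and `-T`, and it writes both files to a local directory instead of piping them over ssh. `collect_tmpfs` and its arguments are hypothetical names.

```sh
# Hypothetical wrapper (illustration only): collect metadata for DIR first,
# then file contents, writing both into OUTDIR (which must not be inside DIR).
# Reading file contents can update access times, so the stat pass runs first.
collect_tmpfs() {
    dir=$1
    outdir=$2
    # e.g. /dev/shm on host "web1" -> OUTDIR/web1-dev-shm
    prefix="$outdir/$(uname -n)$(printf '%s' "$dir" | tr '/' '-')"

    if command -v stat >/dev/null 2>&1; then
        # Step 1: inode metadata in bodyfile format, before anything reads the files
        find "$dir" -exec stat -c '0|%N|%i|%A|%u|%g|%s|%X|%Y|%Z|%W' {} + |
            sed -e 's/|W$/|0/' -e 's/|?$/|0/' > "$prefix-bodyfile"
    else
        # No stat on this system: contents can still be collected, metadata cannot
        echo "warning: stat not found; skipping metadata for $dir" >&2
    fi

    # Step 2: contents of regular files as a gzip-compressed tarball
    find "$dir" -type f -print | tar czf "$prefix-fs.tgz" -T -
}
```

For example, `collect_tmpfs /dev/shm /mnt/usb` (a hypothetical USB mount point) would write `<host>-dev-shm-bodyfile` and `<host>-dev-shm-fs.tgz` there, one of the alternatives to ssh mentioned in the post.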

```sh
# find /dev/shm -exec $(which stat) -c '0|%N|%i|%A|%u|%g|%s|%X|%Y|%Z|%W' {} \; | sed -e 's/|W$/|0/' -e 's/|?$/|0/' | ssh foo@system "cat - > $(hostname)-dev-shm-bodyfile"
```

This command line uses find to walk the tmpfs filesystem (in this example, /dev/shm) and run stat against every file and directory it finds. The sed expressions compensate for versions of stat that don't understand the %W format sequence for the creation time (b-time): they replace a trailing W or ? in that field with 0. I then pipe the output to ssh to send it to the remote system where I collect evidence (you could, of course, save it to a USB drive or some other mounted filesystem instead). Versions of the Linux coreutils package prior to, I believe, version 8.32 don't use the statx() system call, so they can't report the creation timestamp even though it is usually stored in the inode on recent systems. The output is in bodyfile format, which can be fed to the mactime program from The Sleuth Kit (TSK)[2] to produce the classic filesystem timeline format.
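
Because the bodyfile is pipe-delimited, a file name that itself contains a `|` shifts every later field, and mactime would then misread the timestamps. A hedged sketch of a sanity check to run on the collection system before building the timeline; `check_bodyfile` is a hypothetical helper, and the field list follows the 11-field TSK bodyfile layout that the stat format string above produces.

```sh
# Hypothetical helper: report bodyfile lines that do not have exactly the
# 11 pipe-separated fields mactime expects:
#   MD5|name|inode|mode|UID|GID|size|atime|mtime|ctime|crtime
# A '|' inside a file name adds a field, so such lines need manual fixing.
# Exits 0 if every line has 11 fields, 1 otherwise.
check_bodyfile() {
    awk -F'|' 'NF != 11 { printf "line %d: %d fields: %s\n", NR, NF, $0; bad = 1 }
               END { exit bad }' "$1"
}
```

If it passes, `mactime -b host-dev-shm-bodyfile -d > timeline.csv` would produce a comma-delimited timeline (`-b` names the bodyfile and `-d` selects comma-delimited output; the file names are placeholders).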

Once the metadata is collected, it is safe to collect the file contents (understanding that some file contents may have changed in the interval between the commands). The command that I usually use is as follows.

```sh
# find /dev/shm -type f -print | tar czvf - -T - | ssh foo@system "cat - > $(hostname)-dev-shm-fs.tgz"
```

This uses find to print the names of all regular files (I've never found a need to collect any other type) and pipes them to tar. Tar collects their contents, prints the file names to stderr (the v switch) so I can watch the progress, and writes the tarball, gzip-compressed because of the z switch, to stdout, where ssh carries it to the same collection system. I then hash the tarball on the receiving system. I could, and maybe should, hash the files on the live system I'm collecting from, but so far that hasn't been an issue.
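
Hashing on the live system, mentioned above as an option, could look like the following sketch. It assumes GNU coreutils `sha256sum` (FreeBSD, for example, ships `sha256` instead), and `hash_files` is a hypothetical name. Because hashing reads every file, it belongs after the metadata step, just like tar.

```sh
# Hypothetical helper (not the author's command): print a SHA-256 manifest of
# the regular files under a directory on the live system.
# Reading the files can update their access times, so run this only after
# the stat/bodyfile collection, never before it.
hash_files() {
    # '{} +' hands many file names to each sha256sum invocation
    find "$1" -type f -exec sha256sum {} +
}

# Usage sketch (foo@system as in the post):
#   hash_files /dev/shm | ssh foo@system "cat - > $(uname -n)-dev-shm-sha256"
```

The manifest records each file's hash at collection time, so it can later be compared with hashes of the copies extracted from the tarball, alongside the tarball's own hash.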

As mentioned above, I've used this technique on many systems and have even had it work on other Unix-like systems, such as Juniper routers (which run FreeBSD under the hood) and an ancient Solaris 9 system or two.

My last run of FOR577 for the year is in a few weeks at DFIRCon[3] in Miami, where we will talk about this technique and lots of others. Join me.

References:

[1] https://www.sans.org/cyber-security-courses/linux-threat-hunting-incident-response

[3] https://www.sans.org/cyber-security-training-events/dfircon-miami-2025

---------------

Jim Clausing, GIAC GSE #26

jclausing --at-- isc [dot] sans (dot) edu
