Summary: This article examines the use of OAuth 2.1 in the Model Context Protocol (MCP) and the security challenges it raises for enterprises. OAuth 2.1 provides a solid foundation for API authorization, but the non-deterministic behavior of AI agents introduces sequence-level risks. The article stresses the importance of Resource Indicators, short-lived tokens, preventing credential leakage, and gateway-based authorization as an enterprise-grade solution.

2025-10-17 15:48:13 Author: securityboulevard.com


TL;DR: OAuth 2.1 is a solid foundation for MCP, but the non-deterministic nature of agent interactions introduces sequence-level risks that classic request-level checks don’t cover.

Resource Indicators are mandatory and should be scoped aggressively; keep tokens short-lived and server-specific; never let credentials leak into LLM context.

An emerging pattern at scale: many teams are adopting gateway-based authorization to centralize policy, transform tokens, and create strong audit boundaries.

OAuth has been the backbone of API authorization for over a decade: as early as 2015, Google, Facebook, Microsoft, and GitHub had already standardized on it for third-party authorization. Since then, the typical flow has become second nature to both web users and developers: a user clicks "Sign in with Google," gets redirected to an authorization page, grants permissions, and returns to the application with an access token. The application uses that token to call APIs on the user's behalf. The path is predictable, auditable, and well-understood.

Most of its success comes from a clean separation of roles (client, resource server, authorization server) and a predictable flow for obtaining and validating tokens.

That maturity is exactly why the MCP Authorization specification (finalized June 18, 2025) chose OAuth 2.1 as the base. But unlike the traditional OAuth flow where users interact with predictable API calls, MCP introduces unique challenges.

An AI agent doesn't follow a predetermined script. It plans, chains tools, and pursues goals through sequences of operations that emerge from its reasoning process rather than from explicit programming. A request that begins as "summarize this quarter's sales data" might result in the agent querying a database, calling a visualization API, accessing a document repository, and composing a report. Each step requires authentication and authorization, and each is therefore another opportunity for sensitive credentials to be compromised or leaked through model context.

Probably Secure: A Look At The Security Concerns Of Deterministic Vs Probabilistic Systems

Learn why deterministic security remains essential in an AI-driven world and how GitGuardian combines probability and proof for safe, auditable development.

GitGuardian Blog – Take Control of Your Secrets SecurityDwayne McDaniel

GitGuardian Blog – Take Control of Your Secrets SecurityDwayne McDaniel

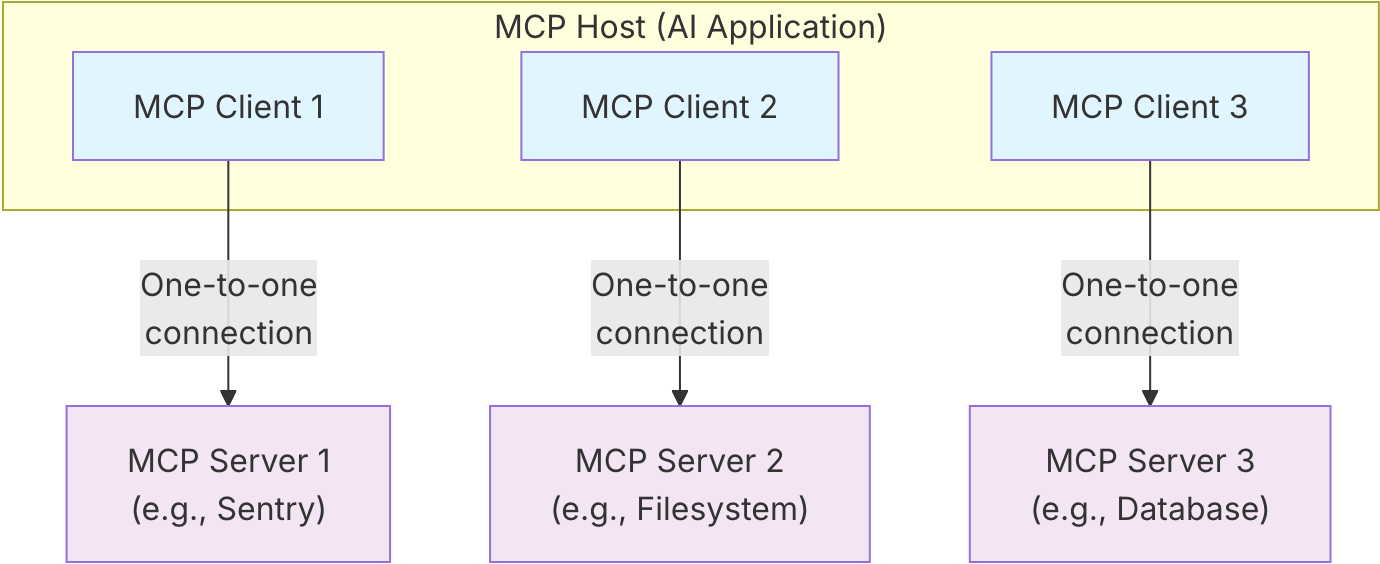

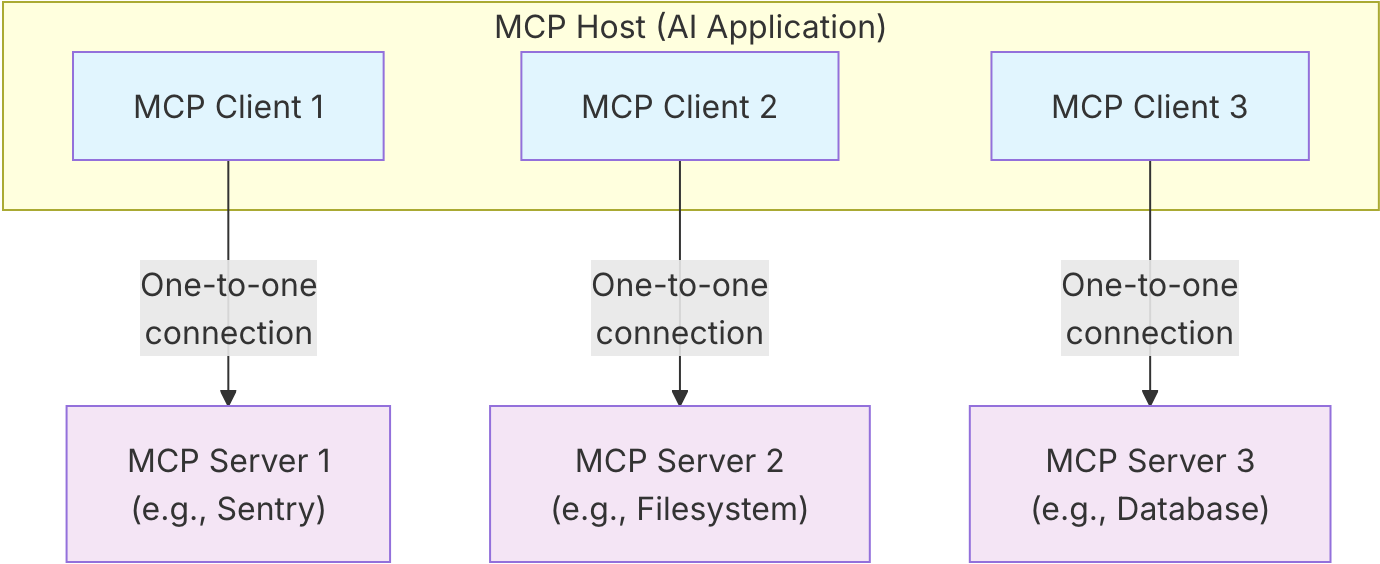

Applying OAuth to agent systems is not a copy-paste job: MCP changes the topology from

user→app→API

to

user→AI host→MCP client→(many) MCP servers→downstream services.

The mechanics of OAuth still work. What changes is where risk concentrates and how you scope and handle credentials.

This guide focuses on what matters for enterprises moving from POCs to production: mapping OAuth roles to MCP, selecting the right grant for each capability, scoping tokens per server with Resource Indicators, preventing credential leakage into model context, and adopting sequence-aware authorization (often via a central gateway) as deployments grow.

How OAuth Fits MCP

OAuth defines four actors in its authorization model:

- the resource owner (typically an end user),

- the client (the application requesting access),

- the resource server (which hosts protected resources), and

- the authorization server (which issues tokens after authenticating the resource owner).

This separation of concerns has proven robust across countless implementations, from mobile applications to enterprise SaaS platforms, and this delegation of authentication to dedicated identity infrastructure is what makes the specification compatible with existing enterprise identity providers like Okta, Auth0, and Keycloak.

In a traditional OAuth deployment, the boundaries are clear: a user authorizes a mobile app to access their photos stored on a cloud service. The mobile app is the client, the photo storage API is the resource server, and the identity provider handles authorization.

In MCP architectures, however, the client role splits across the AI host application (such as Claude Desktop or a custom LLM interface) and the MCP client component.

MCP Architecture Overview Source: Model Context Protocol official documentation

Another critical architectural decision is that MCP servers are OAuth Resource Servers: instead of authenticating users directly, they validate bearer tokens issued by trusted authorization servers, just like a traditional API.

Each MCP server should:

- validate JWT access tokens,

- publish Protected Resource Metadata (OAuth RFC 9728) at a well-known endpoint to declare supported scopes/issuers, and

- enforce scopes per tool/resource.
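As a rough illustration of the second item, here is a minimal sketch of the metadata document an MCP server might publish. The field names and the well-known path come from RFC 9728; the URLs and scope values are placeholders invented for this example, not values from any real deployment.

```python
import json

# Hypothetical identifiers: replace with your own server and issuer URLs.
MCP_SERVER_URL = "https://mcp-docs.example.com"
AUTH_SERVER_URL = "https://auth.example.com"

# RFC 9728 registers this well-known path for Protected Resource Metadata.
METADATA_PATH = "/.well-known/oauth-protected-resource"


def protected_resource_metadata() -> dict:
    """Build the metadata document this MCP server would serve as JSON."""
    return {
        # The resource identifier clients should request tokens for.
        "resource": MCP_SERVER_URL,
        # Issuers whose tokens this server is willing to accept.
        "authorization_servers": [AUTH_SERVER_URL],
        # Scopes a client may need when calling this server (example values).
        "scopes_supported": ["mcp:tools:read", "document:read"],
        # Tokens are expected in the Authorization header only.
        "bearer_methods_supported": ["header"],
    }


metadata_json = json.dumps(protected_resource_metadata(), indent=2)
```

A client that fetches this document learns which authorization server to use and which resource identifier to request a token for, before it ever sends a tool call.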

This architecture preserves OAuth's key security properties while adapting them to agent-driven workflows. Multiple MCP servers can trust the same authorization server, allowing users to authenticate once and access many services.

Unlike most established OAuth deployments, however, the MCP authorization flow is multi-hop by nature: when a user asks an AI agent to perform a task, their identity and permissions must flow through several boundaries:

User ➞ AI host ➞ MCP client ➞ (potentially multiple) MCP servers ➞ backend APIs or databases.

Crucially, each hop must preserve the authorization context, prevent credential leakage, and be independently auditable to meet security and compliance requirements.

This is where Resource Indicators (scoping tokens to a specific resource server) and strict token-handling discipline become critical.

JWTs + Resource Indicators: Scoping for Blast-Radius Control

JSON Web Tokens (JWTs) provide the mechanism through which authorization decisions travel across MCP architectures. Their self-contained structure (carrying claims about the user, issuer, audience, expiration, and permissions) allows distributed components to independently verify authorization without coordinating through a central authority.

In MCP, the audience (and resource) become central: you want tokens that are only valid for the intended MCP server. Traditional OAuth often uses single tokens for multiple APIs from the same provider, but this creates risk when a compromised token could be used against any service trusting the same authorization server.

This is where Resource Indicators (RFC 8707) become so important:

- The client requests a token for a specific resource (the MCP server).

- The authorization server issues a token whose audience matches that resource.

- If a token is compromised, it’s useless against other MCP servers—even within the same org.
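To make the first step concrete, the sketch below builds an authorization request that carries the `resource` parameter from RFC 8707, together with the PKCE challenge that OAuth 2.1 expects. The endpoint, client ID, redirect URI, and scope are hypothetical values chosen for illustration.

```python
import base64
import hashlib
import secrets
from typing import Tuple
from urllib.parse import urlencode


def pkce_pair() -> Tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method."""
    verifier = secrets.token_urlsafe(32)  # 43 URL-safe characters
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def build_authorization_url(
    authorize_endpoint: str,
    client_id: str,
    redirect_uri: str,
    resource: str,
    scope: str,
    code_challenge: str,
    state: str,
) -> str:
    """Build an authorization request scoped to one MCP server."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
        # RFC 8707: names the single resource this token should be valid for.
        "resource": resource,
    }
    return f"{authorize_endpoint}?{urlencode(params)}"


verifier, challenge = pkce_pair()
url = build_authorization_url(
    "https://auth.example.com/authorize",   # hypothetical endpoint
    "mcp-desktop-client",                   # hypothetical client ID
    "http://127.0.0.1:8765/callback",       # hypothetical redirect URI
    "https://mcp-docs.example.com",         # the MCP server being targeted
    "mcp:tools:read",
    challenge,
    secrets.token_urlsafe(16),
)
```

The same `resource` value would be repeated in the token request, so the authorization server can set the audience of the issued token accordingly.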

Then the validation process on the server-side becomes:

- Fetch and cache JWKS; verify signature.

- Check exp and enforce short lifetimes (minutes or hours, not days).

- Verify audience matches this MCP server.

- Enforce scopes: coarse (mcp:tools:read) + fine-grained (document:read:project-abc).
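The last three checks can be sketched as a single function over already-decoded claims. This is a simplified illustration: it assumes the signature was verified against the issuer's JWKS by a JWT library beforehand, and it assumes scopes arrive as a space-delimited `scope` claim, which depends on how your authorization server formats its tokens.

```python
import time
from typing import Optional


class TokenRejected(Exception):
    """Raised when a token fails any claim check (fail closed)."""


def check_claims(
    claims: dict,
    expected_audience: str,
    required_scope: str,
    now: Optional[float] = None,
    max_lifetime_s: int = 3600,
) -> None:
    """Validate exp, lifetime, audience, and scope on verified claims.

    Assumes the JWT signature has already been checked upstream.
    """
    now = time.time() if now is None else now

    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= now:
        raise TokenRejected("token is expired or has no exp claim")

    # Reject long-lived tokens even if they have not expired yet.
    iat = claims.get("iat")
    if isinstance(iat, (int, float)) and exp - iat > max_lifetime_s:
        raise TokenRejected("token lifetime exceeds policy")

    # 'aud' may be a single string or a list of strings.
    aud = claims.get("aud")
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    if expected_audience not in audiences:
        raise TokenRejected("token was not issued for this MCP server")

    granted = set(str(claims.get("scope", "")).split())
    if required_scope not in granted:
        raise TokenRejected("missing required scope: " + required_scope)
```

Returning nothing on success and raising on any failure keeps the default outcome a rejection, so a forgotten check cannot silently allow a request.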

One notable limitation of JWTs is token revocation. JWTs are self-contained, so deleting a token from a database doesn't prevent its use until it expires. Organizations need either short-lived access tokens that cannot be renewed once access is revoked, or distributed revocation lists that MCP servers check before accepting a token.
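As an illustration of the revocation-list option, here is a minimal in-process sketch keyed on the token's `jti` claim. A real distributed list would sit behind a shared store; the class name and its methods are invented for this example.

```python
import time
from typing import Dict, Optional


class RevocationList:
    """Tracks revoked token IDs until the tokens would have expired anyway."""

    def __init__(self) -> None:
        # Maps jti -> exp of the revoked token.
        self._revoked: Dict[str, float] = {}

    def revoke(self, jti: str, exp: float) -> None:
        """Mark a token as revoked; exp bounds how long we must remember it."""
        self._revoked[jti] = exp

    def is_revoked(self, jti: str, now: Optional[float] = None) -> bool:
        """Return True if the token ID is currently on the list."""
        now = time.time() if now is None else now
        # Entries for tokens that have expired can be dropped: the exp
        # check already rejects those tokens.
        self._revoked = {j: e for j, e in self._revoked.items() if e > now}
        return jti in self._revoked
```

Because entries only need to live as long as the token they refer to, short token lifetimes also keep the list small.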

⚠️ The Gap Is Where Risk Lives. OAuth can confirm that a caller is authenticated and has permissions. What it cannot do is prevent an autonomous agent from chaining multiple legitimate tools in ways that produce unauthorized outcomes. Each individual call might be allowed, but the combined action can exceed what the system was designed to permit. Effective risk mitigation for MCP requires sequence-aware authorization and policy beyond token validation.

Two Flows That Matter for MCP Agents

1) Authorization Code (user-scoped)

For scenarios where MCP servers need access to user-scoped data (private docs, personal repos, user rights in a SaaS), the authorization code grant remains the gold standard.

The familiar consent screen still applies, but the MCP twist is precision scoping and safe storage across diverse environments (desktop apps, CLI tools, IDE plugins).

Enterprise essentials:

- Scope per MCP server with Resource Indicators so a token for mcp://docs can’t hit mcp://db.

- Short lifetimes for access tokens; refresh tokens guarded like crown jewels (encrypted at rest, hardware-backed where possible, never logged).

- Never let tokens appear in prompts, traces, or model inputs. Keep auth in an isolated path outside LLM context.

- Multi-tenant isolation: no cross-user leakage via caches, histories, or shared agent state.
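One defensive layer for the third item is to scrub anything token-shaped from text before it is logged or handed to a model. The sketch below is a best-effort filter, not a guarantee: the patterns are assumptions about what credentials commonly look like, and the primary control remains keeping auth out of the LLM path entirely.

```python
import re

# Three base64url segments starting with "eyJ" (a base64-encoded '{"').
_JWT_RE = re.compile(r"\beyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]*")

# "Bearer <credential>" with a credential of at least 16 characters.
_BEARER_RE = re.compile(r"\b(bearer)\s+[A-Za-z0-9._~+/=-]{16,}", re.IGNORECASE)


def redact_credentials(text: str) -> str:
    """Replace bearer credentials and JWT-shaped strings with placeholders."""
    text = _BEARER_RE.sub(r"\1 [REDACTED]", text)
    return _JWT_RE.sub("[REDACTED_JWT]", text)


header_line = "Authorization: Bearer eyJhbGciOi.eyJzdWIi.sig"
trace_line = "token=eyJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJ1In0.abc123 ok"

clean_header = redact_credentials(header_line)
clean_trace = redact_credentials(trace_line)
```

Running the filter on tool outputs, traces, and log lines gives a second chance to catch a credential that slipped past the isolation boundary.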

2) Client Credentials (system-scoped)

Not all MCP operations require user-specific context. Many agent capabilities, such as accessing public APIs, performing tasks, or retrieving shared organizational data, don't depend on individual user permissions. These system-level operations require a different authorization approach, one where the MCP server itself acts as the principal rather than operating on behalf of a specific user.

The client credentials grant serves this purpose. In this flow, the MCP server uses client credentials to authenticate itself to backend systems. The authorization server issues an access token representing what the service itself is authorized to do, not what any individual user can do through that service. This is similar to service accounts in traditional infrastructure: a non-human identity with its own set of capabilities and constraints.

The security model shifts fundamentally with client credentials. The client secret becomes a high-value credential that must be protected with the same rigor as any secret. If compromised, an attacker gains the full capabilities of the MCP server without needing to compromise any user account.

Secrets management best practices apply in full force: secrets should never live in source code, configuration files, or environment variables that might be logged or exposed. Credential rotation becomes operationally critical: quarterly at minimum for production systems. The principle of least privilege applies: an MCP server should receive only the minimum scopes necessary for its intended functionality. Finally, credential isolation must be enforced between development, staging, and production environments, with different MCP servers using different credentials even within the same environment.

Enterprise essentials:

- Treat client secrets like production secrets: store in a secrets manager; rotate regularly; least privilege scopes.

- Use separate credentials per environment (dev/stage/prod) and, ideally, per service.

- Same scoping rules apply: request tokens targeted to the specific MCP server or downstream API.
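Put together, a client credentials token request might be built as in the sketch below. The secret is pulled through a caller-supplied function standing in for a secrets manager lookup. That function, the endpoint, and the client ID are hypothetical, and the request is only constructed here, not sent.

```python
import base64
from typing import Callable
from urllib.parse import urlencode
from urllib.request import Request


def build_client_credentials_request(
    token_endpoint: str,
    client_id: str,
    get_secret: Callable[[], str],
    resource: str,
    scope: str,
) -> Request:
    """Build a token request for a service identity, scoped to one resource."""
    # Fetch the secret at call time so it never sits in code or config.
    secret = get_secret()
    # HTTP Basic client authentication. Simplification: assumes the ID and
    # secret contain no characters that need form-encoding first.
    basic = base64.b64encode(f"{client_id}:{secret}".encode("utf-8")).decode("ascii")
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "scope": scope,
            # RFC 8707: target the token at one specific server or API.
            "resource": resource,
        }
    ).encode("ascii")
    return Request(
        token_endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


request = build_client_credentials_request(
    "https://auth.example.com/token",   # hypothetical token endpoint
    "mcp-reports",                      # hypothetical client ID
    lambda: "s3cr3t",                   # stand-in for a secrets manager call
    "https://mcp-db.example.com",
    "mcp:tools:read",
)
```

Passing the lookup as a function rather than a string makes it harder for the secret to end up in a config object that later gets logged.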

Emerging Pattern: Gateway-Based Authorization for MCP

As organizations deploy MCP systems at scale, a pattern is emerging to address the authorization gap between individual request validation and autonomous agent behavior: centralized authorization gateways that mediate all interactions between MCP clients and servers.

The gateway model introduces a dedicated component that sits between clients and servers, intercepting every request to apply context-aware authorization policies. Rather than relying solely on bearer tokens to determine access, the gateway evaluates each request against dynamic policies that can consider time of day, user behavior patterns, device trust levels, and the specific sequence of operations being attempted. This shifts authorization from a binary token-validation decision to a continuous evaluation process.

What the gateway does:

- Single enforcement point for policy: avoid duplicating authorization logic across every server.

- Token transformation: strip incoming access token and issue short-lived signed assertions (or internal tokens) to backends, which reduces token exposure to LLM clients.

- Audit boundary: comprehensive logs with user, agent, tool, parameters, decisions.
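Token transformation can be sketched with nothing more than the standard library: the gateway drops the incoming access token and mints a short-lived signed assertion for the backend. This example uses an HMAC-signed JWT with a shared key for brevity. A production gateway would more likely use asymmetric keys, and the `tool` claim and `mcp-gateway` issuer name are invented for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def mint_internal_assertion(
    key: bytes, subject: str, audience: str, tool: str,
    ttl_s: int = 60, now: Optional[float] = None,
) -> str:
    """Mint a short-lived HS256 assertion for one backend and one tool."""
    issued = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {
        "iss": "mcp-gateway", "sub": subject, "aud": audience,
        "tool": tool, "iat": issued, "exp": issued + ttl_s,
    }
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_internal_assertion(
    key: bytes, token: str, audience: str, now: Optional[float] = None,
) -> dict:
    """Backend-side check: signature, audience, and expiry."""
    current = int(time.time() if now is None else now)
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed assertion")
    signing_input = parts[0] + "." + parts[1]
    # The algorithm is fixed here, so the header's alg field is never trusted.
    expected = _b64url(
        hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    )
    if not hmac.compare_digest(expected, parts[2]):
        raise ValueError("bad signature")
    payload = json.loads(_b64url_decode(parts[1]))
    if payload.get("aud") != audience:
        raise ValueError("wrong audience")
    if payload.get("exp", 0) <= current:
        raise ValueError("assertion expired")
    return payload
```

Because the assertion names a single backend and lives for about a minute, a copy that leaks toward an LLM-facing component has little value to an attacker.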

From a security perspective, the gateway model helps mitigate OWASP LLM risks that pure OAuth implementations don't address.

Implementation patterns vary, but most gateway deployments follow similar principles. The gateway maintains connections to both the authorization server (for validating incoming tokens) and to policy engines (for evaluating authorization decisions). A typical request path would go like this:

- Validate incoming OAuth token (issuer, signature, exp, audience).

- Enrich context (user, device, risk, prior actions).

- Evaluate policy (is this operation/tool allowed given the sequence so far?).

- Transform credentials (mint short-lived downstream identity).

- Forward to the MCP server; log decision and details.
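The policy step is the part that plain token validation cannot express, so here is a toy sketch of a sequence-aware check. It tracks which tools a session has already used and denies combinations listed in a rule table. The tool names and the rule itself are hypothetical, and a real gateway would likely delegate this to a policy engine.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical rule table: once the key tool has run in a session,
# the listed tools are denied for the rest of that session.
FORBIDDEN_AFTER: Dict[str, Set[str]] = {
    "read_customer_records": {"send_external_email", "post_to_webhook"},
}


@dataclass
class SessionState:
    """Per-session record of the tools an agent has already invoked."""
    user: str
    history: List[str] = field(default_factory=list)


def evaluate(
    session: SessionState,
    tool: str,
    granted_scopes: Set[str],
    required_scope: str,
) -> Tuple[bool, str]:
    """Decide whether a tool call is allowed given the sequence so far."""
    # Request-level check: what a plain scope comparison already covers.
    if required_scope not in granted_scopes:
        return False, "missing scope " + required_scope

    # Sequence-level check: each call may be fine alone but not combined.
    for earlier in session.history:
        if tool in FORBIDDEN_AFTER.get(earlier, set()):
            return False, f"'{tool}' is not allowed after '{earlier}'"

    session.history.append(tool)
    return True, "allowed"
```

In this example an agent holding valid scopes for both tools can still read customer records or send external email, but not one after the other in the same session.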

Gateways aren’t required by the MCP spec, but they’re becoming a pragmatic way to close the gap between request-level and sequence-level control as deployments grow to include dozens of MCP servers serving hundreds of users.

Production Deployment: Architecting for Change

Three months into the MCP Authorization specification's life, production deployment patterns are still crystallizing. Organizations implementing OAuth 2.1 for MCP today should architect systems that can evolve as the community's understanding deepens.

For a deeper look at why gateway patterns are becoming a cornerstone for enterprise AI applications, see our guide to building a secure LLM gateway.

- Use dedicated authorization servers rather than building custom implementations. The temptation to implement a simple JWT issuer for initial deployments often leads to operational debt as requirements expand. Production-grade authorization servers like Keycloak, Auth0, or Okta provide not just token issuance but also comprehensive admin interfaces, audit logging, token revocation mechanisms, and integration with enterprise identity providers. These platforms have absorbed years of real-world security lessons that custom implementations will rediscover the hard way. The operational investment in deploying and maintaining dedicated authorization infrastructure pays dividends as MCP deployments scale.

- Implement robust monitoring early. Authorization failures in agent systems don't manifest like traditional application errors. An AI agent denied access to a tool might simply choose an alternative approach, making authorization problems invisible until accumulated failures affect user experience. Production deployments need visibility into token issuance rates, validation failures, scope denials, and authorization server response times. Anomaly detection becomes particularly valuable: unusual token request patterns might indicate compromised credentials or misbehaving agents attempting operations beyond their intended scope.

- High availability patterns matter for authorization infrastructure as much as for any critical system component. When authorization servers become unavailable, agent systems lose their ability to obtain new tokens or validate existing ones. Circuit breaker patterns can prevent cascading failures: if an authorization server stops responding, MCP servers should fail gracefully rather than blocking all operations. Token caching with aggressive TTLs allows temporary operation during authorization server outages, though at increased security risk. Organizations must balance availability against security requirements based on their specific risk tolerance.

- Test agents under constraint: while traditional API integration tests validate that correct requests receive appropriate responses, agent systems generate requests dynamically based on reasoning processes that don't follow predetermined scripts. Test suites should verify not just that individual tools respect authorization scopes, but that agents denied access to preferred tools successfully degrade to allowed alternatives. Security testing should attempt to trick agents into revealing information or performing operations beyond their authorization, validating that scope enforcement holds even when agents pursue creative problem-solving strategies.
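The circuit breaker mentioned under high availability can be sketched in a few lines. This is a minimal, single-threaded illustration with invented names. It stops calling an unresponsive authorization server after repeated failures and allows one trial call once a cool-down has passed.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpen(Exception):
    """Raised when calls are short-circuited instead of reaching the server."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after_s: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._threshold = failure_threshold
        self._reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        """Run fn unless the circuit is open and still cooling down."""
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after_s:
                raise CircuitOpen("authorization server marked unavailable")
            # Cool-down elapsed: fall through and allow one trial call.
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                # Open (or re-open) the circuit from this moment.
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

What the caller does on `CircuitOpen` is the policy decision described above: serve from a bounded cache and accept the added risk, or deny the operation until the authorization server recovers.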

Production deployments will evolve as the community develops shared patterns for continuous authorization, policy languages for expressing agent behavior constraints, and operational practices for managing credentials at scale. Organizations succeeding with MCP will be those that architect systems expecting change rather than optimizing for today's understanding.

Conclusion: Start Now, Architect for Evolution

OAuth 2.1 gives MCP a solid foundation built on OAuth's decade of production hardening. Organizations can begin securing MCP deployments today using established infrastructure and familiar patterns. The authorization code flow for user operations, client credentials for system access, JWT validation with Resource Indicators for proper token scoping—all work exactly as OAuth 2.1 documentation describes. That solves request-level authorization.

Enterprise agent systems also need sequence-aware control to validate agent-orchestrated sequences, and credential hygiene: short-lived, per-server tokens; refresh-token discipline; zero token exposure to model context. At scale, they'll also probably need a gateway to centralize policy and create an auditable boundary.

This is an opportunity rather than a blocker. Start with a proper OAuth 2.1 implementation:

- deploy dedicated authorization servers,

- scope tokens using Resource Indicators,

- protect credentials with the same rigor as any production secret.

Then layer additional authorization controls as needed: gateway-based policy enforcement, sequence-level validation, and continuous monitoring of agent behavior patterns, as these practices mature, hopefully in the coming months.

FAQs

How is OAuth 2.1 in MCP different from “classic” API auth?

The actors are the same, but MCP adds multi-hop paths (user → AI host → MCP client → multiple servers). Tokens must be scoped per server (Resource Indicators), and you must ensure no credentials ever reach model context. The risk concentrates in the spaces between calls (agent sequences), not just the calls themselves.

What is the recommended OAuth 2.1 flow for user-centric operations in MCP environments?

Use the Authorization Code grant for user-centric tasks. In MCP, this flow provides explicit user consent and issues short-lived access tokens that are scoped per MCP server using Resource Indicators (RFC 8707). Store tokens securely (encrypted at rest), treat refresh tokens as high-risk secrets, and ensure no credentials ever enter LLM prompts, traces, or logs. This preserves least privilege, limits blast radius, and keeps user context clean across the multi-hop path (User → AI host → MCP client → MCP server).

How should organizations manage client credentials securely in MCP deployments?

Treat client credentials like production secrets. Keep them in a dedicated secrets manager (not source code, config files, or environment variables that might be logged), enforce regular rotation, and assign least-privilege scopes specific to each service and environment (dev/stage/prod). Use hardware-backed encryption where possible, restrict network access to token endpoints, and audit every read/use. Separate credentials per MCP server to prevent lateral movement if compromise occurs.

What are the key considerations for JWT token validation in MCP?

Implement a tight validation loop: (1) verify signature against cached JWKS from the issuer, (2) check expiration and prefer short lifetimes, (3) require the audience to match this MCP server (via Resource Indicators), and (4) enforce scopes (coarse + resource-specific). Cache keys with sensible TTLs, handle rotation gracefully, fail closed on verification errors, and log structured validation results for auditing without exposing token material.

How can organizations prevent token leakage and model poisoning in OAuth 2.1 for MCP implementations?

Keep authentication paths fully isolated from model context: tokens must never appear in prompts, conversation history, debug traces, or analytics. Encrypt tokens at rest, use mutually authenticated TLS in transit, and prefer hardware-backed key storage. Redact sensitive headers/bodies before logging, implement allow-listed telemetry, and monitor for anomalies (unexpected issuances, refresh spikes, audience mismatches). If feasible, use a gateway to transform tokens into short-lived downstream assertions, reducing exposure to LLM-facing components.

What production deployment strategies are recommended for OAuth 2.1-enabled MCP systems?

Use a dedicated authorization server (don’t roll your own), instrument early with metrics on issuance, validation failures, scope denials, and latency, and design for high availability (circuit breakers, token/JWKS caching with bounded risk). Add gateway-based authorization as you scale to centralize policy, transform tokens, and create an audit boundary. Finally, test agents under constraint—verify scope enforcement, graceful degradation when denied, and defenses against sequence-level abuse—not just single request success paths.

*** This is a Security Bloggers Network syndicated blog from GitGuardian Blog - Take Control of Your Secrets Security authored by Guest Expert. Read the original post at: https://blog.gitguardian.com/oauth-for-mcp-emerging-enterprise-patterns-for-agent-authorization/
