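Summary: The article examines the limitations of traditional compliance audits and how AI transforms them from manual, periodic checks into continuous, intelligent assurance. Through automated evidence collection, cross-framework control correlation, and continuous analysis, AI improves efficiency and accuracy and helps organizations meet increasingly strict regulatory requirements.

2025-10-16 22:49:39 Author: securityboulevard.com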

Key Takeaways

- Why traditional procedural audits can’t keep pace with modern environments

- How AI turns audits from manual snapshots into continuous assurance

- Core capabilities that make AI audit systems credible and effective

- How regulatory timelines are driving faster, smarter audit models

- Practical steps to implement AI auditing in real organizations

For many organizations, compliance audits are still synonymous with spreadsheets, evidence gathering, and last-minute scrambles. Teams spend weeks tracking down screenshots, reports, and ticket records to prove that their controls are working as intended.

That’s beginning to change. AI-powered compliance audits are shifting the model from periodic, manual checks to continuous, intelligence-driven assurance. Platforms like Centraleyes are at the forefront of this shift, applying AI to procedural audits to make cybersecurity and compliance programs both smarter and more efficient.

Why Traditional Compliance Audits Struggle to Keep Up

Traditional procedural audits follow a well-worn path:

- plan the scope

- collect evidence

- test controls

- document findings

- issue a report

The sequence itself is sound, but in modern environments three structural challenges stand out:

1. Manual evidence handling

Modern organizations run dozens of cloud platforms, SaaS tools, and security solutions. Collecting and mapping evidence manually across this ecosystem takes enormous time and coordination.

2. Periodic visibility

Audits typically happen once or twice a year. Any gaps that appear between cycles can go unnoticed for months.

3. Reactive posture

Teams often discover misconfigurations, expired certificates, or missing records only when an audit surfaces them, long after the problem first appeared.

A Short History of Procedural Audits

Procedural audits were never designed for the digital world we operate in today. Their roots go back to financial reporting, where organizations relied on periodic checks to verify that internal controls were functioning as intended. When cybersecurity frameworks and regulations such as SOC 2, ISO 27001, HIPAA, and PCI DSS emerged, they largely borrowed this model: define control criteria, gather evidence periodically, test, and issue a report.

For a while, this worked. Systems were mostly on-premises, technology stacks were relatively stable, and regulatory expectations were aligned with annual or semiannual reviews. Evidence collection could happen through manual screenshots, logs, or interviews without overwhelming teams. The audit served as a structured point-in-time snapshot to verify that nothing major had fallen through the cracks.

That model began to strain as organizations shifted to cloud services, SaaS platforms, and hybrid environments, where infrastructure, access rights, and configurations can change daily. Traditional audits capture a static moment and struggle to reflect this dynamic reality. This is why so many compliance teams still find themselves in fire-drill mode before each audit cycle: the underlying structure hasn’t caught up with how organizations operate. The mismatch is increasingly visible in regulatory investigations after breaches, where weaknesses that existed “between audits” become central issues.

The Rise of AI in Compliance Auditing

AI is particularly well-suited to procedural audits because audits follow structured, rules-based logic: gather evidence, test against criteria, and produce results. Over the past two years, a new generation of AI compliance audit tools has emerged that can:

- Ingest and classify evidence automatically, mapping data points to relevant controls across frameworks like SOC 2, ISO 27001, HIPAA, and the GDPR.

- Run control checks at scale, using predefined logic or dynamically generated procedures to verify whether controls are functioning.

- Generate audit-ready reports, summarizing findings and attaching evidence in standardized formats.
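To make the three capabilities above concrete, here is a minimal Python sketch of the ingest, classify, test, and report loop. Everything in it is a hypothetical assumption for illustration: the `Evidence` record, the rule tables, the control IDs `AC-MFA` and `BC-BACKUP`, and the system names. Production tools use far richer classifiers, but the flow is the one the list describes.

```python
from dataclasses import dataclass

# Hypothetical evidence record; the field names are illustrative assumptions.
@dataclass
class Evidence:
    source: str  # originating system, e.g. "identity-provider"
    kind: str    # evidence type, e.g. "mfa_policy"
    data: dict   # the collected payload

# Hypothetical classification rules: evidence type -> control it supports.
CLASSIFICATION_RULES = {
    "mfa_policy": "AC-MFA",
    "backup_job": "BC-BACKUP",
}

# Hypothetical control tests: control ID -> predicate over the evidence payload.
CONTROL_CHECKS = {
    "AC-MFA": lambda d: d.get("enforced") is True,
    "BC-BACKUP": lambda d: d.get("last_status") == "success",
}

def run_audit(evidence_items):
    """Classify each item, test it against its control, and build a report."""
    report = {"passed": [], "failed": [], "unclassified": []}
    for ev in evidence_items:
        control = CLASSIFICATION_RULES.get(ev.kind)
        if control is None:
            # No rule matched: leave the item for a human to triage.
            report["unclassified"].append(ev.source)
            continue
        ok = CONTROL_CHECKS[control](ev.data)
        report["passed" if ok else "failed"].append((control, ev.source))
    return report

items = [
    Evidence("identity-provider", "mfa_policy", {"enforced": True}),
    Evidence("backup-server", "backup_job", {"last_status": "failed"}),
    Evidence("legacy-erp", "screenshot", {}),
]
print(run_audit(items))
# {'passed': [('AC-MFA', 'identity-provider')], 'failed': [('BC-BACKUP', 'backup-server')], 'unclassified': ['legacy-erp']}
```

Unmatched evidence is routed to an "unclassified" bucket instead of being guessed at, which keeps the report honest about what automation could not handle.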

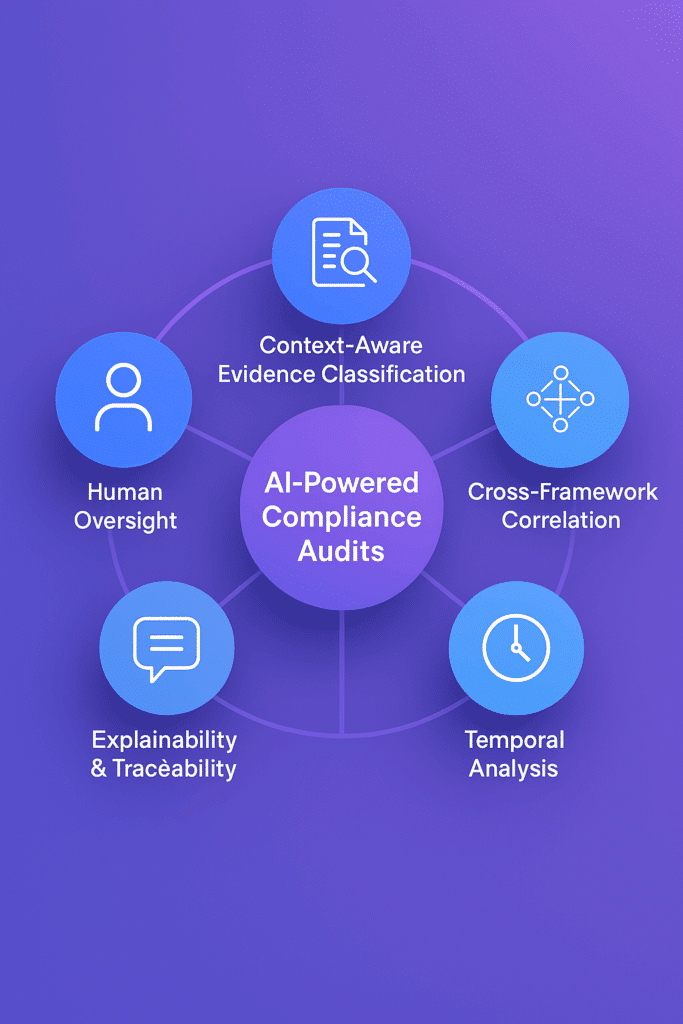

Core Capabilities of Effective AI Audit Systems

As AI becomes integrated into compliance auditing, its value depends on the maturity of the underlying capabilities. Effective systems are characterized by clear evidence handling, rigorous logic, and transparent operations that can withstand regulatory and audit scrutiny. Five capabilities, in particular, distinguish advanced solutions from basic automation tools.

1. Context-Aware Evidence Classification

AI systems must be able to analyze not only the content of evidence but also its context. This includes metadata, origin systems, timestamps, and relationships to control objectives. Proper classification ensures that evidence is mapped accurately to relevant control requirements and frameworks, reducing the risk of gaps or misinterpretations.
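A minimal sketch of context-aware classification, assuming each piece of evidence arrives with an origin system and a collection timestamp. The rule table, control IDs, and the 90-day freshness window are hypothetical. The point is that the same evidence type (`user_list`) maps to different controls depending on where it came from, and that stale evidence is flagged rather than silently accepted.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical rules: (origin system, evidence type) -> control ID.
# The same evidence type supports different controls depending on its origin.
CONTEXT_RULES = {
    ("identity_provider", "user_list"): "ACCESS-REVIEW",
    ("hr_system", "user_list"): "JOINER-LEAVER",
}

MAX_AGE = timedelta(days=90)  # assumed freshness window for evidence

def classify(origin, kind, collected_at, now):
    """Return (control_id, status) using origin and timestamp, not just content type."""
    control = CONTEXT_RULES.get((origin, kind))
    if control is None:
        return None, "unmapped"
    if now - collected_at > MAX_AGE:
        return control, "stale"  # right control, but too old to count
    return control, "valid"

now = datetime(2025, 10, 16, tzinfo=timezone.utc)
print(classify("hr_system", "user_list", datetime(2025, 9, 1, tzinfo=timezone.utc), now))
# ('JOINER-LEAVER', 'valid')
print(classify("identity_provider", "user_list", datetime(2025, 5, 1, tzinfo=timezone.utc), now))
# ('ACCESS-REVIEW', 'stale')
```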

2. Cross-Framework Control Correlation

Many organizations operate under multiple overlapping frameworks (e.g., SOC 2, ISO 27001, HIPAA). Advanced AI solutions can automatically identify and correlate common controls across these frameworks, allowing evidence collected once to support multiple requirements. This reduces duplication, improves consistency, and simplifies ongoing compliance activities.
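Cross-framework correlation is often implemented as a crosswalk: each internal control is mapped once to the requirements it satisfies in every framework, so a single passing test fans out to all of them. The sketch below assumes that approach. The internal control names are hypothetical, and the framework references are illustrative examples only; verify any real mapping against the official framework texts.

```python
from collections import defaultdict

# Illustrative crosswalk: internal control -> (framework, requirement) pairs.
# These references are examples only, not an authoritative mapping.
CROSSWALK = {
    "MFA-ENFORCED": [("SOC 2", "CC6.1"), ("ISO 27001", "A.8.5"), ("HIPAA", "164.312(d)")],
    "ACCESS-REVIEW": [("SOC 2", "CC6.2"), ("ISO 27001", "A.5.18")],
}

def requirements_covered(control_results):
    """Fan each passing control out to every framework requirement it maps to."""
    covered = defaultdict(list)
    for control, passed in control_results.items():
        if not passed:
            continue  # failing evidence supports no requirement
        for framework, requirement in CROSSWALK.get(control, []):
            covered[framework].append(requirement)
    return dict(covered)

print(requirements_covered({"MFA-ENFORCED": True, "ACCESS-REVIEW": False}))
# {'SOC 2': ['CC6.1'], 'ISO 27001': ['A.8.5'], 'HIPAA': ['164.312(d)']}
```

Keeping the mapping in one table means evidence is collected and tested once, while each framework's coverage is derived from it.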

3. Temporal and Continuous Analysis

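Static evidence reviews are insufficient in dynamic digital environments. Effective AI audit systems incorporate temporal awareness: they track how control states and evidence evolve over time, so a control that passed at one checkpoint but then failed for weeks is not reported as continuously effective.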
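Temporal awareness can be illustrated by keeping a timestamped history of each control's test results instead of a single verdict. This hypothetical sketch turns such a history into failure windows and shows why a point-in-time audit can miss them; the sample dates are invented for illustration.

```python
from datetime import date

def failing_windows(history):
    """Turn (day, passed) samples, sorted by day, into (start, end) failure spans.

    end is the first day the control was seen passing again, or None if it is still failing.
    """
    windows, start = [], None
    for day, passed in history:
        if not passed and start is None:
            start = day
        elif passed and start is not None:
            windows.append((start, day))
            start = None
    if start is not None:
        windows.append((start, None))
    return windows

def seen_by_snapshot(windows, audit_day):
    """A point-in-time audit only notices failures that are open on the audit day."""
    return any(s <= audit_day and (e is None or audit_day < e) for s, e in windows)

history = [
    (date(2025, 1, 1), True),
    (date(2025, 3, 1), False),
    (date(2025, 5, 1), True),
    (date(2025, 7, 1), True),
]
gaps = failing_windows(history)
print(gaps)  # [(datetime.date(2025, 3, 1), datetime.date(2025, 5, 1))]
print(seen_by_snapshot(gaps, date(2025, 6, 30)))  # False
```

A two-month failure exists in the history, yet an audit on June 30 would see a passing control.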

4. Explainability and Traceability

AI-generated outputs must be interpretable by auditors, regulators, and compliance teams. Systems should provide clear decision paths, document the logic applied, and retain the ability to reproduce results for external validation.
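One common way to make an automated decision traceable is to store, alongside the verdict, the rule version, the logic applied, and a cryptographic digest of the exact evidence evaluated, so the result can be replayed later. The sketch below assumes that approach; the rule ID, version string, threshold, and the `mfa_coverage_pct` field are hypothetical.

```python
import hashlib
import json

def evidence_digest(evidence):
    """SHA-256 over canonical JSON, so the exact input can be re-verified later."""
    canonical = json.dumps(evidence, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def decide(rule_id, rule_version, threshold, evidence):
    """Apply a simple threshold rule and record what is needed to reproduce it."""
    value = evidence["mfa_coverage_pct"]
    passed = value >= threshold
    return {
        "rule": f"{rule_id}@{rule_version}",
        "logic": f"mfa_coverage_pct ({value}) >= {threshold}",
        "result": "pass" if passed else "fail",
        "evidence_sha256": evidence_digest(evidence),
    }

def reproduce(record, threshold, evidence):
    """Re-run the decision and confirm that evidence, logic, and outcome all match."""
    rule_id, rule_version = record["rule"].split("@")
    return decide(rule_id, rule_version, threshold, evidence) == record

record = decide("MFA-01", "1.2", 95, {"mfa_coverage_pct": 97})
print(record["result"], record["logic"])  # pass mfa_coverage_pct (97) >= 95
print(reproduce(record, 95, {"mfa_coverage_pct": 97}))  # True
print(reproduce(record, 95, {"mfa_coverage_pct": 90}))  # False
```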

5. Human Oversight and Governance

While AI can automate evidence handling and procedural checks, governance remains a human responsibility. Mature systems include defined oversight mechanisms for exception handling, judgment calls, and strategic decisions. This ensures that automation strengthens compliance operations without diminishing accountability.
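One way to encode this oversight rule in data is sketched below: an automated check proposes a result, but a finding only becomes final once a named person signs off, and any exception must carry a written justification. The `Finding` class and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Finding:
    control: str
    automated_result: str           # "pass" or "fail", proposed by the automated check
    reviewer: Optional[str] = None  # person accountable for the final decision
    decision: Optional[str] = None  # "accept" or "exception"
    justification: str = ""

    def sign_off(self, reviewer, decision, justification=""):
        """Record a human decision; exceptions require a written justification."""
        if decision not in ("accept", "exception"):
            raise ValueError("decision must be 'accept' or 'exception'")
        if decision == "exception" and not justification.strip():
            raise ValueError("an exception requires a justification")
        self.reviewer, self.decision, self.justification = reviewer, decision, justification

    @property
    def final(self):
        """Automation proposes; only a human sign-off makes a finding final."""
        return self.reviewer is not None

finding = Finding("AC-MFA", automated_result="fail")
print(finding.final)  # False
finding.sign_off("j.doe", "exception", "Compensating control: hardware tokens on admin accounts")
print(finding.final)  # True
```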

These capabilities form the foundation of trustworthy AI audit systems. They provide the structure necessary for organizations to rely on AI not merely as a time-saving tool, but as a credible component of their assurance programs.

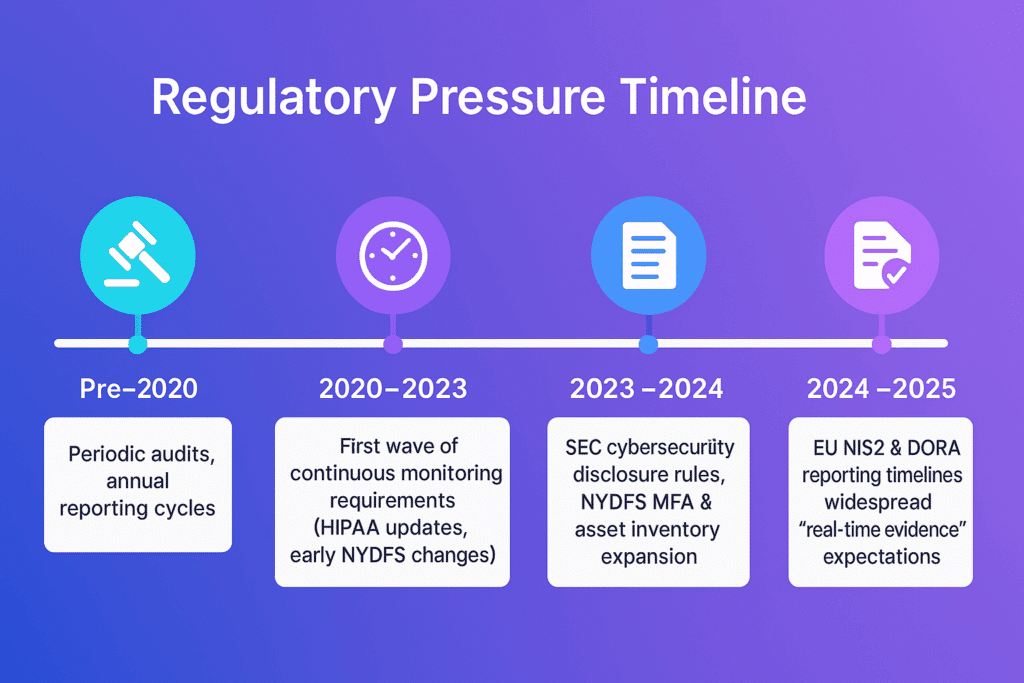

Regulatory Expectations

Regulators don’t require organizations to use AI compliance tools. But the rules they’ve introduced in recent years set timelines and expectations that are almost impossible to meet with manual audit preparation. The shift toward faster reporting, continuous monitoring, and fresh evidence is clear across jurisdictions and industries.

European Union

The NIS2 Directive and DORA both require organizations to detect problems quickly and report significant incidents within short time frames. Under NIS2, for example, an early warning is due within 24 hours of becoming aware of a significant incident, followed by an incident notification within 72 hours. These rules expect companies to have an ongoing view of their security and compliance, not just a snapshot during an annual audit.

United States

Similar changes are happening in the U.S. The SEC’s cybersecurity disclosure rules require public companies to disclose a material cybersecurity incident within four business days of determining that it is material. The NYDFS Cybersecurity Regulation has been amended with stricter requirements for asset inventories, multifactor authentication, and continuous monitoring. HIPAA guidance now focuses more on ongoing safeguards than on static documentation. These expectations go far beyond what manual, periodic reviews can support.
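The deadline arithmetic behind these rules is simple but unforgiving. The sketch below computes the first two NIS2 milestones (24 and 72 hours from awareness) and the SEC Form 8-K Item 1.05 deadline of four business days after a materiality determination. It is an illustration, not legal guidance: it skips weekends but only accounts for public holidays you pass in, and it does not model DORA's own timelines.

```python
from datetime import date, datetime, timedelta

def nis2_deadlines(aware_at):
    """NIS2 early warning within 24h and incident notification within 72h of awareness."""
    return {
        "early_warning": aware_at + timedelta(hours=24),
        "notification": aware_at + timedelta(hours=72),
    }

def add_business_days(start, n, holidays=frozenset()):
    """Step forward n business days, skipping weekends and any supplied holidays."""
    day = start
    while n > 0:
        day += timedelta(days=1)
        if day.weekday() < 5 and day not in holidays:
            n -= 1
    return day

def sec_8k_deadline(materiality_determined, holidays=frozenset()):
    """Four business days after the date the incident is determined to be material."""
    return add_business_days(materiality_determined, 4, holidays)

aware = datetime(2025, 10, 16, 22, 49)
print(nis2_deadlines(aware)["notification"])  # 2025-10-19 22:49:00
print(sec_8k_deadline(date(2025, 10, 16)))    # 2025-10-22 (determination on a Thursday)
```

Holidays are a parameter rather than a built-in calendar because the relevant calendar depends on the regulator.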

Audit and Assurance Practices

Audit firms are changing how they work in response. Many now rely on continuous control testing and automated evidence collection to keep pace with regulatory demands. Regulators increasingly expect that evidence can be provided at any time, not just during scheduled audits.

Benefits of AI in Audit Preparation

The shift toward AI-powered compliance auditing brings measurable advantages. With Centraleyes, organizations typically see improvements in both efficiency and security:

Faster audit cycles

Evidence collection and control testing happen automatically, compressing timelines from weeks to days.

Early detection of issues

AI checks catch configuration drift, expired controls, or non-compliance early, before they lead to incidents.
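Early detection of drift usually means comparing live configuration against an approved baseline and watching expiry dates ahead of time. Here is a minimal sketch under those assumptions; the setting names, hostnames, and the 30-day warning window are hypothetical.

```python
from datetime import date

def config_drift(baseline, current):
    """Return settings whose current value differs from the approved baseline.

    Keys missing on either side show up with None as their value.
    """
    keys = baseline.keys() | current.keys()
    return {k: (baseline.get(k), current.get(k))
            for k in sorted(keys) if baseline.get(k) != current.get(k)}

def expiring(certs, today, warn_days=30):
    """List certificate names that expire within warn_days, or have already expired."""
    return sorted(name for name, not_after in certs.items()
                  if (not_after - today).days <= warn_days)

baseline = {"mfa_required": True, "tls_min_version": "1.2"}
current = {"mfa_required": False, "tls_min_version": "1.2", "public_bucket": True}
print(config_drift(baseline, current))
# {'mfa_required': (True, False), 'public_bucket': (None, True)}

certs = {"api.example.com": date(2025, 11, 1), "www.example.com": date(2026, 3, 1)}
print(expiring(certs, today=date(2025, 10, 16)))  # ['api.example.com']
```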

Consistent procedural accuracy

AI agents run the same checks the same way every time, reducing human error and audit fatigue.

More strategic human roles

Compliance professionals can focus on judgment, communication, and remediation, not administrative busywork.

Stronger alignment between security and compliance

By embedding control checks directly into operational data sources, compliance becomes a real-time reflection of security posture.

Implementation Considerations

Introducing AI into compliance auditing requires thoughtful setup. Organizations adopting Centraleyes or similar platforms should consider:

- Integration Depth: Ensure connectors cover the relevant systems and data sources so evidence can be collected automatically.

- Framework Coverage: Map required frameworks clearly; Centraleyes supports a broad range, but custom frameworks can be configured as well.

- Governance and Oversight: Keep human review in the loop for exceptions and strategic decisions.

- Regulatory Context: External auditors and regulators increasingly welcome AI-assisted evidence handling, but human attestation remains essential.
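Checking integration depth can start as a simple set comparison between the systems in audit scope and the systems a connector can reach. Whatever is left over stays on manual evidence collection. The system names below are hypothetical.

```python
def coverage_plan(in_scope, connectors):
    """Split in-scope systems into automated vs. manual evidence collection."""
    automated = sorted(in_scope & connectors)
    manual = sorted(in_scope - connectors)
    pct = round(100 * len(automated) / len(in_scope), 1) if in_scope else 0.0
    return {"automated": automated, "manual": manual, "coverage_pct": pct}

plan = coverage_plan(
    in_scope={"cloud-account", "code-repo", "identity-provider", "legacy-mainframe"},
    connectors={"cloud-account", "code-repo", "identity-provider", "chat"},
)
print(plan)
# {'automated': ['cloud-account', 'code-repo', 'identity-provider'], 'manual': ['legacy-mainframe'], 'coverage_pct': 75.0}
```

Connectors for systems outside the audit scope (here, `chat`) do not count toward coverage.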

The Future: Always-Ready Audits

As regulatory expectations grow and digital environments expand, periodic, manual audits are becoming unsustainable. AI compliance procedural audits offer a path to continuous assurance.

Centraleyes exemplifies this future: AI agents running audit and compliance checks, evidence repositories that update automatically, and audit-ready reports available on demand.

Final Word

By automating evidence gathering and procedural checks, platforms like Centraleyes help organizations keep pace with cybersecurity demands and regulatory complexity without adding headcount or sacrificing accuracy.

For teams juggling multiple frameworks, tight deadlines, and complex environments, AI compliance auditing represents a fundamental step forward. It blends the rigor of traditional audits with the speed and intelligence of modern technology.

FAQs

Can AI-powered auditing work in environments with legacy or partially manual systems?

Yes, but it typically starts with hybrid approaches. Many teams use AI for the systems that can be instrumented automatically, while maintaining manual checks for older infrastructure. Over time, they expand coverage as connectors and processes mature.

What happens if the AI classifies evidence incorrectly?

Mature systems flag uncertain classifications for human review rather than making assumptions. This is a common setup: AI handles the bulk of structured evidence, while compliance teams review exceptions and edge cases.
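Flagging uncertain classifications usually comes down to a confidence threshold. Here is a minimal sketch, assuming the classifier emits a score between 0 and 1 and that 0.85 is an acceptable cut-off. Both the threshold and the IDs are hypothetical, and in practice the threshold would be tuned against human-reviewed samples.

```python
def route(classifications, threshold=0.85):
    """Auto-accept confident classifications; queue the rest for human review."""
    accepted, review = [], []
    for item_id, control, confidence in classifications:
        target = accepted if confidence >= threshold else review
        target.append((item_id, control))
    return accepted, review

accepted, review = route([("ev-1", "AC-MFA", 0.97), ("ev-2", "BC-BACKUP", 0.62)])
print(accepted)  # [('ev-1', 'AC-MFA')]
print(review)    # [('ev-2', 'BC-BACKUP')]
```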

Is there a risk of ‘over-reliance’ on AI during audits?

Yes, if organizations switch off human judgment entirely. Most discussions in practitioner forums stress maintaining governance layers, periodic spot checks, and clear accountability for final sign-offs.
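Periodic spot checks can be made systematic by re-testing a random sample of results that automation marked as passing. The sketch assumes a 5% sampling rate with a floor of five items; both numbers are hypothetical. Seeding the generator lets an auditor reproduce exactly which items were drawn.

```python
import math
import random

def spot_check_sample(passed_ids, rate=0.05, minimum=5, seed=None):
    """Pick a random subset of automatically passed results for human re-testing."""
    ids = sorted(passed_ids)  # fixed order, so a given seed always draws the same items
    k = min(len(ids), max(minimum, math.ceil(rate * len(ids))))
    return sorted(random.Random(seed).sample(ids, k))

population = [f"ctl-{i:03d}" for i in range(200)]
sample = spot_check_sample(population, seed=2025)
print(len(sample))  # 10
```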

The post AI-Powered Compliance Audits: Boosting Cybersecurity & Efficiency appeared first on Centraleyes.

*** This is a Security Bloggers Network syndicated blog from Centraleyes authored by Rebecca Kappel. Read the original post at: https://www.centraleyes.com/ai-powered-compliance-audits/
