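Modern data centers face a machine identity management crisis: traditional static API keys and service accounts can no longer meet the scale demands of AI and microservices. Short-lived credentials, OAuth 2.0, JWTs, and X.509 certificates, combined with a service mesh, provide a dynamic security approach that automates authentication and authorization and supports a developer-friendly architectural transition. 2025-10-14 15:25:47 Author: securityboulevard.com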

Understanding the Credential Management Crisis

Walk into a modern data center. Servers hum quietly while processing millions of transactions. AI agents make split-second decisions. Microservices communicate across continents. Yet beneath this technological sophistication lies a troubling reality: most organizations still manage machine credentials the same way they did a decade ago.

Security leaders face a mounting crisis. API keys multiply like weeds across code repositories. Service accounts accumulate privileges they no longer need. Credentials remain unchanged for months or years. This approach worked when companies had dozens of systems. It breaks down completely when organizations operate thousands of AI agents and microservices.

The root problem runs deeper than poor hygiene. We built our credential systems around human workflows. Humans log in once per day. They perform predictable tasks. They work during business hours. Machines operate nothing like this. An AI agent might authenticate hundreds of times per minute. It switches between tasks in milliseconds. It never sleeps.

During the development of GrackerAI and LogicBalls, this mismatch became painfully clear. Our AI systems needed to access customer data, process security alerts, and coordinate with external services. Each integration required credentials. Traditional API keys created bottlenecks because they required manual rotation and careful privilege management. We realized that human-centric credential models simply cannot support machine-scale operations.

What happens when an API key gets compromised? With traditional systems, that key might provide access for months before anyone notices the breach. The attacker can explore your systems, escalate privileges, and exfiltrate data. By the time you detect the compromise, significant damage has occurred. This delayed detection happens because static credentials provide no behavioral context. You know someone used the key, but you cannot tell if that someone was your legitimate service or an attacker.

The Mathematics of Short-Lived Credentials

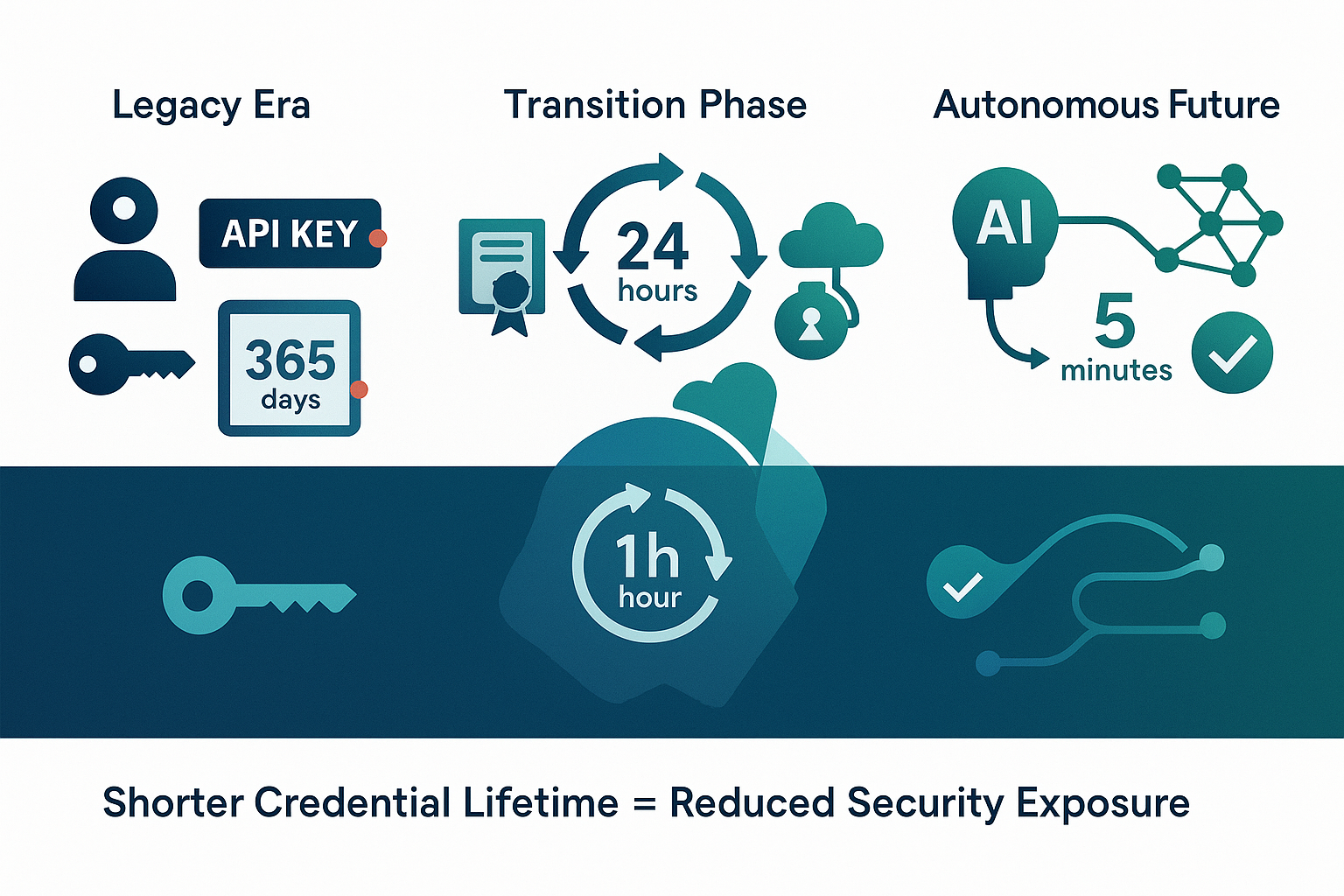

The solution lies in completely rethinking how credentials work. Instead of issuing keys that last for months, we need credentials that expire in minutes. This shift requires understanding the mathematical relationship between credential lifetime and security risk.

Consider this simple calculation. If you rotate credentials every 30 days, a compromised key provides up to 30 days of unauthorized access. Rotate every hour, and the worst-case exposure window drops to one hour. Rotate every 5 minutes, and the window becomes almost negligible. The relationship is linear: shorter credential lifetimes directly reduce your maximum exposure to credential compromise.
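The arithmetic can be sketched in a few lines. This is a minimal illustration that assumes the worst case, where a credential is stolen right after it is issued and is never revoked early, so the exposure window equals the full lifetime. The function name is illustrative, not part of any library.

```python
from datetime import timedelta


def worst_case_exposure(lifetime: timedelta) -> timedelta:
    """Upper bound on how long a stolen credential stays usable.

    Assumes the credential is stolen immediately after issuance and is
    never revoked early, so it remains valid for its whole lifetime.
    """
    return lifetime


lifetimes = {
    "30 days": timedelta(days=30),
    "1 hour": timedelta(hours=1),
    "5 minutes": timedelta(minutes=5),
}

baseline = worst_case_exposure(lifetimes["30 days"])
for label, lifetime in lifetimes.items():
    exposure = worst_case_exposure(lifetime)
    ratio = baseline / exposure  # how many times shorter than the 30-day window
    print(f"{label:>9}: exposure {exposure}, {ratio:,.0f}x shorter than 30 days")
```

Moving from 30-day to 1-hour rotation shrinks the worst-case window by a factor of 720, and moving to 5-minute rotation shrinks it by a factor of 8,640.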

But short-lived credentials create new challenges. You need systems that can issue, validate, and rotate credentials faster than they expire. You need applications that can handle credential refresh without interrupting operations. Most importantly, you need infrastructure that can operate these processes automatically without human intervention.

OAuth 2.0's Client Credentials flow provides the foundation for this approach. Think of it as a vending machine for access tokens. Your application presents its credentials to an authorization server. The server validates the request and returns a short-lived access token. The application uses this token to access protected resources. When the token expires, the process repeats automatically.
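The refresh loop described here might look roughly like the sketch below. It is not a complete OAuth client: the `TokenCache` class, the `refresh_margin` parameter, and the injected `fetch_token` callable are assumptions made for illustration. In a real client, `fetch_token` would send a `grant_type=client_credentials` request to the authorization server's token endpoint. Here it is replaced with a fake so the example runs without a network.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AccessToken:
    value: str
    expires_at: float  # seconds, measured on the same clock the cache uses


class TokenCache:
    """Caches an access token and fetches a new one shortly before expiry."""

    def __init__(
        self,
        fetch_token: Callable[[], tuple[str, float]],
        refresh_margin: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        # fetch_token returns (access_token, expires_in_seconds).
        self._fetch_token = fetch_token
        self._refresh_margin = refresh_margin
        self._clock = clock
        self._token: Optional[AccessToken] = None

    def get(self) -> str:
        now = self._clock()
        expiring = (
            self._token is None
            or now >= self._token.expires_at - self._refresh_margin
        )
        if expiring:
            value, expires_in = self._fetch_token()
            self._token = AccessToken(value, now + expires_in)
        return self._token.value


# Demonstration with a fake authorization server and a fake clock.
issued: list[str] = []
fake_now = [0.0]


def fake_fetch() -> tuple[str, float]:
    issued.append(f"token-{len(issued) + 1}")
    return issued[-1], 300.0  # hypothetical 5-minute lifetime


cache = TokenCache(fake_fetch, refresh_margin=30.0, clock=lambda: fake_now[0])
first = cache.get()    # nothing cached yet, so a token is fetched
fake_now[0] = 100.0
second = cache.get()   # still well inside the lifetime, cached token reused
fake_now[0] = 280.0
third = cache.get()    # within 30 s of expiry, so a new token is fetched
print(first, second, third)
```

Refreshing slightly before expiry, rather than waiting for a request to fail, is one way to keep token rotation invisible to the calling code.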

JSON Web Tokens (JWTs) make this process efficient and secure. A JWT contains cryptographically signed claims about what the bearer can do. The receiving service can validate these claims without contacting the authorization server. This reduces network traffic and improves performance. More importantly, JWTs can include temporal constraints that automatically limit their validity period.
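To make local validation concrete, here is a minimal sketch that signs and verifies a compact JWT using only the Python standard library. It uses HS256, a shared-secret algorithm, purely to stay self-contained. Production systems often prefer asymmetric algorithms so that resource services hold only a public key, and they normally rely on a maintained JWT library rather than hand-written code. The key, the claim values, and the function names are illustrative assumptions.

```python
import base64
import hashlib
import hmac
import json


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: dict, key: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def verify_hs256(token: str, key: bytes, now: float) -> dict:
    """Return the claims if the signature is valid and the token is unexpired."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":  # refuse any algorithm we did not expect
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or now >= exp:
        raise ValueError("token expired or missing exp")
    return claims


key = b"demo-shared-secret"  # hypothetical key, for illustration only
issued_at = 1_700_000_000
token = sign_hs256(
    {"sub": "billing-service", "scope": "invoices:read", "exp": issued_at + 300},
    key,
)

claims = verify_hs256(token, key, now=issued_at + 60)
print(claims["sub"], claims["scope"])

try:
    verify_hs256(token, key, now=issued_at + 301)
except ValueError as err:
    print("rejected:", err)
```

The verifier never contacts the issuer: the signature check and the `exp` comparison both happen locally, which is what makes a 5-minute lifetime cheap to enforce.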

Here is where most implementations go wrong. Many organizations treat short-lived tokens as a compliance checkbox rather than a fundamental architectural component. They implement 24-hour token lifetimes instead of 5-minute lifetimes. They skip automatic rotation mechanisms. They fail to implement behavioral monitoring that can detect unusual token usage patterns.

Effective short-lived credential systems require rethinking your entire approach to authorization. Instead of asking "does this service have permission to access this resource," you ask "does this service have permission to access this resource right now, given current conditions." The difference matters enormously for security and operational flexibility.

X.509 Certificates as Identity Foundation

While developers focus on JWTs and OAuth flows, X.509 certificates quietly handle the most critical machine identity functions. Every HTTPS connection depends on certificates. Every container registry uses certificate-based authentication. Every service mesh relies on certificate validation. Understanding certificates is essential for building robust machine identity systems.

Think of X.509 certificates as digital driver's licenses for machines. Like a driver's license, a certificate contains identity information about the holder. It includes the machine's name, organizational affiliation, and authorized capabilities. Most importantly, it contains the holder's public key and carries the issuing certificate authority's signature. The signature lets others verify that the certificate is authentic, and the public key lets them verify that the presenter holds the matching private key.

Certificates provide several advantages over other credential types. First, they bind identity to cryptographic keys rather than shared secrets. This means you cannot simply copy a certificate to gain unauthorized access. You need both the certificate and its corresponding private key. This binding makes certificate theft significantly more difficult than API key theft.

Second, certificates support hierarchical trust models through certificate chains. Your organization's root certificate authority can issue intermediate certificates to different departments. Each department can then issue specific certificates to their applications. This hierarchy enables fine-grained access control while maintaining centralized trust management.

Third, certificates include built-in expiration and revocation mechanisms. Unlike API keys that might live forever, certificates have explicit expiration dates. Certificate authorities can also publish revocation information, through certificate revocation lists (CRLs) or OCSP, that invalidates compromised certificates before they expire, provided relying parties actually check it. This provides both automatic cleanup and emergency response capabilities.

The key insight from scaling identity systems is that certificates work best when they live for hours, not years. Traditional certificate management treated certificates like precious resources that required careful protection and infrequent rotation. Modern workload identity treats certificates as disposable tokens that get replaced continuously.

Implementing short-lived certificates requires automated certificate lifecycle management. Your systems need to request new certificates before current ones expire. They need to handle certificate rollover without service interruption. They need to validate certificate chains and check revocation status. This automation removes human error from certificate management while enabling much shorter certificate lifetimes.

Consider how this works in practice. When your AI agent starts up, it requests a certificate from your certificate authority. The certificate contains the agent's identity and authorized capabilities. The certificate expires in one hour. Before expiration, the agent automatically requests a new certificate. If the agent behaves suspiciously, you can add its current certificate to the revocation list, immediately cutting off its access.
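The timing logic in this scenario can be sketched as follows. The example does not talk to a real certificate authority and does not parse real X.509 data. `CertInfo` is a hypothetical stand-in for the few fields that matter here. Renewing once two thirds of the lifetime has elapsed is an assumed policy chosen for illustration, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class CertInfo:
    serial: str
    not_before: datetime
    not_after: datetime


def renew_at(cert: CertInfo) -> datetime:
    """Time at which the workload should request a replacement certificate.

    Assumed policy: renew when one third of the lifetime remains, leaving
    room to retry if the certificate authority is briefly unavailable.
    """
    lifetime = cert.not_after - cert.not_before
    return cert.not_after - lifetime / 3


def is_usable(cert: CertInfo, now: datetime, revoked_serials: set[str]) -> bool:
    """A certificate is usable if it is inside its validity window and not revoked."""
    in_window = cert.not_before <= now < cert.not_after
    return in_window and cert.serial not in revoked_serials


issued = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
cert = CertInfo(serial="01", not_before=issued, not_after=issued + timedelta(hours=1))

print("renew at:", renew_at(cert))

half_past = issued + timedelta(minutes=30)
print(is_usable(cert, half_past, revoked_serials=set()))    # valid and not revoked
print(is_usable(cert, half_past, revoked_serials={"01"}))   # revoked mid-lifetime
print(is_usable(cert, issued + timedelta(hours=1), set()))  # expired
```

With a one-hour certificate, this policy schedules renewal 40 minutes after issuance, and revocation only has to cover the remainder of that hour.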

Service Mesh as Your Digital Immune System

Service mesh technology represents one of the most significant advances in machine identity management. While most people think of service meshes as traffic management tools, they actually function as comprehensive identity enforcement platforms. Understanding this distinction helps you leverage service mesh capabilities for security rather than just performance.

A service mesh creates a dedicated infrastructure layer that handles all communication between services. Every service gets a lightweight proxy that intercepts inbound and outbound connections. These proxies handle authentication, authorization, encryption, and monitoring for every interaction. This design removes security responsibilities from application code and centralizes them in the infrastructure layer.

The identity benefits of this approach are substantial. First, service mesh can automatically issue and rotate TLS certificates for every workload. Your applications never handle certificate management directly. The mesh infrastructure ensures that every connection uses fresh certificates and strong encryption. This eliminates entire categories of security misconfigurations that plague traditional deployments.

Second, service mesh enables zero-trust networking at the infrastructure level. Traditional networks rely on perimeter security. Once inside the network, services can communicate freely. Service mesh treats every connection as potentially hostile. Each service must prove its identity before receiving access to any other service. This prevents lateral movement attacks that have become common in modern breaches.

Third, service mesh provides cryptographic proof of every machine-to-machine interaction. Traditional network monitoring can tell you that traffic flowed between services. Service mesh monitoring can tell you which specific workloads communicated, what data they exchanged, and whether the interaction was authorized. This visibility is essential for compliance and incident response.

Think of service mesh as your organization's digital immune system. Just as your biological immune system identifies and neutralizes threats while allowing legitimate interactions, service mesh automatically validates every machine interaction while blocking unauthorized communication. This happens without application awareness or developer intervention.

The implementation details matter enormously for security effectiveness. Many organizations deploy service mesh for performance benefits but fail to enable its security features. They skip mutual TLS authentication between services. They use permissive authorization policies that allow all traffic by default. They neglect to monitor the rich security telemetry that service mesh provides.

Effective service mesh security requires treating the mesh as a security platform rather than just a networking tool. You need explicit authorization policies that define which services can communicate. You need automated certificate lifecycle management that rotates credentials frequently. You need monitoring systems that can detect unusual communication patterns and unauthorized access attempts.

Consider how this transforms your security posture. Without service mesh, a compromised application can potentially access any other service on your network. With properly configured service mesh, that same compromised application can only access services that you explicitly authorized. Even then, all interactions are encrypted, authenticated, and logged. The blast radius of any compromise becomes much smaller and more manageable.
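The difference between "allow all by default" and "explicitly authorized" can be shown with a small default-deny check. This is a conceptual sketch in plain Python, not the configuration format of any particular mesh. The workload identities and the `AllowRule` structure are hypothetical.

```python
from typing import NamedTuple


class AllowRule(NamedTuple):
    source: str         # identity of the calling workload
    destination: str    # identity of the workload being called
    methods: frozenset  # HTTP methods the caller may use


# Hypothetical policy: anything not listed here is denied.
RULES = [
    AllowRule(
        "spiffe://example.org/orders",
        "spiffe://example.org/payments",
        frozenset({"POST"}),
    ),
    AllowRule(
        "spiffe://example.org/dashboard",
        "spiffe://example.org/orders",
        frozenset({"GET"}),
    ),
]


def is_allowed(source: str, destination: str, method: str, rules=RULES) -> bool:
    """Default deny: a request passes only if some rule explicitly allows it."""
    return any(
        rule.source == source
        and rule.destination == destination
        and method in rule.methods
        for rule in rules
    )


# A compromised dashboard can still read orders, but cannot reach payments.
print(is_allowed("spiffe://example.org/dashboard", "spiffe://example.org/orders", "GET"))
print(is_allowed("spiffe://example.org/dashboard", "spiffe://example.org/payments", "POST"))
```

In a real mesh, the source identity would come from the peer's verified certificate during mutual TLS rather than from a string the caller supplies.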

Making Security Easy for Developers

The most sophisticated security architecture fails if developers cannot use it effectively. Machine identity systems must integrate seamlessly with development workflows and application frameworks. This requires understanding how developers actually build and deploy applications rather than how security teams think they should build them.

Modern development frameworks have evolved to handle authentication and authorization as built-in capabilities rather than custom implementations. Spring Security for Java applications provides automatic OAuth 2.0 and JWT handling with minimal configuration. Passport.js simplifies authentication flows for Node.js applications. Authlib provides comprehensive authentication tools for Python services. These frameworks handle the complex cryptographic operations while exposing simple programming interfaces.

The key insight is that developers will choose the easiest path to solve their problems. If secure authentication requires writing custom cryptographic code, developers will avoid it or implement it incorrectly. If secure authentication works through simple configuration changes, developers will adopt it readily. The goal is making secure practices the default choice rather than an additional burden.

This principle applies throughout the machine identity stack. Certificate management should happen automatically through infrastructure tooling rather than manual developer processes. Token refresh should occur transparently within application frameworks. Authorization policies should be expressed through declarative configuration rather than imperative code.

Consider how this works with OAuth 2.0 integration. Traditional implementations required developers to handle token requests, validation, refresh cycles, and error handling manually. Modern frameworks abstract these details behind simple annotations or configuration settings. Developers declare that a service requires authentication, and the framework handles all implementation details automatically.
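The declarative style described here can be imitated with a small decorator. This is a sketch of the pattern only, not the API of Spring Security, Passport.js, Authlib, or any other framework. `requires_scope` and `Forbidden` are hypothetical names, and the example assumes the token's claims have already been validated upstream.

```python
import functools
from typing import Callable


class Forbidden(Exception):
    """Raised when the caller's token lacks a required scope."""


def requires_scope(scope: str) -> Callable:
    """Declare the scope a handler needs; the wrapper enforces it."""

    def decorator(handler: Callable) -> Callable:
        @functools.wraps(handler)
        def wrapper(claims: dict, *args, **kwargs):
            # OAuth 2.0 scopes are conventionally a space-delimited string.
            granted = set(claims.get("scope", "").split())
            if scope not in granted:
                raise Forbidden(f"missing scope: {scope}")
            return handler(claims, *args, **kwargs)

        return wrapper

    return decorator


@requires_scope("alerts:read")
def list_alerts(claims: dict) -> list[str]:
    # Business logic only; no token handling in the handler itself.
    return [f"alert visible to {claims['sub']}"]


print(list_alerts({"sub": "triage-agent", "scope": "alerts:read alerts:ack"}))

try:
    list_alerts({"sub": "report-agent", "scope": "reports:read"})
except Forbidden as err:
    print("denied:", err)
```

The handler body contains no authorization code, which is the property that makes the secure path the easy one.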

The same pattern applies to certificate-based authentication. Instead of requiring developers to generate certificate signing requests, manage private keys, and handle certificate validation, modern workload identity systems provide these capabilities through platform services. Developers simply declare their service's identity requirements, and the platform ensures appropriate certificates are available and current.

Testing and debugging also become simpler with proper abstraction. Developers can test authentication flows locally without needing production certificates or authorization servers. They can simulate various authorization scenarios through configuration changes rather than complex test setups. This reduces the friction of building and testing secure applications.

Documentation and training materials must reflect this approach. Instead of explaining cryptographic protocols and certificate formats, focus on showing developers how to use framework capabilities effectively. Provide working examples that solve common problems. Create templates that new projects can adopt immediately. The goal is enabling developers to build secure applications without becoming security experts themselves.

Building Your Implementation Roadmap

Transforming your organization's credential management requires a systematic approach that builds capabilities incrementally while maintaining operational stability. Rushing this transformation can create security gaps or operational disruptions that undermine the entire effort. Success depends on careful planning, pilot testing, and gradual rollout.

The foundation phase focuses on understanding your current state and identifying improvement opportunities. Begin by cataloging every credential type currently in use across your organization. This includes API keys stored in configuration files, service account passwords, application certificates, and shared secrets. Map how each credential connects to business systems and data flows. Identify which credentials have never been rotated, which systems share credentials, and which accounts have excessive privileges.

This inventory process often reveals surprising information. Most organizations discover they have significantly more credentials than expected. Many find credentials that provide access to systems that no longer exist. Some uncover shared passwords that have remained unchanged for years. This baseline assessment provides the foundation for all subsequent improvements.

Risk assessment follows credential inventory. Not all credentials carry equal risk to your organization. An API key that provides read-only access to public data presents different risks than a certificate that grants administrative access to customer databases. Focus your initial efforts on the highest-risk credentials that would cause the most damage if compromised.
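One way to turn the inventory into a prioritized list is a simple scoring pass. The sketch below is illustrative only: the `Credential` fields, the weights, and the sample records are all made up for the example. A real assessment would weigh factors specific to your environment.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Credential:
    name: str
    privilege: str                # "read", "write", or "admin"
    last_rotated: Optional[date]  # None means it has never been rotated
    shared_by: int                # number of systems using this credential


# Hypothetical weights; tune them to your own risk model.
PRIVILEGE_WEIGHT = {"read": 1, "write": 3, "admin": 5}


def risk_score(cred: Credential, today: date) -> int:
    if cred.last_rotated is None:
        age_points = 5  # never rotated is treated as the worst case
    else:
        age_days = (today - cred.last_rotated).days
        age_points = min(age_days // 90, 4)  # one point per 90 days, capped
    sharing_points = min(cred.shared_by - 1, 3)  # each extra consumer adds risk
    return PRIVILEGE_WEIGHT[cred.privilege] + age_points + sharing_points


today = date(2025, 10, 14)
inventory = [  # made-up sample records
    Credential("public-data-api-key", "read", date(2025, 9, 1), shared_by=1),
    Credential("customer-db-cert", "admin", None, shared_by=1),
    Credential("batch-service-password", "write", date(2024, 1, 15), shared_by=2),
]

ranked = sorted(inventory, key=lambda c: risk_score(c, today), reverse=True)
for cred in ranked:
    print(f"{risk_score(cred, today):>2}  {cred.name}")
```

The never-rotated administrative certificate ranks first, which matches the guidance to start with the credentials that would cause the most damage if compromised.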

During this assessment phase, also evaluate your current development and deployment processes. Understanding how developers currently handle credentials helps you design solutions that fit existing workflows rather than requiring complete process changes. The most secure solution that developers cannot adopt will fail to improve your security posture.

The pilot phase implements short-lived credentials for a carefully selected subset of your systems. Choose pilot systems that currently use high-risk static credentials but do not impact critical business operations if something goes wrong. This allows you to refine your processes and build confidence before tackling mission-critical systems.

Start by implementing OAuth 2.0 token-based authentication for one internal API. Configure token lifetimes of 15 minutes initially, then gradually reduce to 5 minutes as you gain operational experience. Measure the performance impact of frequent token refresh. Monitor for authentication failures that might indicate configuration problems. Document the operational procedures needed to support short-lived credentials.

Simultaneously, deploy certificate-based authentication for internal service communication. Begin with development environments where you can experiment freely. Implement automated certificate lifecycle management that rotates certificates every 24 hours initially, then every hour as your processes mature. Test certificate revocation procedures to ensure you can quickly cut off compromised credentials.

Performance monitoring during the pilot phase is crucial. Short-lived credentials create additional network traffic for token refresh and certificate validation. Authorization servers experience higher load from frequent token requests. These impacts need measurement and optimization before broad deployment.

The scaling phase extends short-lived credentials to all new workloads while gradually migrating existing systems. Create standard templates that new projects can adopt immediately. Establish automated testing that validates credential handling in all applications. Build monitoring systems that can detect credential-related failures and security anomalies.

Developer experience becomes critical during scaling. Create documentation and training materials that help development teams adopt new credential patterns. Provide working examples for common frameworks and languages. Establish support processes that can help teams troubleshoot authentication problems quickly.

The evolution phase adds advanced capabilities like behavioral analytics and adaptive authorization policies. These features build on the foundation of short-lived credentials to provide additional security benefits. Behavioral analytics can detect when credentials are used in unusual patterns that might indicate compromise. Adaptive policies can adjust authorization requirements based on current risk levels and operational context.

This phase also prepares for AI agent integration. AI agents present unique challenges for credential management because they operate autonomously and make decisions without human oversight. Your credential infrastructure needs to handle AI-specific requirements like contextual authorization and explainable access decisions.

Measuring Success and Continuous Improvement

Successful credential transformation produces measurable improvements across security, operational, and business metrics. Tracking these improvements helps justify the investment and identifies areas for continued optimization. Effective measurement requires establishing baseline metrics before implementation and monitoring changes throughout the transformation process.

Security metrics focus on credential-related incidents and exposure windows. Track the number of static credentials in your environment over time. This number should decrease steadily as you migrate to short-lived alternatives. Monitor credential rotation frequency to ensure automated processes are working correctly. Measure both time-to-detection and the exposure window for credential compromise incidents. Short-lived credentials do not make detection faster by themselves, but they should dramatically reduce the exposure window because compromised tokens expire quickly.

Operational metrics examine the efficiency and reliability of credential management processes. Monitor authentication failure rates to ensure short-lived credentials do not impact service availability. Track the time required to provision credentials for new services. Automated processes should make credential provisioning faster and more reliable than manual alternatives. Measure the operational overhead of credential management tasks like rotation and revocation.

Business metrics connect credential improvements to organizational outcomes. Calculate the cost savings from automated credential management compared to manual processes. Measure the time-to-market for new services that benefit from standardized credential patterns. Track compliance audit efficiency improvements that result from better credential visibility and control.

The most important metric is mean time to remediation for credential security incidents. Traditional static credentials might remain compromised for weeks or months before detection and remediation. Modern short-lived credentials should reduce this to hours or minutes because automatic expiration limits the window of exposure.
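Computing this metric is straightforward once incidents are recorded with timestamps. The sketch below assumes each incident record carries a `compromised` and a `remediated` time. The record layout and the two sample incidents are made up for illustration and are not real measurements.

```python
from datetime import datetime
from statistics import mean


def mean_time_to_remediation_hours(incidents: list[dict]) -> float:
    """Average hours between compromise and remediation across incidents."""
    return mean(
        (item["remediated"] - item["compromised"]).total_seconds() / 3600
        for item in incidents
    )


# Made-up sample records, used only to show the calculation.
sample_incidents = [
    {
        "compromised": datetime(2025, 1, 1, 0, 0),
        "remediated": datetime(2025, 1, 1, 2, 0),
    },
    {
        "compromised": datetime(2025, 2, 1, 9, 0),
        "remediated": datetime(2025, 2, 1, 13, 0),
    },
]

mttr = mean_time_to_remediation_hours(sample_incidents)
print(f"mean time to remediation: {mttr:.1f} hours")
```

Tracking the same figure before and after migration gives a baseline against which the effect of automatic expiration can be judged.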

Regular assessment and optimization ensure continued improvement. Credential requirements evolve as your organization adopts new technologies and business models. AI agents, edge computing, and cross-organizational integration create new credential challenges that require ongoing attention. Build processes that can adapt your credential infrastructure to meet emerging requirements.

The organizations that master this transformation will build competitive advantages through faster AI deployment, better security, and lower operational costs. They will create the foundation for autonomous systems that can operate securely at unprecedented scale. Most importantly, they will prepare for a future where machine identities outnumber human identities by orders of magnitude.

The transition from static to dynamic credential management represents more than a security improvement. It enables new business models where AI agents can operate autonomously while maintaining complete accountability and control. Companies that build this capability early will lead the autonomous enterprise era while their competitors struggle with credential management bottlenecks that limit their AI ambitions.

*** This is a Security Bloggers Network syndicated blog from Deepak Gupta | AI & Cybersecurity Innovation Leader | Founder's Journey from Code to Scale authored by Deepak Gupta - Tech Entrepreneur, Cybersecurity Author. Read the original post at: https://guptadeepak.com/beyond-passwords-and-api-keys-building-identity-infrastructure-for-the-autonomous-enterprise/
