2025-10-9 00:11:32 Author: securityboulevard.com

Understanding the AI Authorization Challenge

Imagine teaching a child to ride a bicycle. You want to give them enough freedom to learn and explore, but you also need guardrails to prevent serious accidents. This balance between autonomy and control captures the essence of authorizing AI agents in modern enterprises. Traditional security models assume predictable behavior patterns, but AI systems can surprise you in ways that no security manual anticipated.

During the development of GrackerAI's marketing automation agents, this challenge became deeply personal. Our AI agents needed to access customer databases, analyze sensitive marketing data, and make purchasing decisions on behalf of clients. Each task required different levels of access, and the agents sometimes combined these tasks in unexpected ways. Traditional role-based access control could not handle this complexity.

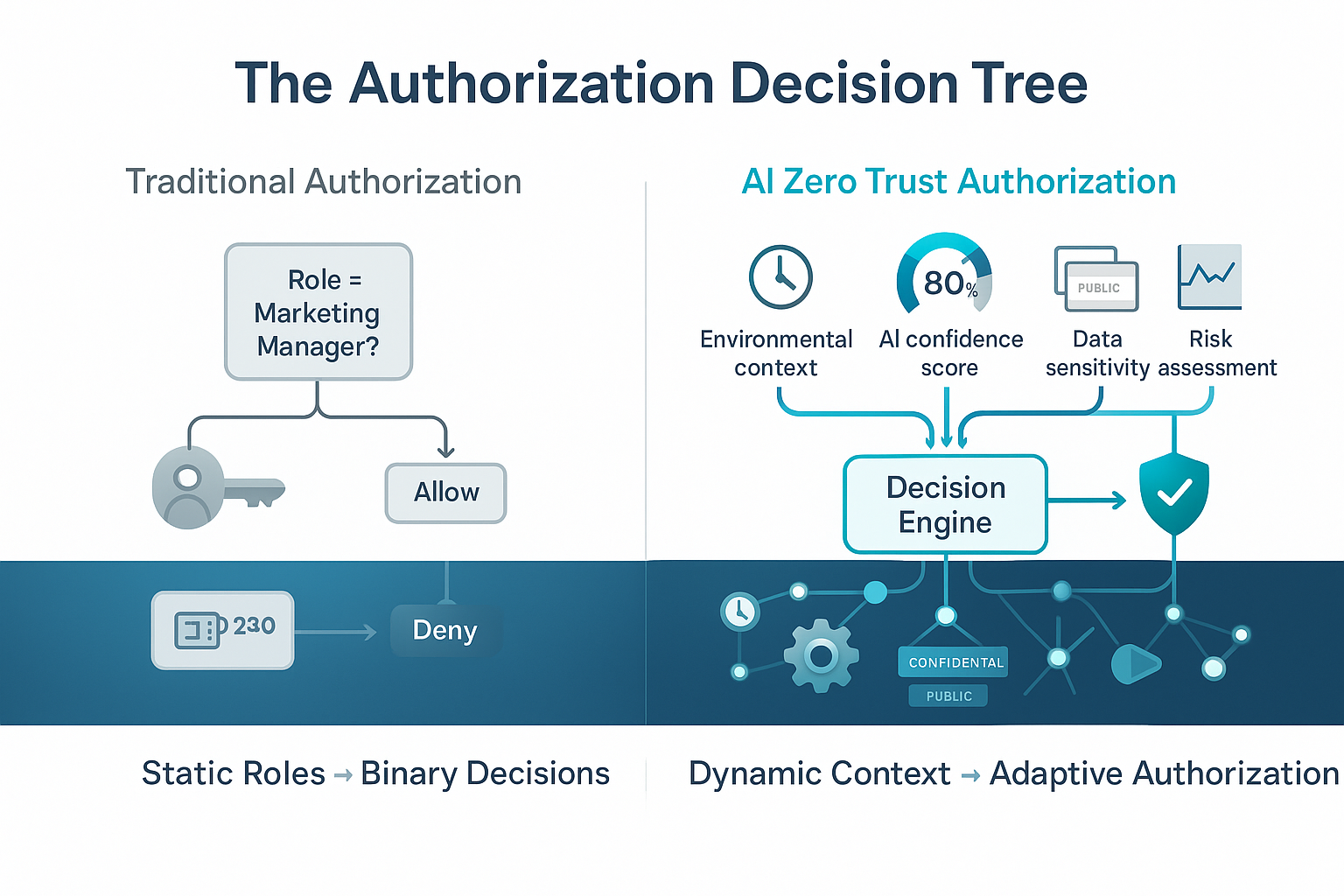

Think about how human authorization typically works in your organization. You assign someone a role like "Marketing Manager" and that role provides a fixed set of permissions. The person can access certain databases during business hours and approve marketing spend up to a specific limit. This model works because humans follow predictable patterns. They log in once per day, work during standard hours, and perform tasks in recognizable sequences.

AI agents operate fundamentally differently. An agent might authenticate hundreds of times per hour, switch between tasks in milliseconds, and combine activities in patterns that no human designed. The same agent that processes routine customer inquiries at 2 PM might need elevated privileges to handle a security incident at 2 AM. Static roles cannot adapt to this dynamic behavior.

The authorization paradox becomes clear when you consider emergent AI behavior. Machine learning systems can develop strategies that their creators never anticipated. An AI agent trained to optimize marketing campaigns might discover that accessing competitor pricing data improves its performance. This behavior could be beneficial or problematic, depending on your business policies and legal constraints. Traditional authorization systems cannot evaluate these emergent behaviors in real-time.

This challenge extends beyond technical considerations into business risk management. AI agents that operate autonomously can make decisions that significantly impact your organization. They might approve large purchases, modify customer data, or share sensitive information with external partners. Each decision carries potential consequences that traditional security models were not designed to handle.

Moving Beyond Static Roles to Dynamic Context

Traditional role-based access control works like a medieval guild system. You assign someone to a specific guild (role), and that guild membership determines what they can do throughout their career. This approach made sense when job functions remained stable for years and people worked in predictable patterns.

Attribute-based access control represents a fundamental shift in thinking. Instead of asking "what role does this person have," ABAC asks "what is the current context of this access request." This shift enables authorization decisions that consider multiple factors simultaneously rather than relying on a single role assignment.

Consider how this applies to AI agent authorization. An AI agent processing customer service requests needs different permissions based on numerous contextual factors. During normal business hours, it might access basic customer information and standard response templates. During a security incident, the same agent might need access to detailed audit logs and elevated escalation procedures. After hours, it might operate with reduced permissions that require human approval for sensitive actions.

Environmental context provides the foundation for dynamic authorization decisions. Time-based controls seem simple but offer powerful security benefits. An AI agent that processes financial data might receive full access during business hours but require additional approval for after-hours access. Location-based controls add another layer of protection. An agent running in your primary data center might receive different permissions than the same agent running in a disaster recovery facility.

Network conditions also influence authorization decisions. An AI agent operating on a secure internal network might receive broader permissions than one accessing resources through the public internet. These environmental factors combine to create a rich context that informs every authorization decision.
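Here is a minimal sketch of how these environmental attributes might combine into a single decision, in this case a request for financial data. The attribute names (`hour`, `site`, `network`), the 9:00 to 17:00 business-hours window, and the specific rules are all hypothetical. They were chosen only to mirror the examples in the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Environment:
    hour: int      # local hour of the request, 0-23
    site: str      # where the agent runs: "primary" or "dr" (disaster recovery)
    network: str   # where the request comes from: "internal" or "public"

def decide_financial_access(env: Environment) -> str:
    """Return "allow", "approval_required", or "deny" for a financial-data request."""
    if env.network != "internal":
        # Financial data is never served to requests from the public internet.
        return "deny"
    if env.site != "primary":
        # Same agent, different facility: fall back to human approval.
        return "approval_required"
    if 9 <= env.hour < 17:
        return "allow"
    # After hours: access is possible, but only with additional approval.
    return "approval_required"
```

The checks run from most to least restrictive, so the first rule that matches also explains the outcome. That keeps the policy easy to audit.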

AI agent state provides another crucial dimension for authorization decisions. Unlike humans, AI agents can expose their internal state for security evaluation. An agent's confidence score indicates how certain it is about its current decisions. An agent with a 95% confidence score might receive broader permissions than one operating at 70% confidence. Recent actions also matter. An agent that has been making accurate decisions for hours might receive more trust than one that recently triggered error conditions.

Data sensitivity classification adds regulatory and business context to authorization decisions. An AI agent accessing public marketing data needs different controls than one processing personally identifiable information or financial records. These classifications often carry legal requirements that must be enforced automatically rather than relying on human oversight.

Risk assessment provides the dynamic element that makes ABAC particularly powerful for AI authorization. Real-time threat intelligence can adjust authorization decisions based on current security conditions. If your security team detects unusual network activity, authorization policies can automatically require additional validation for sensitive operations. Behavioral analysis can identify when AI agents deviate from their normal patterns, triggering enhanced security measures.
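Real-time risk signals can be layered on top of an existing decision so that they only ever tighten it. In this sketch, the 0 to 3 threat-level scale, the `deviating` flag, and the set of sensitive operations are hypothetical inputs. A threat-intelligence feed and a behavioral-analysis system would supply them.

```python
from enum import IntEnum

class Decision(IntEnum):
    # Ordered from least to most restrictive, so max() picks the stricter outcome.
    ALLOW = 0
    STEP_UP = 1   # require additional validation, e.g. human approval
    DENY = 2

# Hypothetical operations that warrant extra scrutiny under elevated risk.
SENSITIVE_OPS = {"export_customer_data", "modify_billing"}

def apply_risk(base: Decision, operation: str, threat_level: int, deviating: bool) -> Decision:
    """Tighten a base decision using real-time risk signals; never loosen it.

    threat_level: assumed scale from 0 (normal) to 3 (active incident).
    deviating: True if behavioral analysis flags the agent as off its baseline.
    """
    risk = Decision.ALLOW
    if operation in SENSITIVE_OPS and (threat_level >= 2 or deviating):
        risk = Decision.STEP_UP
    if threat_level >= 3 and deviating:
        risk = Decision.DENY
    return max(base, risk)
```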

JWT Tokens as Portable Authorization Context

Understanding JSON Web Tokens requires thinking about them differently than traditional authentication tokens. Most people view JWTs as proof of identity, similar to showing an ID card. While JWTs can serve this function, their real power lies in carrying rich contextual information that enables sophisticated authorization decisions.

Think of a JWT as a digital passport that contains multiple types of information about the bearer. Like a physical passport, it includes identity information that proves who the bearer is. Unlike a physical passport, a JWT can also include current permissions, temporal constraints, and behavioral history. This additional context enables authorization decisions that adapt to changing conditions.

The structure of a JWT makes this flexibility possible. A JWT consists of three parts: a header that describes the token format, a payload that contains the actual claims, and a signature that proves the token's authenticity. The payload section can include any information that helps with authorization decisions.
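To make the three-part structure concrete, here is a dependency-free sketch that builds and verifies a compact JWT with only the Python standard library. It uses HMAC-SHA256 (`HS256`) purely so the example runs without third-party packages. The implementation guidance in this article calls for asymmetric signing, which in practice means a maintained JWT library and an algorithm such as `RS256`, `ES256`, or `EdDSA`. The claim values are hypothetical.

```python
import base64
import hashlib
import hmac
import json

def b64url_encode(data: bytes) -> str:
    # JWTs use unpadded base64url encoding (RFC 7515).
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def b64url_decode(segment: str) -> bytes:
    # Restore the padding that b64url_encode stripped.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))

def sign_jwt(claims: dict, key: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    # Part 1 (header) and part 2 (payload/claims), each JSON then base64url.
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    # Part 3: signature over "header.payload".
    sig = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url_encode(sig)}"

def verify_jwt(token: str, key: bytes) -> dict:
    header_b64, payload_b64, sig_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never let the token choose its own verification algorithm.
        raise ValueError("unexpected algorithm")
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    return json.loads(b64url_decode(payload_b64))
```

Only the signature makes the payload trustworthy. Anyone can base64-decode the first two parts, so tokens should not carry secrets.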

Dynamic permissions are one of the most powerful features of JWT-based authorization. Many traditional tokens are all-or-nothing: holding one grants a fixed level of access. A JWT instead carries an explicit permission list, and the authorization server builds that list when it issues the token, so the list can reflect current conditions. A signed token cannot change after issuance, which means the adaptation happens at the next token request. For example, an AI agent might receive a token with basic customer service permissions during normal operations. While security alerts are active, a newly issued token could add incident response permissions.

Temporal constraints add time-based security to authorization decisions. A JWT can include "not before" (nbf) and "expiration time" (exp) claims that automatically limit when the token is valid. For AI agents, this enables fine-grained temporal control. An agent processing batch jobs might receive tokens that are valid only during maintenance windows. An agent handling customer inquiries might receive tokens that expire when business hours end.
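RFC 7519 registers these as the `nbf` and `exp` claims, both expressed in seconds since the Unix epoch. Below is a minimal validation sketch that assumes the signature has already been checked. The 30-second leeway for clock skew is an illustrative choice, not a standard value.

```python
import time
from typing import Optional

def check_time_claims(claims: dict, now: Optional[float] = None, leeway: int = 30) -> None:
    """Raise ValueError unless the token is inside its nbf/exp validity window."""
    now = time.time() if now is None else now
    if "exp" not in claims:
        # Refuse tokens with no expiry at all.
        raise ValueError("token has no exp claim")
    if now >= claims["exp"] + leeway:
        raise ValueError("token expired")
    if "nbf" in claims and now < claims["nbf"] - leeway:
        raise ValueError("token not yet valid")
```

In the maintenance-window example, the issuer would set `nbf` to the window's start and `exp` to its end. The same check then enforces both boundaries.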

Behavioral signatures provide cryptographic evidence of authorized actions. When an AI agent performs a sensitive operation, it can sign a record of that operation and include the signature in its next token request. The signature proves that the holder of the agent's key vouched for completing the previous action. Whether the action actually followed policy must still be checked against the signed record. Subsequent authorization decisions can then weigh this behavioral history when evaluating new requests.

Audit trails become much easier to build when authorization information is embedded in JWTs. Each token records which permissions were granted, when, and under what conditions. Retaining the issued tokens therefore yields an audit trail of authorization decisions. Traditional audit logs can be modified without detection, but each JWT is cryptographically signed, so any alteration is evident. Deleting a token from storage is a separate risk, which must be detected by other means such as append-only log storage.
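Signatures make each token tamper-evident on its own, but they do not reveal a record that was silently removed from the log. One common complement is a hash chain, shown here as a hypothetical sketch. Each audit entry is linked to the hash of the entry before it, so editing, deleting, or reordering an entry breaks verification. The entry fields are illustrative.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def record_hash(prev_hash: str, entry: dict) -> str:
    # Canonical JSON (sorted keys, no spaces) so identical entries hash identically.
    body = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode()).hexdigest()

def append(log: list, entry: dict) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"entry": entry, "hash": record_hash(prev, entry)})

def verify_chain(log: list) -> bool:
    prev = GENESIS
    for rec in log:
        if rec["hash"] != record_hash(prev, rec["entry"]):
            return False
        prev = rec["hash"]
    return True
```

Chaining catches changes inside the log. It does not catch truncation of the newest entries unless the latest hash is also published or stored somewhere the log's operator cannot rewrite.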

Implementing JWT-based authorization requires careful attention to security best practices. Token signing must use asymmetric cryptography. Resource servers can then validate tokens with the authorization server's public key, and no shared secret exists that would let them mint tokens themselves. Token lifetimes should be short enough to limit exposure from compromised tokens but long enough to avoid the overhead of constant refresh cycles.
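On the agent side, the lifetime trade-off is often handled by refreshing slightly before expiry. The sketch below caches a token and fetches a new one once less than a fixed margin of its lifetime remains. `fetch_token` is a hypothetical callable standing in for a real token-endpoint request, and the 60-second margin is an assumption.

```python
import time
from typing import Callable, Optional, Tuple

class TokenCache:
    """Reuse a token until it is close to expiry, then fetch a fresh one."""

    def __init__(self, fetch_token: Callable[[], Tuple[str, float]],
                 margin: float = 60.0, clock: Callable[[], float] = time.time):
        # fetch_token returns (token, absolute expiry as a Unix timestamp).
        self._fetch = fetch_token
        self._margin = margin
        self._clock = clock
        self._token: Optional[str] = None
        self._exp = 0.0

    def get(self) -> str:
        # Refresh early so a token never expires mid-request.
        if self._token is None or self._clock() >= self._exp - self._margin:
            self._token, self._exp = self._fetch()
        return self._token
```

Injecting the clock keeps the refresh logic testable without waiting for real tokens to expire.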

OpenID Connect for Machine Workloads

OpenID Connect was originally designed to solve human authentication problems, but its extension to machine workloads creates powerful new capabilities for AI agent authorization. Understanding this extension requires recognizing how machine authentication differs from human authentication while building on the same foundational protocols.

Human authentication typically involves interactive processes. A person enters credentials, possibly completes multi-factor authentication, and receives access to applications. This process assumes human intelligence can handle complex authentication flows and make security decisions about whether to trust various systems.

Machine authentication must be completely automated. An AI agent cannot complete interactive authentication flows or make security decisions about untrusted systems. However, machines can handle cryptographic operations that would be impractical for humans. This creates opportunities for more sophisticated authentication mechanisms that provide better security properties than human-centered approaches.

Federated AI identity enables AI agents to authenticate across organizational boundaries while maintaining security and accountability. Consider an AI agent that processes customer orders by coordinating with supplier systems. Traditional approaches would require manual integration work for each supplier relationship. Federated identity allows the agent to authenticate automatically with any supplier that supports the same identity standards.

Standard claims provide consistent identity attributes across every system that supports OpenID Connect. Unlike humans, who might have different usernames and attributes in various systems, AI agents can maintain consistent identity information through standardized claim formats. This consistency simplifies policy management and reduces integration complexity when AI agents work across multiple systems.

Discovery protocols enable automatic configuration for new AI agents. When you deploy a new agent, it can automatically discover available authorization servers, understand their capabilities, and configure itself appropriately. This automation reduces deployment complexity and ensures that new agents follow established security patterns without manual configuration.
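OpenID Connect Discovery 1.0 defines where this configuration lives: the issuer URL followed by `/.well-known/openid-configuration`. The sketch below builds that URL and extracts the endpoints an agent needs from a metadata document. To stay runnable offline, it parses a sample document instead of fetching one, and the `auth.example.com` issuer is a placeholder.

```python
import json

def discovery_url(issuer: str) -> str:
    # OIDC Discovery: drop any trailing slash, then append the well-known path.
    return issuer.rstrip("/") + "/.well-known/openid-configuration"

def parse_metadata(issuer: str, document: str) -> dict:
    meta = json.loads(document)
    # The returned issuer must exactly match the one used for discovery.
    if meta.get("issuer") != issuer:
        raise ValueError("issuer mismatch")
    return {k: meta[k] for k in ("token_endpoint", "jwks_uri")}

SAMPLE = json.dumps({
    "issuer": "https://auth.example.com",
    "token_endpoint": "https://auth.example.com/oauth2/token",
    "jwks_uri": "https://auth.example.com/jwks.json",
})
```

In a live deployment, the document would be fetched over HTTPS from `discovery_url(issuer)`, for example with `urllib.request.urlopen`, and then passed to `parse_metadata`.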

Token introspection (standardized for OAuth 2.0 in RFC 7662) provides real-time validation of AI agent permissions. Traditional systems validate permissions once at login. With introspection, a resource server can ask the authorization server whether a token is still active every time the token is presented. If an agent's permissions change due to policy updates or security conditions, those changes take effect on the next introspection call instead of waiting for the token to expire.
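Under RFC 7662, the resource server sends the token as a form-encoded POST and receives JSON whose required `active` field says whether the token is still valid. This sketch builds the request body and interprets a response. The scope names are hypothetical, and it omits the resource server's own authentication to the introspection endpoint, which the RFC requires in some form.

```python
import json
import time
from typing import Optional
from urllib.parse import urlencode

def introspection_body(token: str) -> bytes:
    # Form-encoded request body: the token plus an optional type hint.
    return urlencode({"token": token, "token_type_hint": "access_token"}).encode()

def is_allowed(response_json: str, required_scope: str, now: Optional[float] = None) -> bool:
    resp = json.loads(response_json)
    if not resp.get("active", False):
        # Revoked, expired, or unknown: treat as unauthenticated.
        return False
    now = time.time() if now is None else now
    if "exp" in resp and now >= resp["exp"]:
        return False
    # "scope" is a space-separated list of granted scopes.
    return required_scope in resp.get("scope", "").split()
```

Caching introspection responses reduces latency but delays revocation by the cache lifetime. That trade-off mirrors the token-lifetime question discussed earlier.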

The practical implementation of OpenID Connect for machines requires extending standard protocols to handle machine-specific requirements. Machine credentials need automatic rotation capabilities that human credentials do not require. Machine identity tokens need to include behavioral and contextual information that human tokens typically omit. Machine authorization flows need to handle high-frequency authentication requests that would overwhelm human-centered systems.

Practical Implementation Patterns for AI Authorization

Building effective AI authorization requires concrete patterns that you can implement and test in real environments. These patterns provide starting points for your implementation while allowing customization for your specific requirements. Understanding each pattern helps you choose the right approach for different types of AI agents and use cases.

The AI Agent Passport pattern treats each agent's authorization token as a comprehensive identity document. Just as a human passport contains identity information, visa stamps, and entry/exit records, an AI agent passport contains identity claims, capability grants, temporal restrictions, and behavioral history. This pattern works particularly well for AI agents that operate across multiple systems or organizational boundaries.

Identity claims in an AI agent passport include information like the agent's version number, the system that created it, and the business function it serves. These claims help other systems understand what type of agent they are authorizing and apply appropriate security policies. For example, a customer service agent might receive different treatment than a financial analysis agent, even when both request access to customer data.

Capability claims define what the agent is authorized to do rather than what systems it can access. This distinction matters because AI agents often combine multiple system interactions to complete single business functions. Traditional role-based systems grant access to specific applications or databases. Capability-based systems grant permission to perform business functions like "process customer orders" or "generate financial reports."
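At enforcement time, a capability grant must be expanded into the concrete operations it covers. In this hypothetical catalogue, each business capability maps to the system-level actions it needs. A request is allowed only if some granted capability includes it. All capability, system, and action names are illustrative.

```python
# Hypothetical catalogue: business function -> (system, action) pairs it may perform.
CAPABILITIES = {
    "process_customer_orders": {
        ("orders_db", "read"), ("orders_db", "write"),
        ("inventory_api", "reserve"), ("payments_api", "capture"),
    },
    "generate_financial_reports": {
        ("ledger_db", "read"), ("reports_bucket", "write"),
    },
}

def allowed_operations(granted: list) -> set:
    ops = set()
    for cap in granted:
        # Unknown capability names grant nothing.
        ops |= CAPABILITIES.get(cap, set())
    return ops

def is_permitted(granted: list, system: str, action: str) -> bool:
    return (system, action) in allowed_operations(granted)
```

Keeping the catalogue on the enforcement side means tokens stay small and stable, while the mapping from business function to systems can evolve centrally.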

Temporal claims add time-based restrictions that automatically limit when the agent can operate. These restrictions can be absolute (only during business hours) or relative (only for the next 30 minutes). Temporal claims enable sophisticated authorization policies that adapt to operational schedules and security requirements without manual intervention.

Behavioral claims provide information about how the agent should operate rather than just what it can do. These claims might include confidence thresholds that the agent must maintain, approval requirements for high-value decisions, or escalation procedures for unusual situations. Behavioral claims help ensure that AI agents operate within acceptable risk parameters even when they have broad technical capabilities.
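Taken together, the four claim groups could live in an ordinary JWT claims set. In this sketch, `iss`, `sub`, `iat`, and `exp` are registered JWT claims. `agent`, `capabilities`, `operating_hours_utc`, and `behavior` are hypothetical private claims, and every value (issuer URL, version, thresholds) is a placeholder. The helper shows one way a behavioral claim might be enforced.

```python
def build_passport(agent_id: str, now: int) -> dict:
    """Assemble a hypothetical AI Agent Passport as a JWT claims set."""
    return {
        # Identity claims.
        "iss": "https://auth.example.com",
        "sub": agent_id,
        "iat": now,
        "agent": {"version": "2.3.1", "created_by": "orders-platform",
                  "function": "customer_service"},
        # Capability claims: business functions, not systems.
        "capabilities": ["process_customer_orders"],
        # Temporal claims: a relative limit (30 minutes) and an absolute window.
        "exp": now + 1800,
        "operating_hours_utc": [8, 18],
        # Behavioral claims: how the agent must operate.
        "behavior": {"min_confidence": 0.9, "approval_over_usd": 5000},
    }

def needs_human_approval(passport: dict, amount_usd: float, confidence: float) -> bool:
    rules = passport["behavior"]
    return confidence < rules["min_confidence"] or amount_usd > rules["approval_over_usd"]
```

If passports cross organizational boundaries, private claim names like these should be replaced with collision-resistant names that all parties agree on.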

Dynamic Policy Evaluation represents a shift from asking "does this agent have permission" to asking "should this agent have permission right now." This pattern evaluates authorization requests against current conditions rather than static role assignments. The evaluation considers the agent's current state, environmental conditions, data sensitivity, and real-time risk assessment.

Consider this practical example. An AI agent requests permission to modify customer billing information. Static role-based authorization would either allow or deny this request based on the agent's assigned role. Dynamic policy evaluation considers additional factors: Is this during business hours? What is the agent's recent accuracy rate? How sensitive is the specific customer's data? Are there any active security alerts that might indicate compromised systems?

The policy evaluation logic can be expressed as conditional statements that combine multiple factors. If the agent's confidence score exceeds 95%, the data classification is "internal" or lower, and the request occurs during business hours, then grant access immediately. If any of these conditions fail, the policy might require human approval or additional verification steps.
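The conditional policy just described translates almost directly into code. This sketch assumes a hypothetical four-level classification scheme and a 9:00 to 17:00 business-hours window. "Exceeds 95%" is implemented as a strict comparison.

```python
from dataclasses import dataclass
from enum import IntEnum

class Classification(IntEnum):
    # Hypothetical scheme; a higher value means more sensitive data.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

@dataclass
class Request:
    confidence: float               # agent-reported confidence, 0.0-1.0
    classification: Classification  # sensitivity of the target data
    hour: int                       # local hour of the request, 0-23

def evaluate(req: Request) -> str:
    conditions = [
        req.confidence > 0.95,
        req.classification <= Classification.INTERNAL,
        9 <= req.hour < 17,
    ]
    # Grant immediately only when every condition holds; otherwise escalate.
    return "grant" if all(conditions) else "require_approval"
```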

Behavioral Monitoring provides continuous oversight of AI agent actions to detect anomalies that might indicate problems or security compromises. Unlike traditional monitoring that focuses on system performance, behavioral monitoring analyzes whether AI agents are operating within expected parameters and following established patterns.

Deviation detection identifies when agents behave differently than their historical patterns. This might indicate that an agent has learned new behaviors, encountered unusual data, or been compromised by an attacker. The monitoring system can automatically adjust authorization policies when it detects significant behavioral changes.
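One of the simplest baselines is a z-score on a single metric such as requests per minute, shown here as a hypothetical sketch. The 3.0 threshold is an assumption, and production systems usually model many signals at once. A caller would tighten the agent's policy whenever the function returns `True`.

```python
import statistics

def is_deviation(history: list, current: float, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        # Not enough data to establish a baseline.
        return False
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly constant history: any change is a deviation.
        return current != mean
    return abs(current - mean) / stdev > threshold
```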

Privilege escalation detection watches for agents that attempt to exceed their authorized capabilities. This might involve requesting access to systems they do not normally use, attempting operations outside their typical scope, or trying to modify their own authorization policies. These behaviors trigger additional security measures like human review or temporary permission restrictions.
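The three escalation signals above can be checked per request, as in this hypothetical sketch. It assumes each agent has a known set of usual resources and permitted operations, and that authorization policies are stored under a `policy/<agent_id>` naming convention invented here for illustration. A non-empty result would route the request to human review.

```python
def escalation_reasons(agent_id: str, resource: str, op: str,
                       usual_resources: set, allowed_ops: set) -> list:
    """Return the reasons a request looks like privilege escalation (empty if none)."""
    reasons = []
    if resource not in usual_resources:
        reasons.append("unfamiliar resource")
    if op not in allowed_ops:
        reasons.append("operation outside scope")
    if resource == f"policy/{agent_id}" and op == "write":
        reasons.append("attempt to modify own policy")
    return reasons
```

Returning reasons rather than a bare boolean gives reviewers the context they need and feeds directly into the audit trail.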

Performance monitoring identifies agents whose decision-making capabilities have degraded. An agent that begins making poor decisions might be experiencing technical problems or attempting to operate outside its trained parameters. Authorization policies can automatically reduce such an agent's privileges until human administrators can investigate the situation.
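A rolling window of recent outcomes is one straightforward way to notice degraded decision-making. This sketch assumes some external process, such as human review or downstream results, later labels each decision correct or incorrect. The window size, accuracy floor, and minimum sample count are hypothetical defaults.

```python
from collections import deque

class AccuracyMonitor:
    """Track recent outcomes and report whether the agent should lose privileges."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9, min_samples: int = 20):
        # True = decision later judged correct; old outcomes fall off automatically.
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def should_restrict(self) -> bool:
        # Require enough samples so one early mistake does not trigger a restriction.
        return len(self.outcomes) >= self.min_samples and self.accuracy() < self.min_accuracy
```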

Building a Trust Architecture for Autonomous Systems

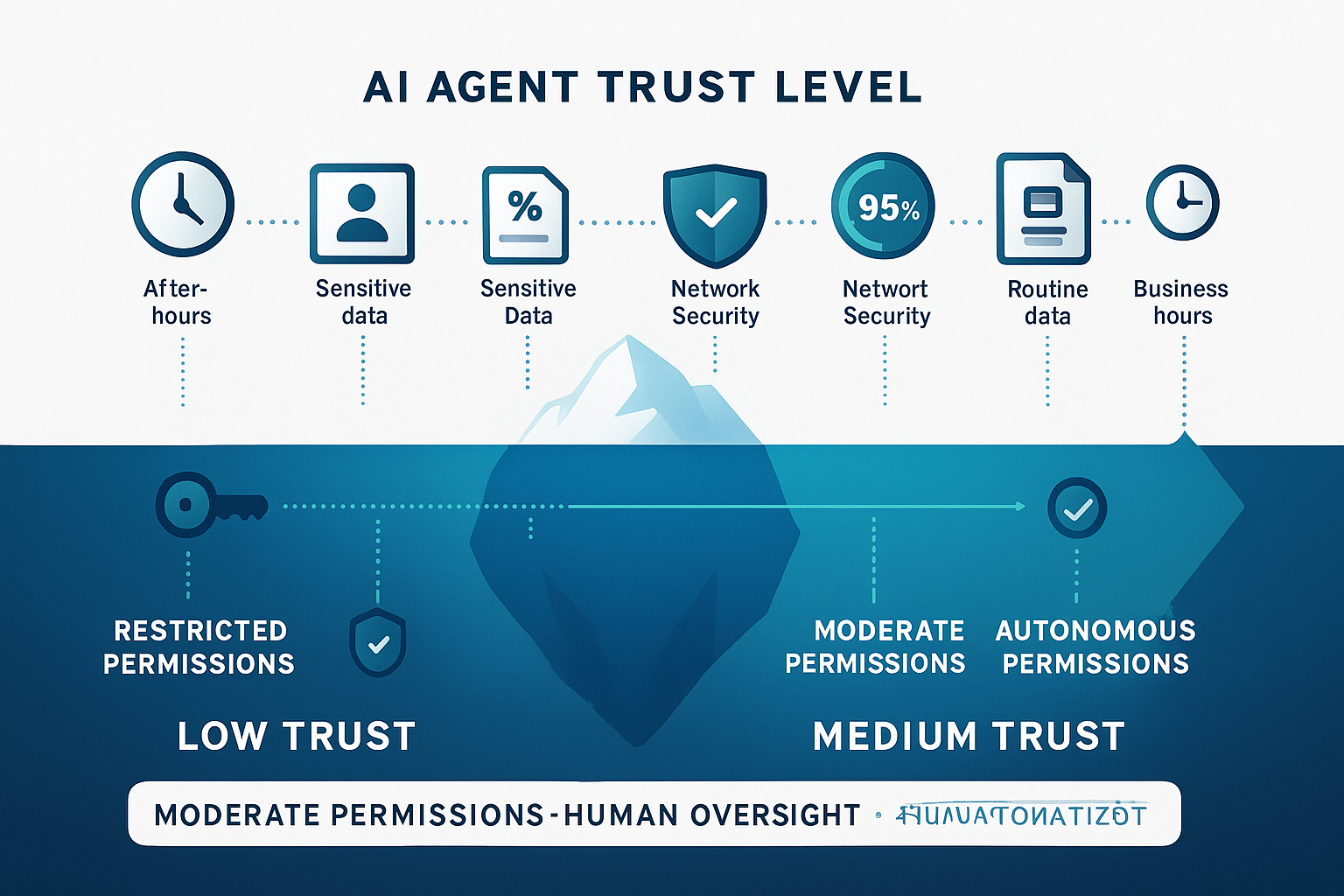

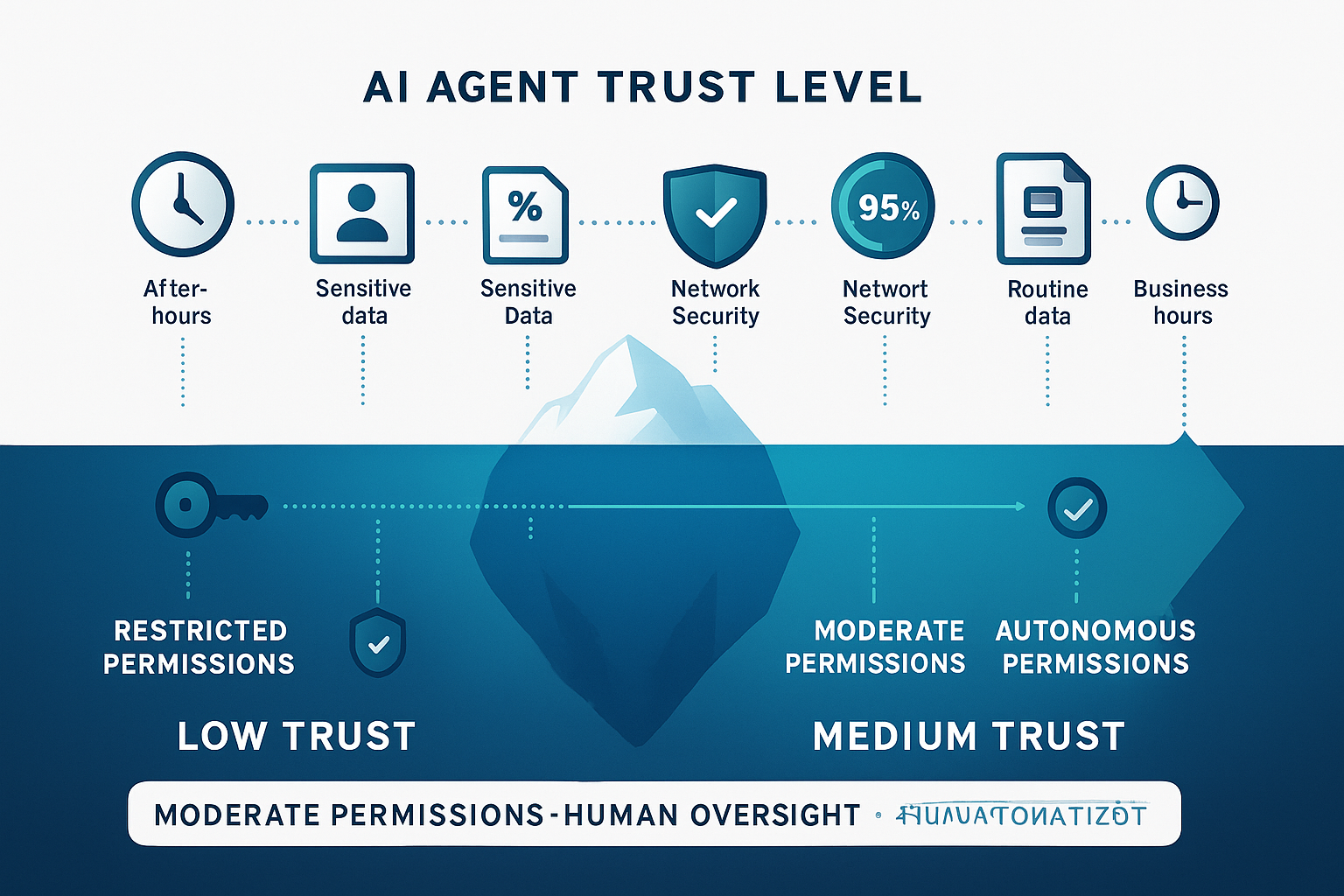

Creating trust in autonomous systems requires understanding that trust is not a binary concept but rather a spectrum of confidence levels that change based on evidence and context. Traditional security systems often treat trust as permanent once established through initial authentication. AI authorization systems must treat trust as dynamic and continuously validated through ongoing evidence.

Cryptographic verification provides the foundation for all trust decisions in AI systems. Every action that an AI agent performs must be cryptographically signed using private keys that only that agent possesses. This creates mathematical proof that a specific agent's key signed a record of a specific action at a specific time. Traditional audit logs can be modified, but cryptographic signatures provide tamper-evident records. Those records remain verifiable as long as the signing keys stay uncompromised and the signature algorithms remain secure.

The implementation of cryptographic verification requires careful key management for AI agents. Each agent needs unique private keys that are generated securely and stored in hardware security modules or similar protected environments. The keys must rotate regularly to limit exposure from any potential compromise. The verification system must be able to validate signatures using current and historical public keys to maintain audit trail integrity.
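To validate old signatures after rotation, a system must keep retired public keys along with the period during which each one was active. Here is a hypothetical keyring sketch. Each entry is identified by a key ID, the same role the `kid` header plays in JWS, and a key is returned only for signatures made while it was active. The actual signature check is left to a real cryptography library, and `public_key` is a placeholder.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class KeyRecord:
    kid: str
    public_key: bytes            # placeholder for a real public key object
    active_from: float           # Unix time the key started signing
    retired_at: Optional[float]  # None while the key is still current

class Keyring:
    def __init__(self) -> None:
        self._keys = {}

    def add(self, record: KeyRecord) -> None:
        self._keys[record.kid] = record

    def key_for(self, kid: str, signed_at: float) -> KeyRecord:
        """Return the key allowed to verify a signature made at `signed_at`, or raise."""
        rec = self._keys.get(kid)
        if rec is None:
            raise KeyError(f"unknown key id {kid!r}")
        retired = rec.retired_at is not None and signed_at >= rec.retired_at
        if signed_at < rec.active_from or retired:
            raise ValueError("signature timestamp outside the key's active period")
        return rec
```

The `signed_at` value must come from a source the signer cannot forge, such as a timestamp covered by an independent service. Otherwise, a stolen retired key could be used to backdate records.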

Behavioral attestation extends cryptographic verification by requiring AI agents to prove not just what they did, but how they did it. Traditional systems verify that an authorized agent performed an action. Behavioral attestation verifies that the agent performed the action using approved methods and within acceptable parameters. This additional layer of verification helps ensure that AI agents operate according to their design specifications rather than developing unexpected behaviors.

Consider how behavioral attestation works in practice. An AI agent that processes loan applications must not only prove that it made approval decisions, but also prove that it followed established underwriting criteria and did not use prohibited factors like race or gender. The attestation mechanism requires the agent to provide cryptographic proof of its decision-making process along with its final decisions.

Continuous monitoring provides real-time evaluation of AI agent behavior to detect problems before they cause significant damage. Unlike periodic audits that might discover issues days or weeks after they occur, continuous monitoring can identify anomalies within minutes or seconds. This rapid detection enables automated responses that limit the scope of any problems.

The monitoring system must be capable of analyzing multiple types of AI agent behavior simultaneously. Performance monitoring tracks whether agents are making accurate decisions and operating within acceptable error rates. Security monitoring watches for signs of compromise or malicious activity. Compliance monitoring ensures that agents follow regulatory requirements and business policies.

Graceful degradation ensures that AI systems can continue operating safely when trust levels decrease. Rather than completely shutting down when problems occur, well-designed AI authorization systems can reduce agent capabilities while maintaining essential functions. This approach prevents single points of failure from disrupting entire business operations.

The implementation of graceful degradation requires defining multiple trust levels and corresponding capability restrictions. High trust levels enable full autonomous operation. Medium trust levels might require human approval for sensitive decisions. Low trust levels might restrict agents to read-only operations or simple, low-risk tasks. The system can automatically adjust trust levels based on real-time evidence and gradually restore full capabilities as trust is reestablished.
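The trust levels described above map naturally onto an ordered enum. In this hypothetical sketch, an ordinary incident drops the agent one level and a severe one drops it straight to LOW. Trust is restored one level at a time after a configurable number of consecutive clean review intervals. The capability names are placeholders.

```python
from enum import IntEnum

class Trust(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2

# Hypothetical capability sets per trust level.
LEVEL_CAPABILITIES = {
    Trust.HIGH: {"read", "write", "autonomous_sensitive"},
    Trust.MEDIUM: {"read", "write"},  # sensitive decisions need human approval
    Trust.LOW: {"read"},              # read-only, low-risk tasks
}

class TrustManager:
    def __init__(self, clean_intervals_to_promote: int = 3) -> None:
        self.level = Trust.HIGH
        self._clean = 0
        self._needed = clean_intervals_to_promote

    def report_incident(self, severe: bool = False) -> None:
        # Severe evidence drops straight to LOW; otherwise degrade one step.
        self.level = Trust.LOW if severe else Trust(max(self.level - 1, Trust.LOW))
        self._clean = 0

    def report_clean_interval(self) -> None:
        # Restore gradually: one level per N consecutive clean intervals.
        if self.level == Trust.HIGH:
            return
        self._clean += 1
        if self._clean >= self._needed:
            self.level = Trust(self.level + 1)
            self._clean = 0

    def capabilities(self) -> set:
        return LEVEL_CAPABILITIES[self.level]
```

Because demotion is immediate and promotion is gradual, a single good interval cannot undo the effect of an incident.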

Compliance and Regulatory Advantages

Organizations that implement comprehensive AI authorization frameworks position themselves ahead of emerging regulatory requirements while gaining operational advantages today. Understanding the compliance benefits helps justify the investment in sophisticated authorization systems and provides competitive advantages in regulated industries.

Current regulations increasingly require organizations to demonstrate control over automated decision-making systems. Financial services regulations demand audit trails for algorithmic trading decisions. Healthcare regulations require oversight of AI systems that influence patient care. Privacy regulations mandate controls over AI systems that process personal data. These requirements will only become more stringent as AI systems become more prevalent and powerful.

AI authorization frameworks provide the technical infrastructure needed to meet these regulatory demands. Cryptographic audit trails prove exactly which AI agents made which decisions at what times. Behavioral monitoring demonstrates that agents operated within approved parameters. Dynamic policy evaluation shows that access controls adapted to changing risk conditions. This evidence satisfies regulatory requirements while providing business value through improved risk management.

The documentation and reporting capabilities of modern AI authorization systems exceed what traditional audit processes can achieve. Instead of manually reviewing log files and interviewing personnel, auditors can verify AI behavior through cryptographic proof and automated compliance checks. This reduces audit costs while providing higher confidence in the results.

Proactive compliance preparation also creates competitive advantages. Organizations that can deploy AI systems with built-in compliance capabilities can enter new markets and customer segments faster than competitors who must retrofit compliance controls. They can also respond more quickly to new regulatory requirements because their authorization infrastructure already provides the necessary visibility and control mechanisms.

The international nature of modern business requires AI authorization systems that can adapt to multiple regulatory frameworks simultaneously. An AI agent that operates in both the United States and European Union must comply with both American and European privacy regulations. Authorization policies can enforce different data handling requirements based on the location of data and users, enabling global AI deployments while maintaining compliance with local laws.

Implementation Strategy and Next Steps

Implementing AI authorization requires a systematic approach that builds capabilities incrementally while maintaining operational stability. Organizations that attempt to transform their entire authorization infrastructure simultaneously often encounter resistance from development teams and operational disruptions that undermine the entire effort.

Begin by identifying AI agents or automated systems that currently rely on traditional credentials like service accounts or API keys. These systems are the highest-priority candidates for authorization improvements because they often have broad privileges and limited oversight. Focus initial efforts on agents that handle sensitive data or make high-value decisions.

Pilot implementations should start with non-critical systems that can tolerate some experimentation and refinement. Choose AI agents that are well-understood and have clear behavioral patterns. Implement basic attribute-based access control policies that consider environmental factors like time and location. Measure the impact on system performance and operational complexity.

As you gain experience with dynamic authorization policies, gradually expand to more complex scenarios that include AI agent state and behavioral factors. Test different JWT token structures to find the right balance between security information and performance impact. Develop monitoring systems that can detect authorization policy violations and unusual AI agent behavior.

The long-term success of AI authorization depends on integration with broader security and compliance initiatives. Authorization policies should align with business risk management strategies. Monitoring systems should integrate with security incident response procedures. Compliance reporting should feed into broader governance frameworks that demonstrate organizational control over AI systems.

Training and change management represent critical success factors that many organizations underestimate. Development teams need to understand how to build AI agents that work effectively with dynamic authorization policies. Operations teams need tools and procedures for managing authorization policies and responding to policy violations. Business stakeholders need visibility into how authorization controls impact AI system capabilities and performance.

The future of AI authorization will likely include more sophisticated capabilities like cross-organizational federation, blockchain-based audit trails, and machine learning-powered anomaly detection. Organizations that master the fundamentals of AI authorization today will be prepared to adopt these advanced capabilities as they mature.

Building effective AI authorization requires balancing multiple competing priorities: security and performance, autonomy and control, flexibility and simplicity. The organizations that succeed will be those that treat AI authorization as a core capability rather than an afterthought, investing in the people, processes, and technology needed to authorize AI agents safely at scale.

The autonomous future is not a distant possibility but a current reality for organizations that have already deployed AI agents in production environments. These organizations must solve AI authorization challenges today, not tomorrow. The frameworks and patterns described in this article provide starting points for that work, but each organization must adapt these concepts to their specific requirements and constraints.

The companies that master AI authorization will gain competitive advantages through faster AI deployment, better risk management, and stronger compliance postures. They will build the foundation for autonomous systems that operate safely at unprecedented scale while maintaining complete visibility and accountability. Most importantly, they will prepare for a future where AI agents outnumber human users and make decisions that directly impact business success.

*** This is a Security Bloggers Network syndicated blog from Deepak Gupta | AI & Cybersecurity Innovation Leader | Founder's Journey from Code to Scale authored by Deepak Gupta - Tech Entrepreneur, Cybersecurity Author. Read the original post at: https://guptadeepak.com/zero-trust-for-ai-agents-implementing-dynamic-authorization-in-an-autonomous-world/
