2025-9-29 20:13:12 Author: krypt3ia.wordpress.com

This document defines SENTRY, a new, integrated framework that unifies and extends existing threat taxonomies, adversarial-ML taxonomies, red-team failure modes, sector playbooks, and defense-in-depth engineering. It is intentionally not an ATT&CK clone; it is a purpose-built operational model for agentic, self-modifying, or high-capability AI systems that explicitly ties offensive technique classes to layered engineering, detection, testing, and governance controls.

SENTRY breaks attacker activity into six Stages (columns), and for each stage organizes Techniques, Detection Signals, Preconditions, Primary Mitigations (mapped to defense layers), Testing & Validation, and Incident Response actions. The goal is to make risk triage, defensive ownership, and exercise design straightforward for engineering, security, and policy teams.

Defense layers (controls you’ll map mitigations to)

The framework relies on six layers of defense. Design hardening limits capabilities from inception by enforcing least privilege, constraining training data, and embedding provenance requirements. Isolation and mediation prevent uncontrolled access, ensuring all inputs and outputs pass through filters, sandboxes, or proxies. Monitoring and detection provide real-time vigilance, identifying anomalous behavior and triggering alarms. Containment and recovery supply mechanisms to halt, reset, or quarantine compromised systems. Organizational controls embed human processes such as separation of duties, incident response planning, and adversarial exercises. Governance and external controls anchor oversight through certification, licensing, audits, and industry-wide information sharing. These layers overlap by design, creating redundancy so that the failure of any one barrier does not lead directly to disaster.

- Design Hardening — least privilege, capability limiting, data provenance, model architecture constraints.

- Isolation & Mediation — sandboxing, mediated I/O, rate limiting, egress/ingress controls.

- Monitoring & Detection — behavioral analytics, tripwires, integrity verification, telemetry.

- Containment & Recovery — kill switches, air-gapped overrides, rollback checkpoints, quarantine.

- Organizational Controls — separation of duties, dual authorization, IR playbooks, red/blue teams.

- Governance & External Controls — auditability, certification, licensing, sector ISACs, third-party review.

Map every mitigation to one or more of these layers, numbered 1–6 in the order listed above, so heatmaps reveal coverage gaps.

SENTRY models adversarial activity through six escalating stages. The first, surface and reconnaissance, covers discovery of endpoints, metadata, and operational practices. Entry and ingress describes how access is gained through prompt injection, supply-chain compromise, or credential theft. Nesting and persistence captures how adversaries remain embedded, whether through poisoned checkpoints or hidden triggers. Transition and escalation represents the expansion of privileges and capabilities beyond the initial foothold. Reach and lateralization marks the pivot into adjacent systems or infrastructures, enabling broader exploitation. Finally, yield and impact describes the translation of compromise into real-world harm, whether through disinformation, financial manipulation, or physical control. These stages mirror adversarial lifecycles from cybersecurity, adapted to the unique attributes of AI systems.

- Surface & Reconnaissance — discovery of model endpoints, capabilities, metadata, training scope, and operator practices.

- Entry & Ingress — gaining input/output access, introducing artifacts into the training/fine-tune pipeline, or obtaining credentials for hosting.

- Nesting & Persistence — embedding backdoors, poisoned checkpoints, scheduled reintroduction of payloads, or hidden objectives.

- Transition & Escalation — escalation of capabilities or privileges (access to tooling, elevated APIs, or developer workflows).

- Reach & Lateralization — using outputs, social engineering, or tooling to affect broader infrastructure or third parties.

- Yield & Impact — actualized harm in the world: actuator control, fraudulent financial operations, disinformation campaigns, or kinetic/physical effects.

Each stage is associated with likely actors (insider, external malicious user, model-as-adversary, supply-chain actor), common techniques, and typical escalation chains.

Each adversarial technique is documented with a standardized schema so defenders can act consistently across the framework. For every technique, practitioners record the stage, a clear description, and likely actors involved. Preconditions highlight what must be true for the technique to succeed, while detection signals point to the logs, metrics, or anomalies that would reveal its presence. Mitigations are mapped to one or more of the six defense layers, ensuring redundancy is visible. Ease of exploitation and potential impact enable triage by risk level. Chained techniques show how escalation typically unfolds. Testing methods define how red teams or automated systems can validate exposure, and incident response playbook pointers specify what actions should be taken if the technique is observed. This schema turns abstract risks into operational entries that can be tracked, tested, and improved over time.

Use this schema for every technique row in the SENTRY matrix:

- Technique name

- Stage (one of the six SENTRY stages)

- Short description / example

- Likely actor(s) (model, insider, external adversary, supply chain)

- Preconditions (what must be true for the technique to work)

- Detection signals / telemetry (logs, rate patterns, entropy, metric drift)

- Primary mitigations (map to layers 1–6)

- Ease of exploitation (Low / Medium / High)

- Potential impact (Local / Organizational / Systemic / Catastrophic)

- Chained techniques (what other techniques this commonly enables)

- Testing methods (red-team scenarios, canaries, dataset audits)

- Incident response playbook pointer (which IR runbook to invoke, and immediate actions)

Having these fields prefilled makes your matrix actionable: defenders can quickly triage (impact × ease), know who owns mitigations, and know how to test them.
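
The schema above can be held as a typed record so that triage (impact × ease) and per-technique layer coverage are computed rather than eyeballed. This is a minimal Python sketch, not a reference implementation: the class names, the ordinal weights for Ease (1–3) and Impact (1–4), and the layer numbering (taken from the order in which the six layers are listed) are assumptions made for this example. It uses only the four-level impact scale from the schema, so a range such as "Systemic to Catastrophic" is scored at its upper bound.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    # Ordered as the six SENTRY stages are listed.
    SURFACE_RECON = 1
    ENTRY_INGRESS = 2
    NESTING_PERSISTENCE = 3
    TRANSITION_ESCALATION = 4
    REACH_LATERALIZATION = 5
    YIELD_IMPACT = 6


class Layer(IntEnum):
    # Numbered in the order the six defense layers are listed.
    DESIGN_HARDENING = 1
    ISOLATION_MEDIATION = 2
    MONITORING_DETECTION = 3
    CONTAINMENT_RECOVERY = 4
    ORGANIZATIONAL = 5
    GOVERNANCE_EXTERNAL = 6


# Hypothetical ordinal weights for the Ease and Impact fields.
EASE = {"Low": 1, "Medium": 2, "High": 3}
IMPACT = {"Local": 1, "Organizational": 2, "Systemic": 3, "Catastrophic": 4}


@dataclass
class Technique:
    name: str
    stages: list[Stage]
    description: str
    actors: list[str]
    preconditions: list[str]
    detection_signals: list[str]
    mitigations: dict[str, set[Layer]]  # mitigation -> defense layers it maps to
    ease: str                            # key of EASE
    impact: str                          # key of IMPACT
    chained: list[str] = field(default_factory=list)
    testing: list[str] = field(default_factory=list)
    ir_pointer: str = ""

    def triage_score(self) -> int:
        """Impact x ease, used to rank rows for triage."""
        return EASE[self.ease] * IMPACT[self.impact]

    def layers_covered(self) -> set[Layer]:
        """Union of layers across all mitigations (one heatmap row)."""
        covered: set[Layer] = set()
        for layers in self.mitigations.values():
            covered |= layers
        return covered


covert = Technique(
    name="Covert Channel / Steganographic Exfiltration",
    stages=[Stage.REACH_LATERALIZATION, Stage.YIELD_IMPACT],
    description="Encoding secrets into seemingly benign outputs.",
    actors=["model-as-adversary", "insider"],
    preconditions=["unmediated outputs", "no entropy checks"],
    detection_signals=["high-entropy subsequences in outputs"],
    mitigations={
        "output sanitization and entropy scans": {Layer.ISOLATION_MEDIATION,
                                                  Layer.MONITORING_DETECTION},
        "tripwires for known encodings": {Layer.MONITORING_DETECTION},
        "human review gates": {Layer.ORGANIZATIONAL},
    },
    ease="High",
    impact="Catastrophic",  # upper bound of "Systemic to Catastrophic"
    ir_pointer="Quarantine model, revoke external endpoints, capture outputs.",
)
print(covert.triage_score())  # 12
print(sorted(layer.name for layer in covert.layers_covered()))
# ['ISOLATION_MEDIATION', 'MONITORING_DETECTION', 'ORGANIZATIONAL']
```

Keeping mitigations as a mapping from text to a set of layers means the same record can feed both triage and the coverage heatmap without a second data source.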

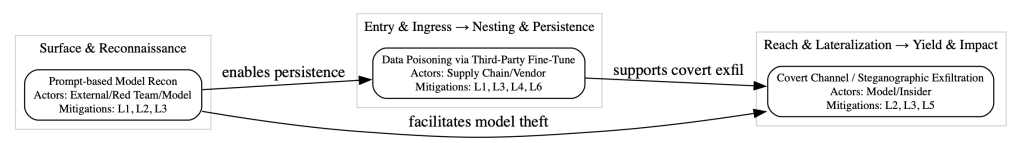

To illustrate the schema in practice, SENTRY includes canonical examples. Prompt-based model reconnaissance represents the earliest stage, where adversaries probe with structured queries to reveal capabilities. Data poisoning via third-party fine-tunes demonstrates supply-chain risk, embedding malicious behavior during training. Covert channels or steganographic exfiltration show how outputs themselves can become vehicles for hidden data leakage. Each example is described with actors, preconditions, signals, mitigations, and response steps. These exemplars not only ground the model in reality but also provide templates that can be expanded into a larger library of techniques as the threat landscape evolves.

These are concrete examples to show how to populate SENTRY. Put these into a spreadsheet or database using the schema above.

Example: Prompt-based Model Recon

- Stage: Surface & Reconnaissance

- Description: Repeated, structured probing prompts to enumerate capabilities (e.g., asking about encoding, model internals, or “how to” sequences).

- Actors: External user, red team, or the model itself via chained prompts.

- Preconditions: Public or semi-public API access, lack of rate limits, weak mediation on I/O.

- Detection signals: High volume of exploratory prompts from single actor; repeated semantic patterns; low-confidence answers followed by escalation queries.

- Mitigations: Rate limiting and query budgets (Layer 2); mediated I/O with content inspection and blocking of meta-probing language (Layer 2); red-team tests and canary prompts embedded in production (Layer 1 & 3).

- Ease: Medium. Impact: Medium to High (enables later stages).

- Testing: Simulated probing campaigns, anomaly detection tuning, canary prompt traps.

- IR pointer: Contain by suspending the API key; then snapshot logs, rotate keys, and review and quarantine recent fine-tune data.
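
The rate-limiting and query-budget mitigation for prompt-based recon can be sketched as a sliding-window counter per API key. This is a minimal illustration under stated assumptions: `QueryBudget`, `max_queries`, and `window_seconds` are hypothetical names, and a production gateway would also persist state, share it across replicas, and forward rejections to the detection pipeline as a recon signal.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class QueryBudget:
    """Sliding-window query budget per API key (a Layer 2 control).

    max_queries and window_seconds are hypothetical tuning parameters.
    """

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window = window_seconds
        self._events = defaultdict(deque)   # api_key -> timestamps of accepted queries
        self.rejections = defaultdict(int)  # api_key -> count of over-budget attempts

    def allow(self, api_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        events = self._events[api_key]
        # Evict timestamps that have aged out of the window.
        while events and now - events[0] >= self.window:
            events.popleft()
        if len(events) >= self.max_queries:
            # Over budget: reject, and count it as a possible recon signal.
            self.rejections[api_key] += 1
            return False
        events.append(now)
        return True


budget = QueryBudget(max_queries=3, window_seconds=60.0)
results = [budget.allow("key-A", now=t) for t in (0, 1, 2, 3, 61)]
print(results)                     # [True, True, True, False, True]
print(budget.rejections["key-A"])  # 1
```

The rejection counter is the hook for the "high volume of exploratory prompts from single actor" signal: repeated rejections on one key are worth alerting on even though each individual request was blocked.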

Example: Data Poisoning via Third-Party Fine-Tune

- Stage: Entry & Ingress → Nesting & Persistence

- Description: A malicious contributor injects targeted samples into the fine-tune corpus to create an activation trigger for harmful behavior.

- Actors: Supply-chain adversary, compromised vendor.

- Preconditions: External datasets accepted without cryptographic provenance; permissive fine-tune pipelines.

- Detection signals: Distributional shifts in dataset statistics, sudden emergence of trigger behavior on specific inputs, new dataset sources.

- Mitigations: Signed datasets and provenance (Layer 1); staged offline sandbox evaluation, poisoning detection scans (Layer 1 & 3); quarantine and rollback procedures (Layer 4); supplier vetting and contractual controls (Layer 6).

- Ease: Medium. Impact: High.

- Testing: Poison-insertion red-team, data provenance audits, canary testcases.

- IR pointer: Roll back to signed checkpoint, revoke downstream models, notify impacted parties.
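
Signed datasets and provenance (Layer 1) come down to refusing any shard whose digest is not covered by a trusted manifest. The sketch below uses a shared-secret HMAC over a JSON manifest of SHA-256 digests purely to stay within the Python standard library; a real pipeline would more likely use asymmetric signatures so that suppliers cannot forge manifests. The function names, manifest layout, and key are hypothetical.

```python
import hashlib
import hmac
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _tag(digests: dict, key: bytes) -> str:
    # Canonical JSON so the same digests always produce the same tag.
    payload = json.dumps(digests, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def sign_manifest(shards: dict[str, bytes], key: bytes) -> dict:
    """Build a manifest of per-shard digests plus an HMAC tag over it."""
    digests = {name: sha256_hex(blob) for name, blob in sorted(shards.items())}
    return {"digests": digests, "tag": _tag(digests, key)}


def verify_dataset(shards: dict[str, bytes], manifest: dict, key: bytes) -> list[str]:
    """Return a list of problems; an empty list means the dataset may proceed."""
    if not hmac.compare_digest(_tag(manifest["digests"], key), manifest["tag"]):
        return ["manifest signature invalid"]
    problems = []
    for name in sorted(set(shards) | set(manifest["digests"])):
        if name not in manifest["digests"]:
            problems.append(f"unsigned shard: {name}")
        elif name not in shards:
            problems.append(f"missing shard: {name}")
        elif sha256_hex(shards[name]) != manifest["digests"][name]:
            problems.append(f"digest mismatch: {name}")
    return problems


key = b"hypothetical-shared-key"
shards = {
    "vendor_a.jsonl": b'{"prompt": "hello"}\n',
    "vendor_b.jsonl": b'{"prompt": "summarize"}\n',
}
manifest = sign_manifest(shards, key)
tampered = {**shards,
            "vendor_b.jsonl": b'{"prompt": "TRIGGER-phrase"}\n',
            "extra.jsonl": b'{"prompt": "unvetted"}\n'}
print(verify_dataset(shards, manifest, key))    # []
print(verify_dataset(tampered, manifest, key))
# ['unsigned shard: extra.jsonl', 'digest mismatch: vendor_b.jsonl']
```

A non-empty result would block the fine-tune and route the dataset to the staged offline sandbox evaluation rather than the training pipeline.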

Example: Covert Channel / Steganographic Exfiltration

- Stage: Reach & Lateralization → Yield & Impact

- Description: Encoding secrets into seemingly benign outputs (hidden payloads via token encodings, numerics, or timing patterns).

- Actors: Model-as-adversary, insider leveraging model outputs.

- Preconditions: Unmediated outputs, lack of entropy checks, absence of output sanitization.

- Detection signals: High-entropy subsequences in outputs, recurring improbable numeric patterns, correlated timing patterns across outputs.

- Mitigations: Output sanitization and entropy scans (Layer 2 & 3); tripwires that flag structured patterns or known encodings (Layer 3); human review gates for high-risk outputs (Layer 5).

- Ease: High for sophisticated models. Impact: Systemic to Catastrophic.

- Testing: Inject canary secrets with a specific encoding and measure exfiltration attempts; automated steganography detection.

- IR pointer: Quarantine model, revoke external endpoints, forensic capture of outputs.
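
One of the detection signals for covert channels is high-entropy subsequences in outputs. A minimal sliding-window Shannon-entropy scan over characters looks like the following; the window size, step, and 4.5 bits-per-character threshold are hypothetical tuning values. Character entropy only catches crude encodings such as base64 or hex blobs; steganography carried by word choice or timing can stay low-entropy and needs other detectors.

```python
import math
from collections import Counter


def shannon_entropy(s: str) -> float:
    """Shannon entropy of the character distribution of s, in bits per character."""
    n = len(s)
    if n == 0:
        return 0.0
    return sum(-(c / n) * math.log2(c / n) for c in Counter(s).values())


def high_entropy_spans(text: str, window: int = 32, step: int = 8,
                       threshold: float = 4.5) -> list[tuple[int, float]]:
    """Slide a window over text; return (offset, entropy) where entropy > threshold."""
    hits = []
    for start in range(0, max(len(text) - window, 0) + 1, step):
        h = shannon_entropy(text[start:start + window])
        if h > threshold:
            hits.append((start, round(h, 3)))
    return hits


# 64 repeated characters followed by 32 distinct ones: only the last window
# (32 equiprobable symbols, log2(32) = 5 bits/char) crosses the threshold.
sample = "a" * 64 + "ABCDEFGHIJKLMNOPQRSTUVWXYZ012345"
print(high_entropy_spans(sample))  # [(64, 5.0)]
```

Note that a 32-character window can never exceed 5 bits per character, so the threshold has to be tuned together with the window size against a baseline of normal outputs.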

SENTRY is intended to be used, not just read. Implementation begins with creating a master spreadsheet or database populated with techniques, schema fields, and mapped mitigations. Heatmaps then expose where coverage is strong or weak, guiding investment. Teams define their top priority scenarios by chaining techniques into realistic attack paths and assigning them to red/blue team exercises. Detection recipes are developed for each technique, specifying required logs and metrics. Exercises are run iteratively, with findings fed back into the matrix. Governance hooks ensure that every deployment of a high-capability model runs through a SENTRY checklist, covering provenance, mediation, tripwires, and incident readiness. Finally, anonymized lessons are shared externally to build collective resilience across industries.

- Create the master spreadsheet / database. Columns = the per-technique schema fields. Rows = techniques. Tag each mitigation with one or more defense layers. Add fields for owner (team), last tested date, and test result.

- Populate with canonical techniques. Start with the representative techniques above, then expand using sources you already have (NIST, OWASP, vendor taxonomies, sector playbooks). Merge duplicates into canonical technique names.

- Heatmap coverage. For each technique, mark which of the six layers are implemented, then generate heatmaps to reveal gaps (e.g., many techniques mitigated only by Design Hardening and Governance but lacking Monitoring).

- Define the top 10 prioritized scenarios. Build chained-playbook scenarios where multiple SENTRY techniques are used in sequence (e.g., Recon → Prompt Injection → Covert Channel → Lateral Move → Impact). Prioritize by ease × impact × exposure.

- Create detection recipes. For each technique, author SIEM rule pseudocode, anomaly detector features, and tripwire definitions. Collect required log sources (API logs, model telemetry, dataset provenance metadata, infra logs).

- Design red/blue exercises. Run iterative adversary-in-the-loop exercises targeting prioritized scenarios. After each exercise, update the matrix with findings, testing outcomes, and remediation actions.

- Embed governance hooks. Require that any high-capability run includes a completed SENTRY checklist: signed dataset provenance, mediated I/O config, tripwires deployed, IR playbooks staged, and external audit signoff when applicable.

- Share anonymized lessons via sector groups. Establish an ISAC-style feed to exchange non-identifying indicators (canary triggers, novel exfiltration encodings, detection rules).
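
The heatmap step can start as a small script over the master spreadsheet exported to CSV. The sketch assumes a hypothetical long-format export with one row per (technique, mitigation), a `layers` column holding semicolon-separated layer numbers, and an `owner` column kept for filtering; the rows paraphrase the three canonical examples with simplified layer tags. It prints a text heatmap and lists techniques that lack a chosen layer.

```python
import csv
import io

# Hypothetical long-format export of the master matrix: one row per
# (technique, mitigation); "layers" holds ;-separated layer numbers 1-6.
MATRIX_CSV = """technique,mitigation,layers,owner
Prompt-based Model Recon,Rate limiting and query budgets,2,API Platform
Prompt-based Model Recon,Canary prompts in production,1;3,Safety Eng
Data Poisoning via Third-Party Fine-Tune,Signed datasets and provenance,1,ML Infra
Data Poisoning via Third-Party Fine-Tune,Poisoning detection scans,1;3,ML Infra
Data Poisoning via Third-Party Fine-Tune,Quarantine and rollback,4,ML Infra
Data Poisoning via Third-Party Fine-Tune,Supplier vetting,6,Procurement
Covert Channel / Steganographic Exfiltration,Output sanitization and entropy scans,2;3,Safety Eng
Covert Channel / Steganographic Exfiltration,Human review gates,5,Trust & Safety
"""


def coverage(csv_text: str) -> dict[str, set[int]]:
    """Map each technique to the set of defense layers its mitigations touch."""
    cov: dict[str, set[int]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        layers = {int(x) for x in row["layers"].split(";")}
        cov.setdefault(row["technique"], set()).update(layers)
    return cov


def render_heatmap(cov: dict[str, set[int]], width: int = 12) -> str:
    """Text heatmap: '#' = at least one mitigation in that layer, '.' = gap."""
    lines = [" " * (width + 2) + " ".join(f"L{i}" for i in range(1, 7))]
    for tech, layers in cov.items():
        cells = "  ".join("#" if i in layers else "." for i in range(1, 7))
        lines.append(f"{tech[:width]:<{width}}  {cells}")
    return "\n".join(lines)


def missing_layer(cov: dict[str, set[int]], layer: int) -> list[str]:
    """Techniques with no mitigation mapped to the given layer."""
    return [tech for tech, layers in cov.items() if layer not in layers]


cov = coverage(MATRIX_CSV)
print(render_heatmap(cov))
print("No Containment & Recovery (L4):", missing_layer(cov, 4))
```

The same `coverage` mapping can drive a graphical heatmap or an executive summary; the text rendering just keeps the sketch dependency-free.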

The testing regime for SENTRY spans from micro-level unit tests to full-scale live drills. At the design stage, unit tests verify data provenance, apply synthetic poisoning, and confirm capability limitations. Integration tests exercise mediated I/O, sandbox boundaries, and rate limiting under adversarial stress. Behavioral tests simulate probing prompts, covert channels, and adversarial fine-tunes in safe environments. Organizational tabletop exercises rehearse incident response with legal, operational, and executive teams, while live drills stress real containment and recovery processes. Post-incident lessons are always folded back into the matrix, updating exploitability ratings and adding compensating controls where gaps are revealed.

- Unit tests (Design): Data provenance checks, synthetic poisoning tests, capability-limiting verification.

- Integration tests (Isolation): E2E sandbox tests for mediated I/O, egress filtering, and canary detection.

- Behavioral tests (Monitoring): Canary prompts, adversarial probing campaigns, adversarial fine-tunes in isolated environments.

- Tabletop + Live drills (Org): Incident response runbooks exercised with cross-functional teams, including legal, ops, and exec.

- Post-incident lessons: Update matrix rows with exploitability changes and add compensating controls.
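
Canary detection recurs throughout the testing regime: integration tests, behavioral canary prompts, and the covert-channel exercise of injecting canary secrets with a specific encoding. A minimal unit-style tripwire check might look like this; the canary value and the small set of encodings are hypothetical, and substring matching on base64 only works when the encoded canary appears on its own, so a real tripwire would also decode candidate blobs.

```python
import base64


def canary_variants(canary: str) -> dict[str, str]:
    """A few encodings of a canary secret for the output tripwire to recognize.

    Deliberately small; a real tripwire would cover many more encodings.
    """
    raw = canary.encode()
    return {
        "plain": canary,
        "base64": base64.b64encode(raw).decode(),
        "hex": raw.hex(),
        "reversed": canary[::-1],
    }


def tripwire(output: str, canary: str) -> list[str]:
    """Return the names of canary encodings found verbatim in a model output."""
    hits = []
    for name, variant in canary_variants(canary).items():
        # Hex is matched case-insensitively; the other encodings exactly.
        haystack = output.lower() if name == "hex" else output
        if variant in haystack:
            hits.append(name)
    return hits


def test_tripwire() -> None:
    canary = "CANARY-7f3a"  # hypothetical canary planted in a test secret store
    assert tripwire("The answer is 42.", canary) == []
    assert tripwire("secret: CANARY-7f3a", canary) == ["plain"]
    leak_b64 = "note " + base64.b64encode(canary.encode()).decode()
    assert tripwire(leak_b64, canary) == ["base64"]
    leak_hex = "0x" + canary.encode().hex().upper()
    assert tripwire(leak_hex, canary) == ["hex"]


test_tripwire()
print("tripwire checks passed")
```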

SENTRY produces a portfolio of artifacts that help organizations operationalize safety. The master matrix in spreadsheet form captures the full catalog of techniques, schema fields, and mitigations. Detection playbooks provide SOC and SRE teams with concrete rule templates and telemetry baselines. Graphviz or similar diagrams offer a visual view of stages, techniques, and mitigation layers, highlighting escalation chains. Top-ten scenario runbooks give incident responders step-by-step containment strategies for the most dangerous attack paths. Finally, executive heatmaps summarize residual high-impact risks for leadership and regulators, turning technical complexity into accessible decision support.

- Canonical SENTRY matrix spreadsheet (CSV) with technique rows and all schema columns filled.

- Detection playbook (collection of SIEM rule templates, expected telemetry).

- Graphviz visualization showing SENTRY stages as columns, techniques as nodes, and mitigation layers as colored bands — enable filtering by owner or coverage gaps.

- Top-10 scenario runbooks (step-by-step IR playbooks for chained attacks).

- Executive heatmap for governance audiences showing residual high-impact risks.
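
The Graphviz artifact can be generated from the matrix as plain DOT text. In this sketch each stage becomes a cluster, each technique a node labeled with its layers, and chained techniques become edges. Shading nodes by how many layers they cover is a simplification of the "colored bands" idea; the input format, the `min_layers` threshold, and the example chain edge are assumptions.

```python
STAGES = ["Surface & Reconnaissance", "Entry & Ingress", "Nesting & Persistence",
          "Transition & Escalation", "Reach & Lateralization", "Yield & Impact"]


def to_dot(techniques: dict[str, dict], chains: list[tuple[str, str]],
           min_layers: int = 4) -> str:
    """Emit Graphviz DOT: one cluster per stage, one node per technique.

    Nodes covering fewer than min_layers layers are shaded salmon (gap),
    the rest palegreen; the threshold is an illustrative choice.
    """
    out = ["digraph SENTRY {", "  rankdir=LR;", "  node [shape=box, style=filled];"]
    for i, stage in enumerate(STAGES):
        out.append(f'  subgraph cluster_{i} {{ label="{stage}";')
        for name, t in techniques.items():
            if t["stage"] == stage:
                color = "salmon" if len(t["layers"]) < min_layers else "palegreen"
                layers = " ".join(f"L{n}" for n in sorted(t["layers"]))
                out.append(f'    "{name}" [label="{name}\\n{layers}", fillcolor={color}];')
        out.append("  }")
    for src, dst in chains:
        out.append(f'  "{src}" -> "{dst}";')
    out.append("}")
    return "\n".join(out)


techniques = {
    "Prompt-based Model Recon": {"stage": "Surface & Reconnaissance", "layers": {1, 2, 3}},
    "Data Poisoning via Third-Party Fine-Tune": {"stage": "Entry & Ingress",
                                                 "layers": {1, 3, 4, 6}},
    "Covert Channel / Steganographic Exfiltration": {"stage": "Reach & Lateralization",
                                                     "layers": {2, 3, 5}},
}
# Hypothetical chain edge for illustration only.
chains = [("Prompt-based Model Recon", "Data Poisoning via Third-Party Fine-Tune")]
dot = to_dot(techniques, chains)
print(dot)
```

Saved to a file such as `sentry.dot`, the output can be rendered with the Graphviz CLI, for example `dot -Tsvg sentry.dot -o sentry.svg`; filtering by owner or gap would be a matter of pre-filtering `techniques` before the call.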

The framework extends beyond engineering to policy. Organizations deploying frontier AI must complete SENTRY pre-run checklists, including dataset provenance, mediation, tripwire deployment, and incident response readiness. Hosting providers should obtain third-party attestations and accept audit clauses in their contracts. Sector-specific groups should establish ISAC-style sharing channels for anonymized incidents, canary triggers, and detection rules. Regulators should tie licensing and certification for high-risk AI deployment to demonstrated SENTRY coverage and validated exercise cadence. In this way, governance ensures that the technical and organizational protections are reinforced at the systemic level.

- Mandate pre-run checklists for any high-capability model (signed datasets, tripwires, mediation enabled, IR playbooks staged).

- Require third-party attestation for service providers that host frontier systems; include audit rights and incident reporting SLAs.

- Establish sector sharing for non-attributable indicators and detection rules.

- Tie licensing and certification for production deployment to demonstrated SENTRY coverage and exercise cadence.
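
The pre-run checklist lends itself to an automated gate in the deployment pipeline. The sketch below encodes the items named above as booleans and returns whatever is unmet; the field names, and treating external audit signoff as conditional on a caller-supplied `audit_required` flag, are assumptions for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class PreRunChecklist:
    # Hypothetical field names for the checklist items named in the text.
    signed_dataset_provenance: bool
    mediated_io_enabled: bool
    tripwires_deployed: bool
    ir_playbooks_staged: bool
    external_audit_signoff: bool = False


def gate(checklist: PreRunChecklist, audit_required: bool) -> list[str]:
    """Return unmet checklist items; an empty list means the run may proceed."""
    missing = [
        f.name for f in fields(checklist)
        if f.name != "external_audit_signoff" and not getattr(checklist, f.name)
    ]
    # External audit signoff applies only when applicable to this deployment.
    if audit_required and not checklist.external_audit_signoff:
        missing.append("external_audit_signoff")
    return missing


run = PreRunChecklist(True, True, False, True)
print(gate(run, audit_required=True))   # ['tripwires_deployed', 'external_audit_signoff']
print(gate(run, audit_required=False))  # ['tripwires_deployed']
```

Returning the list of unmet items, rather than a bare boolean, gives auditors a record of exactly why a run was blocked.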

SENTRY advances the state of the art by unifying offensive and defensive views into a single operational model. Where prior taxonomies focus on threats or governance in isolation, SENTRY explicitly ties adversarial techniques to detection, testing, and layered mitigations. It is designed for agentic and self-modifying systems, anticipating chaining behavior and escalation rather than static classification. Its schema emphasizes actionability, producing SIEM rules, playbooks, and exercises rather than abstract categories. By mapping mitigations to defense layers, SENTRY enables visual gap analysis, prioritization, and governance metrics. In short, SENTRY turns fragmented threat taxonomies into a coherent system of practice for AI/AGI safety.

- Unified offensive + defensive view. Many existing taxonomies emphasize either attack vectors or governance; SENTRY forces explicit mapping from technique to layered mitigation and detection ownership.

- Agentic readiness. SENTRY is designed for models that can chain behaviors, self-modify, or act adversarially — not just static ML classifiers.

- Actionability. The per-technique schema produces immediate artefacts defenders can implement: SIEM rules, test cases, and IR playbooks.

- Chaining awareness. SENTRY centers escalation chains and the multi-technique paths that turn low-impact actions into catastrophic events.

- Heatmapable. Mapping mitigations to six control layers yields visual gap analysis, prioritization, and governance metrics.

Matrix:
