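The LunaLock ransomware gang carries out cyber extortion by stealing artwork and threatening to use it for AI model training. The gang breached the Artists&Clients website, stole digital art, and demanded a $50K ransom. If the ransom is not paid, the gang says it will release the data publicly and submit the artwork for training large language models (LLMs). Experts warn that this could set a dangerous precedent for other extortion groups. Artists have already taken measures to protect their work from hackers and AI scraping. 2025-9-9 05:48:39 Author: securityaffairs.com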

LunaLock Ransomware threatens victims by feeding stolen data to AI models

Pierluigi Paganini

September 09, 2025

LunaLock, a new ransomware gang, introduced a unique cyber extortion technique, threatening to turn stolen art into AI training data.

A new ransomware group named LunaLock has appeared in the threat landscape with a unique cyber extortion technique: threatening to turn stolen art into AI training data.

Recently, the LunaLock group targeted the website Artists&Clients and stole digital art. The group demanded $50K from the victims, threatening to leak the stolen data and to use it to train large language models (LLMs).

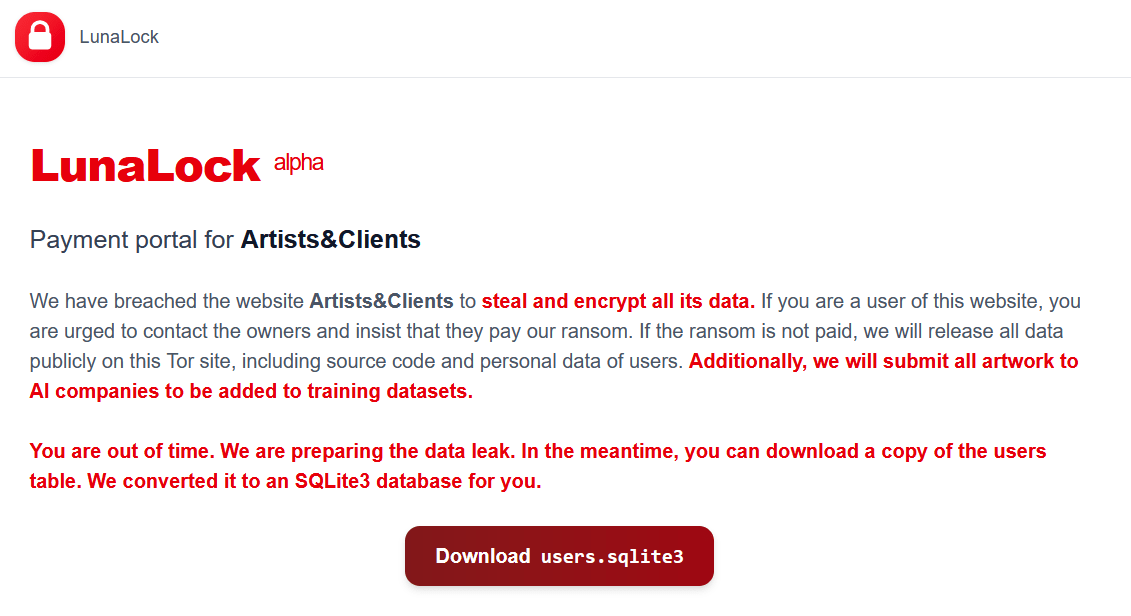

“We have breached the website Artists&Clients to steal and encrypt all its data. If you are a user of this website, you are urged to contact the owners and insist that they pay our ransom. If the ransom is not paid, we will release all data publicly on this Tor site, including source code and personal data of users. Additionally, we will submit all artwork to AI companies to be added to training datasets,” reads the announcement published by the ransomware group on its Tor data leak site.

LunaLock hackers are technically skilled and appear to be native English speakers.

This new type of extortion aims to compromise victims’ intellectual property by including stolen data in datasets used to train LLMs. In the future, other ransomware groups could upload stolen data to publicly accessible databases, where AI training pipelines can easily scrape it. Once included in AI models, the data becomes effectively permanent, unlike dark web leaks that may fade over time. LunaLock’s approach sets a dangerous precedent.

Experts note LunaLock’s attack on Artists&Clients is unusual, as ransomware usually targets sectors likely to pay. Double or triple extortion may fail with freelancers. Artists are already protecting work from both hackers and AI data scraping.

AI firms like OpenAI, Google, and Anthropic scrape online art to train models. Anthropic recently agreed to pay at least $1.5B to settle a copyright lawsuit brought by authors, the first U.S. AI-copyright case.

To counter this, Ben Zhao, a computer science professor at the University of Chicago, created Glaze and Nightshade, tools that subtly alter images so they appear normal to humans but mislead AI training.

Launched in 2022, Zhao’s tools Glaze and Nightshade have over 3M downloads and are widely used by artists to protect their work from AI scraping and ransomware threats like LunaLock.

(SecurityAffairs – hacking, LunaLock ransomware)