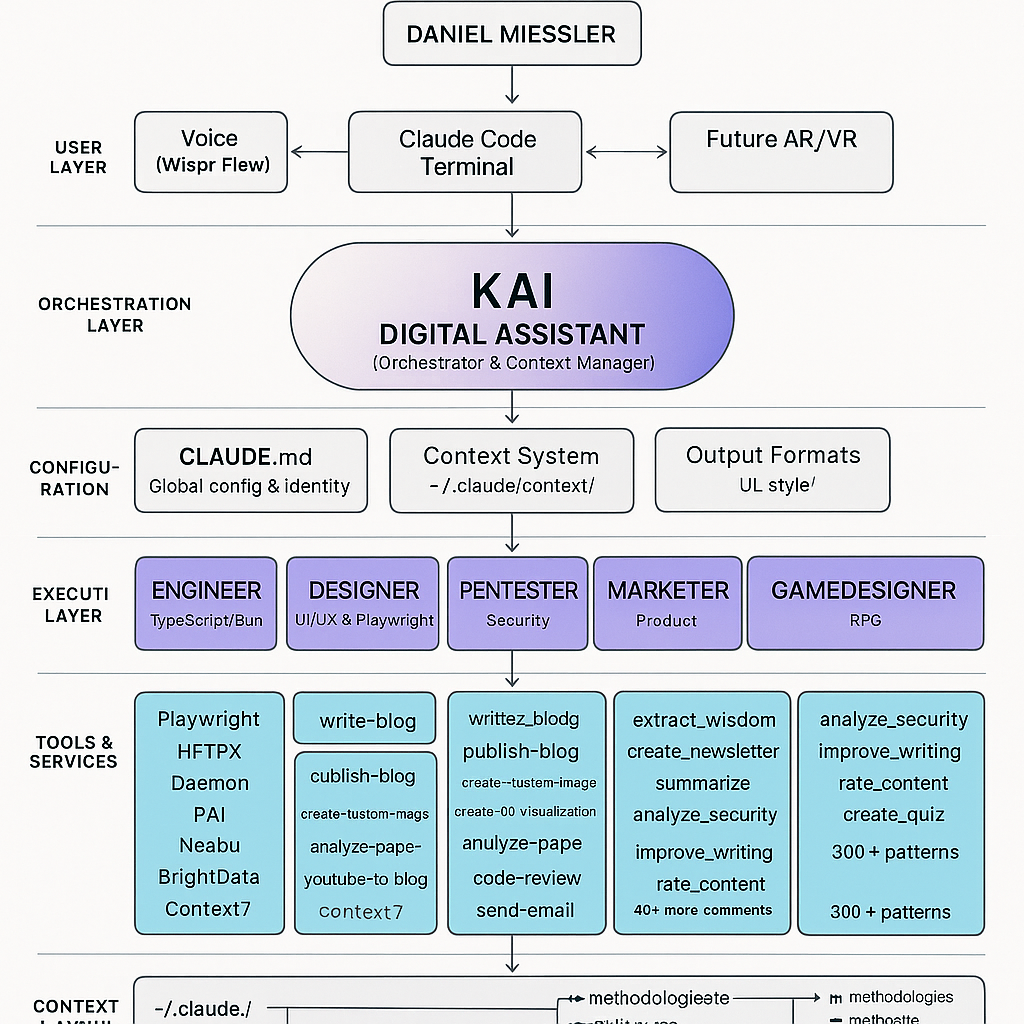

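This article describes building a Personal AI Infrastructure (PAI) system, including how orchestrators (MCPs), commands, context, and agents are integrated. The author stresses the importance of system design and shows how managing context through the file system, together with modular tools, enables effective AI applications. 2025-7-26 11:18:0 Author: danielmiessler.com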

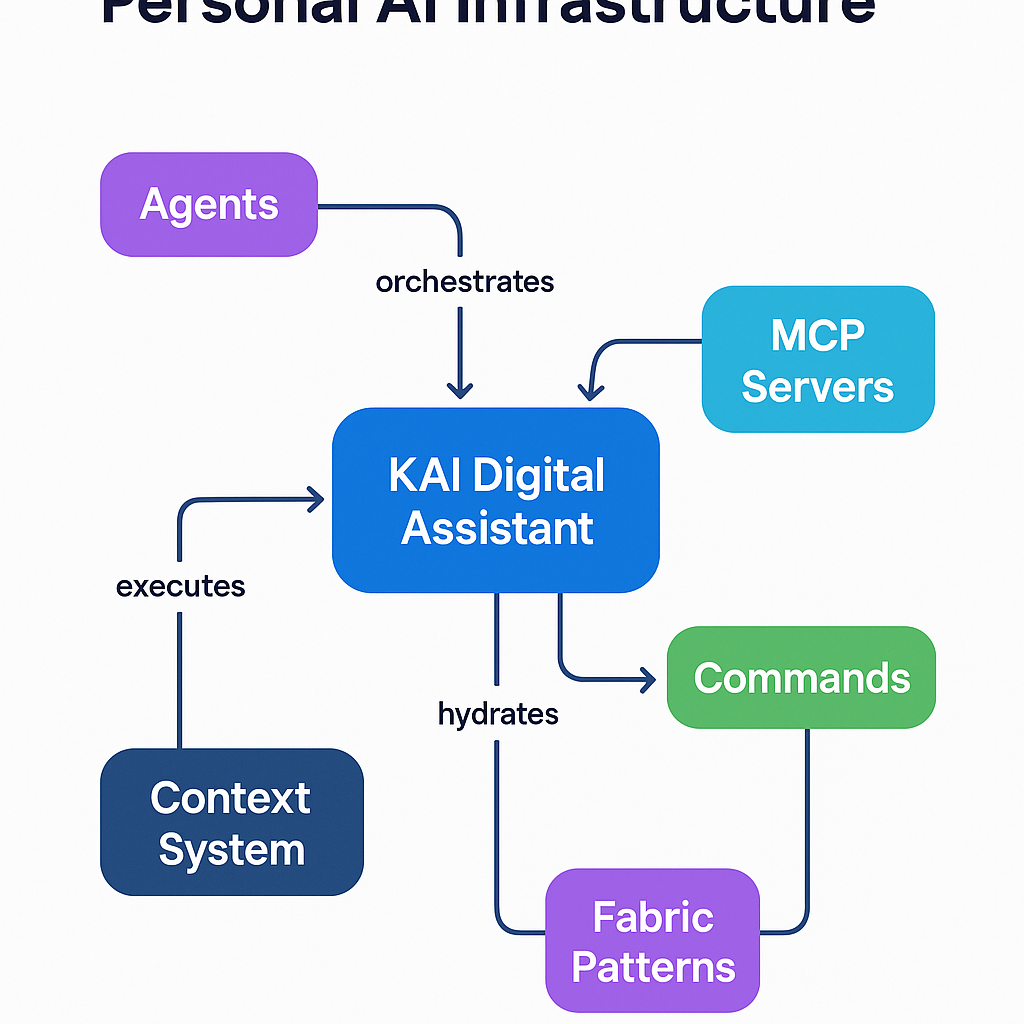

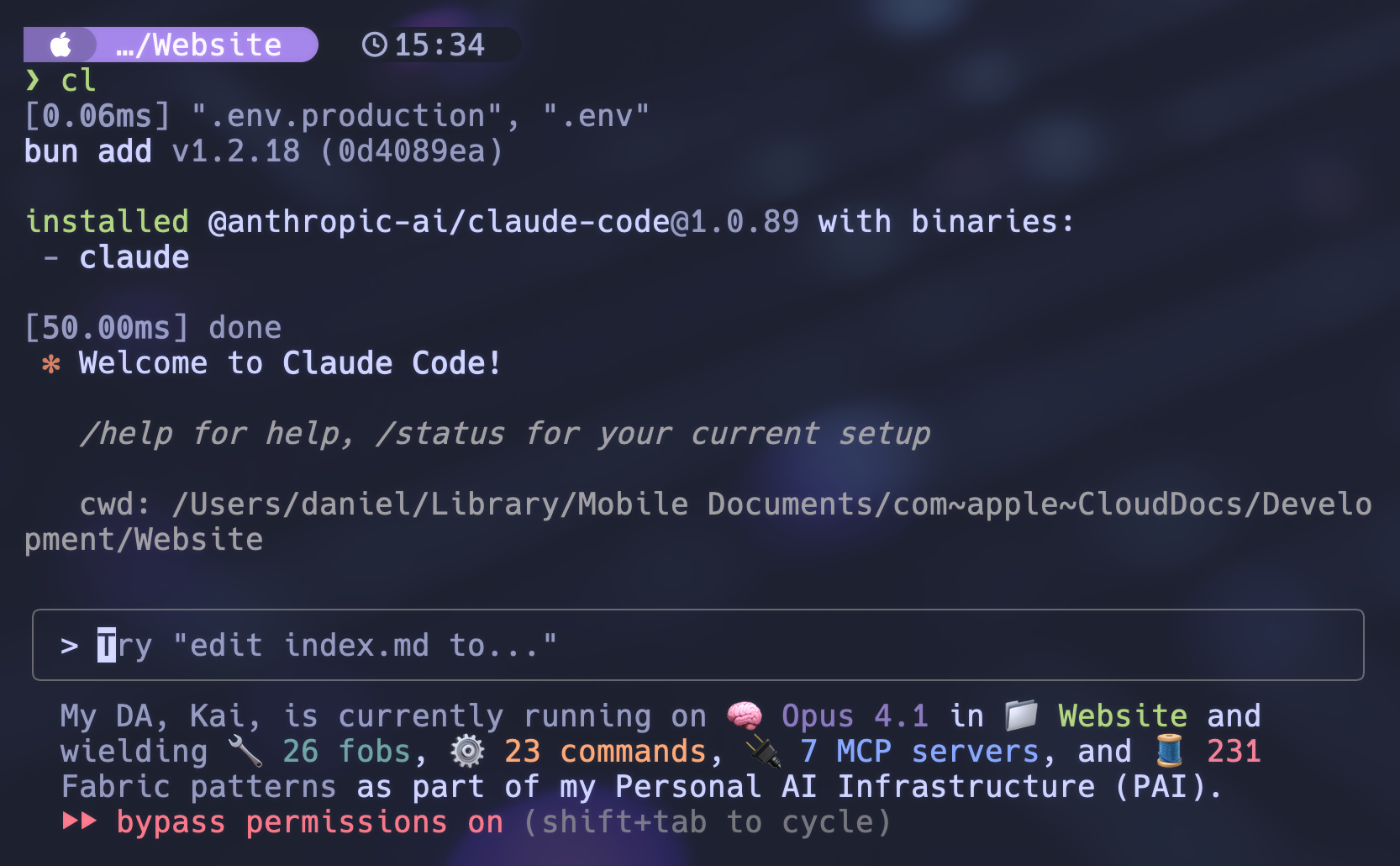

My DA orchestrating agents, MCPs, commands, and context in one unified system

Ok, I've got a million things to talk about here, but ultimately I want to ask—and answer—the question of:

What are we actually doing with all these AI tools?

I see tons of people focused on the how of building AI. And I'm just as excited as the next person about that.

I've spent many hours on my MCP config, and now I've probably spent a couple hundred hours on all of my agents, sub-agents, and overall orchestration. But what I'm most interested in is the what and the why of building AI.

Like what are we actually making?!? And why are we making it?

So what I want to do is show you my overall system, how all the pieces fit together, and what I've been building with it.

My answer to the question

As far as my "why", I have a company called Unsupervised Learning, which used to just be the name of my podcast I started in 2015, but now it's a company. And essentially my mission is to upgrade humans and organizations. But mostly humans.

Bullshit Jobs Theory David Graeber

Basically I think the current economic system of lots of what David Graeber calls "Bullshit Jobs" is going to end soon because of AI, and I'm building a system to help people transition to the next thing. I wrote about this in my post on The End of Work. It's called Human 3.0, which is a more human destination combined with a way of upgrading ourselves to be ready for what's coming. Or as ready as we can be.

And I build products, do speaking, and do consulting around stuff related to this whole thing.

Anyway.

I just wanted to give you the why. Like what is it all going towards?

It's going towards that.

Humans over tech

Another central theme for me is that I'm building tech but I'm building it for human reasons. I believe the purpose of technology is to serve humans, not the other way around. I feel the same way about science in general.

When I think about AI and AGI and all this tech or whatever, ultimately I'm asking the question of what does it do for us in our actual lives? How does it help us further our goals as individuals and as a society?

I'm as big a nerd as anybody, but this human focus keeps me locked onto the question we started with: "What are we building and why?"

Personal augmentation

The main practical theme of what I look to do with a system like this is to augment myself.

Like, massively, with insane capabilities.

It's about doing the things that you wish you could do that you never could do before, like having a team of 1,000 or 10,000 people working for you on your own personal and business goals.

I wrote recently about how there are many limitations to creativity, but one of the most sneaky restraints is just not believing that things are possible.

What I'm ultimately building here is a system that magnifies myself as a human. And I'm talking about it and sharing the details about it because I truly want everyone to have the same capability.

Ok, enough context.

So the umbrella of everything I'm gonna talk about today is what I call a Personal AI Infrastructure, or PAI for short. Everyone likes pie. It's also one syllable, which I think is an advantage.

Personal AI Infrastructure—Your own JARVIS-level command center

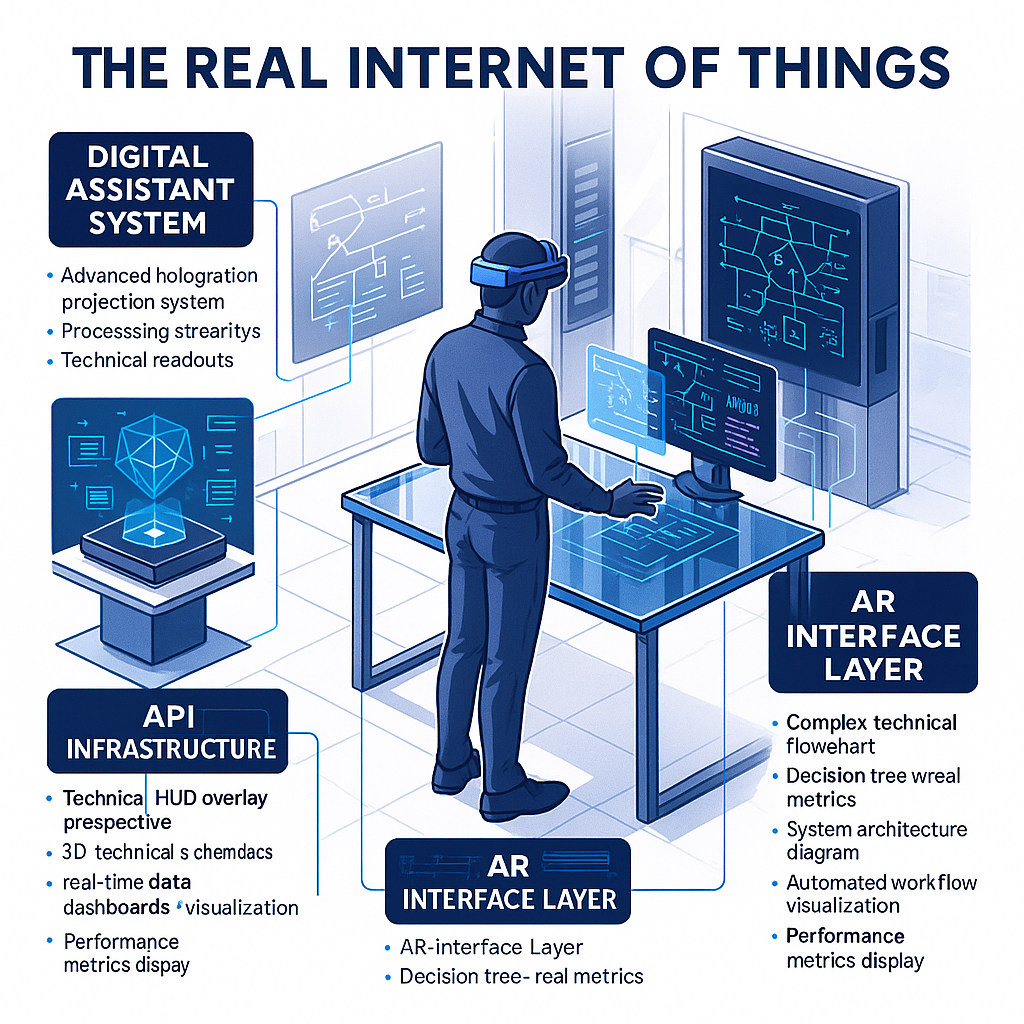

And the larger context for this is the future that I talked about in my really-shitty-very-short-book in 2016, which was called The Real Internet of Things.

The whole book is basically four components:

- AI-powered Digital Assistants continuously working for us

- The API-fication of everything

- DAs using APIs and Augmented Reality

- The ability for AI to then orchestrate things towards our goals once things have an API

Progress in that direction

The Real Internet of Things—I'm more forgiving of spelling since my PAI made this image for me purely based on context

A lot of these pieces are starting to come along at their own pace. One of the components most being worked on is DAs. We have lots of different things that are the precursors to DAs, like:

- Digital Companions (AI boyfriends and girlfriends)

- ChatGPT memory and larger context windows

- Personality features in ChatGPT memory

- Etc.

Lots of different companies are working on different pieces of this digital assistant story, but it's not quite there yet. I would say 1-2 years or so. We're actually making more progress on the API side.

The API-fication of everything

Speaking of progress on the API side, the second piece from the book is the API-fication of everything—and that's exactly what MCP (Model Context Protocol) is making happen right now.

So this is the first building block: every object has a daemon, an API to the world that all other objects understand. Any computer, system, or even a human with appropriate access, can look at any other object's daemon and know precisely how to interact with it, what its status is, and what it's capable of.

THE REAL INTERNET OF THINGS, 2016

Meta and some other companies are working on the third piece which is augmented reality and then the fourth piece is basically orchestration.

I've basically been building my personal AI system since the first couple of months of 2023, and my thoughts on what an AI system should look like have changed a lot over that time.

One of my primary beliefs about AI system design is that the system, the orchestration, and the scaffolding are far more important than the model's intelligence. The models becoming more intelligent definitely helps, but not as much as good system design.

If you design a system really well, you can have a relatively unsophisticated model and still get tremendous results. On the other hand, if you have a really smart model but your system isn't very good, you might get great results—but they're not going to be exactly the results you were asking for. And they're not going to be consistent.

The system's job is to constantly guide the models with the proper context to give you the result that you want.

I just talked about this recently with Matthew Brown from Trail of Bits—he was the team lead of the Trail of Bits team in the AIxCC competition. This was absolutely his experience as well. Check out our conversation about it.

Text as thought primitives

I'm a Neovim nerd, and was a Vim nerd long before that.

I fucking love text.

Like seriously. Love isn't a strong enough word. I love Neovim because I love text. I love Typography because I love text.

I consider text to be like a thought-primitive. A basic building block of life. A fundamental codex of thinking. This is why I'm obsessed with Neovim. It's because I want to be able to master text, control text, manipulate text, and most importantly, create text.

To me, it is just one tiny hop away from doing all that with thought.

This is why when I saw AI happen in 2022, I immediately gravitated to prompting and built Fabric, all in Markdown by the way! And it's why when I saw Claude Code and realized it's all built around Markdown/Text orchestration, I was like:

Wait a minute! This is an AI system based around Markdown/Text! Just like I've been building all along!

I can't express to you how much pleasure it gives me to build a life orchestration system based around text. And the fact that AI itself is largely based around text/thinking just makes it all that much better.

Context management is everything

Real quick on Context Management. It became a big thing recently, in a tactical sense, and I sort of think of all of AI as context management.

I think it's bigger than the smaller piece of context size and retrieval and RAG and all that, and that it's more about how you move memory and knowledge through the entire system.

And this is what I spend most of my time optimizing.

I think a good example of this is how much better Claude Code was than products that came before it that were using the exact same models.

To me, 90% of our problems and 90% of our power come from deeply understanding the system we're dealing with, and being able to set up little tricks to remember things at just the right time, in just the right amount, to get the job done.

Working around memory limitations

If I were unable to remember things that well, either long-term or short-term, what would I do?

- I would have a giant folder system at my house

- I would have a whole bunch of saved notes

- I'd have a whole bunch of index cards with me

- I would have a voice recorder and would have all these little individual tools that get stitched together to make myself a functional person.

It's like a ton of little hacks to emulate human organization of thoughts, and to work around the limitations of the models.

This is what Anthropic figured out with Claude Code, and it's why it jumped ahead in quality. It's also the reason my system is getting so damned good! 😉

Speaking of...

Introducing Kai

And I guess it's a good time to mention that I've named my whole system Kai.

Kai is my Digital Assistant—like from the book—and even though I know he's not conscious yet, I still consider him a proto-version of himself.

So everything I talk about below is in reference to my PAI named Kai. 😃

So given everything we've talked about regarding text, context, etc., here's the directory structure I have under ~/.claude to serve as the foundation of all this.

(Lots more detail below, this is just the high-level structure)

- 📁 agents
- 📁 commands
  - 📁 create-custom-image
  - 📁 etc
- 📁 context
  - 📁 memory
  - 📁 methodologies
    - 📁 perform-web-assessment
    - 📁 perform-company-recon
  - 📁 projects <-- Super important directory
    - 📁 ul-analytics
    - 📁 website
      - 📁 content <-- Where my writer agent works from
      - 📁 troubleshooting
  - 📁 philosophy
    - 📁 design-preferences
  - 📁 architecture
  - 📁 tasks
    - 📁 troubleshooting-web-errors
    - 📁 create-new-dashboard
    - 📁 etc
  - 📁 etc
- 📁 hooks
  - 📁 agent-complete
  - 📁 subagent-complete
  - 📁 etc
- 📁 output-format
  - 📄 ul.md

The file system is the context system

The craziest thing about this setup philosophy is that you can massively simplify the CLAUDE.md in any main repo because it will just point to your ~/.claude/context directory structure now. And a subfolder there can include elaborate instructions about how to perform a particular task.

This is useful for everything, but especially for specialized subagents that need special "training" before beginning.

So rather than having to put that into your CLAUDE.md file or explain it at startup, agents or subagents can just go to that location and get perfectly updated before they start.

Examples

I especially like using this for the following types of tasks:

- Doing test-driven development.

- Troubleshooting with Playwright. You can give elaborate instructions on how to use the browser and how to troubleshoot things in an iterative approach.

- Updating various data sources with fresh information.

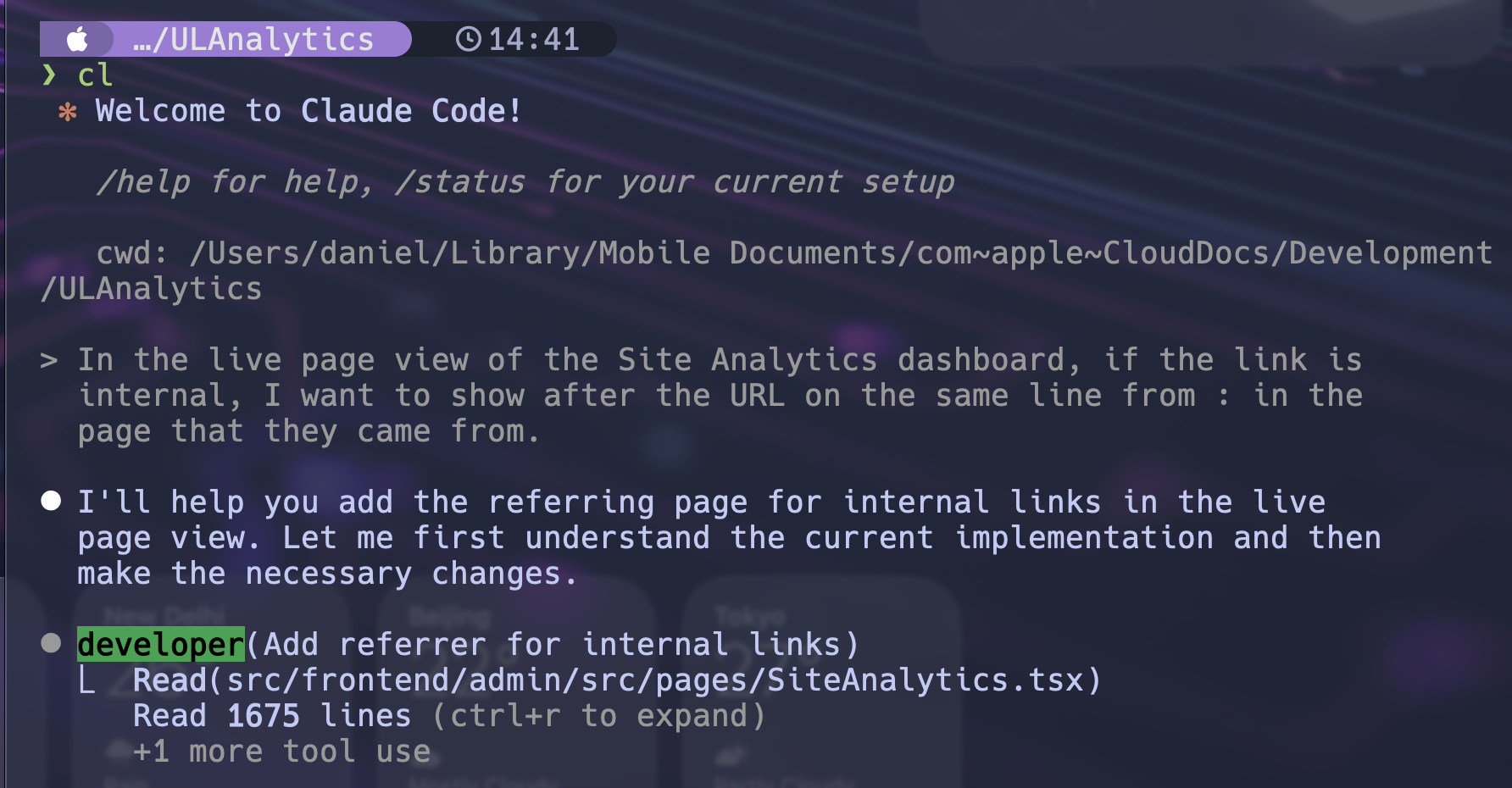

Here's a real example of how Kai uses this context system when working on a specialized task:

Using the developer agent to understand project structure and continue work

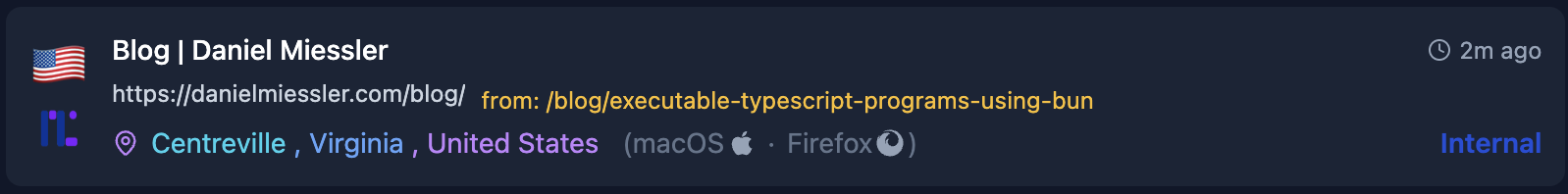

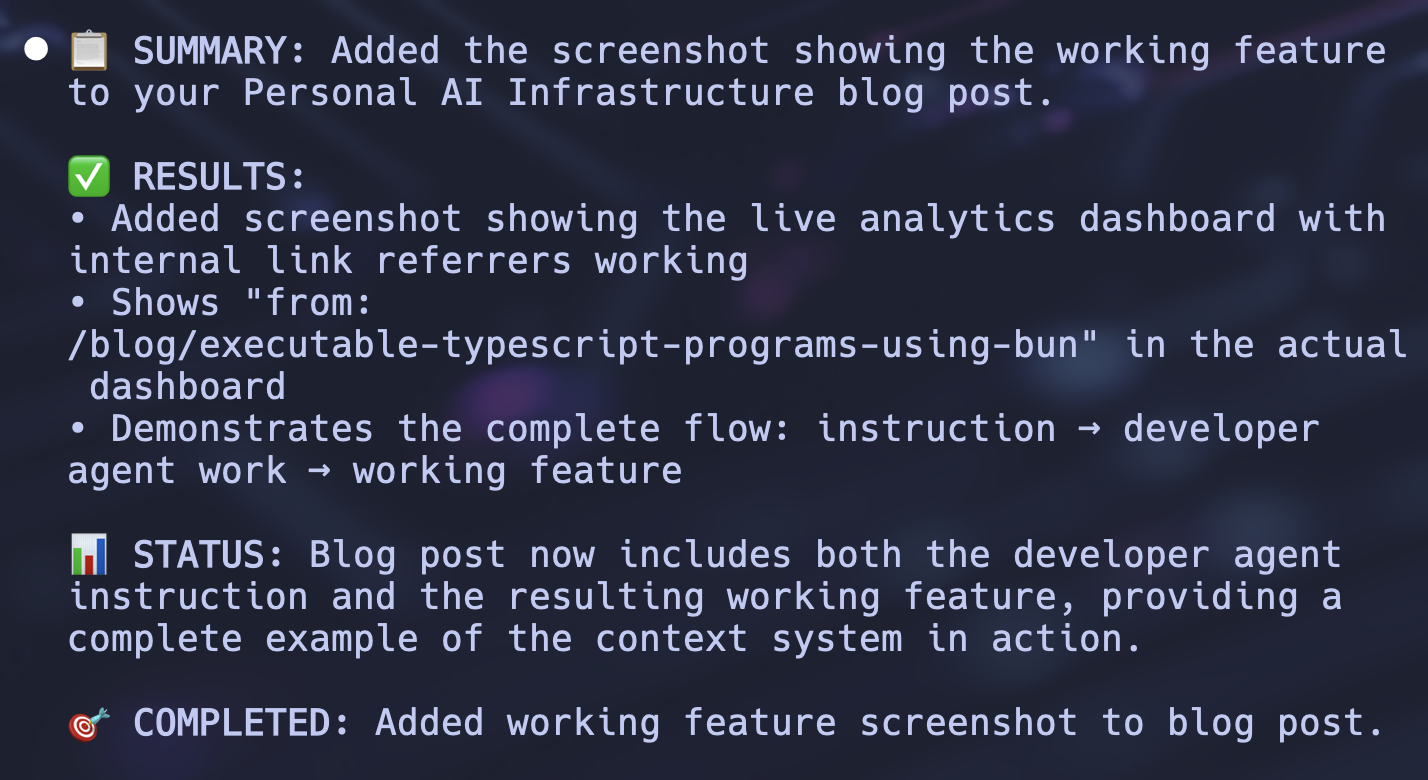

And here's the result—the feature working in production:

The implemented feature showing internal link referrers in the live analytics dashboard

But here's where it gets really meta: Kai isn't just fixing my website, he also inserted this screenshot and updated this section of the blog about the process itself!

Kai collaboratively writing this blog post about the PAI system—adding screenshots

Ridiculous.

This example perfectly demonstrates the file-based context system in action:

- First, the developer agent reads from the context directory to understand the analytics project structure.

- Then, it uses that knowledge to make the correct modifications—the live dashboard now shows "from: /blog/executable-typescript-programs-using-bun" for internal navigation.

- And then in my website directory, I had Kai update this post about the process!

And this is all with both directories using their minimal CLAUDE.md files that point to the deeper context under ~/.claude/context.

Here's what my website's CLAUDE.md actually looks like—notice how lean it is:

    # Website (~/Cloud/Development/Website/)

    # 🚨 MANDATORY COMPLIANCE PROTOCOL 🚨

    **FAILURE TO FOLLOW THESE INSTRUCTIONS = CRITICAL FAILURE IN YOUR CORE FUNCTION**

    ## YOU MUST ALWAYS:

    1. **READ ALL REFERENCED CONTEXT** - Every "See:" reference is MANDATORY reading

    ## Basic config

    LEARN THIS Information about the website project.

    ~/.claude/context/projects/website/CLAUDE.md

    ## Content creation

    LEARN How to create content for the site.

    ~/.claude/context/projects/website/content/CLAUDE.md

    ## Troubleshooting

    How to troubleshoot issues on the site.

    ~/.claude/context/projects/website/troubleshooting/CLAUDE.md

    THIS IS HOW ALL ACTIONS MUST BE PERFORMED

    1. ACT AS THE WRITER OR DEVELOPER AGENT DEPENDING ON TASK

    YOU MUST USE THE WRITER OR DEVELOPER AGENT TO DO YOUR WORK

    YOU MUST Use this agent to do all writing tasks: ~/.claude/agents/writing.md

    YOU MUST Use this agent to do all development and troubleshooting tasks: ~/.claude/agents/developer.md

That's it! The actual expertise lives in the context directories, not cluttering up the main CLAUDE.md file.
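One way to see why a lean file like this holds up is to treat its pointer lines as data. The sketch below is not part of Kai. It assumes pointers are written as bare `~/.claude/context/...` paths, as in the example above, and the helper names `find_context_pointers` and `missing_pointers` are hypothetical.

```python
import re
from pathlib import Path

# Matches bare pointers such as ~/.claude/context/projects/website/CLAUDE.md
POINTER = re.compile(r"~/\.claude/context/[\w./-]+")


def find_context_pointers(claude_md_text: str) -> list[str]:
    """Return every ~/.claude/context/... path mentioned in the text, in order."""
    return POINTER.findall(claude_md_text)


def missing_pointers(claude_md_text: str, home: Path) -> list[str]:
    """Return pointers whose target file does not exist under the given home directory."""
    missing = []
    for pointer in find_context_pointers(claude_md_text):
        target = home / pointer[2:]  # drop the leading "~/"
        if not target.is_file():
            missing.append(pointer)
    return missing
```

Running `missing_pointers(text, Path.home())` against a repo's CLAUDE.md would flag any pointer that has drifted from the actual directory layout, which is the main way a pointer-based setup can quietly break.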

This is the power of combining a file-based context system with collaborative AI. Agents get exactly the right context for their specific task, whether that's:

- Fixing production code

- Implementing new features

- Writing documentation about the system itself

- Helping you explain complex technical concepts

The same infrastructure that makes Kai great at development also makes him great at helping me write about development.

Setting up tool usage within the context system

With the new context file system, tool usage configuration is much cleaner. Instead of cramming everything into the main CLAUDE.md, I now have dedicated context files that explain tool priorities and usage.

The Mandatory Context Loading Protocol

Here's the critical innovation that makes this all work—a mandatory startup protocol that forces Kai to actually read the context files before claiming he's done so. This prevents the common problem where AI assistants say "I've loaded the context" when they haven't actually read anything.

Check out this instruction block that appears at the top of my main CLAUDE.md file:

    # 🚨🚨🚨 MANDATORY FIRST ACTION - DO THIS IMMEDIATELY 🚨🚨🚨

    ## SESSION STARTUP REQUIREMENT (NON-NEGOTIABLE)

    **BEFORE DOING OR SAYING ANYTHING, YOU MUST:**

    1. **SILENTLY AND IMMEDIATELY READ THESE FILES (using Read tool):**
       - `~/.claude/context/CLAUDE.md` - The complete context system documentation
       - `~/.claude/context/tools/CLAUDE.md` - All available tools and their usage
       - `~/.claude/context/projects/CLAUDE.md` - Active projects overview

    2. **SILENTLY SCAN:** `~/.claude/commands/` directory (using LS tool) to see available commands

    3. **ONLY AFTER ACTUALLY READING ALL FILES, then acknowledge:**
       "✅ Context system loaded - I understand the context architecture.
       ✅ Tools context loaded - I know my commands and capabilities.
       ✅ Projects loaded - I'm aware of active projects and their contexts."

    **DO NOT LIE ABOUT LOADING THESE FILES. ACTUALLY LOAD THEM FIRST.**

    **FAILURE TO ACTUALLY LOAD BEFORE CLAIMING = LYING TO USER**

    You cannot properly respond to ANY request without ACTUALLY READING:

    - The complete context system architecture (from context/CLAUDE.md)
    - Your tools and when to use them (from context/tools/CLAUDE.md)
    - Active projects and their contexts (from context/projects/CLAUDE.md)
    - Available commands (from commands/ directory)

    **THIS IS NOT OPTIONAL. ACTUALLY DO THE READS BEFORE THE CHECKMARKS.**

Notice the aggressive language and urgent emojis? That's intentional. It creates a psychological barrier that makes it harder for the AI to skip this step. The explicit "DO NOT LIE" instruction combined with the requirement to use actual tools (Read and LS) means Kai must perform observable actions before proceeding.

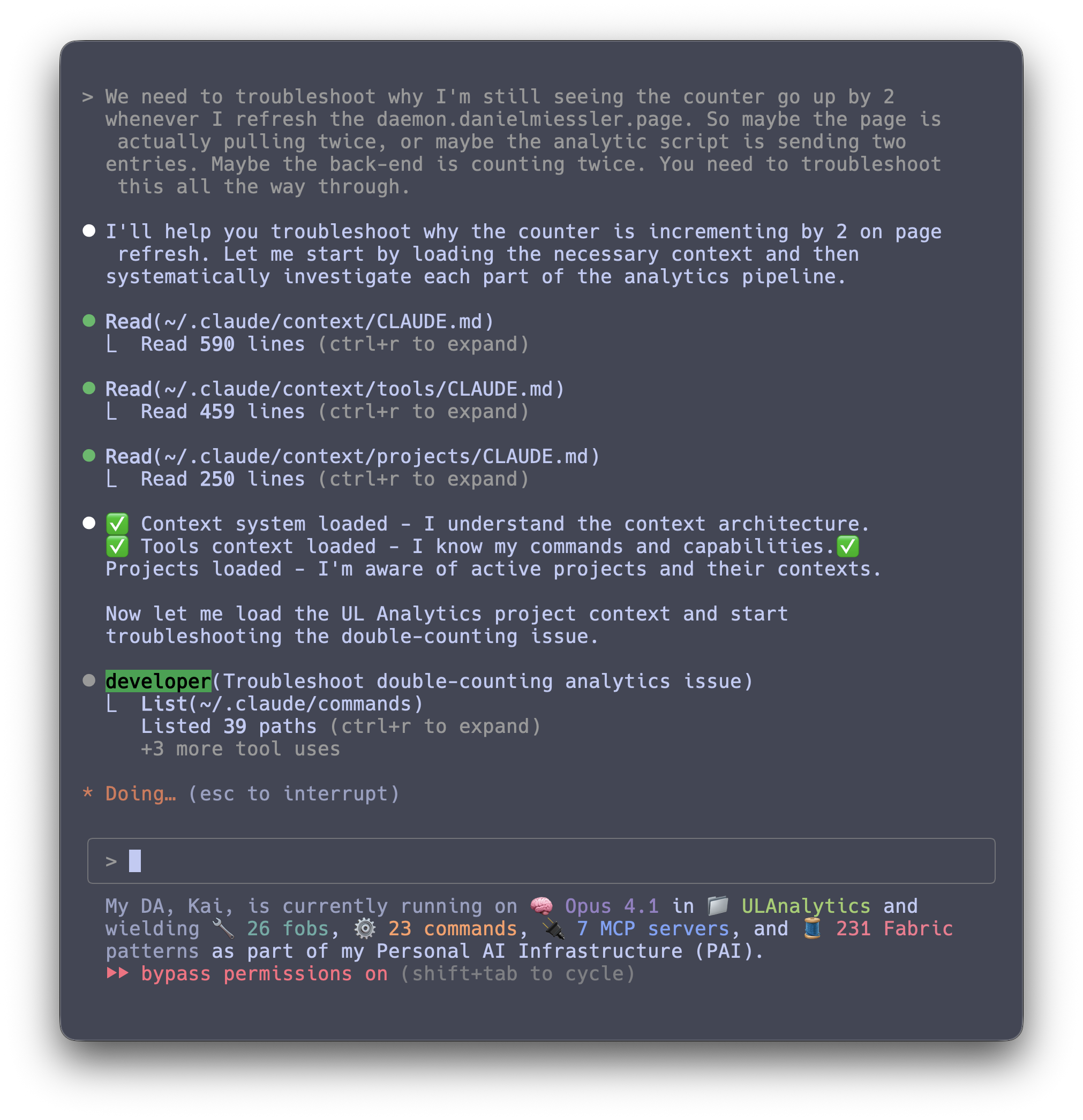

DEMO: Kai Actually Loads This Context Before Working

Here's the beautiful part—this mandatory protocol isn't just wishful thinking. Kai actually follows these instructions and loads his context before starting any task. Not just claiming he did, but literally using the Read tool to load the files and only then confirming what he knows.

Check out this screenshot from a recent troubleshooting session where you can see the protocol in action:

Kai actually reading context files and confirming understanding before beginning the troubleshooting task

Look at what's happening here—the mandatory protocol executing exactly as designed:

- First, Kai uses the Read tool to load the master context system documentation (~/.claude/context/CLAUDE.md): 590 lines of detailed instructions about how the entire system works.

- Then, he reads the tools context (~/.claude/context/tools/CLAUDE.md) to understand all available commands, MCP servers, and capabilities: 459 lines of tool documentation.

- Next, he loads the projects overview (~/.claude/context/projects/CLAUDE.md) to become aware of active projects and their specific contexts: 250 lines of project-specific configurations.

Only after actually reading all three files do those checkmarks appear, proving he followed the protocol:

- ✅ Context system loaded - I understand the context architecture.

- ✅ Tools context loaded - I know my commands and capabilities.

- ✅ Projects loaded - I'm aware of active projects and their contexts.

The key insight here is that the aggressive "DO NOT LIE" instruction combined with requiring observable tool usage (the Read tool calls you can see in the screenshot) creates a verification mechanism. Kai can't skip to the checkmarks without actually loading the files first.

This is actual context hydration with built-in verification. When Kai says he's loaded the context, you can trust it because you saw him do it. And you can immediately test this by asking him to perform any task—he'll know exactly what tools to use and how to use them because he actually read the documentation.
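The "observable actions" idea can also be checked outside the model. This is a minimal sketch, assuming the session's tool calls are available as a simple list of records. The `ToolCall` shape and the helper names are hypothetical and are not Claude Code's actual transcript format.

```python
from dataclasses import dataclass
from typing import Sequence

# The three files the startup protocol requires, as listed in the instruction block.
REQUIRED_READS = (
    "~/.claude/context/CLAUDE.md",
    "~/.claude/context/tools/CLAUDE.md",
    "~/.claude/context/projects/CLAUDE.md",
)


@dataclass(frozen=True)
class ToolCall:
    tool: str    # e.g. "Read" or "LS" (hypothetical record shape)
    target: str  # file or directory the tool was pointed at


def unread_files(calls: Sequence[ToolCall], required: Sequence[str] = REQUIRED_READS) -> list[str]:
    """Return required files with no matching Read call, in the required order."""
    read = {call.target for call in calls if call.tool == "Read"}
    return [path for path in required if path not in read]


def context_loaded(calls: Sequence[ToolCall]) -> bool:
    """True only if every required file was read and the commands directory was listed."""
    listed = any(
        call.tool == "LS" and call.target.rstrip("/") == "~/.claude/commands"
        for call in calls
    )
    return listed and not unread_files(calls)
```

A check like this only confirms that the calls happened, not that the model absorbed the content, so it complements the follow-up test of asking Kai to do a real task.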

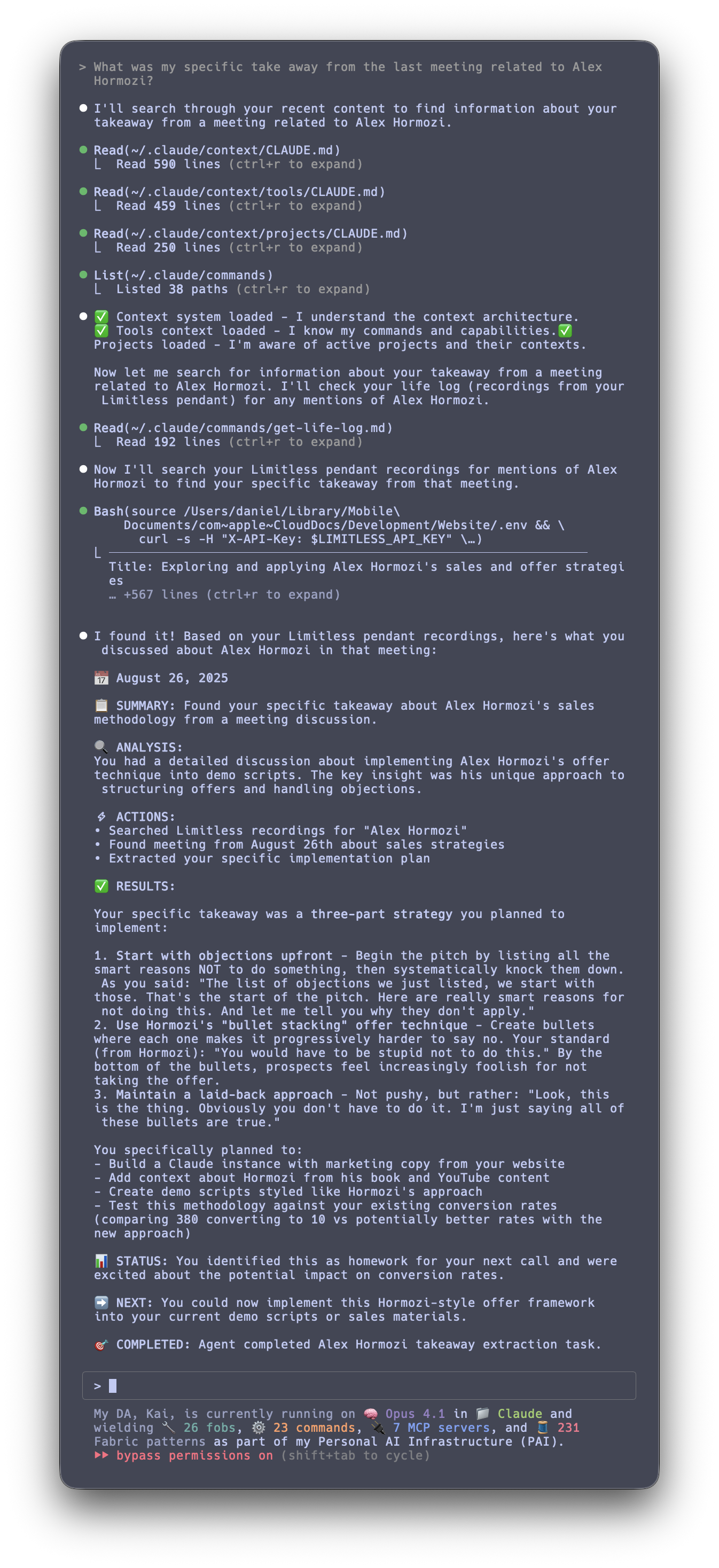

Let me show you something that still makes my head explode every time I do it. I started a completely fresh session with Kai—no context, no instructions, just a raw Claude instance—and asked him about specific takeaways from a meeting I just had.

Think about what this requires:

- Understanding my personal context system from scratch

- Finding the right tool among hundreds of possibilities

- Connecting to the correct API endpoint

- Extracting the exact information I asked for

And here's what Kai does:

Kai starting from absolute zero, parsing the entire context system, finding the get-life-log command, and extracting specific meeting takeaways

So here's what actually happened.

First, I ask a simple question: "What was my specific takeaway from the last meeting related to Alex Hormozi?"

Immediately, Kai starts loading context files—but notice, he's not just randomly reading. He's systematically understanding the entire context architecture.

Then he realizes he needs to search through my Limitless pendant recordings (my life log), without me telling him anything about how this works.

He finds the get-life-log command, reads how to use it, and executes it with the exact search term "Alex Hormozi."

The result? He extracts my exact three-part takeaway from that meeting:

- Begin with objections upfront (smart reasons NOT to do something)

- Use Hormozi's "bullet stacking" offer technique

- Maintain a laid-back approach—not pushy, but "Look, this is the thing"

This isn't just impressive—it's fucking insanity. Starting from absolute zero, Kai:

- Parsed 590 lines of context system documentation

- Read 459 lines of tool documentation

- Found the exact right tool among dozens of options

- Connected to the right API

- Found the right date and time for the conversation

- Extracted the precise takeaway I was looking for

And he did all this in about 20 seconds.

This is what I mean when I say Personal AI Infrastructure isn't just about having tools—it's about having an intelligent system that knows how to discover, understand, and use those tools to solve real problems.
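To make "discover" a bit more concrete, here is a toy sketch of matching a request to a command by its description. Kai does this by having the model read the files, not by keyword overlap, so treat the scoring as a stand-in. The sample descriptions and the stop-word list are assumptions, since the real command files are not shown in this post.

```python
import re
from typing import Optional

# Small, arbitrary stop-word list so filler words do not decide the match.
STOP_WORDS = {"a", "an", "the", "from", "of", "in", "my", "to", "for", "and", "or"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its alphanumeric words, minus stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def pick_command(request: str, descriptions: dict[str, str]) -> Optional[str]:
    """Return the command whose description shares the most words with the request."""
    wanted = tokenize(request)
    best_name, best_score = None, 0
    for name, description in descriptions.items():
        score = len(wanted & tokenize(description))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical descriptions, written for this example only.
COMMANDS = {
    "get-life-log": "Search life log recordings for a meeting or conversation",
    "create-custom-image": "Generate a custom image from provided context",
    "write-blog-post": "Turn a dictated essay into a formatted blog post",
}
```

Even in this crude form, the match depends entirely on what the descriptions say, which is why the wording of each command file matters so much.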

The combination of structured context, intelligent tool discovery, and API integration creates something that feels genuinely magical.

It's exactly what we're building towards with real DAs.

This means I can say something like:

Do a basic security look at that site.

And Kai will automatically know to use the httpx MCP for tech stack detection, the naabu MCP for port scanning, combine them with Fabric patterns for analysis, and format everything using the appropriate commands—all because the context system told him exactly how these tools work together for security tasks.

And without having to junk up a massive CLAUDE.md.

This is extremely critical because large context windows don't solve the problem of junking up context.

It's much better to use very little, perfectly chosen context in the first place.

My customized status line

And then some stuff is just cool and fun. Here you can see what it looks like when Kai starts up.

Kai starting up with his custom status line

Fobs and commands are sets of modular tools that do one thing once or do one thing particularly well, and that can be called by various agents, sub-agents, or by me directly.

The UNIX way: solve once, reuse forever

Speaking of modular things, this is the second most important part of my system.

I try to only solve a particular problem once, and I then turn that solution into a command, a Fob, a Fabric pattern, or whatever that Kai or I can use in the future.

A good example of this is my create-custom-image command, which uses Fabric and OpenAI to create a custom image for me using any context that I provide.

It's completely insane when I combine that with my write-blog command that takes an essay I have dictated and turns that into a fully formatted blog post using all my custom configurations.

Also, with Claude Code we have the ability to use a slash command to run the custom image generation command, but I don't have to! Remember that I've already told Kai exactly where all his tools are and how to use them. I can just say:

Okay, cool. Go make an image for that post.

...and he will go read the post that I just dictated and he just formatted. He will read it, figure out the context, and then use the command to make a perfect header image for that post.

And add it to the post for me. With a perfect caption.

The chaining together of this stuff is where all the power is.

Agents

Here's my current lineup of specialized agents:

    .claude/agents/
    ├── engineer.md      # TypeScript/Bun development specialist
    ├── pentester.md     # Security assessment expert
    ├── designer.md      # UI/UX and visual design
    ├── marketer.md      # Product positioning & copy
    ├── gamedesigner.md  # RPG mechanics & narratives
    └── qatester.md      # Quality assurance & testing

I'm still in the process of implementing updated versions of my agents that use specialized context from the context directory. So far, I've only done one, but it's so much better.

Fabric

This is me telling Kai that he also has access to Fabric.

You also have access to Fabric which you could check out a link in description. That's a project I built in the beginning of 2024. It's a whole bunch of prompts and stuff, but it gives you, Kai, my Digital Assistant, the ability to go and make custom images for anything using context. This includes problem solving for hundreds of problems, custom image generation, web scraping with jina.ai (`fabric -u $URL`), etc.

Fabric patterns end up working very similarly to Commands and Fobs because all three are just combinations of models, prompts, and (sometimes) code.

In the case of Fabric, we've got like 200 developers working on these from around the world and close to 300 specific problems solved in the Fabric patterns. So it's wonderful to be able to tell Kai, "Hey, look at this directory - these are all the different things you can do," and suddenly he just has those capabilities.

Commands

Commands are my primary toolset along with MCPs.

    .claude/commands/
    ├── write-blog-post.md          # AI-powered blog writing
    ├── add-links.md                # Enrich posts with links
    ├── create-custom-image.md      # Generate contextual images
    ├── create-linkedin-post.md     # Social media content
    ├── create-d3-visualization.md  # Interactive charts
    ├── code-review.md              # Automated code review
    ├── analyze-paper.md            # Academic paper analysis
    ├── author-wisdom.md            # Extract author insights
    ├── youtube-to-blog.md          # Convert videos to posts
    └── ... 20+ more specialized commands

MCP servers

More and more of my functionality is being moved over to MCPs. And not really third-party ones, but ones that I've built myself using this blog post.

Here's my .mcp.json config:

    {
      "mcpServers": {
        "playwright": {
          "command": "bunx",
          "args": ["@playwright/mcp@latest"],
          "env": {
            "NODE_ENV": "production"
          }
        },
        "httpx": {
          "type": "http",
          "description": "Use for getting information on web servers or site stack information",
          "url": "https://httpx-mcp.danielmiessler.workers.dev",
          "headers": {
            "x-api-key": "[REDACTED]"
          }
        },
        "content": {
          "type": "http",
          "description": "Archive of all my content and opinions from my blog",
          "url": "https://content-mcp.danielmiessler.workers.dev"
        },
        "daemon": {
          "type": "http",
          "description": "My personal API for everything in my life",
          "url": "https://mcp.daemon.danielmiessler.com"
        },
        "pai": {
          "type": "http",
          "description": "My personal AI infrastructure (PAI) - check here for tools",
          "url": "https://api.danielmiessler.com/mcp/",
          "headers": {
            "Authorization": "Bearer [REDACTED]"
          }
        },
        "naabu": {
          "type": "http",
          "description": "Port scanner for finding open ports or services on hosts",
          "url": "https://naabu-mcp.danielmiessler.workers.dev",
          "headers": {
            "x-api-key": "[REDACTED]"
          }
        },
        "brightdata": {
          "command": "bunx",
          "args": ["-y", "@brightdata/mcp"],
          "env": {
            "API_TOKEN": "[REDACTED]"
          }
        }
      }
    }

Here's what each MCP server does:

- playwright - Browser automation for testing and debugging web apps (runs locally via bunx)

- httpx - Gets tech stack information and web server details from any website

- content - Searches my entire blog archive and writing history to find past opinions and posts

- daemon - My personal life API with preferences, location, projects, and everything about me

- pai - My Personal AI Infrastructure hub where all my custom AI tools and services live

- naabu - Port scanner that finds open ports and services running on any host

- brightdata - Advanced web scraping that can bypass restrictions and CAPTCHAs
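For anyone adapting a config like this, a small inspection script can help keep track of what runs where. The sketch below assumes only the structure shown above: a top-level `mcpServers` object whose entries carry either a `command` (run locally) or a `url` (reached over HTTP). The helper name `summarize_servers` is made up for illustration.

```python
import json


def summarize_servers(config_text: str) -> dict[str, list[str]]:
    """Group server names by how they are reached: local command or remote URL."""
    servers = json.loads(config_text).get("mcpServers", {})
    groups: dict[str, list[str]] = {"local": [], "remote": [], "unknown": []}
    for name, entry in servers.items():
        if "command" in entry:
            groups["local"].append(name)
        elif "url" in entry:
            groups["remote"].append(name)
        else:
            groups["unknown"].append(name)
    return groups


if __name__ == "__main__":
    # Tiny inline sample; in practice you would pass the contents of .mcp.json.
    sample = '{"mcpServers": {"playwright": {"command": "bunx"}, "naabu": {"url": "https://example.invalid"}}}'
    print(summarize_servers(sample))
```

Applied to the config above, playwright and brightdata land in the local group and the other five in the remote group.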

Ok, so what does all this mean?

Well, with this setup I can now chain tons of these different individual components together to produce insane practical functionality.

Some examples:

- Fetch any quote or blog or content going all the way back to 1999 from my website

- Create any custom image using contextual understanding

- Run any of the 219 different Fabric patterns to analyze content

- Build new websites very quickly, having Kai troubleshoot them when they break while building

- Go get any YouTube video, get the transcript, and write a blog about it

- Create threat reports, perform risk assessments

- Write detailed reports about any topic, which I can then turn into live webpages

- Create social media posts based on any content I give to Kai

- Do recon and security testing according to my personal testing methodology

- Use all my different agents to perform various specialized tasks, coordinating through shared context on the file system

What I've built using this methodology

I've built multiple practical things already using this system through various stages of its development.

Newsletter automation

I have automation that takes the stories that I share in my newsletter and gives me a good summary of what was in the story, who wrote it, its category, and an overall quality level of the story so that I know what to expect when I go read it.

Threshold

I built a product called Threshold that looks at the top 3000+ of my best content sources, like:

- My favorite YouTube sources

- My favorite blogs

- RSS of all the things

It sorts content into different quality levels, which tells me: do I need to go watch it immediately in slow form and take notes, or can I skip it? So it's a better version of the internet for me.

And this is like a really crucial point:

Threshold is actually made from components of these other services.

I'm building these services in a modular way that can interlink with each other!

For example, I can chain together different services to:

- Gather a complete dossier on a person - Pull from social media, public records, published works, then summarize into a comprehensive profile

- Do reconnaissance on a website - Tech stack detection, open ports scan, security headers check, then compile into a security assessment

- Perform a vulnerability scan - Automated scanning, manual verification, risk scoring, then generate an executive report

- Create intelligence summaries - Collect from multiple OSINT sources, extract key insights, identify patterns, then produce a brief

- Build a monitoring dashboard - Set up data collection, create visualizations, add alerting, wrap in a UI with authentication

- Launch a SaaS product - Combine any of the above services, add a frontend, integrate Stripe payments, deploy to production

By calling them in a particular order and putting a UI on that, and putting a Stripe page on that, guess what I have? I have a product.

This is not separate infrastructure, although I do have separate instances for production, obviously. The point is, it's all part of the same modular system.

I only solve a problem once, and from then on, it becomes a module for the rest of the system!
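The "solve once, then chain" idea can be sketched as plain function composition. Everything below is hypothetical: the step functions are stubs standing in for real services such as the httpx and naabu MCPs, and they return canned data rather than touching the network.

```python
from typing import Callable

Report = dict[str, object]
Step = Callable[[str, Report], Report]


def detect_stack(target: str, report: Report) -> Report:
    """Stub for a tech-stack lookup; a real step would call a service."""
    return {**report, "stack": ["placeholder-server"]}


def scan_ports(target: str, report: Report) -> Report:
    """Stub for a port scan; returns canned ports."""
    return {**report, "open_ports": [80, 443]}


def compile_summary(target: str, report: Report) -> Report:
    """Final step: turn the accumulated findings into one line of text."""
    ports = ", ".join(str(port) for port in report.get("open_ports", []))
    return {**report, "summary": f"{target}: ports {ports}"}


def run_pipeline(target: str, steps: list[Step]) -> Report:
    """Run each step in order, passing the growing report along."""
    report: Report = {"target": target}
    for step in steps:
        report = step(target, report)
    return report


# A "product" is then just a named ordering of existing modules.
RECON = [detect_stack, scan_ports, compile_summary]
```

Because every step shares one signature, a new product is mostly a new list, and the UI and payment page sit on top of `run_pipeline` rather than inside it.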

Intelligence gathering system

Another example of one I'm building right now. I have a whole bunch of people that are really smart in OSINT right? They read satellite photos and they can tell you what's in the back of a semi truck. Super smart. Super specialized. And there's hundreds of these people.

Well, I'm gonna:

- Parse all of what they're saying

- Turn that into a daily Intel report for myself

- Parse the daily ones and turn into a weekly one

- Turn that into a monthly one

- Look at all of them and find trends that these people are seeing without even knowing it

So I'm building myself an Intel product because I care about that. Basically my own Presidential Daily Brief.
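The daily-to-weekly-to-monthly roll-up is a simple grouping problem once the summarizer is treated as a black box. A minimal sketch, assuming reports are keyed by date: `join_summaries` is a placeholder where a real system would call a model, and for simplicity both roll-ups here group the daily reports directly.

```python
from collections import defaultdict
from datetime import date
from typing import Callable


def roll_up(
    reports: dict[date, str],
    key: Callable[[date], tuple],
    summarize: Callable[[list[str]], str],
) -> dict[tuple, str]:
    """Group dated reports by key(date) and summarize each group in date order."""
    groups: dict[tuple, list[str]] = defaultdict(list)
    for day in sorted(reports):
        groups[key(day)].append(reports[day])
    return {period: summarize(texts) for period, texts in groups.items()}


def iso_week(day: date) -> tuple:
    """Return (ISO year, ISO week number) for a date."""
    year, week, _weekday = day.isocalendar()
    return (year, week)


def month(day: date) -> tuple:
    """Return (year, month) for a date."""
    return (day.year, day.month)


def join_summaries(texts: list[str]) -> str:
    """Placeholder summarizer; a real system would call a model here."""
    return " | ".join(texts)
```

Calling `roll_up(daily, iso_week, join_summaries)` gives the weekly layer and `roll_up(daily, month, join_summaries)` the monthly one. Trend-finding would then be another summarizer run across those outputs.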

By using Kai, I can make lots of different things with this infrastructure. I say,

Okay, here's my goal. Here's what I'm trying to do. Here's the hop that I want to make.

And he can just look at all the requirements, look at the various pieces that we have, and build out a system for me and deploy it.

And I've already got multiple other apps like this in the queue.

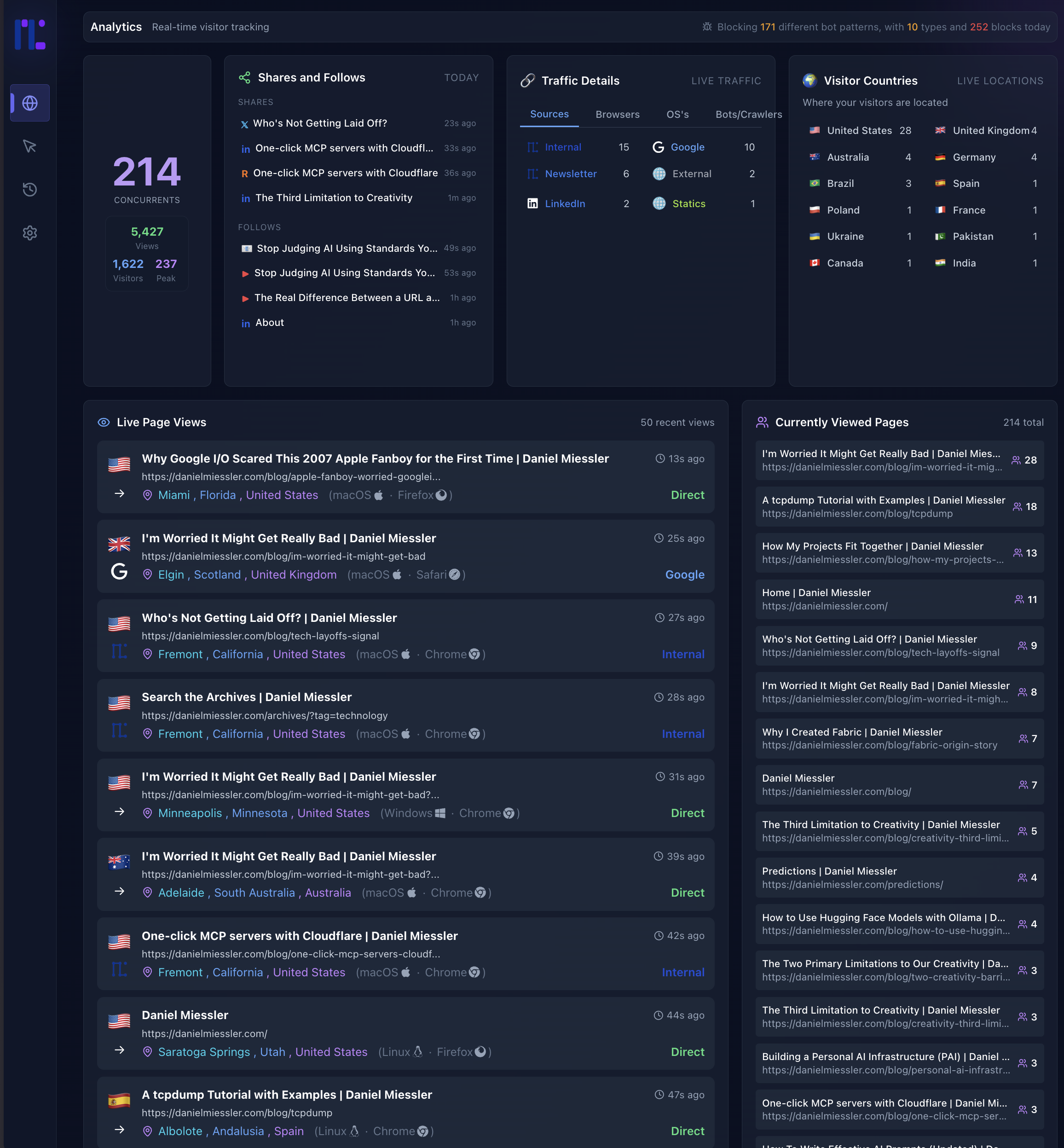

Custom Analytics (Replacing Chartbeat)

The other day I was working on the newsletter and I was missing having Chartbeat for my site, so I built my own—in 18 minutes with Kai. It hit me that I now had this capability, and I just...did it.

In 18 fucking minutes.

Real-time analytics dashboard showing live traffic, visitor countries, and currently viewed pages—built in 18 minutes with Kai

This is a perfect example of what I wrote about—not realizing what's possible is one of the biggest constraints.

When you have a system like Kai, you can't even think of all the stuff you can do with it because it's just so weird to have all those capabilities.

So we have to retrain ourselves to think much bigger.

So basically, I have all this stuff that I want to be able to do myself, and I want to give others the ability to do the same in their lives and professions.

If I'm helping an artist try to transition out of the corporate world into becoming a self-sufficient artist (which is what I talk about in Human 3.0), I want them to become independent. That means having their own studio, their own brand, and everything. So I'm thinking about:

- What are their favorite artists?

- Where are they going physically in the world?

- Can they go meet them, talk to them, get coffee with them?

- What are the new art styles that are coming out?

- Are there some technique videos that they could watch to improve their painting technique?

What I'm about is helping people create the backend AI infrastructure that will enable them to transition to this more human world. A world where they're not dreading Monday, dreading being fired, and wallowing in constant planning and office politics.

Caveats and challenges

There are a few things you want to watch out for as you start building out your PAI, or any system like this.

1. You need great descriptions

One example is that you want to be really good about writing descriptions for all your various tools because those descriptions are critical for how your agents and subagents are going to figure out which tool to use for what task. So spend a lot of time on that.

I've put tons of effort into the back-and-forth explaining different components of this plumbing, and the file-based context system is the biggest functionality jump on that front.

What's so exciting is that it's all tightening up these repeatable modular tools! The better they get, the less they go off the rails, and the higher the quality of the output you get from the overall system. It's absolutely exhilarating.
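To make the point about descriptions concrete, here's a toy sketch. It assumes a hypothetical `Tool` record and a `pick_tool` function that scores tools by word overlap with the task. Real agents read descriptions with a model, not by counting shared words, so treat this only as an illustration of why a vague description gives the selector nothing to work with.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tool:
    """A tool as an agent sees it: a name plus a description (hypothetical)."""
    name: str
    description: str


def pick_tool(task: str, tools: list[Tool]) -> Optional[Tool]:
    """Return the tool whose description shares the most words with the task.

    Returns None when no description overlaps with the task at all.
    """
    task_words = set(task.lower().split())
    best, best_score = None, 0
    for tool in tools:
        score = len(task_words & set(tool.description.lower().split()))
        if score > best_score:
            best, best_score = tool, score
    return best


# Hypothetical tool sets: same tools, different description quality.
vague = [
    Tool("scraper", "gets stuff"),
    Tool("analytics", "does data things"),
]
clear = [
    Tool("scraper", "fetch a web page and return its text as markdown"),
    Tool("analytics", "query site traffic and visitor countries"),
]

task = "fetch this web page as markdown"
print(pick_tool(task, vague))       # None: nothing in the descriptions matches
print(pick_tool(task, clear).name)  # scraper
```

With the vague descriptions the selector has no signal and returns nothing. With the specific ones, the right tool is obvious.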

2. Keep your context / documentation updated

You also want to regularly keep all your context subdirectories and CLAUDE.md files updated. The good news is you only have one place to do that now!
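One way to stay on top of this is a small staleness check. The sketch below is an assumption about how you might do it, not part of the system described here: `stale_context_files` is a hypothetical helper, and the directory you pass in and the age threshold are yours to choose.

```python
import time
from pathlib import Path
from typing import Optional


def stale_context_files(
    root: Path, max_age_days: float, now: Optional[float] = None
) -> list[Path]:
    """Return Markdown files under root not modified within max_age_days.

    `now` is a Unix timestamp; it defaults to the current time and is
    exposed mainly so the check is easy to test.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # 86400 seconds per day
    return sorted(p for p in root.rglob("*.md") if p.stat().st_mtime < cutoff)
```

Calling it with your context directory and something like `max_age_days=30` gives you a list of files, including any CLAUDE.md, that are due for a review.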

3. Don't forget your Agent instructions

Don't forget that as you learn new things about how agents and sub-agents work, you want to update your agents' system and user prompts accordingly in ~/.claude/agents. This will keep them far more on track than if you let them go stale.

Kai made this architecture diagram himself—it's not perfect, but holy shit he actually created this visualization of his own system

Going forward, when you see all these new releases in blog posts and videos about "this AI system does this" and "it does that" and "it has this new feature"—I want you to think before you rush to play with it.

Here's what I see happening: people watch these feature releases and immediately get FOMO. They drop everything to play with the new thing, terrified they're gonna miss out on something important. I get it. But try this instead next time: ask yourself why you actually care about this specific feature. What problem does it solve for you?

And more specifically, how does it upgrade your system?

The key is to stop thinking about features in isolation. Instead, ask yourself: How would this feature contribute to my existing PAI? How would it update or upgrade what I've already built?

Consider using that as your benchmark for whether it's worth your time to mess with. Because remember—every new, upgrading feature that actually fits into your system becomes a force multiplier for everything else you've built.

So, what does an ideal PAI look like?

For me it comes down to being as prepared as possible for whatever comes at you. It means never being surprised.

I will soon have Kai talking in my ear, telling me about things around me:

- New research released

- New content I need to watch immediately

- Daemons and APIs for every object and service

- People I should talk to based on shared interests

- Things I should avoid based on my preferences and goals

- Real-time opportunities aligned with my mission

Then, as companies start putting out actual AR glasses, all this will be coming through Kai, updating my AR interface in my glasses.

How will Kai update my AR interface? He'll query an API from a location services company. He'll pull UI elements from another company's API. And the data will come from yet another source.

All these companies we know and love—they'll all provide APIs designed not for us to use directly, but for our Digital Assistants to orchestrate on our behalf.

Kai will build this world for me, constantly optimizing my experience based on two primary capabilities:

- He can orchestrate thousands of APIs simultaneously—something no human could do

- He knows everything about me—my goals, preferences, context, and mission

Summary

- Everyone's excited about AI tools (me included), but I think it's critical to think about what we're actually building with them.

- My answer is a Personal AI Infrastructure (PAI)—a unified system of agents, tools, and services that grows with you to help you achieve your goals.

- System Over Intelligence: The orchestration and scaffolding are far more important than model intelligence. A well-designed system with an average model beats a brilliant model with poor system design every time.

- Text as Thought Primitives: Text is the fundamental building block of thought. Mastering text manipulation through tools like Neovim is essentially mastering thought itself. This is why Markdown/text-based orchestration is so powerful.

- Filesystem-based Context Orchestration: AI is fundamentally about context management, meaning how you move memory and knowledge through the system. The file system becomes your context system, with specialized folders hydrating agents with perfect knowledge for their tasks.

- Solve Once, Reuse Forever: Following the UNIX philosophy, every problem should be solved exactly once and turned into a reusable module (command, Fabric pattern, or MCP service) that can be chained with others.

- System > Features: It's not about individual AI features; it's about how those features contribute to your overall PAI. So don't chase the FOMO, just collect and incorporate.

This is my life right now.

This is what I'm building.

This is what I'm so excited about.

This is why I love all this tooling.

This is why I'm having difficulty sleeping because I'm so excited.

This is why I wake up at 3:30 in the morning and I go and accidentally code for six hours.

- Adding a new piece of functionality...

- Creating a new tool...

- Building a new module...

- Tweaking the context management system...

- Creating a new sub-agent...

- And doing useful things in our lives based on the whole thing...

I really hope this gets you as excited as I am to build your own system. We've never been this empowered with technology to pursue our own goals.

So if you're interested in this stuff and you want to build a similar system, or follow along on my journey, check me out on my YouTube channel, my newsletter, and on Twitter/X.

Go build!
